SQL Server 2012 AlwaysOn – What is it?

This is the first of several articles about the new SQL Server 2012 AlwaysOn functionality. In this part we go over the terms, the capabilities, and the differences from SQL Server Database Mirroring (DBM). In subsequent parts we will talk more about the details, the configuration possibilities, and how one can get from, for example, Database Mirroring to AlwaysOn.

The series is planned to be structured as follows:

- General introduction (this part)

- Infrastructure requirements for deploying the most significant new parts of AlwaysOn, with reference architectures for SAP

- Deploying the new functionality

- Operating and administering AlwaysOn

- Monitoring AlwaysOn

- Troubleshooting AlwaysOn

[Edit] You can find the summary of the complete series here.

So let's start with the first part, the general introduction.

What is AlwaysOn?

AlwaysOn is a collection of High-Availability and Disaster Recovery functionality with the goal of reducing Recovery Point Objective (RPO) and Recovery Time Objective (RTO) further below the already good times we could achieve with Database Mirroring. AlwaysOn offers methods and functionality which use either shared storage infrastructure in combination with Windows Server Failover Cluster (WSFC) or non-shared storage infrastructure to build highly available SQL Server configurations. On the shared storage side, AlwaysOn brings important extensions to the SQL Server Failover Cluster functionality. On the non-shared storage side, a new functionality stack was added which exceeds the capabilities of Database Mirroring and Log-Shipping. Log-Shipping and Database Mirroring in their original form are still part of the SQL Server 2012 HA/DR functionality. However, Database Mirroring can be completely replaced with the new functionality in AlwaysOn. Many configurations which required Log-Shipping so far can also be covered with the newly added functionality. Since the basics of Log-Shipping and Database Mirroring did not change, an upgrade to SQL Server 2012 does not force an immediate move to different HA/DR technologies. The changes to the traditional WSFC usage by SQL Server are compatible as well: after an upgrade to SQL Server 2012, existing configurations keep working without any changes.

What functionality got improved with AlwaysOn?

Over the years in which customers used Windows Clustering, Log-Shipping and Database Mirroring, a lot of feedback accumulated. Especially in the SAP space, Windows Clustering emerged as the minimal default for HA, and Log-Shipping became the default for the ever rising number of Disaster Recovery configurations. Feedback from this class of customers was that the storage in their local High-Availability configuration using WSFC still was a single point of failure. The fact that SQL Server could not be clustered across different subnets also kept recurring as feedback. Another complaint was that a failover was always executed on the whole instance.

Feedback on Log-Shipping was that the RPO could grow too large, which made the potential loss of committed transactions too risky.

The single point of failure problem with Windows Clustering was addressed by the introduction of Database Mirroring in SQL Server 2005. However, customer feedback on DBM looked like this:

- Request for more than one mirror

- Ability to read real-time from the mirrors

- Ability to execute backups from the mirror

- Include multiple user databases within one mirroring relationship

- Mixture of synchronous and asynchronous data replication between primary and multiple mirrors

- Extend scalability of the data replication

- Accelerate failover

- Allow multi-subnet failover and reconnects

All these points are addressed by AlwaysOn as delivered in SQL Server 2012. AlwaysOn comes with the following capabilities:

- Besides the primary, one can have up to 4 mirrors (as we will see later, the terminology changed a bit)

- Two of the mirrors can work with synchronous data replication, whereas the other two need to be asynchronous

- One can read from a synchronous or asynchronous mirror in real time

- One can include multiple user databases in a mirroring relationship (no system databases allowed)

- Larger design changes were made to increase scalability and to improve failover time

- Multi-Subnet support was added for the SQL Server Failover Cluster functionality

- The failover unit can be a group of multiple databases. This is unlike a complete SQL Server instance leveraging Windows Server Failover Cluster (WSFC), where the failover unit is the whole instance, and unlike Database Mirroring, where the failover unit is a single database.

- New Health Detection for SQL Server which is used for shared disk as well as non-shared disk deployments
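
To make the limits in the list above a bit more tangible, here is a minimal Python sketch which checks a planned group of databases and mirrors against them. The function name `check_group`, the constants and the way the layout is passed in are our own illustration and not part of any SQL Server API. The sketch only encodes the numbers stated above (four mirrors, two of them synchronous, no system databases).

```python
# Limits as stated in the capability list above (illustrative constants).
SYSTEM_DATABASES = {"master", "model", "msdb", "tempdb"}
MAX_SECONDARIES = 4
MAX_SYNCHRONOUS_SECONDARIES = 2


def check_group(databases, secondaries):
    """Return a list of problems; an empty list means the layout fits the limits.

    `databases` is a list of database names.
    `secondaries` is a list of booleans, True meaning synchronous replication.
    """
    problems = []
    if len(secondaries) > MAX_SECONDARIES:
        problems.append("more than 4 secondaries")
    if sum(secondaries) > MAX_SYNCHRONOUS_SECONDARIES:
        problems.append("more than 2 synchronous secondaries")
    system = sorted(SYSTEM_DATABASES & {name.lower() for name in databases})
    if system:
        problems.append("system databases not allowed: " + ", ".join(system))
    return problems


# Hypothetical layouts: one that fits and one that violates two limits.
ok_layout = check_group(["ERP"], [True, True, False, False])
bad_layout = check_group(["ERP", "msdb"], [True, True, True])
```

A check like this could run before a deployment script is generated, so that a layout which cannot work is rejected early.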

Before we go into the details of all this new functionality, we need to go through the terms and the principal concepts behind AlwaysOn.

Terminology

Let’s go through some of the terms:

| Term | Definition |
| --- | --- |
| Primary | The active instance which handles the primary workload. In DBM this was called the Principal. |
| Secondary | The instances which receive the transaction log records. In DBM only one such instance could exist, and it was called the mirror. Now there can be multiple secondaries. |
| Availability Group | The logical frame around one or more databases; it represents the unit of failover. One or multiple databases can be grouped within an Availability Group (AG). It is the AG that is assigned to secondary instances, not the single databases as in DBM. Administration and monitoring are mostly done on the basis of an AG. AGs have names assigned to them. One can also assign a virtual name to an AG (see Availability Group Listener), which is used to connect to the configuration behind the AG. One SQL Server instance can support multiple AGs. Since the AG is the unit of failover, 1 to n databases can fail over together. However, the transmission of the transaction log records of the different databases is not ordered or coordinated. Hence it is not guaranteed that the databases are transactionally consistent with each other after a failover. |
| Availability Replica | An Availability Replica (AR) is an instantiation of an Availability Group that is hosted by a specific instance of SQL Server and maintains a local copy of each availability database that belongs to the Availability Group. Each AG supports one Primary Replica and up to four Secondary Replicas. |
| Availability Database | One of the databases in an Availability Group. Each Availability Database is present as a copy on each Availability Replica of the Availability Group. A specific database can be part of only one Availability Group. |
| Availability Group Listener | Virtual name which gets assigned to an AG. The Listener either obtains a TCP/IP address via DHCP, or one can assign static IP addresses to it. Multiple IP addresses are possible because the ARs (Availability Replicas) can be on SQL Server instances which run in different subnets. The Listener is used for connection redirection in case of a failover and for the read-only functionality. This means it is transparent to the application which of the replicas is in the primary role, as long as all the ARs are covered by the Listener. |
| Availability Mode | Defines whether the replication of the transaction log records between replicas is performed synchronously or asynchronously. Two modes exist: 'Synchronous Commit' and 'Asynchronous Commit'. The Availability Mode is set per replica and applies to the whole AG, and with that to all databases within the AG. |
| Failover Mode | Describes whether automatic or manual failover from the primary to a secondary replica is configured. The Failover Modes 'Automatic' and 'Manual' exist. As with the Availability Mode, the Failover Mode is applied to an AG and with that to every single database within the AG. |
| Log Cache | A new cache which was introduced for the AlwaysOn functionality and for recovery. The Log Cache caches the log record packages as they are sent from the primary to the secondary. The secondary keeps the received log packages in the Log Cache for a longer time. Even if the secondary lags a bit behind in recovery, it is now more than likely that the data to recover does not need to be read from disk but can be read from the Log Cache. The cache is also used during crash recovery, where the data read from the log in a first pass is likely kept in the cache and does not need to be read from disk again for the roll forward phase. This cache is also used by replication, rollback and redo. |
| SQL Server FCI | SQL Server Failover Cluster Instance. An instance of SQL Server which is installed on shared storage and uses the traditional WSFC cluster failover. The failover granularity is always the complete SQL Server instance. |

Focusing on the non-shared storage part of AlwaysOn, the terminology already indicates that the functionality delivered by Database Mirroring so far was significantly extended. As in DBM, the moment transaction log records are persisted on the instance running as primary, the very same log records are sent to the other replicas over the TCP/IP network connection. One can configure whether the replication between the primary and a secondary is done synchronously, in which case the primary waits, as in DBM, for the acknowledgment of that particular secondary, or asynchronously, in which case the primary does not wait for acknowledgments.

However, since we can now have multiple replicas which need to synchronize with the primary, one general setting to define the Availability Mode, as we had it with Database Mirroring, does not work anymore. Hence the Availability Mode now needs to be set on each of the replicas, even on the replica which is in the primary role. The resulting Availability Mode between the primary replica and each of the secondary replicas is determined according to this table:

| Primary Setting | Secondary Setting | Resulting Setting |
| --- | --- | --- |
| Synchronous | Synchronous | Synchronous |
| Synchronous | Asynchronous | Asynchronous |
| Asynchronous | Synchronous | Asynchronous |
| Asynchronous | Asynchronous | Asynchronous |

This means both replicas (the primary and the respective secondary replica) need to have synchronous mode set for synchronous replication to work between them. A similar picture emerges with regard to the failover modes. In a given AG configuration, there can be only ONE secondary to which one can fail over automatically. In such a case the primary as well as that secondary need to have the Failover Mode set to ‘Automatic’ in order to fail over and fail back as expected.
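
The rule behind the four combinations of primary and secondary setting is simply "synchronous only if both sides say synchronous". As a minimal sketch in Python (the names `Mode` and `resulting_mode` are ours, chosen for illustration only):

```python
from enum import Enum


class Mode(Enum):
    SYNCHRONOUS = "Synchronous"
    ASYNCHRONOUS = "Asynchronous"


def resulting_mode(primary: Mode, secondary: Mode) -> Mode:
    """Replication is synchronous only if both replicas are set to synchronous."""
    if primary is Mode.SYNCHRONOUS and secondary is Mode.SYNCHRONOUS:
        return Mode.SYNCHRONOUS
    return Mode.ASYNCHRONOUS


# Print all four combinations of primary and secondary setting.
for p in Mode:
    for s in Mode:
        print(f"{p.value:<13} {s.value:<13} -> {resulting_mode(p, s).value}")
```

The practical consequence is that the setting of the replica currently in the primary role matters too. After a role change, the resulting mode towards each secondary is evaluated against the setting of the new primary.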

Readable Secondary

Despite the fact that SAP NetWeaver instances are not able to work against a read-only database, we see a lot of potential in this functionality for SAP customers. There is hardly a customer who does not have data extraction or administrative tasks which could be done on a readable secondary. The readable secondary functionality implemented in AlwaysOn allows reading in real time from a secondary. Real time means that as data gets changed on the primary, the changes are transmitted to the secondary and redone there, after which the changed data becomes visible on the secondary. Unlike DBM, where one had to create a Database Snapshot on the mirror in order to read from it, with AlwaysOn one always reads the actual state at query start. One can read from either a synchronous or an asynchronous secondary replica. When creating an AG and adding Availability Replicas, the default is that the secondary replicas are not enabled for reading; this capability needs to be enabled in an extra step. The difficulty in implementing a readable secondary is that the thread redoing the data changes, as they were executed on the primary, must in no way be blocked by queries executed against the very same data. To achieve that, all isolation levels used by applications reading from the secondary are mapped to snapshot isolation, even the read uncommitted isolation level.

Snapshot isolation is enabled at the moment the secondary is set to be readable. However, for the secondary to become accessible, all open transactions which were initiated on the primary and transferred to the secondary need to have been opened with readability on the secondary already enabled. In other words, transactions which were opened before readability was enabled have to finish first. That means if there is a transaction on the primary running for hours, the ability to read from the secondary might be delayed for quite a while. We will discuss this specific case in more detail in a later article.

At first glance, the readable secondary could be seen as a way to create scale-out readable images of a database, where modifications are done on the primary and reads by the same application are done on the secondary replicas. This, however, will work in special cases only. Traditional applications which change and read data implicitly assume that data can be read immediately, microseconds after it has been committed. As in synchronous DBM, a commit is acknowledged once the log buffer has been persisted on the primary and on the synchronous secondary replicas. So the moment the commit is acknowledged to the application, the transaction log data is persisted on the primary and the synchronous secondary replicas. But this does not necessarily mean that the changes have already been applied to the data pages on the secondary replicas. For various reasons that can take another few milliseconds or seconds. Hence the application's implicit assumption that committed data can be read microseconds later is not guaranteed to hold. Therefore scaling out the read workload of an application which at the same time applies changes to the primary replica might not necessarily work. Building scale-out read-only replicas for a purely read-only application, however, might work perfectly.

One needs to keep in mind, though, that how current the data on the different secondaries is does not necessarily depend on whether the replication of the changes is done synchronously or asynchronously. It depends on when the redo is done. Even with the snapshot isolation level which we use on a secondary, queries acquire schema stability locks which prevent the table schema from being changed underneath them. Hence the redo of a DDL operation on a secondary can be blocked by queries steadily accessing the table. As a result the complete redo can get delayed on a secondary.
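
The gap between "log persisted on the secondary" and "change visible on the secondary" can be illustrated with a toy model. The following Python sketch is purely conceptual. The class `Secondary` and its methods are made up for this article and have nothing to do with how SQL Server is implemented internally. It only shows why a read right after an acknowledged commit can still return the old state.

```python
from dataclasses import dataclass, field


@dataclass
class Secondary:
    log: list = field(default_factory=list)    # hardened log records as (key, value)
    pages: dict = field(default_factory=dict)  # data pages visible to readers
    redone: int = 0                            # number of log records already applied

    def harden(self, record):
        """Persist a log record. A synchronous commit waits only for this."""
        self.log.append(record)

    def redo(self):
        """Apply all hardened but not yet redone records to the data pages."""
        for key, value in self.log[self.redone:]:
            self.pages[key] = value
        self.redone = len(self.log)

    def read(self, key):
        """Readers see only what redo has already applied."""
        return self.pages.get(key)


secondary = Secondary()
secondary.harden(("order-1", "shipped"))    # commit gets acknowledged at this point
before_redo = secondary.read("order-1")     # redo has not run yet
secondary.redo()
after_redo = secondary.read("order-1")      # now the change is visible
print(before_redo, after_redo)
```

In this model `before_redo` is still empty although the commit was already acknowledged, which is exactly the situation an application that writes to the primary and immediately reads from a secondary can run into.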

The fact that snapshot isolation is used on the secondary might also require paying more attention to tempdb than the primary workload alone would demand, since that workload might use neither the Read Committed Snapshot Isolation level nor the Snapshot Isolation level. Since an AR can be in the secondary role or in the primary role, one needs to find a tempdb configuration for all the replicas which can sustain the additional load that Snapshot Isolation applies on a readable secondary replica.

Since the workload on a readable secondary is often completely different from the workload on the primary, additional column statistics might be necessary to get to optimal query plans. SQL Server 2012 will create those additionally required column statistics and will store them in tempdb of the SQL Server instance running the readable secondary replica. Unfortunately, the same is not true for additional indexes. If the read-only workload executed against a secondary replica requires additional indexes, those need to be created on the primary replica in order to get transferred subsequently to the secondary replicas.

Connecting to a readable secondary can be done by connecting directly to the SQL Server instance which runs a readable secondary replica or by connecting to an AG with the AG Listener name. In the latter case, the AG decides to which of the readable secondary replicas the connection gets assigned. We’ll go into details in some other posts.
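
As a rough illustration of the client side, here is a small Python helper which assembles an ODBC connection string for a connection through the Listener. The helper `build_connection_string` is our own, and the driver name, port, listener name and database name are assumptions for this example. `ApplicationIntent=ReadOnly` and `MultiSubnetFailover=Yes` are connection string keywords of the Microsoft ODBC drivers for SQL Server. The routing to a readable secondary additionally has to be set up on the server side, which is not shown here.

```python
def build_connection_string(listener: str, database: str, read_only: bool = False) -> str:
    """Assemble an ODBC connection string which targets an AG Listener."""
    parts = [
        "Driver={ODBC Driver 17 for SQL Server}",  # assumed driver name
        f"Server=tcp:{listener},1433",             # assumed default port
        f"Database={database}",
        "Trusted_Connection=yes",
        "MultiSubnetFailover=Yes",                 # helps when replicas sit in different subnets
    ]
    if read_only:
        # Declares that this connection only reads.
        parts.append("ApplicationIntent=ReadOnly")
    return ";".join(parts) + ";"


# Hypothetical listener and database names.
reporting = build_connection_string("ag-listener.example.com", "ERP", read_only=True)
regular = build_connection_string("ag-listener.example.com", "ERP")
print(reporting)
```

The resulting string could be handed to an ODBC based client library. We deliberately stop at building the string so that the sketch does not depend on a running SQL Server.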

Backup from Secondary

Databases within an AG can be backed up from any one of the secondary replicas. The types of backups supported are full database backups in copy_only mode and transaction log backups. Differential backups can only be done on the instance which is in the primary role. Because full backups are taken in copy_only mode, they do not reset the differential bitmap which is used to execute differential backups. This means that for the primary replica it looks as if the full backup on the secondary never happened. Please note that Tlog backups on secondary replicas are different: they actually truncate the committed transactions out of the transaction log. All the Tlog backups taken on one or more of the different replicas form one unified Tlog backup chain. Full database or transaction log backups can be executed at any time on each of the replicas. This feature does not require putting the secondary replica into read-only mode. However, the backup history is kept in the msdb database of the replica on which the backup was executed. This means the backup history and chain cannot be retrieved from one instance only.

How to configure this feature and how to actually set up backups taken from secondary replicas will fill another complete article, which will be part of this series.
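
Since the history of the log backups is spread over the msdb databases of several replicas, one has to merge it to see the complete chain. The following Python sketch shows the idea with made-up records. The type `LogBackup`, the function `unified_log_chain` and the LSN values are purely illustrative. In a real environment the data would have to be collected from each replica first. The sketch relies on the fact that in an unbroken chain each log backup starts at the LSN where the previous one ended.

```python
from typing import NamedTuple


class LogBackup(NamedTuple):
    replica: str    # replica on which the backup was taken
    first_lsn: int  # simplified LSN values for illustration
    last_lsn: int


def unified_log_chain(*histories):
    """Merge per-replica backup histories into one chain ordered by first_lsn."""
    chain = sorted(
        (backup for history in histories for backup in history),
        key=lambda backup: backup.first_lsn,
    )
    # In an unbroken chain each backup starts where the previous one ended.
    for previous, current in zip(chain, chain[1:]):
        if current.first_lsn != previous.last_lsn:
            raise ValueError(f"gap in log chain between {previous} and {current}")
    return chain


# Hypothetical histories as they might be found on two different replicas.
replica_a = [LogBackup("A", 100, 200), LogBackup("A", 300, 400)]
replica_b = [LogBackup("B", 200, 300)]
chain = unified_log_chain(replica_a, replica_b)
print([backup.replica for backup in chain])
```

Neither of the two histories is complete on its own here. Only the merged view shows that the chain is unbroken.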

Impact of the new Log Cache

In SAP OSS note #1550337 we described an issue where Database Mirroring could lead to serious latency issues when regular workload was mixed with administrative workload such as index builds or rebuilds. These issues were rooted in the architecture of Database Mirroring (which did not change at all in SQL Server 2012). Under high stress, situations could occur where even on the Principal the data to be transferred had to be physically read from the transaction log of the Principal again. The cache which Database Mirroring uses to buffer the data to be transmitted proved to be way too small for such high stress situations, and it was not able to adjust or expand. This is different with the Log Cache, which we have already seen grow to several gigabytes on large servers. High stress scenarios in which we could reproduce Database Mirroring causing the problems described in the OSS note did not show any problems with the AlwaysOn functionality.

Some more Details on Data Replication

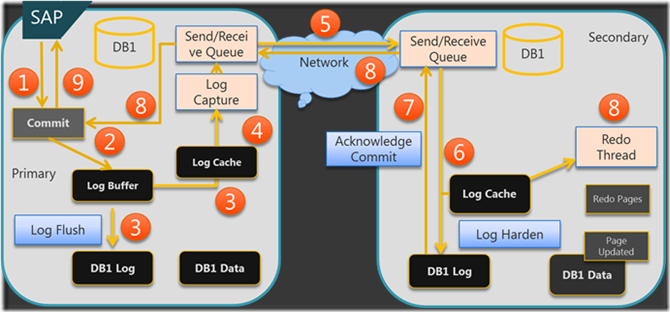

The diagram gives a rough picture of how synchronous transaction log replication works, in 9 steps which we’ll go through. As mentioned, the picture is rough and hides a lot of detail and, to a degree, timing. But it is meant to give an overview of how the replication of transaction log records from the primary replica to a secondary replica works:

Step 1: In a typical case the SAP application sends a commit to finalize a transaction.

Step 2: The commit record is inserted into the current log buffer.

Step 3: The commit record triggers a flush of the log buffer to persist the buffer in the transaction log on the instance running the primary replica. At the same time the buffer is copied into the new log cache. If all secondary replicas are in an asynchronous availability mode, the success of this step is sufficient: once the I/O to the local transaction log has completed successfully, the acknowledgment of a successful commit is sent back to the application.

Step 4: The new log capture framework captures the new log data and hands it over to our messaging framework, which manages the send and receive queues as well as failure detection in the network traffic.

Step 5: The change records as persisted to the transaction log of the primary are transferred to the instances in the secondary role. The log content is compressed and encrypted before it is sent to the instances running the secondary replicas.

Step 6: The content received from the messaging framework is persisted in the transaction log of the secondary replica and at the same time inserted into the log cache of the secondary replica.

Step 7: The moment the content has been successfully persisted in the transaction log of the secondary instance, an acknowledgment message is sent to the messaging framework.

Step 8: As the acknowledgment of the successful persist on the secondary replica is sent to the primary replica, the secondary replica starts to apply the changes to the actual data pages. Once the acknowledgments of all synchronous secondary replicas have been received on the instance running the primary replica, the next step can be done.

Step 9: The acknowledgment is sent to the SAP application.
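
The condition under which step 9 can happen can be boiled down to a few lines. The following Python sketch is a simplified model of the steps above, not actual SQL Server logic. The class `SecondaryState` and the function `can_acknowledge_commit` are names we made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class SecondaryState:
    name: str
    synchronous: bool       # resulting availability mode towards this secondary
    hardened: bool = False  # step 7: log content persisted and acknowledged
    redone: bool = False    # step 8: changes applied to the data pages


def can_acknowledge_commit(primary_hardened: bool, secondaries) -> bool:
    """Step 9 requires the local flush plus the acknowledgment of every synchronous secondary."""
    if not primary_hardened:
        return False
    # Asynchronous secondaries are not waited for, and redo is not waited for either.
    return all(s.hardened for s in secondaries if s.synchronous)


# Hypothetical configuration: one synchronous and one asynchronous secondary.
replicas = [
    SecondaryState("sync-1", synchronous=True),
    SecondaryState("async-1", synchronous=False),
]
waiting = can_acknowledge_commit(True, replicas)   # sync-1 has not acknowledged yet
replicas[0].hardened = True                        # step 7 completed on sync-1
ready = can_acknowledge_commit(True, replicas)     # redo on sync-1 is still pending
print(waiting, ready)
```

Note that `redone` plays no role in the decision. The commit is acknowledged as soon as the log content is persisted on the synchronous secondaries, regardless of whether the changes were already applied to the data pages there.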

I think this is enough for a first rough explanation of AlwaysOn. As you have likely realized, there was no mention of a Witness as we had it in Database Mirroring. The next part of the series will explain why we don't need a witness instance anymore and what takes its place.