NIPING – a useful tool from SAP

This article was motivated by a customer support case we recently worked on. After the customer rolled out new NICs, new drivers and new NIC teaming software, the DBMS server of their SAP ERP system seemed to respond sluggishly at times. What brought us to look into the network was that ST05 traces in ERP showed response times of 50-70 ms for very simple queries we would have expected to execute within a few milliseconds. Checking the DMV sys.dm_exec_query_stats on the SQL Server side revealed that the same queries recorded in the 50-70 ms range on the SAP side were noted by SQL Server as executing within 3-4 ms. Hence we focused on the network. For the basic investigations we used NIPING as described in this article.

What is NIPING?

NIPING is a tool that SAP delivers in the SAP executable directory (..\usr\sap\<SID>\D<instance enumeration>\exe). SAP support uses the tool to check network connectivity and, more importantly, network latency and throughput. In the past, however, the tool was rarely used when configuring SAP landscapes. We relied on the assumption that simply doubling the number of network cards (NICs) would be sufficient to increase the throughput of the DBMS server, whereas the application servers were usually fine with the throughput a single NIC could provide. With the trend toward many-core processors, however, we more and more often see network throughput issues even with 1 Gb Ethernet NICs. In some cases, software components such as NIC teaming software and other software that interferes with network packets (like IPSec, possible encryption of network traffic, etc.) can also lower the throughput significantly below what is theoretically possible. Hence it makes sense to use this little tool from SAP to test network throughput under real conditions, with exactly the same network stack that SAP programmed into its disp+work executable.

How does it work?

You need to start at least two NIPING sessions: one in the server role and at least one more in the client role on another server. The server session can receive from multiple transmitting clients, so the scenario of a DBMS server receiving data from multiple SAP application servers and sending data back to the very same application servers can be reproduced. NIPING thereby checks both the receiving and the transmitting part: the bytes sent by the NIPING session on an application server are returned by the receiving session to the sender. SAP has an OSS note #500235 which describes the usage of NIPING quite well.
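To make the echo principle concrete, here is a minimal Python sketch that imitates it inside a single process. It is not NIPING's implementation: the function names are hypothetical, and `socket.socketpair()` stands in for a real network connection, so the times it reports say nothing about a real NIC. A helper thread plays the server session and returns every batch unchanged, while the client times each send-and-receive round trip.

```python
import socket
import threading
import time


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; a stream socket may deliver one batch in pieces."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def echo_server(sock: socket.socket, batch: int, loops: int) -> None:
    """Hypothetical stand-in for the server session: return each batch unchanged."""
    try:
        for _ in range(loops):
            sock.sendall(recv_exact(sock, batch))
    except (ConnectionError, OSError):
        pass  # client went away early; just stop echoing


def echo_round_trips(batch: int = 50_000, loops: int = 100) -> list[float]:
    """Client side: send `batch` bytes, wait for the echo, record the round trip in ms."""
    client, server = socket.socketpair()
    worker = threading.Thread(target=echo_server, args=(server, batch, loops))
    worker.start()
    payload = b"x" * batch
    times_ms = []
    try:
        for _ in range(loops):
            start = time.perf_counter()
            client.sendall(payload)
            if recv_exact(client, batch) != payload:
                raise ValueError("echoed batch differs from what was sent")
            times_ms.append((time.perf_counter() - start) * 1000.0)
    finally:
        client.close()  # unblocks the echo thread if the loop ended early
        worker.join()
        server.close()
    return times_ms


if __name__ == "__main__":
    rtts = echo_round_trips(batch=50_000, loops=100)
    print(f"{len(rtts)} round trips, avg {sum(rtts) / len(rtts):.3f} ms")
```

The client waits for each echo before sending the next batch, so one session measures round-trip latency, and throughput follows from the batch size divided by that latency.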

Let’s describe a small scenario

Let’s assume that we want to check the network throughput of our DBMS server. To do so, we use four of the SAP application servers to put load on the DBMS server. Assuming that no SAP application software is installed on the DBMS server, we need to copy the following four files into a directory we created on the DBMS server:

- Niping.exe

- Icudt30.dll

- Icuin30.dll

- Icuuc30.dll

The next step is to start the NIPING server session on the DBMS server by running the following command line from a command window in the directory we copied the four files into:

niping -s -I 0

After a successful start, a message like:

Sun Mar 20 15:04:13 2011

ready for connect from client ...

should appear in the command window.

On the application server side, we open a command window and go into one of the SAP directories containing the SAP executables. Here we start the client sessions with the following command line:

niping.exe -c -H <server name which runs the server session> -B 50000 -L 1000

The -B parameter defines the batch size in bytes, whereas the -L parameter defines the number of loops to be worked through, so -L mostly determines the length of the test. To find the maximum data volume we can transfer to and from the DBMS server, we used a batch size of 50 KB (50000 bytes), sent in both directions. After the test has started, the following message should appear in the client's command window:
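As a quick sanity check on what a run with these parameters moves, here is a small Python calculation. It assumes, as described above, that every batch is sent once and echoed back once, and that each loop takes roughly one average round trip; the function names are illustrative only.

```python
def run_volume_bytes(batch_bytes: int, loops: int) -> int:
    """Bytes moved by one client run: each batch goes out once and comes back once."""
    return 2 * batch_bytes * loops


def run_duration_s(loops: int, avg_round_trip_ms: float) -> float:
    """Rough run length if every loop takes about one average round trip."""
    return loops * avg_round_trip_ms / 1000.0


# -B 50000 -L 1000, using the 1.904 ms average reported in the sample output of this test
print(run_volume_bytes(50_000, 1_000))  # 100000000 bytes, about 95 MiB
print(run_duration_s(1_000, 1.904))     # roughly 1.9 seconds
```

This is why -L is mainly a knob for the test length: at a fixed batch size, doubling the loops roughly doubles both the volume and the run time.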

Sun Mar 20 15:13:33 2011

connect to server o.k.

In the NIPING session on the server side, this message should appear:

Sun Mar 20 15:13:33 2011

connect from host '<fully qualified domain name of client>', client hdl 1 o.k.

After the test has completed successfully, the client reports the following result:

Sun Mar 20 15:13:34 2011

send and receive 1000 messages (len 50000)

------- times -----

avg 1.904 ms

max 9.982 ms

min 1.656 ms

tr 51287.892 kB/s

excluding max and min:

av2 1.896 ms

tr2 51500.092 kB/s
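The report values relate to each other in a simple way, and the Python sketch below recomputes them from a list of per-message round-trip times. The tr formula is our inference, not documented NIPING behavior: the sample above is consistent with tr = 2 × len / avg, counting the bytes in both directions and taking 1 kB as 1024 bytes (2 × 50000 bytes / 1.90408 ms ≈ 51287.9 kB/s). We also assume that av2 drops exactly one maximum and one minimum sample.

```python
from statistics import mean


def kb_per_s(msg_len: int, avg_ms: float) -> float:
    """Assumed formula: bytes sent plus bytes echoed, per second, in 1024-byte kB."""
    return 2 * msg_len / (avg_ms / 1000.0) / 1024.0


def summarize(times_ms: list[float], msg_len: int) -> dict[str, float]:
    """Rebuild avg/max/min/tr and av2/tr2 from per-message round-trip times (ms)."""
    if len(times_ms) < 3:
        raise ValueError("need at least 3 samples to exclude max and min")
    avg = mean(times_ms)
    av2 = mean(sorted(times_ms)[1:-1])  # drop one minimum and one maximum
    return {
        "avg": avg,
        "max": max(times_ms),
        "min": min(times_ms),
        "tr": kb_per_s(msg_len, avg),
        "av2": av2,
        "tr2": kb_per_s(msg_len, av2),
    }


print(round(kb_per_s(50_000, 1.90408), 1))             # about 51287.9, matching tr above
print(summarize([1.7, 1.9, 2.0, 9.9], 50_000)["av2"])  # average of 1.9 and 2.0 only
```

Excluding the extremes is what makes av2 and tr2 robust against a single outlier such as the 9.982 ms maximum in the sample.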

We usually use the av2 and tr2 values to calculate the overall throughput. For example, with four client sessions working against one server session, we could see numbers like these:

|  | Client 1 | Client 2 | Client 3 | Client 4 | Sum/Avg |
|---|---|---|---|---|---|
| Avg latency in ms | 3.287 | 3.214 | 2.912 | 2.957 | 3.0925 |
| Transmission volume in Kbit/s | 238,812 | 243,088 | 259,152 | 257,984 | 999,036 |
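The Sum/Avg column combines the sessions differently per row: concurrent sessions add up their throughput, while their latencies are averaged. A minimal Python sketch reproducing that column from the four client values in the table:

```python
from statistics import mean

# Per-client values from the table above.
latency_ms = [3.287, 3.214, 2.912, 2.957]
volume_kbit_s = [238_812, 243_088, 259_152, 257_984]

avg_latency_ms = mean(latency_ms)  # latencies are averaged across sessions
total_kbit_s = sum(volume_kbit_s)  # concurrent throughput adds up

print(f"{avg_latency_ms:.4f} ms, {total_kbit_s:,} Kbit/s")  # 3.0925 ms, 999,036 Kbit/s
```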

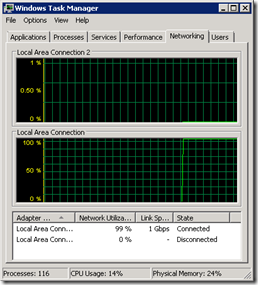

Looking at the numbers, a response time of around 3 ms seems to be OK. Looking at the Kbit/s, we already seem to be close to the physical limit of a 1 Gbit Ethernet card. Checking Task Manager on the server that ran the server session, the network utilization of its only NIC is indeed about 99%, as shown below.

So the result of the test is that the DBMS server can run at line speed in terms of throughput, with acceptable response times.

Reasonable test sizes for everyday situations

Normally, the packets transferred are much smaller than 50 KB. In a typical SAP ERP system, the SAP application instances send prepared, parameterized queries to SQL Server. This means the statement text is usually replaced by a handle, and all that is sent besides the handle is the set of parameter values. In return, SQL Server sends back some metadata descriptions plus the result set. In typical SAP ERP workloads, result sets tend to be on the smaller side. Analyzing an SAP ERP system over some time, we saw that network requests sent to SQL Server averaged 300-500 bytes, but at rates of a few thousand to a few tens of thousands of packets per second. In the other direction, SQL Server typically returned packets of 1000 to 1500 bytes, usually tens of thousands of them per second. Hence another test can be done that uses these smaller packet sizes and focuses on pushing the number of packets sent into the many tens of thousands per second.

One area such a test can check is the configuration of Windows Receive Side Scaling (RSS), a functionality that is essential on today's server hardware. In essence, RSS allows multiple CPUs to process the incoming stream of network traffic. In the old days (before Windows Server 2003 SP2), only one CPU was used to execute the substantial work resulting from a network interrupt. In more recent versions of Windows Server, multiple CPUs can share this work using RSS. However, different network card vendors support RSS in very different ways and configurations. Hence it can make sense to test the RSS configuration with NIPING.
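To plan such a small-packet test, one can estimate how many concurrent client sessions are needed to reach a target packet rate. The Python sketch below assumes that each NIPING client session runs one synchronous round trip at a time, and that a small batch (a few hundred bytes, well below a 1500-byte Ethernet frame) travels as roughly one packet per direction; TCP acknowledgements are ignored. The 0.5 ms round-trip time is a hypothetical value, not a measurement.

```python
import math


def round_trips_per_second(avg_round_trip_ms: float) -> float:
    """One synchronous client session completes about 1000 / avg round trips per second."""
    return 1000.0 / avg_round_trip_ms


def sessions_needed(target_packets_per_s: float, avg_round_trip_ms: float) -> int:
    """Client sessions needed so the server receives target_packets_per_s small packets."""
    return math.ceil(target_packets_per_s / round_trips_per_second(avg_round_trip_ms))


# Hypothetical: 40,000 small requests per second at 0.5 ms per round trip.
print(sessions_needed(40_000, 0.5))  # 20
```

In practice, the av2 measured in a short run with a small -B value would replace the assumed 0.5 ms, and the sessions would be spread over several application servers.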

Another real-life observation is that more packets are pushed from the DBMS server to the various application servers than are received from them; the difference can be a factor of 4-5. So the DBMS server not only sends larger packets, it also sends more of them.
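Because NIPING echoes exactly the bytes it receives, an echo test is symmetric, whereas real SAP traffic is not. The Python sketch below shows how lopsided the bandwidth can get; the request rate and the packet sizes are illustrative values picked from the ranges mentioned above, not measurements.

```python
def mbit_per_s(packets_per_s: float, avg_packet_bytes: float) -> float:
    """Bandwidth in Mbit/s (1 Mbit = 1,000,000 bits) for a packet rate and average size."""
    return packets_per_s * avg_packet_bytes * 8 / 1_000_000


requests_per_s = 10_000  # illustrative: packets from the application servers
packet_factor = 4.5      # DBMS server sends 4-5 times as many packets

inbound = mbit_per_s(requests_per_s, 400)                     # 300-500 byte requests
outbound = mbit_per_s(requests_per_s * packet_factor, 1250)   # 1000-1500 byte replies

print(f"in {inbound:.0f} Mbit/s, out {outbound:.0f} Mbit/s, ratio {outbound / inbound:.1f}")
# in 32 Mbit/s, out 450 Mbit/s, ratio 14.1
```

With these assumed inputs, the outbound direction needs roughly 14 times the bandwidth of the inbound one, which a symmetric echo test cannot reproduce.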

Some other things to take into account

Some NIC teaming software bundles the NICs for sending data only and uses a single NIC for receiving. In those cases NIPING doesn't help to figure out the maximum possible send volume of the DBMS server: the NIPING server session returns exactly the volume it received, so the single receiving NIC limits the test in both directions. However, some NIC teaming software has options to bundle both directions. Bundling both directions is especially interesting for intensive data load situations or for the classical SAP homogeneous, Unicode or heterogeneous system migration. In those cases, SAP application servers are used to run R3Load import processes, so one needs to open up as much receive bandwidth as possible for the DBMS server. Such situations reverse the requirements of everyday life: the DBMS server needs to receive drastically more data than it sends out.
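The limitation of the echo test with send-only teaming can be put into numbers. In this Python sketch, a hypothetical team bundles four 1 Gbit NICs for sending; since the NIPING server session must receive every byte before it can return it, the slower direction caps what the test can show. The sketch ignores how the teaming software distributes individual connections across NICs.

```python
def echo_test_ceiling_gbit(send_gbit: float, receive_gbit: float) -> float:
    """Highest per-direction rate an echo test can show on the server.

    Every byte echoed back must first be received, so the slower
    direction limits both directions.
    """
    return min(send_gbit, receive_gbit)


nic_gbit = 1.0
send_only_team = echo_test_ceiling_gbit(send_gbit=4 * nic_gbit, receive_gbit=nic_gbit)
both_ways_team = echo_test_ceiling_gbit(send_gbit=4 * nic_gbit, receive_gbit=4 * nic_gbit)

print(send_only_team)  # 1.0: the 4 Gbit/s send capacity stays invisible
print(both_ways_team)  # 4.0
```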