Troubleshooting AppFabric Error Messages for SharePoint

Otherwise entitled “The Mysteries of the Wobbly AppFabric Cache-Cluster”.

In the daily running of on-premises SharePoint 2013, you may have seen errors & warnings logged about AppFabric/the distributed cache cluster. This article aims to clear up why they might be happening. Errors such as:

- Connection to the server terminated, check if the cache host(s) is running

- Cache cluster is down, restart the cache cluster and Retry.

These can appear regularly or randomly but the text is basically the same: the cache cluster was unavailable in some way. If you’re seeing (or have seen) these types of errors and you want to know why, there are 8 possible reasons. Read on.

Q1: Is this a development/single server machine?

If your SharePoint installation is running on a single machine then this behaviour is expected. AppFabric is supposed to be running before anything else, but a system restart starts all services at once & if you look further down this article you’ll see that AppFabric can take a while to warm up, so, well, tough.

Even if you had 32 processors + 80GB RAM these errors would probably still be shown at some point as IIS/OWSTIMER would almost certainly be ready before AppFabric is warmed-up which would perfectly explain these errors. Ignore them & move on with life, or get a proper farm if they’re really bugging you.

Q2: Is the AppFabric cluster healthy?

Run these commands in a SharePoint PowerShell console on a server that has the distributed cache role:

- Get-SPServiceInstance | ? {($_.service.tostring()) -eq "SPDistributedCacheService Name=AppFabricCachingService"} | select Server, Status

- Use-CacheCluster ; Get-CacheHost

The 1st line gives SharePoint’s version of what cache-cluster hosts there are & their respective service statuses – each status should say “online”.

The 2nd line gives AppFabric’s version of what hosts there are & their respective health too – each status should say “up”.

If any of these conditions aren’t exactly met, or the list of hosts doesn’t match up between the two outputs, then you should repair the cache-cluster before anything else because it’s unhealthy and this might be related to your errors.
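The comparison between the two outputs can be done by eye, but here is a minimal sketch of doing it in script. It assumes `Get-CacheHost` exposes `HostName` and `Status` properties and that the only naming difference between the two lists is short name versus fully-qualified name; adjust the normalisation if your farm differs.

```powershell
# Run in the SharePoint Management Shell on a distributed cache server.
$cacheServiceName = "SPDistributedCacheService Name=AppFabricCachingService"

# SharePoint's view of the cache hosts (short host name, upper-cased).
$spHosts = Get-SPServiceInstance |
    Where-Object { $_.Service.ToString() -eq $cacheServiceName } |
    ForEach-Object {
        New-Object PSObject -Property @{
            Host   = $_.Server.Address.Split(".")[0].ToUpper()
            Status = "$($_.Status)"
        }
    }

# AppFabric's view of the same hosts (assumes HostName/Status properties).
Use-CacheCluster
$afHosts = Get-CacheHost |
    ForEach-Object {
        New-Object PSObject -Property @{
            Host   = $_.HostName.Split(".")[0].ToUpper()
            Status = "$($_.Status)"
        }
    }

# Hosts that only one side knows about; no output means the lists match.
Compare-Object -ReferenceObject @($spHosts | ForEach-Object { $_.Host }) `
               -DifferenceObject @($afHosts | ForEach-Object { $_.Host })

# Hosts that are not "Online" (SharePoint) or "Up" (AppFabric).
$spHosts | Where-Object { $_.Status -ne "Online" }
$afHosts | Where-Object { $_.Status -ne "Up" }
```

If all three checks print nothing, both sides agree on the host list and every host is healthy. `Compare-Object` will throw if either list is empty, which in itself tells you something is badly wrong.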

Also bear in mind we don’t recommend having more than 3 cache-cluster hosts for various reasons I won’t go into here; if you have more than 3, remove the role from the servers likely to be the idlest in the farm; user-profile servers or something. That leads nicely to this mini-question…

On What Servers Should AppFabric Run?

Normally the web-front-end machines because the web-role will use the cache more intensely than other roles. That being said, WFEs tend to get overloaded because they have to run all sorts of mad code & queries that developers don’t realise are going to murder performance when used outside their dev boxes. Also there’s often just not enough WFEs either, so for both these reasons I tend to recommend moving this role elsewhere by default if you’re seeing cache issues. There’s no concrete advice I can really blog about on this but in general if your WFEs are being hammered, consider moving AppFabric off them to elsewhere in the farm.

Q3: Do cache cluster errors appear on a regular basis?

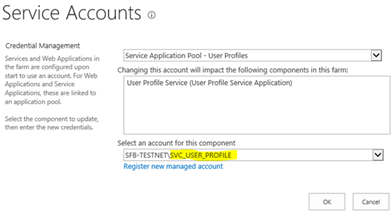

If errors are completely sporadic, skip this bit. If they are weirdly regular then you’re probably looking at an AppFabric permissions error. The user profile service application for example actively uses AppFabric to cache the social data (newsfeeds etc.) and will periodically try to update AppFabric with said data (several times an hour in fact), so if permissions aren’t set up then this will be a pretty regular failure & error message. The message is somewhat misleading too; the cluster isn’t down, just inaccessible because of an active denial, but the client (SharePoint service-application) will assume it’s just completely offline.

If the user profile app (UPA) account doesn’t have permissions, then you’ll see “Cache cluster is down, restart the cache cluster and Retry” a lot specifically, although with the farm account name in the error details as this is a timer-job.

The fix for this is to grant the service-account running whatever service is failing access to the cache-cluster as sometimes these permissions can be missing:

- Grant-CacheAllowedClientAccount -Account "DOMAIN\service_account"

As mentioned, the most likely culprit here will be the user-profile account.

This guy needs access; normally access is given via the local security groups WSS_ADMIN_WPG and WSS_WPG but sometimes they get out of sync so you can add the accounts directly if necessary.
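Before granting anything it can help to see what the cluster already allows. A short sketch, run on a cache host; the account name is the same placeholder as above, and the exact shape of the `Get-CacheAllowedClientAccounts` output may vary, so compare it by eye rather than in script.

```powershell
# Run in the SharePoint Management Shell on a distributed cache server.
Use-CacheCluster

# Accounts currently allowed to talk to the cache cluster.
Get-CacheAllowedClientAccounts

# If the failing service's account is missing, grant it access.
# "DOMAIN\service_account" is a placeholder, not a real account.
Grant-CacheAllowedClientAccount -Account "DOMAIN\service_account"

# List again to confirm the account now appears.
Get-CacheAllowedClientAccounts
```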

Q4: Are your AppFabric servers good enough for the job?

At this point we’ve established the cluster is healthy, and these errors are just randomly coming up. The next question is if the machine(s) running AppFabric have the beans to even run the role reliably?

Each SharePoint server in the farm, if it’s a multi-server farm, needs a minimum of 12GB of RAM (non-shared, non-dynamic) and 4 real, dedicated CPU cores. No fancy virtualisation tricks will suffice here; no excuses no matter how convincing the sales line is (VMware, I’m looking at you). In short, unless there’s a 1:1 “virtual CPU” <-> “physical core” relationship for all 4 cores on each server, your cluster is probably not sufficiently dimensioned and Microsoft will not guarantee AppFabric nor SharePoint will run nicely. Maybe it’ll be fine but we just don’t guarantee it, and if you’re reading this article already then the chances are the hardware is a factor.
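As a quick first check of those numbers, the following sketch reports what Windows itself sees on a server. It only shows the guest’s view; it cannot tell you whether the virtual CPUs map 1:1 to physical cores or whether the memory is dynamic, so that still has to be checked on the hypervisor.

```powershell
# Run locally on each SharePoint server (needs PowerShell 3.0 or later).
$cs   = Get-CimInstance -ClassName Win32_ComputerSystem
$cpus = Get-CimInstance -ClassName Win32_Processor

New-Object PSObject -Property @{
    Server            = $cs.Name
    # Usually reports slightly under the configured amount.
    RamGB             = [math]::Round($cs.TotalPhysicalMemory / 1GB, 1)
    # Cores summed over all sockets, as presented to this OS.
    Cores             = ($cpus | Measure-Object -Property NumberOfCores -Sum).Sum
    LogicalProcessors = $cs.NumberOfLogicalProcessors
}
```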

If you need AppFabric to be reliable then get decent hardware, the end; amen; this is just non-negotiable. You can even virtualise but just be aware you might need more CPU power on the physical host than you planned for if AppFabric suffers.

Why so serious? I’ve seen AppFabric die several times because the farm in question just could not produce response times good enough to even maintain basic health PINGs, and each time it’s been the over-stretched hardware under the hood that’s been the root cause. Sure you can increase the SharePoint time-outs but just know we’re not going to investigate that much if you’re running x3 AppFabric servers on x8 real cores, for example. This comes from many hours of painful investigation concluding the same thing that we’re just not willing to repeat too many times, in the nicest possible way. AppFabric is particularly sensitive to having real hardware & grunt to power it, in my experience.


Q5: Have you tried increasing the SharePoint timeouts?

The default client-side timeouts (the “client” being SharePoint) for AppFabric are quite low and it’s possible a CPU peak pushed SharePoint beyond these values. I’ve done a whole section on this, so go read it.
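For orientation, here is a hedged sketch of reading and raising those client settings with the SharePoint cmdlets. The two container types shown are just examples (there are several more), the values are in milliseconds as far as I know, and 3000 is purely illustrative rather than a recommendation for your farm.

```powershell
# Run in the SharePoint Management Shell.
# Current client settings for two of the cache containers.
Get-SPDistributedCacheClientSetting -ContainerType DistributedLogonTokenCache
Get-SPDistributedCacheClientSetting -ContainerType DistributedViewStateCache

# Raise the timeouts for one container (illustrative values only).
$settings = Get-SPDistributedCacheClientSetting -ContainerType DistributedLogonTokenCache
$settings.RequestTimeout     = 3000
$settings.ChannelOpenTimeOut = 3000
Set-SPDistributedCacheClientSetting -ContainerType DistributedLogonTokenCache `
    -DistributedCacheClientSettings $settings
```

Note the current values before changing anything so you can put them back, and change one container at a time so you can tell which change made a difference.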

Q6: Are your AppFabric servers drowning in other work?

Similar to Q4; it’s possible your servers are overstretched but due to other roles soaking up all the hardware poor Mr AppFabric doesn’t get a word in edgeways. If the AppFabric machines are also hosting the search component(s) for example, and lots of crawling/searching is going on too then it’s more than possible you’ve effectively DOSed your cache-cluster.

Equally with the web-front-end role (the default); users hitting the site will be soaking up CPU that AppFabric may need. In my experience this is rare, but it’s still a factor.

For large installations, consider having dedicated hardware for just the distributed cache roles to avoid this scenario.
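To get a rough feel for whether a cache host is drowning, you can sample a few performance counters while the errors are occurring. This is a sketch only: it assumes the cache service’s process is named `DistributedCacheService`, and the one-minute sampling window is arbitrary.

```powershell
# Run locally on a cache host; samples every 5 seconds for one minute.
Get-Counter -Counter @(
    "\Processor(_Total)\% Processor Time",
    # Per-process CPU; can exceed 100 on multi-core machines.
    "\Process(DistributedCacheService)\% Processor Time",
    "\Memory\Available MBytes"
) -SampleInterval 5 -MaxSamples 12
```

If total CPU is pinned while the cache process itself is using very little, something else on the box is probably starving it.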

Q7: Did your AppFabric machine(s) reboot?

Servers have to reboot occasionally; normally “planned” but often not. This will obviously impact availability of the distributed cache, especially as there’s a warm-up period for an AppFabric node as it copies objects off the other machines before it’ll be available (and conversely, copy objects off itself on stopping).

Let’s say there’s a cluster of x2 machines, the ideal reboot cycle would be:

Server 1 AppFabric:

Server 2 AppFabric:

I’m guessing that after seeing this graphic it’s not necessary to explain why these server reboots need to be timed well; we need to always have at least one server in the “running” state, which isn’t necessarily the same thing as just “Windows service running”. Once started, the AppFabric service needs to copy various things off its siblings before it’ll be usable so there is a warm-up/warm-down period to be taken into account.
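One way to respect that warm-down/warm-up when you control the reboot yourself is to stop the cache service gracefully first and only move to the next server when the cluster is healthy again. A minimal sketch, assuming one server is handled at a time; be aware that `-Graceful` does not always leave enough time to move every cached item on a busy farm.

```powershell
# On the cache server that is about to be rebooted
# (SharePoint Management Shell):
Stop-SPDistributedCacheServiceInstance -Graceful

# ...reboot the server...

# Once the server is back, start its cache service instance again.
$cacheServiceName = "SPDistributedCacheService Name=AppFabricCachingService"
$instance = Get-SPServiceInstance |
    Where-Object {
        $_.Service.ToString() -eq $cacheServiceName -and
        $_.Server.Address -eq $env:COMPUTERNAME
    }
$instance.Provision()

# Only move on to the next server once every host reports "UP".
Use-CacheCluster
Get-CacheHost
```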

The problem often is that many servers are rebooted via group policy (for example) at a specific time all at once so AppFabric often falls over as this warm-up/down never really happens and we lose the cluster, causing all manner of havoc for our calling client; SharePoint. That leads nicely into…

Q8: Is/Was AppFabric Warmed-up & Ready?

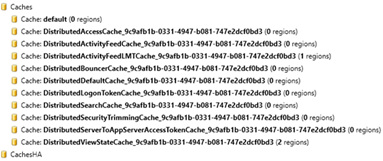

Without the nice restarting scenario shown in Q7, and inevitably at some point even if just once, AppFabric will need to initialise on a cluster level. That takes some time to do as there’s quite a few cache stores to host and during this warm-up time, SharePoint calls to AppFabric are likely to fail.

There’s nothing wrong per se but SharePoint won’t know that; until all these caches are fully initialised we’re likely to see warnings of doom about the cache-cluster. Look in the system logs on each AppFabric machine to see if the services restarted recently.
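A sketch of that log check is below. It assumes the service’s display name is “AppFabric Caching Service” and that the Service Control Manager logs its state changes as event 7036 on your Windows version; the 24-hour window is arbitrary.

```powershell
# Run locally on each AppFabric machine.
$since = (Get-Date).AddHours(-24)

# Start/stop state changes for the AppFabric service in the System log.
Get-WinEvent -FilterHashtable @{
        LogName      = "System"
        ProviderName = "Service Control Manager"
        Id           = 7036
        StartTime    = $since
    } -ErrorAction SilentlyContinue |
    Where-Object { $_.Message -like "*AppFabric Caching Service*" } |
    Select-Object TimeCreated, Message

# When the machine itself last booted.
(Get-CimInstance -ClassName Win32_OperatingSystem).LastBootUpTime
```

If the timestamps line up with your cache errors, you are most likely looking at warm-up rather than a real fault.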

Wait 10 mins; go get a coffee; call the wife/husband, whatever; see if this comes back because your error could be because of this warm-up. You only need to worry if this is a regular occurrence.

Le Fin

So hopefully this’ll help someone figure out some of the deep mysteries of the SharePoint/AppFabric marriage. It’s a funny old beast but when set up properly it does work well, even under extreme stress. You just need to know how to press its buttons.


Cheers!

// Sam Betts