Efficient Buffer management – Reduced LOH allocation

BufferPools

I’m going to talk about the internals of WCF buffer pooling as it stands today. Kenny and Andrew already have posts on buffer pooling that cover it at a higher level. Here I dive into how the buffer pool was optimized further for working with the large object heap. This change didn’t make it into .NET 4.0 RTM, but we created a hotfix that customers can download if they hit this specific issue of fragmentation and garbage collection: https://support.microsoft.com/kb/983182

This doesn’t impact most customers: in steady state, once the application has been running for a while, they generally won’t observe any issue at all.

The Problem

For services that transferred a large amount of data per operation, we noticed a large number of unrooted buffers in the large object heap (LOH - https://msdn.microsoft.com/en-us/magazine/cc534993.aspx). The expectation is that the buffer manager would hold on to and reuse the buffers it allocated. Instead, a large number of objects in the LOH had no valid root, indicating that they were allocated and then dropped. The number of such unrooted objects would sometimes spike and cause the LOH to grow to about 1.8GB until a full GC occurred. This is generally not an issue once the application reaches a stable state, but until it does there can be very heavy GC churn.

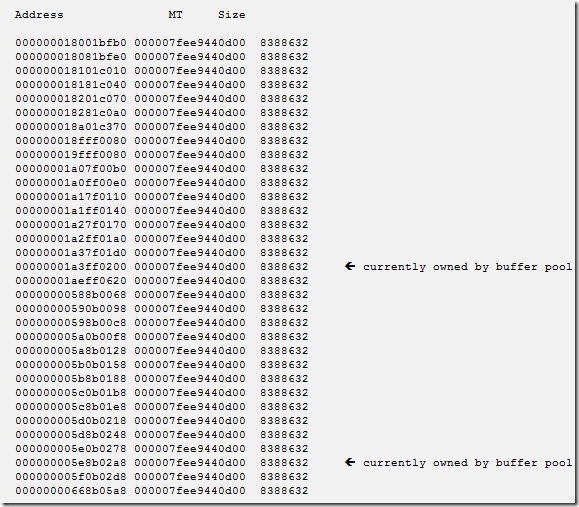

Here is a snapshot from the debugger showing the LOH; only 2 objects are rooted to the buffer pool.

You can see that the memory has a large number of 8MB chunks, of which only a few are rooted to the buffer pool. The rest are available for garbage collection, which can take a while. Such a spike in memory might be a concern for memory-sensitive apps. Remember that these chunks will be reclaimed once a full GC happens. For most applications this would not be an issue, since over time the pool is trained automatically and memory usage settles to reflect the application’s allocation patterns.

Analysis

Internally we have a class called PooledBufferManager. To understand this issue, we need an idea of how PooledBufferManager uses InternalBufferPool, where the actual implementation lives. InternalBufferPool uses a type called SynchronizedPool<byte[]>. The main benefits of SynchronizedPool are its lock-free nature and the training heuristics it provides: it can build thread affinity for buffers and tune quotas depending on usage. This is one highly tuned piece of engineering. The PooledBufferManager holds a number of BufferPools whose sizes increase by powers of 2, plus one pool whose size equals MaxBufferSize.
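The bucket layout described above can be modeled in a few lines. This is a minimal sketch in Python, not the WCF source: the function names are hypothetical, and the smallest bucket size of 128 bytes is an assumption made for the example.

```python
def pool_sizes(max_buffer_size, min_buffer_size=128):
    """Bucket sizes: min_buffer_size doubled repeatedly while below
    max_buffer_size, followed by one bucket of exactly max_buffer_size.
    The default of 128 is an assumption for this sketch."""
    sizes = []
    size = min_buffer_size
    while size < max_buffer_size:
        sizes.append(size)
        size *= 2
    sizes.append(max_buffer_size)
    return sizes


def find_pool_size(requested, sizes):
    """Return the smallest bucket that can hold `requested` bytes,
    or None when the request is larger than every bucket."""
    for size in sizes:
        if requested <= size:
            return size
    return None
```

A request that fits no bucket returns None here; in this model that stands for a buffer that is allocated directly and never pooled.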

The SynchronizedPool itself contains a locked global pool, which starts off with space for just a single entry. As the pool gets used more and more, the numbers of takes and returns are used to compute whether the number of items in a BufferPool should grow; PooledBufferManager performs this tuning. The side effect is that until the pool grows large enough, buffers can’t be returned, so newly allocated buffers are dropped and the GC has to collect them. Concurrent requests naturally put a number of such buffers in flight, but only a few can be returned until the buffer pool grows enough to hold all the returns. The training does not allow very fast growth: the size of a pool grows only by one at a time.
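To get a feel for why slow training leads to drops, here is a toy model in Python. It is only a sketch under stated assumptions: the class name, the rule "grow the limit by one after a fixed number of failed returns", and the threshold of 8 are all hypothetical and are not the heuristics SynchronizedPool actually uses. Only the starting limit of one entry and the one-at-a-time growth come from the description above.

```python
class TrainedPool:
    """Toy pool whose capacity starts at 1 and grows by one slot
    after `misses_before_growth` failed returns (hypothetical rule)."""

    def __init__(self, misses_before_growth=8):
        self.limit = 1            # starts with room for a single entry
        self.items = []
        self.misses = 0           # failed returns since the last growth
        self.dropped = 0          # buffers left for the GC
        self.misses_before_growth = misses_before_growth

    def take(self, size):
        if self.items:
            return self.items.pop()
        return bytearray(size)    # pool empty: allocate a new buffer

    def give_back(self, buf):
        if len(self.items) < self.limit:
            self.items.append(buf)
            return True
        # No free slot: the buffer is dropped and counts toward training.
        self.dropped += 1
        self.misses += 1
        if self.misses >= self.misses_before_growth:
            self.limit += 1       # grow by one slot at a time
            self.misses = 0
        return False


def simulate(rounds, concurrency, size=16):
    """Each round takes `concurrency` buffers, then returns them all."""
    pool = TrainedPool()
    for _ in range(rounds):
        in_flight = [pool.take(size) for _ in range(concurrency)]
        for buf in in_flight:
            pool.give_back(buf)
    return pool
```

Running `simulate(4, 4)` in this model leaves the limit at 2 and drops 10 buffers. With 8MB buffers, every one of those drops would sit on the LOH until the next full GC.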

This is not a problem for objects smaller than 85,000 bytes, since they can be collected by an ephemeral GC, mostly in gen0. Objects of 85,000 bytes or more are allocated on the large object heap, which is not collected until a full GC happens. So during training, large buffers are dropped but not collected, and they wait for a full GC before being reclaimed.

The second part of this issue occurs when a particular buffer builds an affinity with a thread. Once a buffer is associated with a thread, it is moved from the global pool into the per-thread pool. This also means that the buffer can be taken and returned only by that particular thread until enough misses occur to promote another thread. If another thread tries to return a buffer when the total number of entries in the SynchronizedPool is already maxed out, that buffer is also dropped, and requests by other threads also cause allocations.

Because of these two major code paths in the SynchronizedPool, we noticed a very large number of drops and allocations.

Solution

To avoid hitting this code path for large objects, we use a simpler pool that has no training and only a synchronized stack, like the GlobalPool in SynchronizedPool<T>. This makes quota tuning occur much faster, and a buffer is never dropped if there is available capacity. Since there is no thread affinity, there are also no drops during return as long as there is an available slot on the stack.
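The idea of a pool that is just a synchronized stack can be sketched as follows. This is an illustrative Python model, not the hotfix code: the class name, the fixed `capacity` parameter, and the method names are assumptions made for the example.

```python
import threading


class SimpleLargeBufferPool:
    """A lock-protected stack of buffers with no training and no
    thread affinity (hypothetical model of the simpler pool)."""

    def __init__(self, buffer_size, capacity):
        self.buffer_size = buffer_size
        self.capacity = capacity
        self._stack = []
        self._lock = threading.Lock()

    def take(self):
        with self._lock:
            if self._stack:
                return self._stack.pop()
        # Nothing pooled: allocate outside the lock.
        return bytearray(self.buffer_size)

    def give_back(self, buf):
        """Keep the buffer whenever a slot is free, no matter which
        thread returns it; report False only when the stack is full."""
        with self._lock:
            if len(self._stack) < self.capacity:
                self._stack.append(buf)
                return True
        return False
```

Because the only condition for keeping a buffer is a free slot, a buffer taken on one thread and returned on another is still pooled, which is the case that thread affinity could turn into a drop.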

Hence we need the BufferPool to support two different kinds of behaviors.

1. For buffers < 85000 bytes, we keep the default behavior of the buffer pool, since scenarios with very small amounts of data are very sensitive to changes.

2. For buffers >= 85000 bytes, we use a new, simpler pool that doesn’t use SynchronizedPool<T> and saves GC cost.
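The two rules above amount to a size check when each pool is created. A minimal Python sketch, with hypothetical function names and string labels standing in for the two pool implementations:

```python
LARGE_OBJECT_THRESHOLD = 85_000  # bytes; the cutoff used in the list above


def choose_pool_kind(buffer_size):
    """Pick the pool implementation for a bucket of the given size."""
    if buffer_size >= LARGE_OBJECT_THRESHOLD:
        return "simple"        # synchronized stack, no training
    return "synchronized"      # default trained pool


def plan_pools(bucket_sizes):
    """Map each bucket size to the kind of pool that would back it."""
    return {size: choose_pool_kind(size) for size in bucket_sizes}
```

With power-of-2 buckets, for example, a 65536-byte bucket keeps the default pool while a 131072-byte bucket gets the simpler one.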

This means that the buffer pool holds on to LOH objects much more aggressively. As a result, a pool of larger buffers forms more quickly, and GC activity drops because there are fewer large buffer allocations.