How many lines of code does it take to build a complete WP8 speech app?

In today's post, I'm going to show you how to use the three parts of the speech platform on Windows Phone 8.0: Voice Commands, Speech Recognition, and Speech Synthesis. To do that, we're going to build a real application with real value, hopefully in fewer than 100 lines of code.

Ready? Here we go ...

Here's the plan: let's build an application that lets the user search Amazon.com for anything they'd like to find, using nothing but their voice. Here's the flow we'll enable today:

User: "Search On Amazon"

Phone: "What would you like to search for on Amazon?"

User: "Electronics" (or whatever they want)

Phone: "Searching Amazon for Electronics" (or whatever they said they wanted)

Sound interesting? Great.

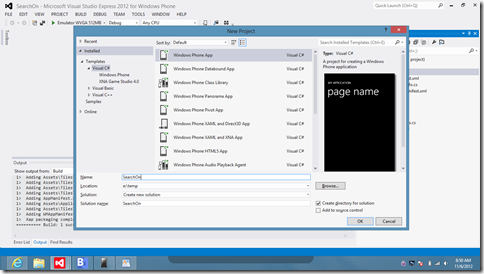

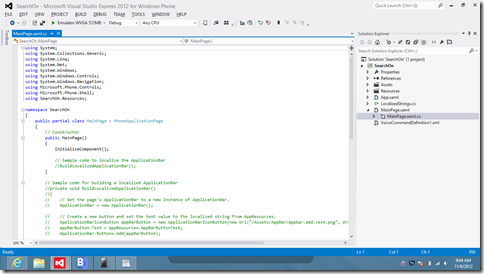

First, let's start off by launching Visual Studio, and creating a new Windows Phone App project.

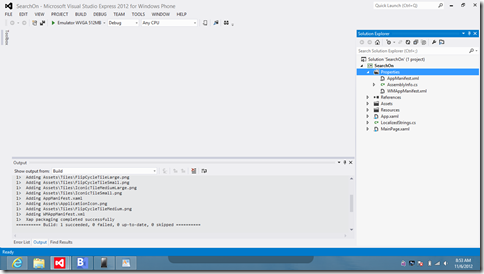

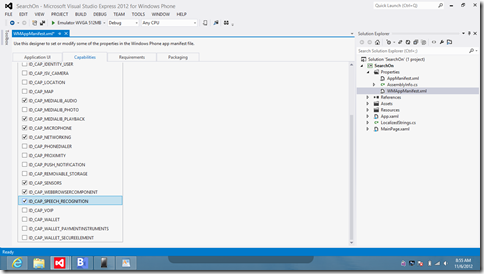

Then, we'll update the application's manifest so we can use speech in our project.

On the Capabilities tab, check the boxes next to the Microphone and Speech Recognition items (ID_CAP_MICROPHONE and ID_CAP_SPEECH_RECOGNITION). This tells the Marketplace, at submission time, that your app needs these capabilities, so the Marketplace can be transparent with end users about what your app is really going to do.

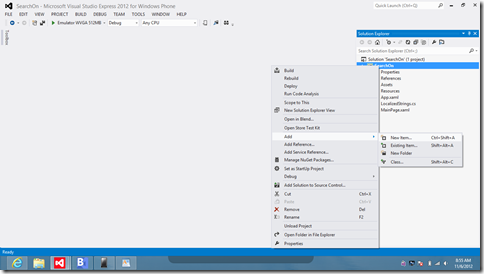

Next, let's add the XML file that specifies the voice commands you want users to be able to say. From the Solution Explorer, add a new item to your project, select the Voice Command Definition item template, and name the file vcd.xml, since that's the name our code will load it by.

The default template shows a few examples of what you can do. In a future post, I'll describe in more detail what each XML element does, and why they're important for your application.

For the app we're building today, simply type the following XML into the editor. As you type, notice that IntelliSense makes suggestions along the way, which makes it fairly easy to build your own XML file from the ground up.

```xml
<?xml version="1.0" encoding="utf-8"?>

<VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.0">

  <CommandSet xml:lang="en-US">

    <CommandPrefix>Search On</CommandPrefix>
    <Example>Amazon</Example>

    <Command Name="searchSite">
      <Example>Amazon</Example>
      <ListenFor>Amazon</ListenFor>
      <Feedback>What would you like to search for on Amazon</Feedback>
      <Navigate Target="MainPage.xaml" />
    </Command>

  </CommandSet>

</VoiceCommands>
```
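To see how the pieces of this file fit together, here's a small standalone sketch (a plain .NET console snippet, not part of the phone app) that reads the same markup with LINQ to XML and lists each phrase a user can say, formed from the CommandPrefix followed by a ListenFor, along with the page it navigates to. DescribeCommands is a hypothetical helper name, and it treats ListenFor text literally, ignoring any of the more advanced ListenFor syntax a richer VCD file might use; on the phone, the VoiceCommandService does the real matching.

```csharp
using System;
using System.Collections.Generic;
using System.Xml.Linq;

// Same markup as vcd.xml (XML declaration omitted), in the VCD 1.0 namespace.
string vcdXml = @"<VoiceCommands xmlns=""http://schemas.microsoft.com/voicecommands/1.0"">
  <CommandSet xml:lang=""en-US"">
    <CommandPrefix>Search On</CommandPrefix>
    <Example>Amazon</Example>
    <Command Name=""searchSite"">
      <Example>Amazon</Example>
      <ListenFor>Amazon</ListenFor>
      <Feedback>What would you like to search for on Amazon</Feedback>
      <Navigate Target=""MainPage.xaml"" />
    </Command>
  </CommandSet>
</VoiceCommands>";

// Hypothetical helper: returns (command name, full phrase, target page) for every ListenFor.
List<(string Command, string Phrase, string Target)> DescribeCommands(string xml)
{
    XNamespace ns = "http://schemas.microsoft.com/voicecommands/1.0";
    var results = new List<(string Command, string Phrase, string Target)>();
    XElement root = XDocument.Parse(xml).Root!;

    foreach (XElement set in root.Elements(ns + "CommandSet"))
    {
        string prefix = (string?)set.Element(ns + "CommandPrefix") ?? "";
        foreach (XElement cmd in set.Elements(ns + "Command"))
        {
            string name = (string?)cmd.Attribute("Name") ?? "";
            string target = (string?)cmd.Element(ns + "Navigate")?.Attribute("Target") ?? "";
            foreach (XElement listenFor in cmd.Elements(ns + "ListenFor"))
            {
                // The user says the prefix followed by one ListenFor phrase.
                results.Add((name, (prefix + " " + listenFor.Value).Trim(), target));
            }
        }
    }
    return results;
}

foreach (var entry in DescribeCommands(vcdXml))
{
    Console.WriteLine($"{entry.Command}: say \"{entry.Phrase}\" -> {entry.Target}");
}
// prints: searchSite: say "Search On Amazon" -> MainPage.xaml
```

Note that the element lookups only work if the namespace in the code matches the xmlns on the root element exactly, which is also what the phone checks when it reads your file.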

Now that we have a Voice Command Definition file (often referred to as a VCD file), let's open the MainPage.xaml.cs file and start writing some actual code! Go ahead and remove all the unnecessary comments first. That'll help us achieve our 100-line goal. :-)

Add the following using directives; we need all of them for the code that follows. The first three are the namespaces for the three areas of the speech platform on Windows Phone 8.0. The next one, System.Threading.Tasks, lets us write our own asynchronous methods that return a Task, so we can call them with the new C# await keyword. The last one, Microsoft.Phone.Tasks, lets us launch the phone's version of Internet Explorer, taking the user directly to Amazon's mobile website.

```csharp
using Windows.Phone.Speech.VoiceCommands;
using Windows.Phone.Speech.Recognition;
using Windows.Phone.Speech.Synthesis;
using System.Threading.Tasks;
using Microsoft.Phone.Tasks;
```

Now, let's install the voice commands we defined above. To do that, add one line of code to the page's constructor, after InitializeComponent:

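```csharp
public MainPage()
{
    InitializeComponent();

    // Register the commands in vcd.xml with the phone's voice command system.
    // The returned async operation isn't awaited (a constructor can't await).
    VoiceCommandService.InstallCommandSetsFromFileAsync(
        new Uri("ms-appx:///vcd.xml"));
}
```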

For now, don't worry about keeping track of whether you've already installed the VCD file. The VoiceCommandService does the right thing if the voice commands are already installed: if you update the VCD file in your application and hit F5, the new voice commands will be registered, but if nothing has changed since the last call to InstallCommandSetsFromFileAsync, the VoiceCommandService is smart enough to detect that no work is necessary.
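Because a constructor can't use await, nothing watches the result of the install call, so a failure during install would go unnoticed. If you'd like to at least see such errors, one option is a small helper like the hypothetical ObserveAsync below, shown as a standalone console sketch: it awaits a task and hands any exception to a callback. A pre-failed stand-in task plays the role of the install operation here; how you'd wire the real one in is left as an assumption.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper: awaits a task that was started elsewhere and passes any
// exception to onError instead of letting it go unobserved.
async Task ObserveAsync(Task task, Action<Exception> onError)
{
    try
    {
        await task;
    }
    catch (Exception ex)
    {
        onError(ex);
    }
}

// Demo with a stand-in task that has already failed.
await ObserveAsync(
    Task.FromException(new InvalidOperationException("install failed")),
    ex => Console.WriteLine($"Voice command install error: {ex.Message}"));
// prints: Voice command install error: install failed
```

In the app, the page constructor would call such a helper without awaiting it, so the page still loads immediately while errors get reported.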

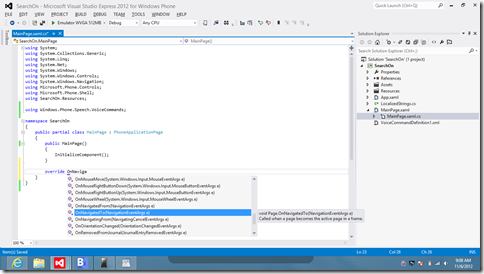

Now that our voice commands are installed, how do we know when a user spoke one of our commands? Great question. That's where the existing OnNavigatedTo method comes in.

When a user speaks a voice command, the VoiceCommandService matches what the user said against all the phrases you registered when you called InstallCommandSetsFromFileAsync. If the VoiceCommandService determines that it's one of your commands, it launches your application on the page specified in your Navigate element's Target attribute.

So, let's go ahead and override the OnNavigatedTo protected method.

When OnNavigatedTo is called, we can tell whether it's a "New" navigation, as opposed to a "Back" or a "Refresh", by checking the event args' NavigationMode property, whose value comes from the NavigationMode enumeration. The page's NavigationContext also gives us the entire query string, already broken up into an IDictionary<string, string>. If a query string parameter named "voiceCommandName" is present in that dictionary, you'll know that you've been launched by the VoiceCommandService, and its value tells you which voice command the user spoke.

So, in our version of OnNavigatedTo, we'll check whether it's a new navigation and a voice command; if so, we'll pass the query string dictionary to another method that actually handles the voice command(s).

If the app was launched some other way (a new navigation without a voice command), you wouldn't normally do what I'm doing here. However, to keep our app as simple as possible, we're simply going to launch IE on Amazon.com's mobile web page.

Also, for this simple application, there's no reason for our page to stay on the back stack, so we'll try to get it off. Since I don't know of a clean way to do that, I've opted to call GoBack on the NavigationService, which conceptually makes sense but will actually throw an exception, since nothing is on the back stack. That's OK for this sample app, because the unhandled exception closes the app, which has the same desired effect. Note that the Debugger.IsAttached check means this only happens when no debugger is attached.

```csharp
protected override void OnNavigatedTo(NavigationEventArgs e)
{
    base.OnNavigatedTo(e);

    if (e.NavigationMode == NavigationMode.New &&
        NavigationContext.QueryString.ContainsKey("voiceCommandName"))
    {
        // Launched by the VoiceCommandService: handle the spoken command.
        HandleVoiceCommand(NavigationContext.QueryString);
    }
    else if (e.NavigationMode == NavigationMode.New)
    {
        // Normal launch (e.g., from the app list): just open Amazon's mobile site.
        NavigateToUrl("https://m.amazon.com");
    }
    else if (e.NavigationMode == NavigationMode.Back &&
             !System.Diagnostics.Debugger.IsAttached)
    {
        // Returning to this page (e.g., pressing Back in the browser). The back stack
        // is empty, so GoBack throws and the app closes; skipped while debugging.
        NavigationService.GoBack();
    }
}
```
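NavigationContext does the query string parsing for you, but it can help to see what's going on underneath. Here's a standalone sketch that splits a navigation URI's query string into a dictionary and applies the same voiceCommandName check as OnNavigatedTo. ParseQuery and IsVoiceCommandLaunch are hypothetical names, and the sample URI (including a reco parameter holding the recognized phrase) is an assumption about what a voice-command launch looks like, used only for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for NavigationContext.QueryString: splits the query part of a
// navigation URI into key/value pairs, unescaping each one.
Dictionary<string, string> ParseQuery(string navigationUri)
{
    var result = new Dictionary<string, string>();
    int questionMark = navigationUri.IndexOf('?');
    if (questionMark < 0)
        return result;

    foreach (string pair in navigationUri.Substring(questionMark + 1)
                                         .Split('&', StringSplitOptions.RemoveEmptyEntries))
    {
        int equals = pair.IndexOf('=');
        string key = equals < 0 ? pair : pair.Substring(0, equals);
        string value = equals < 0 ? "" : pair.Substring(equals + 1);
        result[Uri.UnescapeDataString(key)] = Uri.UnescapeDataString(value);
    }
    return result;
}

// Same test OnNavigatedTo uses to decide whether the VoiceCommandService launched the page.
bool IsVoiceCommandLaunch(IDictionary<string, string> query) =>
    query.ContainsKey("voiceCommandName");

// Assumed example URI for a voice-command launch.
var launchQuery = ParseQuery("/MainPage.xaml?voiceCommandName=searchSite&reco=Search%20On%20Amazon");
Console.WriteLine(IsVoiceCommandLaunch(launchQuery)); // True
Console.WriteLine(launchQuery["voiceCommandName"]);   // searchSite
```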

Now, let's add the method that dispatches voice commands. For now we only have one voice command, but we'll add more in the next post, so structuring the code this way up front will save us a little time later.

```csharp
private void HandleVoiceCommand(IDictionary<string, string> queryString)
{
    // voiceCommandName matches the Name attribute of a <Command> in vcd.xml.
    if (queryString["voiceCommandName"] == "searchSite")
    {
        SearchSiteVoiceCommand(queryString);
    }
}
```
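If you expect to add more voice commands, one alternative to a growing chain of if statements is a dictionary keyed by voiceCommandName, which is the Name attribute of each `<Command>` in the VCD file. Here's a standalone sketch of that design; Dispatch is a hypothetical name, and its handler just records a message in place of calling something like SearchSiteVoiceCommand.

```csharp
using System;
using System.Collections.Generic;

var log = new List<string>();

// Hypothetical dispatch table: voiceCommandName -> handler.
// The handler only logs here; in the app it would start the real conversation.
var handlers = new Dictionary<string, Action<IDictionary<string, string>>>
{
    ["searchSite"] = queryString => log.Add("searchSite handled"),
    // Each future command gets one entry here.
};

// Returns false when the page wasn't launched by a command we know about.
bool Dispatch(IDictionary<string, string> queryString)
{
    if (queryString.TryGetValue("voiceCommandName", out var name) &&
        handlers.TryGetValue(name, out var handler))
    {
        handler(queryString);
        return true;
    }
    return false;
}

Console.WriteLine(Dispatch(new Dictionary<string, string> { ["voiceCommandName"] = "searchSite" }));     // True
Console.WriteLine(Dispatch(new Dictionary<string, string> { ["voiceCommandName"] = "unknownCommand" })); // False
```

Adding a command then means one new VCD `<Command>` plus one new dictionary entry, and unknown names fall through safely instead of being silently ignored inside an if chain.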

OK. Now, let's actually do the site search. But wait a minute: we don't know what the user wants to search for yet, right?

Let's pick up where the VoiceCommandService left off in the conversation with the user. After saying "Search On Amazon", they'll hear the prompt "What would you like to search for on Amazon", thanks to the `<Feedback>` element of the searchSite `<Command>` in our VCD file.

So, let's add a new method that handles the conversation from that point on, broken up into three logical pieces.

First, we'll get some text from the speech recognizer. Then, if it recognized something, we'll let the user know what we're doing by telling them that we're about to do the search. Finally, we'll navigate to the site to actually do the search.

```csharp
private async void SearchSiteVoiceCommand(IDictionary<string, string> queryString)
{
    // 1. Ask the user what to search for.
    string text = await RecognizeTextFromWebSearchGrammar();
    if (text != null)
    {
        // 2. Tell the user what we're about to do.
        await Speak(string.Format("Searching Amazon for {0}", text));

        // 3. Open the results; escape the text so spaces or '&' can't break the URL.
        NavigateToUrl(string.Format("https://www.amazon.com/gp/aw/s/ref=is_box_?k={0}",
            Uri.EscapeDataString(text)));
    }
}
```
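One detail worth calling out in that last step: the recognized text ends up in a URL query string, where spaces, '&' and '#' have special meaning (an unescaped '&' would start a new parameter). Here's a standalone sketch of escaping the text with Uri.EscapeDataString before building the search URL; BuildAmazonSearchUrl is a hypothetical helper, and the URL format is simply the one used in SearchSiteVoiceCommand.

```csharp
using System;

// Hypothetical helper: builds the search URL, escaping the recognized text so characters
// like spaces, '&' and '#' can't change the meaning of the query string.
string BuildAmazonSearchUrl(string searchText) =>
    "https://www.amazon.com/gp/aw/s/ref=is_box_?k=" + Uri.EscapeDataString(searchText);

Console.WriteLine(BuildAmazonSearchUrl("salt & pepper"));
// prints: https://www.amazon.com/gp/aw/s/ref=is_box_?k=salt%20%26%20pepper
```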

OK. Time to use the Speech Recognition APIs. Again, in a future post I'll describe the various parameters and features in more detail, along with why they're available in the namespace. But for now, just type the following code into Visual Studio:

```csharp
private async Task<string> RecognizeTextFromWebSearchGrammar()
{
    string text = null;
    try
    {
        SpeechRecognizerUI sr = new SpeechRecognizerUI();

        // Predefined grammar tuned for web-search-style phrases.
        sr.Recognizer.Grammars.AddGrammarFromPredefinedType("web", SpeechPredefinedGrammar.WebSearch);

        // Customize the built-in listening UI; skip the read-back and confirmation screen.
        sr.Settings.ListenText = "Listening...";
        sr.Settings.ExampleText = "Ex. \"electronics\"";
        sr.Settings.ReadoutEnabled = false;
        sr.Settings.ShowConfirmation = false;

        SpeechRecognitionUIResult result = await sr.RecognizeWithUIAsync();
        if (result != null &&
            result.ResultStatus == SpeechRecognitionUIStatus.Succeeded &&
            result.RecognitionResult != null &&
            result.RecognitionResult.TextConfidence != SpeechRecognitionConfidence.Rejected)
        {
            text = result.RecognitionResult.Text;
        }
    }
    catch
    {
        // Treat any recognition failure as "nothing recognized"; the caller checks for null.
    }
    return text;
}
```
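RecognizeTextFromWebSearchGrammar returns null whenever nothing usable was recognized, and SearchSiteVoiceCommand then quietly stops. If you'd rather give the user another chance, a retry wrapper is one option. Here's a standalone sketch, assuming a hypothetical RecognizeWithRetriesAsync helper and an attempt limit you'd pick yourself; a fake recognizer stands in for the real one so the snippet runs anywhere.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical retry wrapper. 'recognize' stands in for RecognizeTextFromWebSearchGrammar,
// which returns null when nothing usable was recognized; maxAttempts is your own policy.
async Task<string?> RecognizeWithRetriesAsync(Func<Task<string?>> recognize, int maxAttempts)
{
    for (int attempt = 1; attempt <= maxAttempts; attempt++)
    {
        string? text = await recognize();
        if (text != null)
            return text;
    }
    return null; // every attempt came back empty
}

// Demo with a fake recognizer that fails once and then "hears" electronics.
int calls = 0;
string? heard = await RecognizeWithRetriesAsync(
    () => Task.FromResult<string?>(++calls == 1 ? null : "electronics"),
    maxAttempts: 3);
Console.WriteLine($"{heard} after {calls} attempts"); // electronics after 2 attempts
```

In a real app you'd probably want to stop retrying when the user deliberately cancels; this sketch can't tell a cancel from a misrecognition, because the original method returns null for both.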

Similarly, here's the method we'll use to speak feedback to the end user with the built-in SpeechSynthesizer. It's simpler than the recognition code: speech synthesis, often called TTS (text-to-speech), is usually more straightforward from a programming standpoint.

```csharp
private async Task Speak(string text)
{
    SpeechSynthesizer tts = new SpeechSynthesizer();
    await tts.SpeakTextAsync(text);
}
```

And finally, here's the method we'll use to navigate to the specified URL.

```csharp
private void NavigateToUrl(string url)
{
    WebBrowserTask task = new WebBrowserTask();
    task.Uri = new Uri(url, UriKind.Absolute);
    task.Show();
}
```
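NavigateToUrl constructs the Uri directly, and new Uri(url, UriKind.Absolute) throws a UriFormatException if the string isn't a valid absolute URI. That's unlikely with the URLs in this app, but if you reuse the method for other input, a guard along these lines may help. It's a standalone sketch; TryGetWebUri is a hypothetical name, and accepting only http and https is an assumed policy.

```csharp
using System;

// Hypothetical guard: validates a string before it reaches new Uri(...) and WebBrowserTask.
// Accepting only http and https is an assumed policy.
bool TryGetWebUri(string url, out Uri? uri)
{
    if (Uri.TryCreate(url, UriKind.Absolute, out uri) &&
        (uri.Scheme == Uri.UriSchemeHttp || uri.Scheme == Uri.UriSchemeHttps))
    {
        return true;
    }
    uri = null;
    return false;
}

Console.WriteLine(TryGetWebUri("https://m.amazon.com", out _));   // True
Console.WriteLine(TryGetWebUri("ftp://example.com/file", out _)); // False
```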

That's it. 100 lines of code. Exactly! Not bad, eh? :-)

Oh yeah ... In my application, I've also removed nearly all the UI elements from the XAML file (MainPage.xaml), so there's nothing on screen to distract the user while the speech conversation is happening. Normally you'd have a real page, but this is just a 100-line sample, right? :-)

OK. Time to try it out. If you haven't typed it all in yet, you can go ahead and cheat: copy and paste the code from the full listing below. Be sure to do the first few steps and declare the required capabilities; otherwise you'll get an Unauthorized exception when you call the Speech Recognition APIs.

Press F5, and you should land in the emulator, on Amazon's mobile web page.

Great. Our Voice Commands should be installed now.

Now, press and hold the Start button (the middle hardware button beneath the screen), and wait for the Speech "Listening..." screen to pop up. If you haven't run speech in the emulator yet, you'll need to accept the speech terms of use. Let's follow the script from above now:

You: "Search On Amazon"

Phone: "What would you like to search for on Amazon?"

You: "Electronics" (or whatever you want)

Phone: "Searching Amazon for Electronics" (or whatever you said you wanted)

Sometimes when you're using a fresh copy of the emulator, or if you haven't accepted the terms of use yet, you'll have to wait a few seconds for the voice commands to become active. If it doesn't work the first time, try, try again. Or at least, try twice. :-)

That's it. You've now built a reasonably useful speech-enabled application for Windows Phone 8.0. In 100 lines of code.

Here's the full code listing:

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Windows;
using System.Windows.Navigation;
using Microsoft.Phone.Controls;
using Windows.Phone.Speech.VoiceCommands;
using Windows.Phone.Speech.Recognition;
using Windows.Phone.Speech.Synthesis;
using System.Threading.Tasks;
using Microsoft.Phone.Tasks;

namespace SearchOn
{
    public partial class MainPage : PhoneApplicationPage
    {
        public MainPage()
        {
            InitializeComponent();
            VoiceCommandService.InstallCommandSetsFromFileAsync(new Uri("ms-appx:///vcd.xml"));
        }

        protected override void OnNavigatedTo(NavigationEventArgs e)
        {
            base.OnNavigatedTo(e);
            if (e.NavigationMode == NavigationMode.New && NavigationContext.QueryString.ContainsKey("voiceCommandName"))
            {
                HandleVoiceCommand(NavigationContext.QueryString);
            }
            else if (e.NavigationMode == NavigationMode.New)
            {
                NavigateToUrl("https://m.amazon.com");
            }
            else if (e.NavigationMode == NavigationMode.Back && !System.Diagnostics.Debugger.IsAttached)
            {
                NavigationService.GoBack();
            }
        }

        private void HandleVoiceCommand(IDictionary<string, string> queryString)
        {
            if (queryString["voiceCommandName"] == "searchSite")
            {
                SearchSiteVoiceCommand(queryString);
            }
        }

        private async void SearchSiteVoiceCommand(IDictionary<string, string> queryString)
        {
            string text = await RecognizeTextFromWebSearchGrammar();
            if (text != null)
            {
                await Speak(string.Format("Searching Amazon for {0}", text));
                NavigateToUrl(string.Format("https://www.amazon.com/gp/aw/s/ref=is_box_?k={0}", Uri.EscapeDataString(text)));
            }
        }

        private async Task<string> RecognizeTextFromWebSearchGrammar()
        {
            string text = null;
            try
            {
                SpeechRecognizerUI sr = new SpeechRecognizerUI();
                sr.Recognizer.Grammars.AddGrammarFromPredefinedType("web", SpeechPredefinedGrammar.WebSearch);
                sr.Settings.ListenText = "Listening...";
                sr.Settings.ExampleText = "Ex. \"electronics\"";
                sr.Settings.ReadoutEnabled = false;
                sr.Settings.ShowConfirmation = false;
                SpeechRecognitionUIResult result = await sr.RecognizeWithUIAsync();
                if (result != null &&
                    result.ResultStatus == SpeechRecognitionUIStatus.Succeeded &&
                    result.RecognitionResult != null &&
                    result.RecognitionResult.TextConfidence != SpeechRecognitionConfidence.Rejected)
                {
                    text = result.RecognitionResult.Text;
                }
            }
            catch
            {
            }
            return text;
        }

        private async Task Speak(string text)
        {
            SpeechSynthesizer tts = new SpeechSynthesizer();
            await tts.SpeakTextAsync(text);
        }

        private void NavigateToUrl(string url)
        {
            WebBrowserTask task = new WebBrowserTask();
            task.Uri = new Uri(url, UriKind.Absolute);
            task.Show();
        }
    }
}
```