Optimizing Intermediary Services for Throughput

I recently had the privilege of working with a couple of engineers (Naveed Zaheer and Manoj Kumar) in an internal lab setting to see how much throughput we could achieve through the Managed Services Engine (MSE) and a downstream WCF service hosted in IIS.

This post highlights some configuration settings that were applied during our work to achieve the results we were looking for. The findings here are specific to the environment we were testing in, which was a controlled lab setting. Any use of these settings should be tested in your own environment prior to placing them in a production environment.

While these findings were specific to testing that was done for MSE, the findings here could be beneficial to anyone who has a service intermediary in their environment.

Environment

The figure below illustrates, at a high level, the environment we tested in. The users were simulated using two Visual Studio 2008 Test Agents simulating 100 users. The MSE server and the IIS target WCF server were both dual-processor machines. The target WCF service was a singleton service, and the method we called simply slept for 100 milliseconds and returned.

MSE, for those not familiar with it, is a service intermediary that sits between a WCF service and its clients. This intermediary virtualizes service endpoints for the clients to consume and brokers the calls to the physical WCF service. For more information, please refer to these resources:

Service Virtualization – Executive Summary

MSDN Magazine: Service Virtualization With The Managed Services Engine

An Introduction to Service Virtualization on the Microsoft .NET Platform

A CTP of the solution is available here. This solution is also deliverable through Microsoft Consulting Services.

Problem

Initial tests revealed that we could process only ~55 requests/second. This baseline was concerning given the load of 100 users and the short duration (100 milliseconds) of the method being called. Simple, conservative math says that even if each call took a full second, we should be able to handle 100 requests/second.
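The back-of-the-envelope bound above can be written out using Little's Law for a closed-loop load test. This is only a sketch: it assumes each simulated user sends its next request as soon as the previous one returns (no think time), which the test setup described here does not confirm. Here N is the number of concurrent users, R the average response time, and X the throughput.

```latex
X = \frac{N}{R}
\qquad
X_{R = 1\,\mathrm{s}} = \frac{100}{1\,\mathrm{s}} = 100 \ \text{requests/second}
\qquad
X_{R = 0.1\,\mathrm{s}} = \frac{100}{0.1\,\mathrm{s}} = 1000 \ \text{requests/second}
```

Read the other way, a measured 55 requests/second with 100 users would imply an average response time of roughly 100 / 55 ≈ 1.8 seconds per call, against 0.1 seconds of actual work in the service method.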

Resolution

Adjustment on the target WCF Service machine

The first thing we noticed was very limited throughput on the backend target WCF service server. WCF service throttling was in place, but increasing the throttle settings didn’t improve the situation. To simplify our testing, we eliminated the MSE server and went directly to the WCF service hosted in IIS, and still observed limited throughput. To resolve this, we added the following configuration to the aspnet.config file.

<system.web>
  <applicationPool maxConcurrentRequestsPerCPU="5000" maxConcurrentThreadsPerCPU="0" requestQueueLimit="5000" />
</system.web>

As a result, we saw the throughput on the target IIS server increase to more than 4x our baseline, reaching ~240 requests/second. Support for these settings in the aspnet.config file was added in .NET Framework 3.5 SP1. The values we used are the default values in .NET Framework 4.0. Further information on these settings is documented here and here.

The aspnet.config file is located at:

- 32-bit: %WinDir%\Microsoft.NET\Framework\v2.0.50727\aspnet.config

- 64-bit: %WinDir%\Microsoft.NET\Framework64\v2.0.50727\aspnet.config

Adjustment on the MSE machine

Satisfied that we had opened up the throughput to the target WCF service hosted in IIS, we re-introduced the MSE server into the test cases and immediately saw end-to-end throughput drop back to close to our original baseline. A dump of the MSE process revealed several threads with this call stack:

Child-SP RetAddr Call Site

000000004b1cb2a0 000007fef80ce018 System_ni!System.Net.AutoWebProxyScriptEngine.EnterLock

000000004b1cb2f0 000007fef80cc526 System_ni!System.Net.AutoWebProxyScriptEngine.GetProxies

000000004b1cb390 000007fef81228e7 System_ni!System.Net.WebProxy.GetProxiesAuto

000000004b1cb3f0 000007fef81226a4 System_ni!System.Net.ProxyScriptChain.GetNextProxy

000000004b1cb440 000007fef7c15342 System_ni!System.Net.ProxyChain+ProxyEnumerator.MoveNext

000000004b1cb4a0 000007fef7c1516d System_ni!System.Net.ServicePointManager.FindServicePoint

… stack truncated for brevity

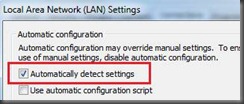

The HTTP bindings in WCF, such as basicHttpBinding and wsHttpBinding, use the system-wide proxy settings by default. This was clearly restricting throughput in our scenario, since so many threads were waiting on the same lock while trying to resolve a proxy. Checking the LAN settings revealed that the “Automatically detect settings” option was checked (see below). Since our servers were all local, we unchecked this setting, re-ran the test, and found that our throughput was back to ~240 requests/second. After this change, the overhead that MSE added to the full end-to-end call was almost negligible.

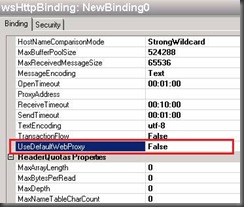

Turning off the “Automatically detect settings” option system-wide is probably not the best solution, and there are other ways to achieve the same result. One is to create a binding configuration and set UseDefaultWebProxy to false (see below). This setting is true by default. We tested this approach and got the same results as turning off the system-wide “Automatically detect settings” option. Our preference is therefore to use this approach, so as not to disrupt other applications that may depend on the system-wide setting.
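In plain WCF configuration, a binding configuration of this kind might look roughly like the sketch below. The binding name "noDefaultProxy" is hypothetical, and basicHttpBinding is used only as an example; how the binding is registered within MSE itself is not covered here.

```xml
<!-- Sketch only: the binding name "noDefaultProxy" is hypothetical. -->
<system.serviceModel>
  <bindings>
    <basicHttpBinding>
      <!-- useDefaultWebProxy is true by default; false skips the system-wide proxy lookup -->
      <binding name="noDefaultProxy" useDefaultWebProxy="false" />
    </basicHttpBinding>
  </bindings>
</system.serviceModel>
```

An endpoint would pick this up by referencing the binding through its bindingConfiguration attribute, for example bindingConfiguration="noDefaultProxy".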

Other properties in the binding configuration can be used to improve performance when a proxy is involved, such as ProxyAddress and BypassProxyOnLocal. We did not test these settings. However, if you have a proxy to consider and you want connections to local services to bypass it, setting these properties should result in similar performance improvements.
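For illustration, these proxy-related properties map to attributes on the same binding element. As with the original experiment, this sketch is untested; the binding name "explicitProxy" and the proxy URL are placeholders, not values from our lab.

```xml
<!-- Sketch only: binding name and proxy URL are hypothetical placeholders. -->
<system.serviceModel>
  <bindings>
    <basicHttpBinding>
      <!-- Use an explicitly named proxy instead of auto-detection,
           and bypass it for addresses considered local -->
      <binding name="explicitProxy"
               useDefaultWebProxy="false"
               proxyAddress="http://proxy.example.com:8080"
               bypassProxyOnLocal="true" />
    </basicHttpBinding>
  </bindings>
</system.serviceModel>
```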

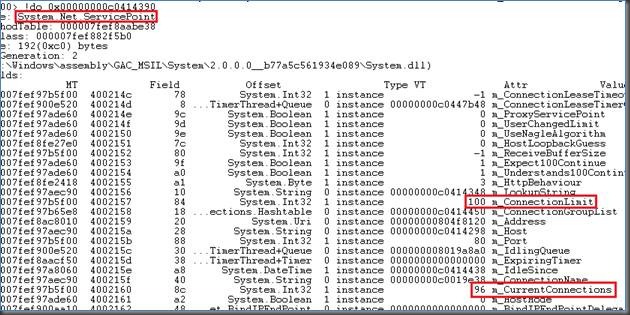

Since resource usage, such as CPU, was still relatively low (less than 50% utilization) on our MSE and target servers, we wanted to see how much more load we could handle. We increased the user load to 125 and saw no increase in overall throughput. We increased it to 150 and, again, saw no gain. This pattern continued up to 200 users: we were seeing the same ~240 requests/second with twice the number of users. Why? What made 100 users special in this scenario was that, by coincidence, 100 was also the number of outbound connections our MSE server was configured for (see below). As you can see, we had essentially exhausted all available connections through this service point.

To resolve this, we increased the connection limit in our configuration to 200 as shown here.

<configuration>
  <system.net>
    <connectionManagement>
      <add address="*" maxconnection="200" />
    </connectionManagement>
  </system.net>
</configuration>

After doing this and re-running the test, we observed an overall throughput of ~430 requests/second, with CPU leveling off at about 65%.

An important note here is that doubling the user load did not double the requests/second. At 100 users, we handled ~240 requests/second. At 200 users, we observed ~430 requests/second (a factor of ~1.8). We also saw the MSE’s overall CPU utilization increase. This trend is natural, however, and the ratio will drop with each further increase. When making adjustments like this, carefully measure your application to make sure you’re achieving optimal throughput. Blindly increasing these values could have adverse effects. More information about connection management can be found here.
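The scaling numbers above can be worked through explicitly. The per-user figures use only the measured values; the implied response times additionally assume a closed-loop test with no think time between requests, which is an assumption on our part rather than something we measured.

```latex
\frac{430}{240} \approx 1.79
\qquad
\frac{240}{100} = 2.4 \ \text{requests/second per user}
\qquad
\frac{430}{200} = 2.15 \ \text{requests/second per user}
```

Under that assumption, the average end-to-end response time would have grown from about 100 / 240 ≈ 0.42 seconds to about 200 / 430 ≈ 0.47 seconds, which is the diminishing return described above.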

The last optimization suggestion noted was to consider using the server version of the CLR garbage collector (GC). By default, the workstation version is used, which is appropriate for single-processor (and sometimes dual-processor) machines. Since the MSE was running on a multi-processor machine, some performance gains “could be realized” by switching to the server version. We didn’t test this, nor did we see any concerns with memory pressure or CPU usage. This was just an observation.
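For reference, the server GC is normally enabled through the gcServer element in the hosting process’s application configuration file. The sketch below shows the general shape only; we did not apply or measure it, and which configuration file applies to the MSE host process is not stated here.

```xml
<configuration>
  <runtime>
    <!-- Opt in to the server garbage collector; the workstation GC is the default -->
    <gcServer enabled="true" />
  </runtime>
</configuration>
```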