University of Wisconsin, Madison finds GIANT number of security bugs during Windows 7 production

In a paper titled: Incremental Compositional Dynamic Test Generation, researchers from the University of Wisconsin, Madison, during the production phase of Windows 7, found one-third of all of the security bugs in Windows 7, using a process called Satisfiability Modulo Theories (SMT) solver or as it also known as: Z3. Since these bugs were found during production prior to release, Windows 7 code was clean prior to any public release, and this is shown by the small number of bugs in the NIST.GOV National Vulnerability Database, with Windows 7 showing 74 security vulnerabilities versus OS X vulnerabilities 491 security vulnerabilities for the past 3 months, and Linux has 101 vulnerabilities.

To be fair, looking at the Windows 7 and Mac OS X, this is a sharp difference between the two approaches to software. Even though Apple states that Mac OS X Snow Leopard is “The world’s most advanced OS”, it is clearly not the world’s most secure OS, security comes at a cost, and I guess one of the costs is the form of Apple coolness. Of course, no OS is secure, all operating systems have vulnerabilities, any assumption that your OS is secure is not a valid assumption.

So how does this work?

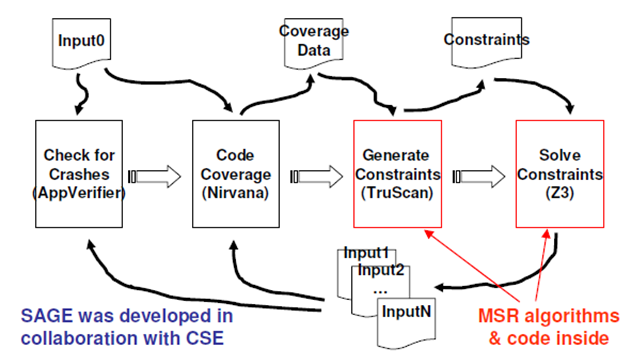

The way it works is that the Whitebox Fuzzer is a software program that runs dynamically while the program that is being tested is also run. The image below shows graphically how it works. Actually, it is a pretty poor schematic. Fuzz testing is form of blackbox testing, so called because in aerospace the aircraft use blackboxes and line technicians have to test them without opening them. In the case of WhiteBox Fuzzers, the system randomly mutates well-formed inputs and tests on the resulting data. Grammars are used to generate the well-formed inputs and encode application-specific knowledge and test heuristics. This could cause low code coverage is the input is a 32-bit input value. Using a concept titled systematic dynamic test generation which generally is based on a search algorithm utilizing collected constraints developed in earlier testing. This models a good human tester as they will repeat know tests that provide breaking scenarios over time. For all of this to make sense you would have to read the paper, which I suggest that you do.

System Architecture

SAGE performs a generational search by repeating four different types of tasks.

- Tester

- Tester task implements the function Run&Check by executing a program under test on a test input and looking for unusual events such as access violation exceptions and extreme memory consumption. The subsequent tasks proceed only if the Tester task did not encounter any such errors. If Tester detects an error, it saves the test case and performs automated triage.

- Tracer

- Tracer task runs the target program on the same input file again, this time recording a log of the run which will be used by the following tasks to replay the program execution offline. This task uses the iDNA framework [3] to collect complete execution traces at the machine-instruction level.

- CoverageCollector

- CoverageCollector task replays the recorded execution to compute which basic blocks were executed during the run. SAGE uses this information to implement the function Score discussed in the previous section.

- SymbolicExecutor

- SymbolicExecutor task implements the function ExpandExecution by replaying the recorded execution once again, this time to collect input related

constraints and generate new inputs using the constraint solver Disolver (yes: it is spelled, disolver, not dissolver)

- SymbolicExecutor task implements the function ExpandExecution by replaying the recorded execution once again, this time to collect input related

What kind of bugs were found?

- Most of the bugs found were Buffer Overruns, and this new technique dynamically tested BILLIONS of instructions using a WhiteBox fuzzer called the SAGE.

Paper summary

The reference paper is a tough read. So here it is in five sentences or less:

- Ask this question: Given a set S of symbolic test summaries for a program Prog and a new version Prog′ of Prog, which summaries in S are still valid must summaries for Prog′?

- Present three algorithms with different precision to statically check which old test summaries are still valid for a new program version.

- Create a simple impact analysis of code changes on the static control-flow and call graphs of the program

- More precise predicate-sensitive refined algorithm using verification-condition generation and automated theorem proving

- Checking the validity of a symbolic test summary against a program regardless of program changes

There, 5 sentences. But really read the paper it is worth the time.