Robo-Ethics: Really? No way…

Recently a few of my colleagues were on Twitter, tweeting about robotic ethics. It seemed pretty dumb to me. I think I have some real-world experience with autonomous vehicles from my work on auto-flight systems in the late 1980s and early 1990s. The system at the time was designed to perform auto-flight duties such as capturing and holding altitude, which, when the aircraft weighs 300 metric tons and you don’t want the passengers to throw up their dinners, is a difficult thing to do. The auto-flight system was also designed to perform auto-lands, bringing the aircraft to a full stop on the runway without pilot intervention.

But this autopilot system was owned by someone, and it could make decisions, such as evaluating the crosswind during a landing. The result of its decision was Boolean in nature: continue the auto-land, or disconnect and perform a stick shake informing the pilots that the auto-land system had been turned off. At that point, the pilots, who were required to track the landing by keeping their hands near the controls, would take over. The pilots could also take over by applying around 2 kilograms of force to the “stick”; this would disconnect the auto-land software, and the pilots would then land the plane.
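The Boolean decision described above can be sketched as one check per control cycle. This is a minimal Python sketch under stated assumptions, not the actual avionics code: the crosswind limit is a hypothetical value, and the names `Sample`, `autoland_step`, and `stick_shake` are illustrative only. The roughly 2 kg override force is the one figure taken from the text.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical limit for illustration only; no real certified value is given here.
CROSSWIND_LIMIT_KTS = 25.0
# "Around 2 kilograms" of stick force, as described in the text.
OVERRIDE_FORCE_KG = 2.0


class Action(Enum):
    CONTINUE_AUTOLAND = "continue"
    DISCONNECT = "disconnect"


@dataclass
class Sample:
    crosswind_kts: float   # estimated crosswind component (hypothetical input)
    stick_force_kg: float  # force the pilot is applying to the stick


def stick_shake() -> None:
    # Placeholder for the alert telling the crew that auto-land is off.
    print("STICK SHAKE: auto-land disconnected")


def autoland_step(s: Sample) -> Action:
    """Return the Boolean outcome: keep flying the auto-land or hand over."""
    # Pilot override: enough force on the stick disconnects the auto-land software.
    if s.stick_force_kg >= OVERRIDE_FORCE_KG:
        return Action.DISCONNECT
    # The system's own decision: crosswind outside limits means disconnect and alert.
    if abs(s.crosswind_kts) > CROSSWIND_LIMIT_KTS:
        stick_shake()
        return Action.DISCONNECT
    return Action.CONTINUE_AUTOLAND


print(autoland_step(Sample(crosswind_kts=10.0, stick_force_kg=0.0)))  # Action.CONTINUE_AUTOLAND
print(autoland_step(Sample(crosswind_kts=10.0, stick_force_kg=2.5)))  # Action.DISCONNECT
```

The point of the sketch is the ownership question: every branch either keeps the machine following a human-set goal or returns control to the humans, so the system never chooses a goal of its own.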

Does this make the auto-flight system a robot? Yes.

Does it make the auto-flight system an autonomous robot? No.

What kind of ethics would be involved with this type of robot? The ethics that have built up with respect to chattel. At one time, many cultures held the legal theory that women and children were the chattel of their fathers or husbands. If these humans misbehaved in any manner, the punishment would extend to the father or husband. In the same way, responsibility for a non-autonomous robot’s actions would extend to its owners, and then, through civil actions, to its creators, provided the owners didn’t misuse the robot’s software or mechanical systems.

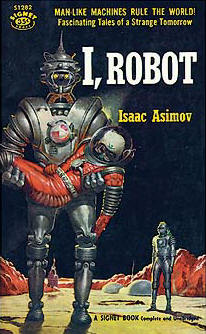

Often, in the stories collected in “I, Robot”, the positronic mind that makes up the robot’s “brain” was built by a monopolistic company. By being the only corporation that could build positronic brains, U.S. Robots and Mechanical Men could control the abilities of the robots. Most of Asimov’s robot stories and their derivatives were about how non-autonomous robots would function and interact with society, technicians and even psychologists (Susan Calvin).

In the “I, Robot” style of story, the robots, with a few exceptions, performed operations under the ultimate control of U.S. Robots, one of its customers, or the government. The original three laws of robotics were an attempt by U.S. Robots to get humans on Earth to accept the use of robots. The stories were about how robots could malfunction while still operating within the three laws. A zeroth law was later added to extend the Foundation stories and to connect them to the “I, Robot” series and its fictional universe.

The three laws:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey any orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.
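Because each law yields to the ones before it, the three laws form a strict priority ordering. The toy Python sketch below assumes, as a large simplification, that every candidate action can be reduced to three yes/no flags; `Outcome`, `law_violations`, and `choose` are hypothetical names, and nothing here models how a positronic brain was supposed to work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical, simplified summary of what a candidate action leads to."""
    name: str
    harms_human: bool     # First Law: injures a human or lets one come to harm
    disobeys_order: bool  # Second Law: fails to carry out a human's order
    harms_self: bool      # Third Law: endangers the robot's own existence


def law_violations(o: Outcome) -> tuple[bool, bool, bool]:
    # Ordered First, Second, Third. False sorts before True, so an action
    # that avoids a higher-priority violation always ranks ahead.
    return (o.harms_human, o.disobeys_order, o.harms_self)


def choose(candidates: list[Outcome]) -> Outcome:
    # Tuples compare lexicographically: the Second Law only breaks ties
    # the First Law leaves, and the Third only breaks ties the Second leaves.
    return min(candidates, key=law_violations)


obey = Outcome("push the person", harms_human=True, disobeys_order=False, harms_self=False)
refuse = Outcome("refuse", harms_human=False, disobeys_order=True, harms_self=False)
print(choose([obey, refuse]).name)  # refuse
```

The same ordering means a robot ordered into a fire would go, since the Second Law outranks the Third; the stories’ “malfunctions” tend to come from situations these neat flags cannot capture.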

Robots in the real world are not at a point where they can act autonomously, although the Pleos and other toys act as if they can. Most of the things we think of as robots are under some sort of human guidance or have an end goal dictated by humans. As a result, the ethics of the current breed of robots rest on the same motivations as the three laws of robotics: protect the owner, do what the owner tells you to do, and protect the investment in the robot. It is the zeroth law of robotics that would apply to the autonomous robot: protect humanity. Good luck getting that one to work.

An excellent work on autonomous robots can be found at: https://ethics.calpoly.edu/ONR_report.pdf

The bottom line is that autonomous robot ethics would follow the same rules as human ethics: a robot, like a human, would be required to deal with situations in the context of its environment and cultural expectations.