Solution for “nightly batch” and Transactional Replication

Customer asked: “We need to reload a table weekly\nightly, batch job takes few minutes, but Replication takes long time to move the batch changes to Subscriber, how can we speed this up”?

If Subscriber is mirror of Publisher, perhaps Replication is the wrong technology! A better solution may be to configure the database for AlwaysOn Availability Groups and setup Readable Secondary. Here too you may want to “re-seed” the Secondary Replica nightly following the batch changes to reduce the log queue send time.

Moving data using SQL Replication technologies falls into (1) Snapshot Replication is a good solution to reload the Subscriber with a fresh copy of the tables. (2) Transactional Replication keeps table refreshed but can be challenged when moving large batches through Replication topology.

If business needs require nightly\weekly refresh and keep Subscriber updated with ongoing changes throughout day, we’ll need to use a hybrid of Snapshot to reload the Subscriber and Transactional Replication to keep it refreshed. Let’s take a closer look and how this would come together.

A common scenario is to DELETEs, then INSERTs reloading a table published with Transactional Replication. If the table had 50 million rows, the published database transaction log grows (TRUNCATE not allowed on published table), Log Reader scans transaction log for the 50 million DELETEs, writes those to Distribution database. The application next executes 50 million INSERTs, again publication database transaction log grows, Log Reader reads 50 million INSERT commands and writes those to Distribution database. Now we have 100 million commands the Distribution Agent need read from Distribution database and write to the Subscribers. A few hours or days later the Distribution Cleanup job runs, purging these rows further adding to Distribution data IO stress. Do this nightly for many tables and your Agents may never catch up the backlog.

What to do?

First, verify the tables need to have updates transferred during the day, if not, move those table to nightly Snapshot Publication. Once data is reloaded, Snapshot Replication BCP out the rows to Replication Snapshot folder, next the Distribution Agent BCP IN rows into the Subscriber tables. Only entries recorded in the Distribution database are “Snapshot Ready” status messages alerting the Distribution agent to start the Subscriber load. None of the 50 million DELETE or INSERT are stored in the Distribution database as the data is moved using BCP.exe utility.

Second, explore using Stored Procedure Replication to push down the DELETE. This feature replicates the “execution of” not the “results of” the DELETE command. For our 50 million rows, Log Reader sends down a single call to our nightly DELETE stored procedures, “spNIGHTLY_DELETE_TABLES” instead of 50 million individual DELETE statements. Yes, the published database’s transaction log will still grow from the DELETE, INSERT logged operations, but the Log Reader only sends ½ the load (newly inserted rows), Distribution Agents only does ½ the work, Cleanup only needs to purge ½ the data.

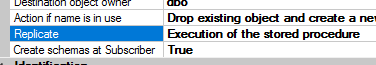

When adding stored procedure for “execution” change the Stored Procedure article default property “Replicate” to “Execution of the stored procedure”.

Executing sp_browsereplcmds on the Distribution database I see 1 row for the delete stored procedure call, not the 50 million deletes. Wow, what a savings!

{call "dbo"."spNIGHTLY_DELETE_TABLES" }

Using stored procedure execute, the published database transaction still grows and Replication Agents still move down the INSERTS for the 50 million rows reloaded into the table. Let’s go one step further and eliminate this workload too.

As the table is “published”, DELETE is not allowed. However, if plan to remove all rows and refresh subscriber, why not “unpublish” the table removing the “no truncate” restriction, then simply truncate, insert, then republish. This could be accomplished all using scripts call as part of the nightly batch processing. The 50 million DELETE don’t bloat the published database transaction log as TRUNCATE is executed and 50 million INSERT are not writing to the Distribution database as INSERT happened when table is not being published. When publication is recreated, Subscriber table reloaded using BCP OUT, BCP IN which is faster than pushing down individual DELETE\INSERTS via Distribution.

Here is high-level view of steps required.

- Drop Publication containing “nightly reload” tables

- Truncate Tables (minimal logged)

- Insert new data (not processed by Log Reader, table not yet published)

- Create Publication (immediate_sync=true)

- Add Subscription

- Start Snapshot Agent Job (sp_startpublication_snapshot — BCP out of new data)

- Start Distribution Agent (sp_startpushsubscription_agent — BCP in of new data, then picks up ongoing data changes)

Hope you find this helpful,

Chris Skorlinski

Microsoft SQL Server Escalation Services