Having performance issues with table variables? SQL Server 2012 SP2 can help!

In a previous blog, I talked about how table variable can impact performance of your query. The reason is that the statements referencing the table variable are compiled when the table variable has no data in it. Therefore, cardinality estimate for the table variable is always 1. If you always insert small number of rows into the table variable, it may not matter. But if you insert large number of rows into the table variable, the query plan generated (based on 1 row assumption) may not be that efficient.

As part of supportability improvement, SQL Server 2012 Service Pack 2 made an improvement. This improvement will help in situations where you have large number of rows inserted into a table variable which joins with other tables. A new trace flag 2453 is introduced to activate this improvement. When SQL Server detects enough rows inserted into the table variable, it will then recompile the subsequent statements referencing the table variable. SQL Server will detect row count of the table variable at the time the statement is recompiled and can produce a more efficient plan. "Enough rows" mentioned is determined by recompile threshold for temp tables in KB https://support.microsoft.com/kb/243586.

This behavior is documented in https://support.microsoft.com/kb/2952444 .

I want to emphasize that trace flag 2453 must be used in order to activate this feature. If you are on SP2 and experience slow performance on a query using table variable, you can give this trace flag a try to see if it helps.

Let's use the same demo which I used in the previous blog to demonstrate the behavior.

First, set up table

dbcc traceoff(2453,-1)

go

dbcc freeproccache

go

set statistics profile off

go

use tempdb

go

if OBJECT_ID ('t2') is not null

drop table t2

go

create table t2 (c2 int)

go

create index ix_t2 on t2(c2)

go

--insert 100,000 rows into the perm table

set nocount on

begin tran

declare @i int

set @i = 0

while @i < 100000

begin

insert into t2 values (@i)

set @i = @i + 1

end

commit tran

go

--update stats

update statistics t2

go

I'm going to use the same query below to show you the estimate difference.

set nocount on

declare @t1 table (c1 int)

begin tran

declare @i int

set @i = 0

while @i < 100000

begin

insert into @t1 values (@i)

set @i = @i + 1

end

commit tran

set statistics profile on

select * from @t1 inner join t2 on c1=c2

go

set statistics profile off

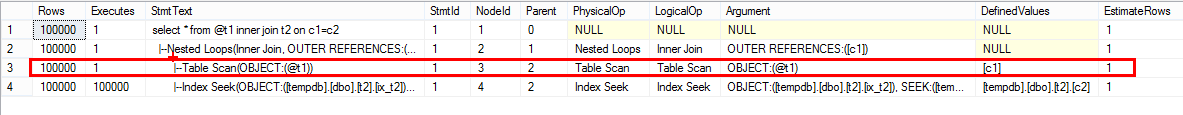

Without the trace flag (2453), the query uses nested loop and the table variable is estimated incorrectly with just one row.

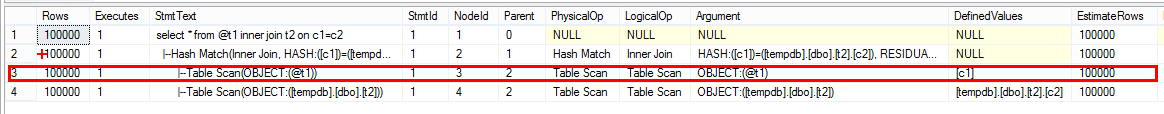

After I enabled the trace flag and flushed plan cache with the following commands, the plan was changed to hash match and the table variable is estimated correctly with 100000 rows.

dbcc freeproccache

go

dbcc traceon(2453,-1)

Jack Li

Senior Escalation Engineer | Microsoft SQL Server Support