How It Works: SQL Server (BCP, Database I/O, Backup/Restore, …) Reports Operating System Error (665, 1450 or 33) when writing to the file - BIG DATA

Suresh and I have blogged about these issues before but this post will put another spin on the information, as it applies to BIG DATA.

Previous Blog References

- https://blogs.msdn.com/b/psssql/archive/2008/07/10/sql-server-reports-operating-system-error-1450-or-1452-or-665-retries.aspx

- https://blogs.msdn.com/b/psssql/archive/2009/03/04/sparse-file-errors-1450-or-665-due-to-file-fragmentation-fixes-and-workarounds.aspx

- https://blogs.msdn.com/b/psssql/archive/2009/01/20/how-it-works-sql-server-sparse-files-dbcc-and-snapshot-databases-revisited.aspx

I ran into a 665 issue with a customer attempting to BCP data out of a database. The scenario was that it worked if one instance of BCP was running but if they started a second instance of BCP, at the same time, (using where clause to divide the table and queryout parameter) the BCP(s) would fail with the following error.

SQLState = S1000, NativeError = 0 Error = [Microsoft][SQL Server Native Client 10.0]I/O error while writing BCP data-file

After reproducing it in the lab and debugging it I found the problem to be the file system limitation (FILE SYSTEM LIMITATION = 665) during the WriteFile call. (I did file work items with the SQL Server development team to surface more error details so we don't have to troubleshoot with the debugger in the future.)

Tracking down the source of the problem it was the physical, disk cluster allocations and the way NTFS tracks allocations for an individual file. I highly recommend you read the following post, it describes the NTFS behavior really nicely: https://blogs.technet.com/b/askcore/archive/2009/10/16/the-four-stages-of-ntfs-file-growth.aspx

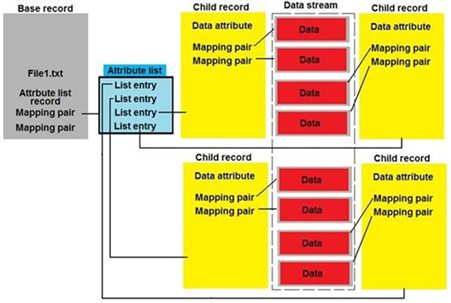

To summarize, when you are at the maximum allocation state for a single NTFS file you have a MTF allocation (1K) for the Attribute List Entries that points to ## of Child records (1K allocations) holding information about the starting physical cluster and how many contiguous clusters are allocated for that segment of the file. The more of these you have the more fragmented the file becomes.

A nice example I found was the following.

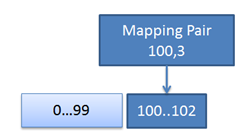

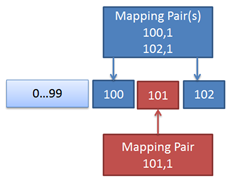

- Mapping Pair (Physical Cluster, ## of Clusters From Physical)

- The file segment starts at physical cluster 100 and used 8 clusters.

- The entry is 100, 8

The mapping pair information can be consolidated and compressed so it it not a simple division calculation of the MTF size / Mapping Pair it depends on the cluster locations, contagious cluster acquire capabilities and compression of the mapping pair (for example if cluster location can be stored in less than 8 bytes NTFS can compress the LARGE_INTEGER value.)

The cluster size and MTF sizes determine the maximum size an NTFS file can accommodate. The following articles highlight these options and limitations.

NTFS Cluster Size: https://support.microsoft.com/kb/140365

NTFS Size Limits: https://technet.microsoft.com/en-us/library/cc938432.aspx

| Best Case - Contiguous Allocations |  |

| Worst Case - Alternating Allocations between files |  |

In the BCP scenario the customer had determined that running 6 instances of BCP on this target system maximized the rows per second transferred. However, the more instances of BCP they enabled the faster they encountered the file system limitation. As you can see the more allocations taking place on the same disk/LUN raises the fragmentation level chances and internally puts pressure in the mapping pair space.

Making it a bit more pronounced is that BCP uses a 4K buffer for its writes. It fills the buffer, writes it and repeats. When on a system that uses 4K clusters this aligns well but it allows 4K osculation of clusters between multiple copies to the same physical media.

Here are a few suggestions for handling Big Data.

| Apply QFE (Requires Format) |

|

| Avoid NTFS Compression |

|

| Use 64K Clusters |

|

| Defragmentation |

|

| BCP |

|

| Backup |

|

| MDF/NDF/LDFs | It is unlikely to encounter on database files but for completeness I have included some aspects of database files.

|

Using Contig.exe from sysinternals (https://technet.microsoft.com/en-us/sysinternals/bb897428.aspx) and the -a parameter you can view the number of fragments used by a given file.

Error References

- 33 - The process cannot access the file because another process has locked a portion of the file. Note: This usually occurs when NTFS compression is enable on the target.

- 665 - The requested operation could not be completed due to a file system limitation

- 1450 - Insufficient system resources exist to complete the requested service

Bob Dorr - Principal SQL Server Escalation Engineer