SQL Server: Clarifying The NUMA Configuration Information

The increased number of cores per socket is driving NUMA designs and in SQL Server support we are seeing more NUMA machines and less pure SMP machines. For whatever reason over the past 2 weeks I have fielded a bunch of questions around NUMA and the information is good for everyone to know so I will try to share it here.

There are various levels of NUMA configurations that can make the this entire discussion complicated. I will try to point some of these things out here as well.

How is the Operating System Presenting NUMA Configuration To SQL Server?

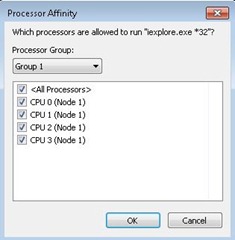

Start with the Windows Task Manager | Process Tab.

Select a process, Right Mouse | Set Affinity -- the following dialog is presented showing you the Processor Groups (K-Group), Nodes and CPUs on the machine.

This is the layout presented to SQL Server.

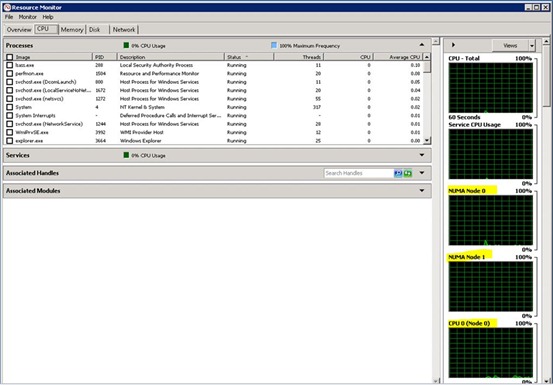

Windows Resource Monitor | CPU Tab shows NUMA information as well.

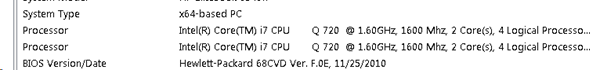

MSINFO32

Information presented in MSINFO does not contain NUMA associations. Here is an example from my 2 socket system with only a single memory node.

Here is an example of my 2 socket system, single memory node but configured using bcdedit /groupsize to create 2 logical groups on my system for testing. The MSINFO32 output looks the same and you can't tell NUMA information from it so don't rely on it for NUMA configuration information.

Issue: The problem with all the previous Windows utilities is that it might not be showing the physical layout presented by the hardware. You may have to go to the BIOS or use the CPUID instruction(s) to determine the physical layout.

Windows does allow configuration of /groupsize for logical testing (https://msdn.microsoft.com/en-us/library/ff564483(v=VS.85).aspx using BCDEDIT or manual establishment in the registry https://support.microsoft.com/kb/2506384. However, it is rare to see these in use on a production system.

API Reference(s)

- GetNumaHighestNodeNumber

- GetNumaNodeProcessorMask

- GetNumaAvailableMemoryNode

SQL Server's View

When SQL Server starts up is outputs the NUMA information in the error log detailing its view of NUMA and provides DMV outputs to show the information as well.

Here is the output from my 2 Processor Group system. A NUMA node can't span a processor group so I am assured to have 2 NUMA nodes with CPUs associated with each node.

2011-11-09 12:38:01.38 Server Node configuration: node 0: CPU mask: 0x000000000000000f:1 Active CPU mask: 0x000000000000000f:1.

2011-11-09 12:38:01.38 Server Node configuration: node 1: CPU mask: 0x000000000000000f:0 Active CPU mask: 0x000000000000000f:0.

The mask for Node 0 shows 0xf which is 4 CPUs associated with the node. This is correct as it is a 2 socket, 2 core, HT aware system (8 total) 4 assigned to each node. The :1 is the processor group the node is associated with.

Notice that Node 0 is assigned to group 1 on my system. This is a bit different if you look at the low level details. SQL Server swaps its tracking of node 0 to play better with the overall system. Since the system usually allocates things on NUMA node 0 during boot, SQL Server tries to move its node 0 to a subsequent node to avoid contention points. Thus, in my case I see the groups appear to be swapped but that was really just a node swap and instead of SQL Server node 0 being associated with group 0 it is associated with group 1.

SQL Server distinctly tracks memory nodes and scheduler nodes and these don't have to be the same. (See SOFT NUMA for more details).

The memory nodes are aligned, and stay aligned, with the memory locality per CPU presented by the operating system. You can see the memory nodes presented to SQL Server using the following DMV query.

select * from sys.dm_os_memory_nodes

You case the scheduler alignments to the scheduling nodes using the following DMV query.

select * from sys.dm_os_schedulers

I point this out because when you use SQL Server SOFT NUMA configuration it does not change the memory node tracking of SQL Server only the scheduler node behaviors.

I have a non-NUMA system and SQL Server Still Shows NUMA - why?

Answer: Expected - One of the most common questions I get and here is why.

SQL Server (SQL OS) is designed to work with groups, nodes and CPUs. To create an ONLINE scheduler for SQL Server it has to be associated with a scheduling node structure so even on a system that is not-NUMA SQL Server will track the schedulers under a single node. It is just design but it can fool you a bit and some of the wording choices add to the confusion as well.

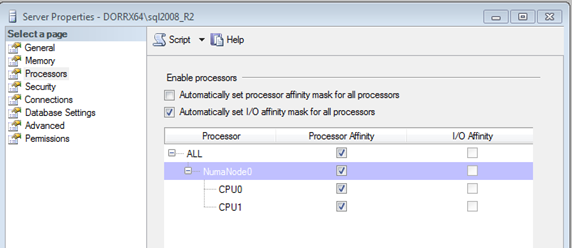

Here is an example from SQL Server Management Studio (SSMS) from my single socket, dual core system. Notice that 'NumaNode0' is shown. It is just a poor choice of wording and is exposing the same node 0 that SQL Server is using when you look at the DMVs for tracking the CPUs on the system within the scheduling node. Everything is technically correct that we have a single (1) memory bank associated with all the CPUs on the system.

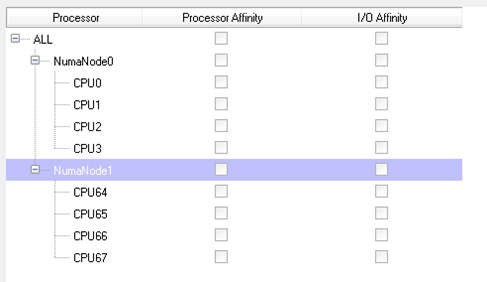

Here is the same SSMS view of my 2 socket, 4 core, 2 memory node system.

Helpful Terms

Group Processor Group |

Windows 2008 R2 added the ability to address more than 64 CPUs, called processor groups. (K-Group = Kernel Group) · NUMA node can’t span a K-Group · K-Group is limited to 64 max CPUs

|

NUMA Node |

Hardware concept that associates CPUs with a specific set of memory resources. |

CPU |

A unit that can process instructions. SQL sees everything as a logical CPU (core, hyper-thread, …) for most operations. |

Node Swap |

SQL assumes hardware NODE=0 is heavier used by the system so it will often swap NODE=0 with NODE=1 allowing SQL to initialize on the NODE=1. -T8025 can be used to avoid the node swapping behavior

|

Soft NUMA |

A SQL specific configuration allowing nodes to be divided. This can be used to target lazywriter, connectivity and some very specific configurations. Soft NUMA is ONLY FOR Scheduling and Connectivity – Memory locality is not impacted. |

Connection Affinity |

SQL allows given ports to be bound to a specific NODE or NODE(s) depending on application need.

|

Round-Robin |

New connections are assigned to nodes that the PORT is bound to by round-robin assignment and then weight of scheduler within each node. |

ONLINE and OFFLINE Schedulers |

A scheduler is ONLINE when its affinity mask is enabled. Let’s say you have a system with 4 CPUs and the affinity mask is 1. You would have 3 offline schedulers (2,3, and 4) and 1 online scheduler (1). SQL Server can create and park the offline schedulers so you can dynamically configure the affinity mask and the schedulers are brought online. If a scheduler is online and you change the affinity mask to make it offline a few things happen. 1. The work currently assigned to the scheduler is allowed to complete 2. No new work is accepted on the scheduler 3. The affinity mask of the threads on the scheduler being taken offline is changed to the other viable schedulers for the instance. This is important because the work is not continuing on the original CPU the affinity was set for but it is sharing the other ONLINE schedulers. So if you have a large process on the original scheduler it can impact time on the shared CPUs until it is complete. |

Memory Divided Equally |

Memory Per Node = Max Memory Setting / ONLINE NUMA Nodes A NODE is considered ONLINE as long as one of the schedulers on the node is ONLINE. All memory for the max memory scheduler is divided amount the ONLINE nodes. What you don’t want is a situation where you OFFLINE schedulers or configure them strangely. For example Node 1 – 4 Schedulers, Node 2 – 2 schedulers. You would have 4 CPUs using ½ the memory and 2 CPUs using the other ½ the memory. |

Trace Flag 8002 |

The trace flag is used to treat the affinity mask as a group setting. Usually the affinity mask sets the threads on the scheduler to ONLY use one CPU for the matching affinity bit. The trace flag tells SQL OS to treat the mask as a group (process affinity like). Group the bits for the same node toghether and allow any scheduler ONLINE for that node to use any of the CPUs that match the bits. Let’s say you had the following affinity for NODE 0 on the system. 0011 - Use CPU 1 and CPU 2 Without trace flag you would get a scheduler for CPU 1 and a scheduler for CPU 2. The workers on scheduler 1 could only use CPU 1 and the workers on scheduler 2 could only use CPU 2. With the trace flag you get the same scheduler layout but the thread on scheduler 1 and scheduler 2 would set their affinity mask to 11 so they could run on either CPU 1 or CPU 2. This allows you to configure an instance of SQL to use a specific set of CPUs but not lock each scheduler into their respective CPUs, allowing Windows to move the threads on a per CPU resource use need. |

Low End NUMA |

On some lower end hardware we used to get reported that each CPU has its own NUMA node. This was usually incorrect and when we detected only a single CPU per NODE we would assume NO NUMA. Trace flag 8021 disables this override

|

ALTER SERVER |

Added for SQL 2008 R2 to replace the sp_configure affinity mask settings. Once we can support more than 64 CPUs the sp_configure values are not enough to hold the extended affinity mask. Helpful Query: SELECT inf.affinity_type AS [AffinityType], nd.node_state_desc AS [NodeStateDesc], mnd.memory_node_id AS [ID], nd.processor_group AS [GroupID], nd.cpu_affinity_mask AS [CpuIds], nd.online_scheduler_mask AS [CpuAffinityMask] FROM sys.dm_os_memory_nodes AS mnd INNER JOIN sys.dm_os_sys_info AS inf ON 1=1 INNER JOIN (Select SUM (cpu_affinity_mask) as cpu_affinity_mask, SUM(online_scheduler_mask) as online_scheduler_mask, processor_group, node_state_desc, memory_node_id from sys.dm_os_nodes group by memory_node_id, node_state_desc, processor_group) AS nd ON nd.memory_node_id = mnd.memory_node_id ORDER BY ID ASC |

References

- https://blogs.msdn.com/b/psssql/archive/2010/02/23/how-it-works-sql-server-2008-numa-and-foreign-pages.aspx

- https://blogs.msdn.com/b/psssql/archive/2010/03/24/how-it-works-bob-dorr-s-sql-server-i-o-presentation.aspx

- https://blogs.msdn.com/b/psssql/archive/2008/01/24/how-it-works-sql-server-2005-numa-basics.aspx

- https://blogs.msdn.com/b/psssql/archive/2010/04/02/how-it-works-soft-numa-i-o-completion-thread-lazy-writer-workers-and-memory-nodes.aspx