Case of using filtered statistics

Technorati Tags: Performance

SQL Server 2008 introduces a new feature called filtered statistics. When used properly, it can dramatically improve cardinality estimate. Let’s use an example below to illustrate how cardinality estimate can be incorrect and how filtered statistics can improve this situation.

We have two tables. Region has only 2 rows. Sales table have 1001 rows but only 1 row has id of 0. The rest of it have id of 1’s.

Table Region

| id | name |

| 0 | Dallas |

| 1 | New York |

Table Sales

| id | detail |

| 0 | 0 |

| 1 | 1 |

| 1 | 2 |

| 1 | 3 |

| 1 | 4 |

| 1 | 5 |

| 1 | 6 |

| 1 | 7 |

| 1 | 8 |

| 1 | 9 |

| 1 | 10 |

| 1 | 11 |

| 1 | 12 |

| 1 | 13 |

| 1 | 14 |

| 1 | 15 |

| 1 | 16 |

| … | … |

| 1 | 1000 |

Now let’s look at query “select detail from Region join Sales on Region.id = Sales.id where name='Dallas'”. From human eye perspective, we immediately know that only one row would qualify. If we look at Dallas there is only one row in Region table and one row in Sales table. But from optimizer perspective, it does not know that when the query is compiled and before query is executed. In order to know that, basically SQL would have to execute the query half way and filter out values for Dallas and take the id of 0 and then evaluate how many rows are there in table Sales. In other words, it would require incremental execution.

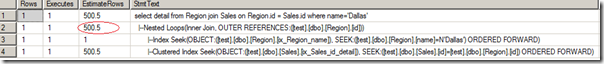

If you execute the query, you will get a plan like this. Note that the nested loop estimated 500.5 rows but only 1 row actually was retrieved.

Now let’s see what happens if we create a statistics on Region.id but put a filter on name (“Dallas”). Here is the statement “create statistics Region_stats_id on Region (id) where name = 'Dallas'”.

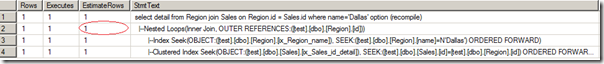

Now if you execute the same select statement (select detail from Region join Sales on Region.id = Sales.id where name='Dallas'), the cardinality estimate is correct as shown below for the nested loop join.

What happened here is the filtered statistics (create statistics Region_stats_id on Region (id) where name = 'Dallas') is used for optimization. When SQL optimizes the query, it sees there is a statistics that matches the where clause. It then discovers there is only 1 id of 0 and thus is able to do a correct estimate.

Correct cardinality estimate is very import for complex joins as it affects join order and join types dramatically.

Here is a complete demo:

drop table Region

go

drop table Sales

go

create table Region(id int, name nvarchar(100))

go

create table Sales(id int, detail int)

go

create clustered index d1 on Region(id)

go

create index ix_Region_name on Region(name)

go

create statistics ix_Region_id_name on Region(id, name)

go

create clustered index ix_Sales_id_detail on Sales(id, detail)

go

-- only two values in this table as lookup or dim table

insert Region values(0, 'Dallas')

insert Region values(1, 'New York')

go

set nocount on

-- Sales is skewed

insert Sales values(0, 0)

declare @i int

set @i = 1

while @i <= 1000 begin

insert Sales values (1, @i)

set @i = @i + 1

end

go

update statistics Region with fullscan

update statistics Sales with fullscan

go

set statistics profile on

go

--note that this query will over estimate

-- it estimate there will be 500.5 rows

select detail from Region join Sales on Region.id = Sales.id where name='Dallas' option (recompile)

--this query will under estimate

-- this query will also estimate 500.5 rows in fact 1000 rows returned

select detail from Region join Sales on Region.id = Sales.id where name='New York' option (recompile)

go

set statistics profile off

go

create statistics Region_stats_id on Region (id)

where name = 'Dallas'

go

create statistics Region_stats_id2 on Region (id)

where name = 'New York'

go

set statistics profile on

go

--now the estimate becomes accurate (1 row) because

select detail from Region join Sales on Region.id = Sales.id where name='Dallas' option (recompile)

--the estimate becomes accurate (1000 rows) because stats Region_stats_id2 is used to evaluate

select detail from Region join Sales on Region.id = Sales.id where name='New York' option (recompile)

go

set statistics profile off