How to Configure a Windows Azure Virtual Machine File Server and use it from within Windows HPC Server Compute Jobs

Recall the following axiom from your CS101 class:

“To solve a problem, create abstractions. To attain efficiency, remove abstractions.”

Such is the case with I/O in the Cloud (i.e. in all Clouds). Efficiency requires designing for the underlying platform, with specific consideration of storage account quotas, network constraints, and partitioning strategies across available resources. Regarding storage, the article published here is a good place to start understanding its capabilities and constraints.

Regardless of the final solution design, it is often advantageous to first get a prototype deployed with minimal configuration and code changes. Such is the promise of Infrastructure as a Service (IaaS). For Windows HPC Server solutions in particular, adding an SMB File Server to your Windows Azure service makes Cloud integration easy. If your objective is to build an evaluation prototype, the scenario described here may either fully serve your file I/O requirements or simply provide an operational envelope for testing. For illustration purposes, I’ll keep the scenario as simple as possible. However, several deployment configuration options would dramatically improve storage I/O performance without requiring modifications to your existing solution. I hope to document these advanced configuration options in subsequent posts.

First, a look at what we will achieve here:

- Configure a file server (SMB protocol, port 445) with a persistent name (e.g. “myfileserver.cloudapp.net”).

- Create a file share (e.g. “\\myfileserver\myshare”) accessible from other Windows Azure worker-role VMs (e.g. compute nodes) with user authentication (we will use workstation-mode NTLM rather than domain authentication).

- Ensure that the file share is backed by blob storage.

- Execute a compute job wherein the working directory path resolves to a UNC path (e.g. “\\myfileserver\myshare”).

Note the following non-objectives (perhaps to be documented in subsequent posts or even superseded by future platform features).

- Load-balance across multiple SMB file servers.

- Optimize I/O (via partitioning) across multiple blob storage accounts.

- Distribute the file structure namespace across multiple file shares (e.g. using mklink from the client perspective).

- Leverage mountable read-only VHD files in order to gain wider distribution and staging of read-only data.

- Utilize alternative file distribution utilities (e.g. ClusterCopyConsole) to stage job input data.

- Address connectivity between on-premises resources and the file server VM (e.g. using Windows Azure Connect or Virtual Network).

- Securely configure the service and file server VM instance.

In effect, for illustration purposes, our VM-hosted file server will be a single point of I/O for any number of compute-node instances.

Configure the Virtual Machine File Server

Utilize the following procedure to configure a Windows Azure Virtual Machine (VM) instance for use as a File Server.

- Create the File Server VM instance (e.g. “MyFileServer.cloudapp.net”) using the Windows Azure portal. See reference instructions here.

- Attach a blob-storage-hosted data disk to the VM and format it.

- Once the File Server instance is running, configure a limited-privilege user account (e.g. “MyUser”). Note that this account is a primary security mechanism for the server instance, since any other VM within the data-center could access your server or cause a denial of service. More advanced server security configuration is beyond the scope of this article.

- Using the Server Manager Console, enable the File Server Role.

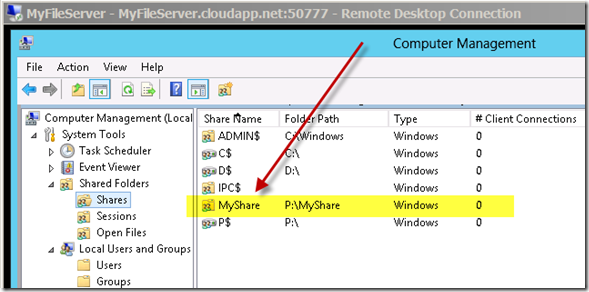

- Create a File Share folder (e.g. “MyShare”) on the attached data-disk and enable read/write access for the MyUser account.

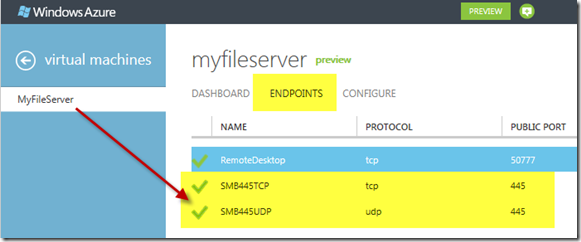

- Add two endpoints to the VM, one for TCP port 445 and one for UDP port 445. This enables the server (at the service deployment level) to communicate over the SMB protocol with worker roles hosted in the same Windows Azure data-center. The SMB protocol is not currently routed externally from the data-center.

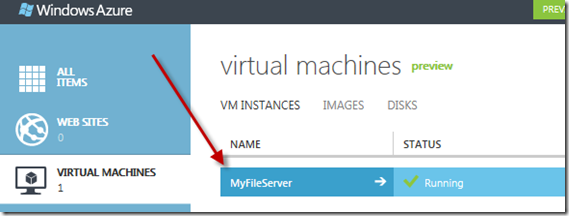
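The user-account and share steps above are performed through the GUI. As a scriptable alternative, the following Python sketch builds roughly equivalent command lines for the standard Windows `net user`, `net share`, and `icacls` tools. The helper name `file_server_setup_commands`, the drive letter `F:`, and the example password are hypothetical. The sketch also assumes the share folder already exists on the attached data disk and that the commands would run from an elevated prompt on the file server VM.

```python
# Sketch: command lines mirroring the GUI steps on the file server VM.
# Assumptions (hypothetical): the data disk is mounted as F: and the share
# folder already exists on it.


def file_server_setup_commands(user: str, password: str, share: str,
                               folder: str) -> list[list[str]]:
    """Return argument lists that create the local account and the share."""
    return [
        # Create a local (workstation-mode) account; /add makes a standard user.
        ["net", "user", user, password, "/add"],
        # Publish the folder as an SMB share; CHANGE grants read/write at share level.
        ["net", "share", f"{share}={folder}", f"/GRANT:{user},CHANGE"],
        # Grant NTFS Modify rights, inherited by files (OI) and subfolders (CI).
        ["icacls", folder, "/grant", f"{user}:(OI)(CI)M"],
    ]


if __name__ == "__main__":
    # Print the commands rather than running them. On the VM, each list could be
    # passed to subprocess.run(cmd, check=True) from an elevated session.
    for cmd in file_server_setup_commands("MyUser", "example-password",
                                          "MyShare", r"F:\MyShare"):
        print(" ".join(cmd))
```

The sketch sets both share-level and NTFS permissions because Windows applies the more restrictive of the two to SMB access.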

Your File Server configuration should appear as follows from the management portal.

The corresponding service endpoints should be configured as illustrated.

The file share configuration should look similar to the following image.
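After the endpoints are in place, it can help to confirm from a compute node that the file server accepts connections on TCP port 445 before you troubleshoot authentication. The following Python sketch uses a hypothetical helper, `smb_port_open`. It only tests whether a TCP connection can be opened. It does not speak SMB or check credentials.

```python
import socket


def smb_port_open(host: str, port: int = 445, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    This checks reachability only: name resolution, endpoint/firewall
    configuration, and whether something is listening on the port.
    """
    try:
        # create_connection resolves the name and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refused connections, and timeouts.
        return False
```

For example, running `smb_port_open("myfileserver")` on a compute node should return `True` once the node can resolve and reach the server. A `False` result points to name resolution, endpoint, or firewall configuration rather than to the user account.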

Configure the Compute Node Templates

You may wish to familiarize yourself with the “burst to azure” capability of Windows HPC Server 2008 R2. Also, a previous article discusses how to utilize start-up scripts in connection with “Windows Azure Node Templates”. You can utilize a start-up script to configure your worker-role compute-nodes so that they can “see” \\MyFileServer\MyShare. Let’s illustrate how that’s accomplished…

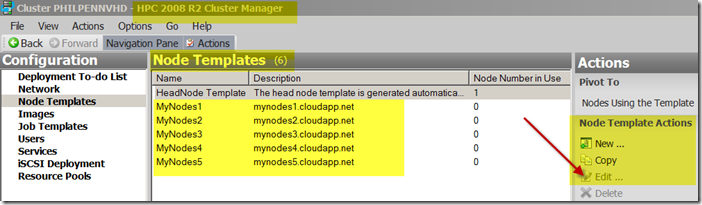

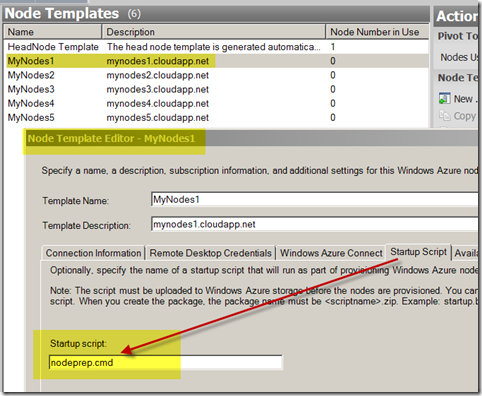

The following image illustrates a list of Compute Node Templates within the HPC Server Cluster Manager console.

Node Templates enable partitioning of node-groups across multiple Windows Azure service deployments. Each service may contain a large number of compute nodes all managed from a single cluster head-node. Refer to the links immediately above for detailed information on how to configure a cluster to utilize Windows Azure worker role VMs as compute nodes. For our immediate purpose, we only need to modify the startup script (i.e. nodeprep.cmd) associated with each node template.

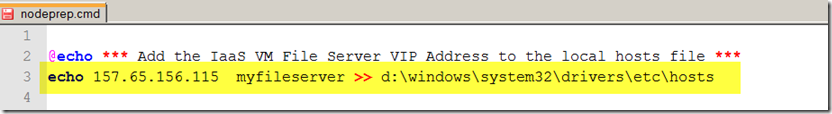

Add a command line to the nodeprep.cmd file that uses echo to append an entry for the file server to the hosts file (%SystemRoot%\System32\drivers\etc\hosts) on each compute node. This enables subsequent UNC host name resolution (e.g. \\myfileserver). Obtain the VIP address of myfileserver.cloudapp.net from the Windows Azure management portal, or resolve it with nslookup myfileserver.cloudapp.net.
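The echo step in nodeprep.cmd can be sketched in Python if you prefer a script you can test. This version uses a hypothetical helper, `ensure_hosts_entry`, and the example VIP `203.0.113.10`, which is a documentation-only address. Unlike a plain `echo ... >>`, which appends on every run, it skips the append when an equivalent entry already exists. This avoids accumulating duplicate lines if the startup script runs more than once.

```python
import ipaddress
from pathlib import Path


def ensure_hosts_entry(hosts_file: Path, vip: str, hostname: str) -> bool:
    """Append 'vip hostname' to hosts_file unless an equivalent entry exists.

    Returns True if a line was appended, False if it was already present.
    """
    ipaddress.ip_address(vip)  # raises ValueError for a malformed address
    text = hosts_file.read_text(encoding="utf-8") if hosts_file.exists() else ""
    for line in text.splitlines():
        # Drop trailing comments, then split into address and host names.
        fields = line.split("#", 1)[0].split()
        # Host names in a hosts file are matched case-insensitively.
        if fields and fields[0] == vip and hostname.lower() in (
                name.lower() for name in fields[1:]):
            return False
    # Start on a fresh line if the file does not already end with a newline.
    prefix = "" if not text or text.endswith("\n") else "\n"
    with hosts_file.open("a", encoding="utf-8") as f:
        f.write(f"{prefix}{vip} {hostname}\n")
    return True
```

On a compute node, `hosts_file` would be `Path(os.environ["SystemRoot"]) / "System32" / "drivers" / "etc" / "hosts"`, and writing to it requires administrative rights.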

Configure Your Job to Use the File Server and Share

When you submit jobs using the HPC Server Scheduler, those jobs run within a user-logon context. With on-premises compute nodes, the scheduler uses domain account credentials. When the target compute-node group for your job is the AzureNodes group, a temporary local user logon is created for your job instead. You can verify this by submitting a job that runs the “whoami” command and viewing the resulting output.

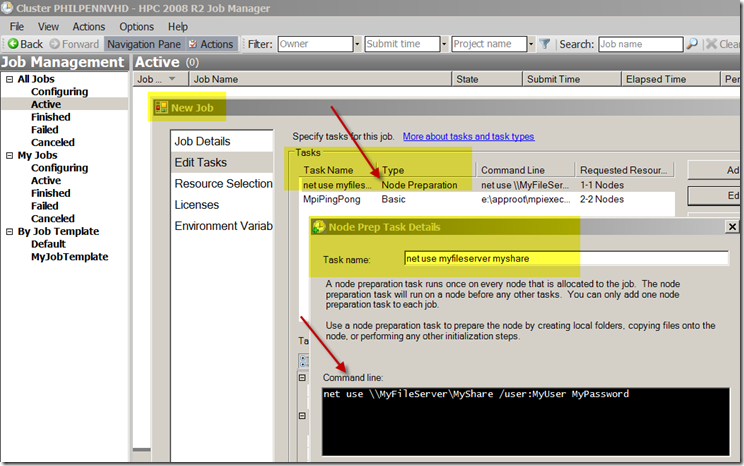

Consequently, your job runs within a context in which any secured external resource (e.g. a file share) requires authenticated user access. You can supply these credentials by adding a Node Preparation Task to your job, as illustrated. This task runs once on each node and precedes execution of any other task within your job.

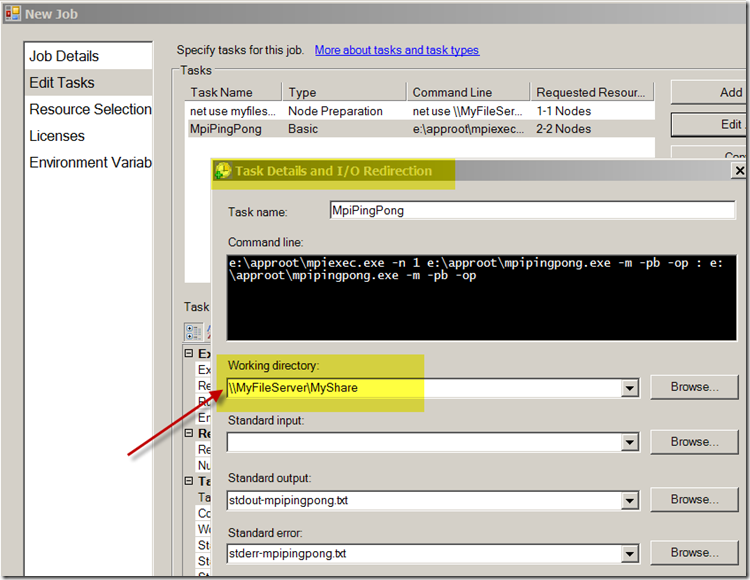
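A Node Preparation Task like this one typically establishes the credentials with the standard Windows `net use` command. The following Python sketch builds such a command line using a hypothetical helper, `net_use_command`. The exact command shown in the illustration may differ. Qualifying the user name with the server name (`myfileserver\MyUser`) makes Windows authenticate against the file server's local account, which matches the workstation-mode NTLM approach used here.

```python
def net_use_command(server: str, share: str, user: str, password: str) -> list[str]:
    """Build a 'net use' argument list that connects to \\\\server\\share.

    The user is qualified as server\\user so that Windows authenticates
    against the file server's local (workstation-mode) account.
    """
    unc = f"\\\\{server}\\{share}"  # e.g. \\myfileserver\myshare
    # /persistent:no keeps the connection from being remembered across logons.
    return ["net", "use", unc, password, f"/user:{server}\\{user}",
            "/persistent:no"]
```

For instance, `" ".join(net_use_command("myfileserver", "myshare", "MyUser", "secret"))` yields `net use \\myfileserver\myshare secret /user:myfileserver\MyUser /persistent:no`, which could serve as the Node Preparation Task's command line. A password embedded in a task command line may be visible to anyone who can view the job, so treat this approach as suitable for prototypes only.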

Subsequent tasks within your job then have access to the file server resources. For example, you can set the Working Directory to the file share for task input or output.
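If several jobs use the same file share, giving each job its own subdirectory keeps their inputs and outputs apart. The following Python sketch builds such a UNC working directory. The helper name `unc_working_dir` and the per-job subfolder layout are hypothetical. The sketch relies on the standard-library `ntpath` module, which applies Windows path rules on any platform.

```python
import ntpath


def unc_working_dir(server: str, share: str, *parts: str) -> str:
    """Join path components under \\\\server\\share using Windows path rules."""
    root = f"\\\\{server}\\{share}"  # the UNC "drive", e.g. \\myfileserver\myshare
    return ntpath.join(root, *parts)
```

For example, `unc_working_dir("myfileserver", "myshare", "job42")` gives `\\myfileserver\myshare\job42`, which could be entered as a task's Working Directory. That directory should already exist and be writable by MyUser before the task starts.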

References

Learn about Windows Azure Disks, Drives, and Images.

Learn about Windows Azure Virtual Machines.