IOT for mere mortals, Part I

You must have heard the buzz about IOT. Internet of Things that is.

It is supposed to fix the European economy and cure cancer. The industry heavies are waiting for IOT to solve all our problems ...

Well, in the meantime us regular dudes can actually get our feet wet in IOT without spending huge amounts of time or cash by utilizing ... you guessed it ... the good old PaaS approach.

So today we are going to build an infinitely scalable input point and send in some random temperature readings.

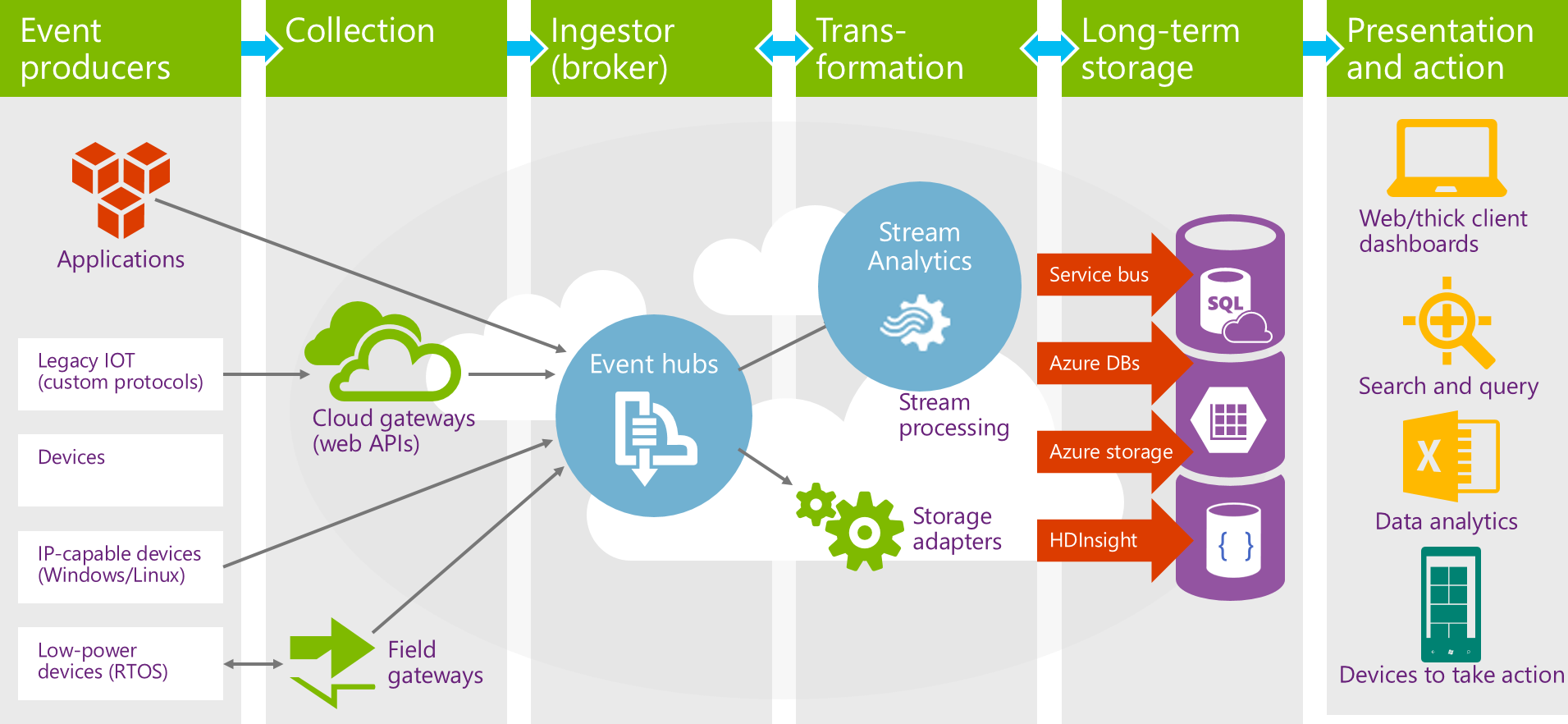

Here's a picture of the overall architecture of a generic IOT solution running on Azure's PaaS offering.

We have our gadgets that produce events, optionally some gateways to aggregate messages or transform protocols, Event Hubs to catch all the traffic, then some more or less real-time analysis and storage.

So what's new? ... Nothing really.

Other than the fact that now we can do this in an afternoon without building a costly infrastructure first. We do this by utilizing PaaS components, and by doing so we get the benefit of world-class scalability and security by design. But looking at this purely from a functional standpoint ... it's all stuff that we have been doing for a long ... long time.

Enough talk, let's actually do something...

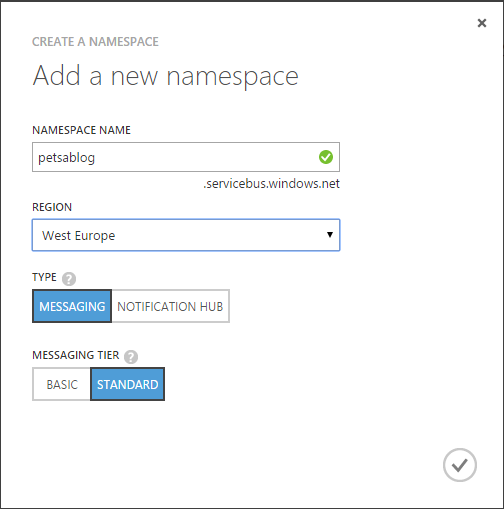

- Create a Service Bus namespace to contain our Event Hub

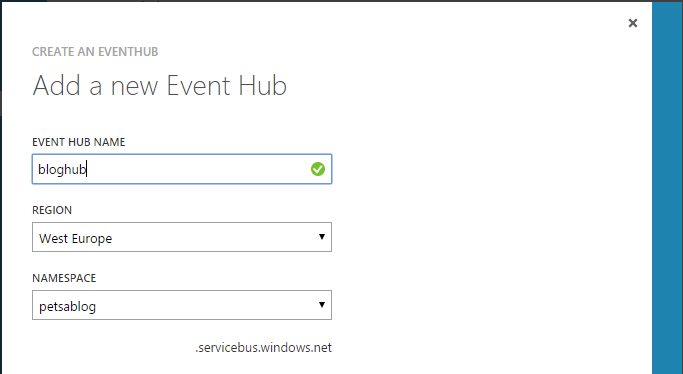

- Create an Event Hub to catch the messages from the field

- Build a small Simulator to send in events

- Create a Stream Processing Job to do some mild analysis

- Store the data for Part II where we will try to do some reporting and analysis

Namespace

The namespace is a manageable unit that can house several different services like Queues, Topics and Event Hubs, the last of which we will be using today. One interesting thing about the namespace is that it controls the scaling of the services it contains.

The namespace does this via Throughput Units (TUs). One TU can take in roughly 1MB per second. Given a 12-hour day that makes a bit over 40GB per day. Not bad.

TUs are also a billable resource. One TU in standard mode costs about 0.0224€ per hour, which totals about 17€ per month. So cost isn't the thing keeping your company from testing IOT.
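These back-of-the-envelope numbers are easy to recompute. Here is a minimal sketch that assumes only the figures quoted in this article (1MB per second per TU, 0.0224€ per TU-hour) plus a 30-day month; the function names are made up for illustration, and current Azure pricing may well differ.

```python
# Figures quoted in the article; check current pricing before relying on them.
MB_PER_SECOND_PER_TU = 1.0
EUR_PER_TU_HOUR = 0.0224


def daily_ingress_gb(throughput_units: int, hours_per_day: float = 12) -> float:
    """Upper bound on data taken in per day, in GB (counting 1 GB as 1000 MB)."""
    seconds = hours_per_day * 3600
    return throughput_units * MB_PER_SECOND_PER_TU * seconds / 1000


def monthly_cost_eur(throughput_units: int, days: int = 30) -> float:
    """Cost of keeping the TUs provisioned around the clock (assumed 30-day month)."""
    return throughput_units * EUR_PER_TU_HOUR * 24 * days


if __name__ == "__main__":
    for tus in (1, 20):
        print(f"{tus:>2} TU: {daily_ingress_gb(tus):7.1f} GB/day, "
              f"{monthly_cost_eur(tus):7.2f} EUR/month")
```

Both numbers grow linearly with the number of TUs, so the same two functions also give a quick estimate for a fully provisioned namespace.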

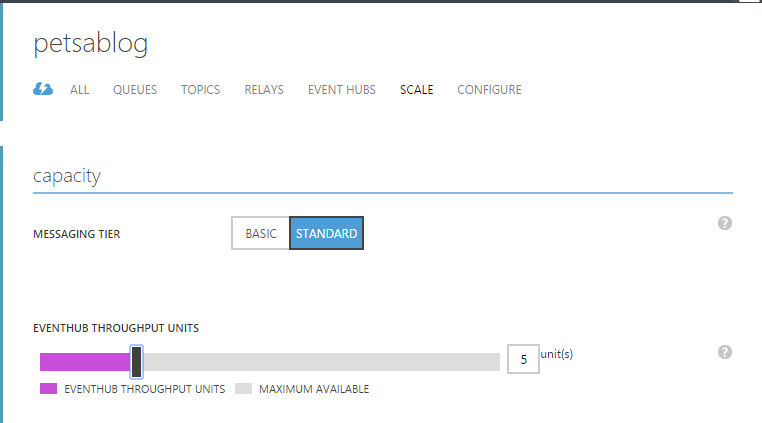

Pay attention to this! This is the setting that decides how scalable your solution's intake will be and how costly it will be to run. With this slider you can easily provision up to 20 TUs for your ingress.

Event Hub

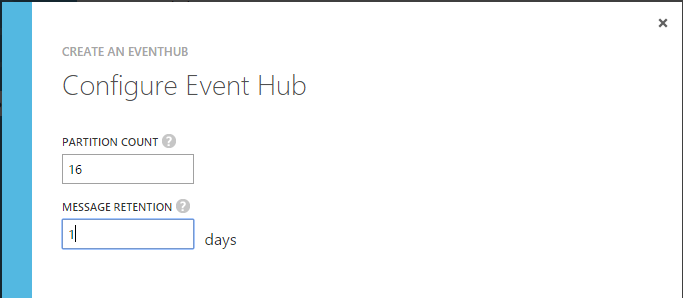

An Event Hub is the actual endpoint to which all the gadgets send their events. You can use partitions and partition keys in your data to split the data up; if you don't, the events are spread evenly across the partitions.
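To make the partitioning idea concrete, here is a small sketch. It is not the hashing that Event Hubs actually uses (that is internal to the service); `pick_partition`, the SHA-256 hash and the partition count of 4 are all assumptions for illustration only. It just shows the two behaviours: events with the same key always land in the same partition, and events without a key are spread round-robin.

```python
import hashlib
from itertools import count
from typing import Optional

PARTITION_COUNT = 4  # hypothetical; chosen when the Event Hub is created
_round_robin = count()


def pick_partition(partition_key: Optional[str] = None) -> int:
    """Illustrative only: map an event to a partition index."""
    if partition_key is None:
        # No key: spread events evenly, one partition after another.
        return next(_round_robin) % PARTITION_COUNT
    # With a key: hash it, so the same key always maps to the same partition.
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % PARTITION_COUNT


if __name__ == "__main__":
    for key in ("device-1", "device-2", "device-1", None, None):
        print(key, "->", pick_partition(key))
```

Using something like the device id as the key keeps each gadget's events together in one partition, which is handy when their order matters.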

Remember to configure a Shared Access Policy for your Event Hub or the Job will not be able to find it later. I created a policy called "manage" and ticked all the access boxes.

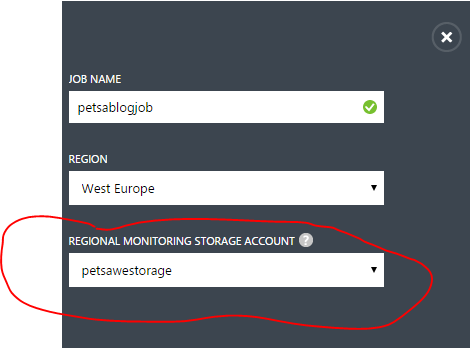

Stream Analytics Job

A Job is actually a very simple thing: it connects the ingress queue with long-term storage and performs some optional analysis on the fly.

The Job's monitoring storage account should reside in the same region as the Job itself, or you will be paying for the traffic.

A Job consists of three moving parts:

Input: Here you will reference your Event Hub.

Output: The long-term storage can be various things; I chose a SQL Database in the same region as the Job. Remember to enable access for other Azure services in the database's configuration, or you will spend some time wondering why the Job is not running.

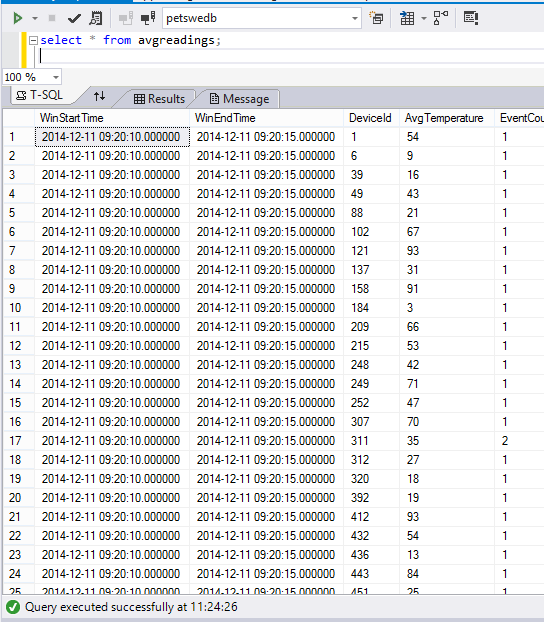

There needs to be a table in the SQL database that matches the structure that your "query" produces, and it needs to be named in the output's configuration, so here goes:

```sql
CREATE TABLE [dbo].[AvgReadings] (
    [WinStartTime]   DATETIME2 (6) NULL,
    [WinEndTime]     DATETIME2 (6) NULL,
    [DeviceId]       BIGINT        NULL,
    [AvgTemperature] FLOAT (53)    NULL,
    [EventCount]     BIGINT        NULL
);

CREATE CLUSTERED INDEX [AvgReadings] ON [dbo].[AvgReadings]([DeviceId] ASC);
```

Query: This is where the real-time analysis and the overall definition of the data structure happens. Basically you create a query, and whatever it produces will be the new data type that is stored in "output".

A simple query could look like this: "SELECT DeviceId, Temperature FROM input". This would just store the received values in the output table for later use.

If you want to do some aggregation or math with the data before it is written to storage, you can use some clever SQL: https://msdn.microsoft.com/en-us/library/azure/dn834998.aspx

In this case we will just average some values with a 5-second window:

```sql
SELECT
    DateAdd(second, -5, System.TimeStamp) AS WinStartTime,
    System.TimeStamp AS WinEndTime,
    DeviceId,
    Avg(Temperature) AS AvgTemperature,
    Count(*) AS EventCount
FROM input
GROUP BY TumblingWindow(second, 5), DeviceId
```

Note that "input" needs to match the alias you gave when defining your Job input!

Go to your Job's Dashboard and hit Start ... and our system should be ready to accept some input.
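If the tumbling window is hard to picture, the same aggregation can be sketched in a few lines of plain Python. This only illustrates what the query computes; it is not how Stream Analytics works internally. The function name and the sample readings are made up, and the sketch assumes that a window includes its end time and excludes its start time.

```python
import math
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def tumbling_window_avg(events, window_seconds=5):
    """Aggregate (timestamp, device_id, temperature) tuples per window and device.

    Returns rows shaped like the AvgReadings table:
    (win_start, win_end, device_id, avg_temperature, event_count).
    """
    buckets = defaultdict(list)
    for ts, device_id, temperature in events:
        # Assumption: a window includes its end time and excludes its start.
        end_epoch = math.ceil(ts.timestamp() / window_seconds) * window_seconds
        buckets[(end_epoch, device_id)].append(temperature)

    rows = []
    for (end_epoch, device_id), temps in sorted(buckets.items()):
        win_end = datetime.fromtimestamp(end_epoch, tz=timezone.utc)
        win_start = win_end - timedelta(seconds=window_seconds)
        rows.append((win_start, win_end, device_id,
                     sum(temps) / len(temps), len(temps)))
    return rows


if __name__ == "__main__":
    base = datetime(2015, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
    sample = [  # made-up readings
        (base + timedelta(seconds=1), 1, 20.0),
        (base + timedelta(seconds=4), 1, 22.0),
        (base + timedelta(seconds=6), 1, 30.0),
        (base + timedelta(seconds=2), 2, 18.0),
    ]
    for row in tumbling_window_avg(sample):
        print(row)
```

Each event falls into exactly one window, which is what separates a tumbling window from overlapping window types.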

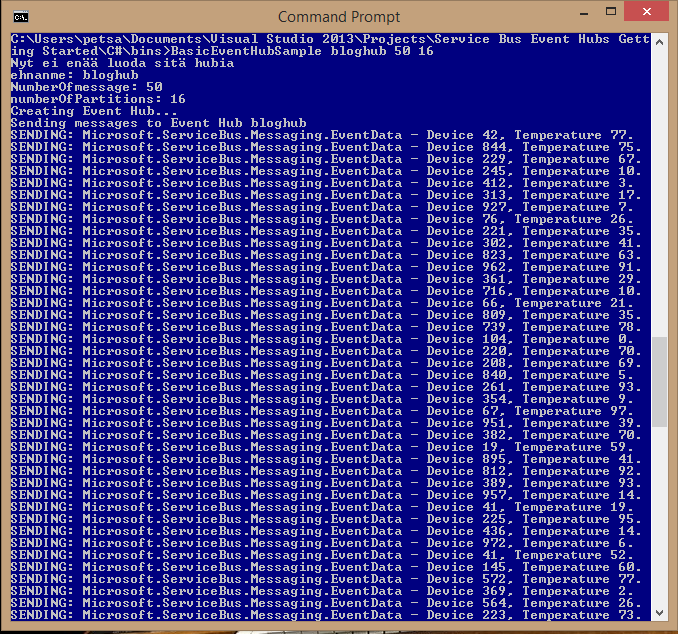

Creating some events

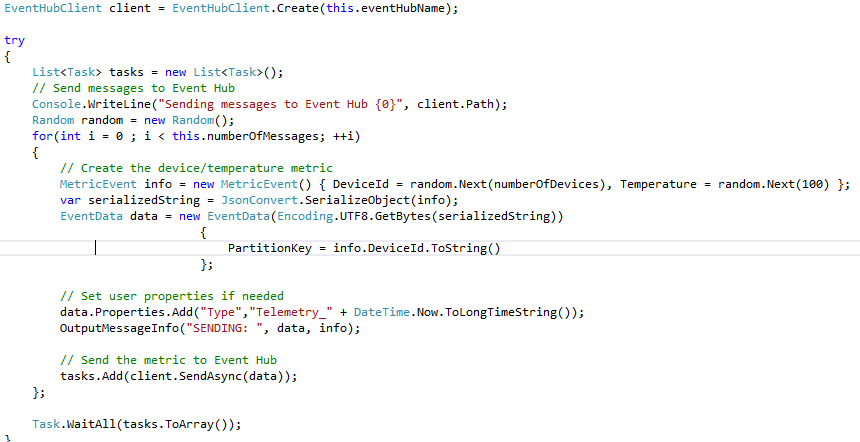

The most important things in our little Simulator client are:

- NamespaceManager to connect to our namespace, with credentials stored in app.config.

- EventHubClient for accessing the API.

- EventData to house our data structure.

- EventHubClient.SendAsync for actually sending.

Don't worry, there is a link at the end of this article where you can get the client code.

Running this guy feeds some events into our pipeline.

Note that the second parameter needs to be the name of your Event Hub inside your namespace.
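The Simulator itself is a .NET client, but the data side is simple enough to sketch in Python. The snippet below only builds random temperature readings as JSON bodies and deliberately leaves out the sending. The field names `DeviceId` and `Temperature` follow the Stream Analytics query; the JSON format, the value range and the function names are assumptions for illustration.

```python
import json
import random


def make_reading(device_id: int, rng: random.Random) -> dict:
    """One hypothetical reading; field names follow the Stream Analytics query."""
    return {
        "DeviceId": device_id,
        "Temperature": round(rng.uniform(15.0, 30.0), 1),  # assumed range
    }


def make_payloads(device_count: int, readings_per_device: int, seed=None):
    """Yield UTF-8 encoded JSON bodies, one per event."""
    rng = random.Random(seed)
    for _ in range(readings_per_device):
        for device_id in range(1, device_count + 1):
            yield json.dumps(make_reading(device_id, rng)).encode("utf-8")


if __name__ == "__main__":
    for body in make_payloads(device_count=3, readings_per_device=2, seed=42):
        # A real client would hand each body to an Event Hub SDK here.
        print(body.decode("utf-8"))
```

Passing a seed makes the "random" readings repeatable, which helps when comparing what went in with what ends up in the database.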

Querying the database will show that we indeed get some events coming in and that we are able to perform some light operations on them too.

Conclusions and next steps

So, IOT isn't that hard after all.

By using some PaaS services we were able to make a solution whose intake can be scaled up just by provisioning more Throughput Units. By using some good old SQL we were also able to enrich and analyze the incoming data stream.

Not bad.

The next part of this article will elaborate on the client side: we will be sending events from a scripting language, probably Python. We'll use a Raspberry Pi as a client if all goes well.

We will also do some reporting and maybe some Machine Learning.

Stay tuned.

In case you have problems finding your way around the Azure management screens, there is a more step-by-step oriented tutorial available here. It also has the source code for the client used:

https://azure.microsoft.com/en-us/documentation/articles/stream-analytics-get-started/