Notes - SQL Server Index Fragmentation, Types and Solutions

This is the first in the series of blog posts that I plan on writing to summarize various topics of interest in SQL Server. The goal of these “Notes” posts is to collect important information from multiple sources such as whitepapers, KB articles, MSDN/TechNet articles, credible blogs etc., and publish them in a single post for easy access. These summary posts should provide a quick reference point to those of us who are just looking for a brief summary on specific topics of interest in SQL Server. At the end of each summary article, I will attempt to provide links to all the resources, which I referred to while writing the post.

So I decided to write my first notes post on an important and sometimes perplexing topic - Index Fragmentation in SQL Server. I have often found it challenging to memorize types of index fragmentation, their causes and various differences between Reorganizing vs. Rebuilding indexes. In this post I will attempt to provide notes on these aspects of index fragmentation

What is Index Fragmentation: Index fragmentation is an “expected” and “unavoidable” characteristic of any OLTP environment. Fragmentation is defined as any condition which causes more than the optimal amount of disk I/O to be performed in accessing a table, or causes the disk I/Os that are performed to take longer than they optimally would

Causes of Index Fragmentation: Some of the most common causes of index fragmentation are -

- Insert and Update operations causing Page splits - One of the most common causes of index fragmentation is the “page-split” operation. A page-split occurs when there is no free space on a data page to accommodate new inserts or updates. As a result, SQL Server will moves ~ 50% data from the current page to the newly allocated data page. This is done to keep the index tree balanced so that SQL Server has to traverse through a predictable number of levels to get to any data page in the tree. This is the reason why SQL server indexes are implemented as b-trees where “b” means “balanced”. Page splits are caused by -

-

- Non Sequential inserts – when doing a non-sequential insert, SQL Server moves ~50% of data from the old page to the newly allocated page. This would result in a page split, with each page having ~50% of data from the old page.

- Updates to an existing row value with a larger value, which doesn’t fit on the same page

- GUIDs if included in the clustered index key causes index fragmentation in both clustered and non-clustered indexes – see this article for more details

- Delete operations - Random delete operations, which leave individual pages in use but not completely full of rows will result in Internal Fragmentation. Similarly heavy deletes will cause pages removed to be removed from the page chain resulting in Logical Fragmentation.

- Initial allocation of pages from mixed extents – this is well demonstrated in this blog post

- Large row size - for example, if rows are 3200 bytes each, then only two rows can fit on a 8K page, leaving approximately 1600 bytes unused on every page

Various Types of Index Fragmentation:

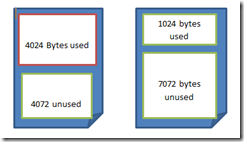

- Internal Fragmentation –When pages are less than fully used, the part of each page that is unused constitutes a form of fragmentation, since the table’s or index’s rows are no longer packed together as tightly as they could be. This is known as Internal Fragmentation and usually occurs due to -

- Random deletes resulting in empty space on data pages

- Page-splits due to inserts or updates

- Shrinking the row such as when updating a large value to a smaller value

- Using a fill factor of less than 100

- Using large row sizes

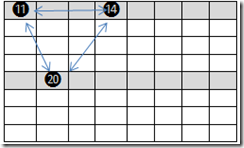

Logical Fragmentation - SQL Server uses 8KB Pages to store data on disk. When a clustered index is created on a table, SQL Server creates a b-tree data structure for the index and links all data pages at the leaf level of the tree in a logical order by using a doubly linked list. Logical fragmentation occurs when the pages in this doubly linked list are not contiguous in the index, meaning that indexes have pages in which the logical ordering of pages, which is based on the key value, does not match the physical ordering inside the data file. This could happen due to -

- Page-splits due to inserts or updates

- Heavy deletes that can cause pages be removed from the page chain, resulting in dis-contiguous page chain

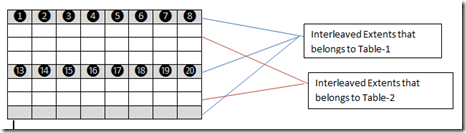

Extent Fragmentation - Extent fragmentation occurs when the extents of a table or index are not contiguous with the database leaving extents from one or more indexes intermingled in the file. This can occur due to:

-

- Random deletes, which could leave some of the pages in an extent unused while the extent itself is still reserved as part of the table’s space allocation. Think of it like Internal fragmentation, but in extents instead of pages

- Deletes on ranges of contiguous rows within the table, causing one or more entire extents to become de-allocated, thus leaving a gap between the surrounding extents of the table or index

- Interleaving of a table’s data extents with the extents of other objects

-

Why does Index Fragmentation matters: High fragmentation can affect performance of read-intensive disk-bound workloads. Optimal performance of SELECT queries is only possible with contiguous data pages within the database file, and if the data pages are packed as fully as possible. Internal fragmentation will cause queries to read more data pages in order to retrieve the same number of records because the pages are not fully packed. Similarly Logical fragmentation and Extent fragmentation will cause the read performance to slow down because SQL Server can’t perform Read-Ahead reads. The SQL Server Read-Ahead manager dynamically adjusts the size of reads it performs based on the physical ordering of the underlying pages. With high Logical and Extent fragmentation, the read-ahead manager must read smaller blocks of data. A few important things to note about the effects of index fragmentation on SQL Server performance -

- Fragmentation only affect performance if the pages have to be read from physical disk (aka physical read). However, if the pages being read are already in buffer pool cache (aka logical read), fragmentation does not affect query performance

- Fragmentation happens because of DML operations. So if there is very little DML activity on a table, it may not get fragmented at all.

- Fragmentation only impacts range queries and not the singleton SELECT queries.

- Reducing or eliminating Internal fragmentation can help you save disk space.

How to detect Index Fragmentation: Use the sys.dm_db_index_physical_stats DMF, that replaces the deprecated DBCC SHOWCONTIG command. This DMF has three modes -

- DETAILED - reads all data and index pages. Be careful with using this options since it causes the entire index be read into memory and may result in IO/Memory issues

- SAMPLED: reads 1% of the pages if more than 10,000 pages

- LIMITED: only reads the parent level of b-tree (same as DBCC SHOWCONTIG WITH FAST). Limited option doesn't report page density, since it does not read the leaf level pages

Pay attention to the following columns in the output of this DMF -

- Avg_fragmentation_in_percent – depicts logical fragmentation

- Avg_page_space_used_in_percent – depicts internal fragmentation

Solutions to address Index Fragmentation: Main choices are -

- Recreate - CREATE INDEX WITH DROP_EXISTING – Not a good choice because of possible issues with constraints (Foreign Keys etc.)

- Rebuild - ALTER INDEX ... REBUILD (replaces DBCC REINDEX)

- Reorganize - ALTER INDEX ... REORGANIZE (replaces DBCC INDEXDEFRAG)

- To reduce the extent fragmentation of a heap, create a clustered index on the table and then drop the index

Reorganize vs. Rebuilding indexes

# |

Characteristic | Alter Index REORGANIZE | Alter Index REBUILD |

1 |

Online or Offline | Online | Offline (unless using the Online keyword) |

2 |

Address Internal Fragmentation | Yes (can only raise page density) | Yes |

3 |

Address Logical Fragmentation | Yes | Yes |

4 |

Transaction Atomicity | Small Discrete Transactions | Single Atomic Transaction |

5 |

Rebuild Statistics Automatically | No | Yes |

6 |

Parallel Execution in multi-processor machines | No | Yes |

7 |

Untangle Indexes that have become interleaved within a data file | No | Yes |

8 |

Transaction log space used | Less | More |

9 |

Additional free space required in the data file | No | Yes |

# 1 - DBCC INDEXDEFRAG is an online operation; therefore, the table and indexes are available while the index is being defragmented. DBCC DBREINDEX is an offline operation. When DBCC INDEXDEFRAG is run on non-quiescent systems on which updates to the underlying data are taking place, DBCC INDEXDEFRAG skips locked pages as it encounters them. As a result, DBCC INDEXDEFRAG may not be able to completely eliminate fragmentation. SQL Server 2005 introduces the ability to rebuild your indexes in an online fashion so that other processes are able to access the table while the rebuild is occurring. Because you can access the indexes during the rebuild, you are not limited to only rebuilding indexes during off-peak hours. For more information on online index operations, please refer to this article

# 2 - REORGANIZE attempts to raise the page-density level of pages to the original fillfactor. DBCC INDEXDEFRAG does not, however, reduce page density levels on pages that currently have a higher page density than the original fillfactor.

# 3 - Rebuilding indexes is usually faster then reorganizing indexes if the logical fragmentation is high. A general rule of thumb is to rebuild indexes when the average logical fragmentation is more than 30%

# 4 - DBCC INDEXDEFRAG can be stopped and restarted without losing any work. The entire DBCC DBREINDEX operation runs as one atomic transaction. This means if you stop DBCC DBREINDEX the entire operation is rolled back, and you must start over. However, if you stop DBCC INDEXDEFRAG it stops instantly and no work is lost, because each unit of work performed by DBCC INDEXDEFRAG occurs as a separate transaction.

# 5 - Note that while Rebuilding indexes will automatically rebuild all index statistics (which is equivalent to a full scan), it does not update column statistics created by Query Optimizer (statistics names starting with _WA in sys.stats view) automatically.

# 7 - Interleaving occurs when index extents (a group of eight index pages) for an index are not completely contiguous within the data file, leaving extents from one or more indexes intermingled in the file. Interleaving can occur even when there is no logical fragmentation, because all index pages are not necessarily contiguous, even when logical ordering matches physical ordering.

# 8 - When in full recovery mode, Alter Index REBUILD logs images of each index page, which does not occur when in bulk logged mode. For this reason, in full recovery mode, the log space required by the REBUILD operation is roughly equivalent to the number of index pages multiplied by 8 KB. On the other hand the amount of work that Alter Index REORGANIZE performs when defragmenting indexes is dependent on the number of page movements and the amount of page compaction necessary. You can reclaim the log space used by the REORGANIZE operation by backing up the log because the work performed is a series of small transactions

# 9 - Rebuilding indexes requires adequate free space in the data file(s). With not enough free space in the data file(s), re-index operation may be unable to rebuild the indexes, or the indexes may be rebuilt with logical fragmentation values above zero. Without large enough contiguous blocks of free space, rebuilding indexes may be forced to reuse other areas of free space within the data files, resulting in indexes being rebuilt with a small amount of logical fragmentation

References:

- Index Fragmentation – video from Paul Randal

- Microsoft SQL Server 2000 Index Defragmentation Best Practices

- Fragmentation and Database Performance

- Example: Index fragmentation with insert/updates, measuring it and fixing it

- Can GUID cluster keys cause non-clustered index fragmentation?

- Fragmentation (part 1): What are records?

- Fragmentation (part 2): What are pages?

- Fragmentation (part 3): What are extents?

- Fragmentation (part 4): what are heaps?

- SQL Server 2008 Fragmentation – Jose Barreto’s notes

- sys.dm_db_index_physical_stats

- Reorganizing and Rebuilding Indexes

- How Online Index Operations Work

- Reading Pages