Subject Search Result Evaluation Tool

https://www.codeplex.com/SubjectSearchEva

This is another result of the Hylanda wordbreaker testing...

The story is very interesting. After we got 18000+ search results for each wordbreaker, we needed to evaluate the results to decide when, where and how the new wordbreaker is better than the original one. Such a thing cannot be decided by me alone, or by any physical measurement. When people say "Hey, you know sometimes Yahoo is better than Google", they are measuring it with their own eyes.

This is quite similar to the research I did for my Master's degree. I studied Psychological Acoustics for two years at that time, trying to find ways to measure sound quality. This is called subjective quality evaluation. So I used the same method to measure search result quality.

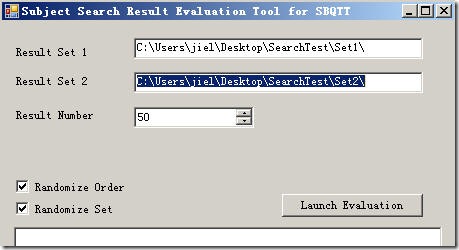

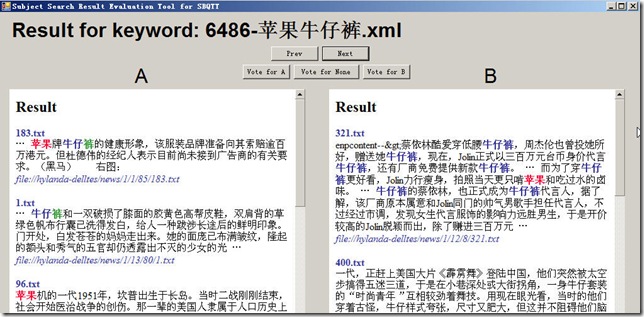

This method is called Paired Comparison. The tester is given two sets of results and chooses which one is better. For example, say there are 50 results in each set (A and B). First, A1 and B1 show up and the tester chooses A, then A2 and B2 show up and the tester chooses B, and so on. In the end, count the number of votes for A and for B, and you get the tester's preference.
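The counting step can be sketched in a few lines of Python. This is only an illustration, not the code from the release package: the function name `tally_preferences` and the vote labels `"A"`, `"B"` and `None` (for a skipped pair) are my own assumptions.

```python
from collections import Counter


def tally_preferences(votes):
    """Count the votes of one tester.

    `votes` holds one entry per compared pair: "A", "B",
    or None when the tester did not pick either side
    (hypothetical labels, chosen for this sketch).
    """
    counts = Counter(v for v in votes if v in ("A", "B"))
    skipped = sum(1 for v in votes if v not in ("A", "B"))
    return {"A": counts["A"], "B": counts["B"], "none": skipped}


if __name__ == "__main__":
    # Four pairs: two votes for A, one for B, one skipped.
    print(tally_preferences(["A", "B", "A", None]))
```

Whichever set collects more votes is the one this tester prefers; skipped pairs are reported separately so they do not count for either side.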

To make sure there's no psychological interference during the test, both the order of the pairs and the side each set appears on can be randomized. The tester will not know which is A and which is B; they just need to choose the better one.
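Here is a minimal sketch of that randomization, assuming each pair is shown as a "left" and a "right" panel. The names `build_trials` and `decode_choice` and the dictionary layout are hypothetical, made up for this example; the actual tool may do it differently.

```python
import random


def build_trials(results_a, results_b, rng=None):
    """Pair up A[i] with B[i], hide which side is which, and shuffle the order."""
    rng = rng or random.Random()
    trials = []
    for index, (a, b) in enumerate(zip(results_a, results_b)):
        # Flip a coin to decide which set goes on the left for this pair.
        if rng.random() < 0.5:
            trials.append({"query": index, "left": a, "right": b, "left_is": "A"})
        else:
            trials.append({"query": index, "left": b, "right": a, "left_is": "B"})
    # Shuffle so the tester cannot guess anything from the presentation order.
    rng.shuffle(trials)
    return trials


def decode_choice(trial, side):
    """Translate the tester's click ("left", "right" or None) back to "A", "B" or None."""
    if side is None:
        return None
    if side == "left":
        return trial["left_is"]
    return "B" if trial["left_is"] == "A" else "A"


if __name__ == "__main__":
    trials = build_trials(["a1", "a2", "a3"], ["b1", "b2", "b3"])
    for trial in trials:
        # Pretend the tester always clicks the left panel.
        print(trial["query"], trial["left"], decode_choice(trial, "left"))
```

The tester only ever sees "left" and "right"; the mapping back to A and B stays inside the program until the votes are counted.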

Here's the interface of the program.

Testing. If the tester cannot decide which is better (sometimes the two sets are equally good or equally bad), he can choose not to vote, or vote for none.

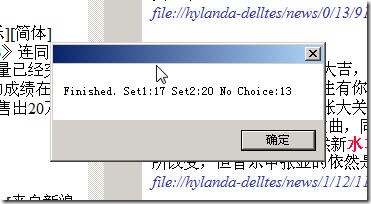

The final result will be displayed in a message box.

Of course, this is just a simple proof of concept. I have shared the source and a sample in the release package so you can do your own research work.