The Mystery of Lsass.exe Memory Consumption, (When all components get involved)

Hi All, this is Karim Elsaid and I’m a Support Escalation Engineer working with the Dubai platforms support team. Recently I was working on a very challenging and interesting case, and I wanted to share that experience with you.

One of our customers was experiencing a problem on all his Domain Controllers (Running x86 Windows Server 2003), where intermittently the DCs ran out of resources. When one of the servers ran out of resources it had to be restarted to restore its functionality. The following event was logged on the DC when the problem occurred:

Event Type: Error

Event Source: NTDS General

Event Category: Internal Processing

Event ID: 1169

Date:

Time:

User: N/A

Computer:

Description:

Active Directory could not allocate the needed amount of memory.

Memory (bytes):

4568

Active Directory will continue to operate, but may not function correctly.

User Action

Restart this domain controller. If this condition continues, increase the available

physical or virtual memory.

Additional Data

Internal ID:

30205bd

For more information, see Help and Support Center at

https://go.microsoft.com/fwlink/events.asp.

This event gets logged when the Local Security Authority Subsystem (lsass.exe) process is unable to allocate memory from the heap.

When this problem occurs, users are not able to authenticate and Exchange servers cease functioning until the DC gets rebooted.

By the time we got the call, the DC that was exhibiting the issue had been rebooted, hence when we checked the lsass.exe memory usage it was very low, but when we checked another DC on the same site, the lsass.exe memory usage was around 1.7GB. Well, that’s pretty high but it is worth mentioning that by default lsass.exe may utilize a large amount of virtual memory for the ESENT Cache (Active Directory database), nevertheless this should not cause any issues on DCs as it should back-off when needed.

The issue here is that the memory usage for lsass.exe kept growing and never got released back again - this is called a process memory leak.

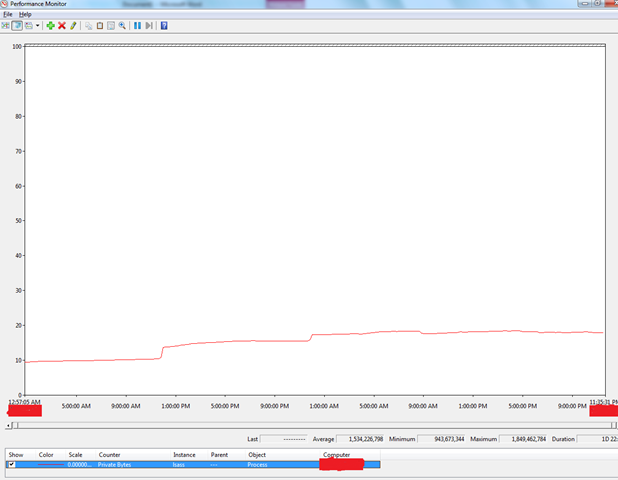

To confirm our theory we obtained a Perfmon Log from the DC and checked how Lsass.exe is doing with memory, which proved the theory that lsass.exe is actually leaking memory.

We can see from the graph above a steady increase in lsass.exe private bytes. The DC was booted without /3GB and hence the limit of the user mode process address space is 2GB.

For more information on this topic, please check the previous post “The Memory Shell Game”

Now, we need to explore the options on how to track and address such leaks. We could obtain an lsass.exe process dump and check the heap, or obtain a Full memory dump of the server while in the failed state, to check the lsass.exe heap usage and overall system state, but the thing is none of the above may immediately reveal what’s going on. We would need to be very lucky to get the dump while the actual memory call that happens inside lsass.exe process is executing, which is very slim.

What we are looking for is a “pattern”, the pattern which is causing the actual leak inside the lsass.exe process.

For that we will turn to a tool that I see many of the customers are not aware of, but in fact it is very handy and easy to troubleshoot such a memory leak problem. We will use the “User Mode Dump Heap” or UMDH. By using this tool it will do the job for us, it will monitor the all heap calls that happen in the lsass.exe process. All we need to do is to download the “Debugging Tools For Windows”, enable user mode stack acquisition from the command line, download the symbols from Microsoft public symbol servers, reboot the server and we are good to go.

You can find information on UMDH; how to set it up and use it “Here”

So now, all heap blocks that the lsass.exe process allocates will get monitored by UMDH, and the tool will tell us information about that specific call stack. For example, the number of allocations using this stack, number of bytes consumed, etc…

Back to our main problem: We have setup the DC for UMDH and started monitoring the lsass.exe process. We verified UMDH is working after the reboot by generating a sample log - it is very critical to generate this simple log to make sure that you are able to resolve the symbols correctly.

We kept generating UMDH logs daily and then the interesting pattern started to appear, which shows:

+ 123097760 ( 123098480 - 720) 1538731 allocs BackTrace4BA0

+ 1538722 ( 1538731 - 9) BackTrace4BA0 allocations

ntdll!RtlAllocateHeapSlowly+00000041

ntdll!RtlAllocateHeap+00000E9F

msvcrt!malloc+0000006C

NTDSA!ABBind_local+0000031C

NTDSA!NspiBind+0000013D

RPCRT4!Invoke+00000030

RPCRT4!NdrStubCall2+00000299

RPCRT4!NdrServerCall2+00000019

RPCRT4!DispatchToStubInCNoAvrf+00000038

RPCRT4!RPC_INTERFACE::DispatchToStubWorker+0000011F

RPCRT4!RPC_INTERFACE::DispatchToStub+000000A3

RPCRT4!OSF_SCALL::DispatchHelper+00000149

RPCRT4!OSF_SCALL::DispatchRPCCall+0000010D

RPCRT4!OSF_SCALL::ProcessReceivedPDU+0000057F

RPCRT4!OSF_SCALL::BeginRpcCall+00000194

RPCRT4!OSF_SCONNECTION::ProcessReceiveComplete+00000435

RPCRT4!ProcessConnectionServerReceivedEvent+00000021

RPCRT4!LOADABLE_TRANSPORT::ProcessIOEvents+000001B8

RPCRT4!ProcessIOEventsWrapper+0000000D

RPCRT4!BaseCachedThreadRoutine+0000009D

RPCRT4!ThreadStartRoutine+0000001B

kernel32!BaseThreadStart+00000034

From the backtrace 4BA0 we can see that there are ~1.5 heap allocation calls that have occurred without being freed from the first day we started to monitor lsass using UMDH.

There is also another call stack with exactly the same symptoms:

+ 51525199 ( 51525506 - 307) 1538802 allocs BackTrace4B92

+ 1538793 ( 1538802 - 9) BackTrace4B92 allocations

ntdll!RtlAllocateHeapSlowly+00000041

ntdll!RtlAllocateHeap+00000E9F

LSASRV!LsapAllocatePrivateHeapNoZero+0000004F

RPCRT4!operator new+0000000D

RPCRT4!W2AAttachHelper+00000032

RPCRT4!CNlDelUnicodeAnsi::Convert+00000014

NTDSA!ABBind_local+0000005D

NTDSA!NspiBind+0000013D

RPCRT4!Invoke+00000030

RPCRT4!NdrStubCall2+00000299

RPCRT4!NdrServerCall2+00000019

RPCRT4!DispatchToStubInCNoAvrf+00000038

RPCRT4!RPC_INTERFACE::DispatchToStubWorker+0000011F

RPCRT4!RPC_INTERFACE::DispatchToStub+000000A3

RPCRT4!OSF_SCALL::DispatchHelper+00000149

RPCRT4!OSF_SCALL::DispatchRPCCall+0000010D

RPCRT4!OSF_SCALL::ProcessReceivedPDU+0000057F

RPCRT4!OSF_SCALL::BeginRpcCall+00000194

RPCRT4!OSF_SCONNECTION::ProcessReceiveComplete+00000435

RPCRT4!ProcessConnectionServerReceivedEvent+00000021

RPCRT4!LOADABLE_TRANSPORT::ProcessIOEvents+000001B8

RPCRT4!ProcessIOEventsWrapper+0000000D

RPCRT4!BaseCachedThreadRoutine+0000009D

RPCRT4!ThreadStartRoutine+0000001B

kernel32!BaseThreadStart+00000034

From both call stacks above we can see that the originating function that leads to the heap allocation is “NTDSA!NspiBind”

NSPI is the interface which the Exchange Address Book provider uses to talk to the Global Catalogs. Typically when Outlook clients want to talk to the directory for address book, they talk to the Exchange server directly and then the Exchange server will look up the appropriate GC, forward the request to it and finally send the result back to the originating clients. This method is called the DSproxy method. Outlook clients can also send referral requests (RFR) to the Exchange server to get a referral to a GC and then start talking to this GC directly. You can find more information about this operation “Here”

With this new information in hand, we turned our attention to the Exchange server for a while. We did so by obtaining a netmon trace from the Exchange server, and started examining it to reveal a massive number of RFR requests originating from outlook client machines toward the Exchange server:

3795 11:50:59.1426170 10.3876170 GC_SERVER EXCHSERVER NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:535, TCP:488, IPv4:118}

3796 11:50:59.1426170 10.3876170 EXCHSERVER 10.208.164.176 NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:502, TCP:496, IPv4:326}

3797 11:50:59.1426170 10.3876170 EXCHSERVER 10.208.164.176 NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:509, TCP:508, IPv4:326}

3798 11:50:59.1426170 10.3876170 EXCHSERVER 10.208.164.176 NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:494, TCP:484, IPv4:326}

9807 11:51:13.1894920 24.4344920 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1144, TCP:1142, IPv4:326}

10084 11:51:13.3769920 24.6219920 EXCHSERVER GC_SERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1145, TCP:1143, IPv4:118}

10085 11:51:13.3769920 24.6219920 GC_SERVER EXCHSERVER NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:1145, TCP:1143, IPv4:118}

10086 11:51:13.3926170 24.6376170 EXCHSERVER 10.208.164.176 NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:1144, TCP:1142, IPv4:326}

10103 11:51:13.3926170 24.6376170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1182, TCP:1180, IPv4:326}

10108 11:51:13.3926170 24.6376170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1151, TCP:1149, IPv4:326}

10111 11:51:13.3926170 24.6376170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1200, TCP:1196, IPv4:326}

10115 11:51:13.3926170 24.6376170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1164, TCP:1163, IPv4:326}

10148 11:51:13.4863670 24.7313670 10.34.51.69 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:447, TCP:446, IPv4:367}

10149 11:51:13.4863670 24.7313670 EXCHSERVER GC_SERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:473, TCP:448, IPv4:69}

10172 11:51:13.4863670 24.7313670 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1199, TCP:1197, IPv4:326}

10174 11:51:13.4863670 24.7313670 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1187, TCP:1186, IPv4:326}

10180 11:51:13.4863670 24.7313670 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1170, TCP:1168, IPv4:326}

10183 11:51:13.4863670 24.7313670 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1153, TCP:1152, IPv4:326}

10185 11:51:13.4863670 24.7313670 GC_SERVER EXCHSERVER NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:473, TCP:448, IPv4:69}

10186 11:51:13.4863670 24.7313670 EXCHSERVER 10.34.51.69 NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:447, TCP:446, IPv4:367}

10224 11:51:13.5176170 24.7626170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1209, TCP:1208, IPv4:326}

10227 11:51:13.5176170 24.7626170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1192, TCP:1190, IPv4:326}

10232 11:51:13.5176170 24.7626170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1159, TCP:1157, IPv4:326}

10235 11:51:13.5176170 24.7626170 10.208.164.176 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:1175, TCP:1173, IPv4:326}

10240 11:51:13.5176170 24.7626170 10.34.51.69 EXCHSERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:447, TCP:446, IPv4:367}

10241 11:51:13.5176170 24.7626170 EXCHSERVER GC_SERVER NSPI NSPI:NspiBind Request, Flags=0x0 {MSRPC:473, TCP:448, IPv4:69}

10251 11:51:13.5332420 24.7782420 GC_SERVER EXCHSERVER NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:473, TCP:448, IPv4:69}

10255 11:51:13.5332420 24.7782420 EXCHSERVER 10.34.51.69 NSPI NSPI:NspiBind Response, ReturnValue=0x0 {MSRPC:447, TCP:446, IPv4:367}

Snipp…..

In the netmon capture we saw an excessive number of NspiBind requests been sent from the Outlook client machines to the Exchange server and Exchange server in turn sending these NspiBind requests to the GC, in the netmon capture we didn’t find any frames for NspiUnBind requests, which should be used to destroy that connection.

When we checked the Exchange server security logs we found a massive number of logon events that are occurring for every single client:

Event Type: Success Audit

Event Source: Security

Event Category: (2)

Event ID: 540

Date: 5/26/2010

Time: 3:49:09 PM

User:

Computer: EXCHSERVER

Description:

Successful Network Logon:

User Name: User_Name

Domain: CONTOSO.COM

Logon ID: (0x0,0x3DA1F42)

Logon Type: 3

Logon Process: Kerberos

Authentication Package: Kerberos

Workstation Name:

Logon GUID: {b03f9ad2-3150-e1be-f50a-b3abb9d2c09d}

Caller User Name: -

Caller Domain: -

Caller Logon ID: -

Caller Process ID: -

Transited Services: -

Source Network Address: 10.11.12.13

Source Port: 0

For more information, see Help and Support Center at https://go.microsoft.com/fwlink/events.asp.

So, now we know the problem of lsass.exe memory consumption on the DCs is actually occurring because of a massive number of NspiBind requests that are being sent to the DCs without proper NspiUnbind requests.

So we turned our attention to the client machines that have Outlook installed and started to list all 3rd party add-ins that are installed on them.

We found that all affected client machines are actually sharing 3rd party add-ins for email content archival. Further investigation on this software revealed a strange MAPI Wrapper DLL library, and our customer confirmed that the start time of the problem approximately matches the time they have rolled out the content archival software on the client machines.

We uninstalled this content archival add-in from a couple of machines and these machines stopped hammering the Exchange Server and GC with NspiBind requests.

Later we uninstalled it from the rest of the client machines and the lsass.exe process on the DCs has finally stabilized and we no longer have any issues with its memory usage.

I’d like to mention that starting with Windows Server 2008 we have implemented a “NSPI Max Sessions Per User” where you can limit the number of NSPI requests from a client to the server.

You can read more information about this new functionality “Here”

That’s it! I hope you enjoyed reading and hopefully this should help you to troubleshoot any memory leaks you may encounter. The information contained here can be applied to other processes when you suspect heap leaks.

See you again on another adventure.

Best Regards,

Karim Elsaid