Bicycle Computer #4 – UI continued – Fonts and Touch

This is the fourth in a series of articles demonstrating how someone with modest .NET programming capabilities can now write applications for embedded devices using the .NET Micro Framework and Visual Studio. To jump to the initial article, click here. I am still working on getting this project posted to CodePlex or otherwise made available to you in full. Remember, you can help determine what we cover in the series with your questions and suggestions.

We left off in an earlier article after drafting the riding UI, at which point the two fonts that come in the SDK turned out not to be quite the right set. If you recall, the larger one was legible but the smaller one was a little hard to read. We want something very easy to read so that the rider can get information at a glance.

So what are our font options if these two fonts are not sufficient? The fonts in the .NET Micro Framework use a unique format that reduces the size requirements as much as possible. As a result, you need to use a tool provided in the .NET Micro Framework (TFConvert) to convert a TrueType font file into a ‘Tiny Font’ (.tinyfnt) file. Each TinyFont file holds a single, fixed size, which reduces the code needed and simplifies display. That means that you need to create a TinyFont file for each font size that you intend to use.

Using TFConvert

First, fonts are intellectual property and are licensed in specific contexts. Just because you can convert a font file to use with the .NET Micro Framework does not mean that you have the rights to do that. Consult the license that the font was provided under to be sure. Fortunately, there are six example fonts provided with the .NET Micro Framework SDK, which can be found in the \Tools\Fonts\TrueType subdirectory of your .NET Micro Framework installation. These fonts are provided by Ascender Corporation; to get extended versions or additional fonts, contact them directly via their Web site https://www.ascendercorp.com.

Using TFConvert is very straightforward and is described in detail in the help files - https://msdn.microsoft.com/en-us/library/cc533016.aspx.

The first step is to create a font definition file. Here are the contents of the definition file that I created:

    AddFontToProcess fonts\truetype\miramob.ttf
    SelectFont "FN:Miramo Bold,HE:-14,WE:700"
    ImportRange 32 122

I saved this as Miramob.fntdef in the same directory as the fonts. (Note: this is a little different from the example in the documentation. I could not get a quoted path to work for ‘AddFontToProcess’.)

To use the converted font, copy the .tinyfnt file into the project's resource directory and then add it to the project's resources (the .resx file) as an existing file. When you are ready, compile the project and check the code generated in Resource.Designer.cs to make sure that the font has been added. It should look something like:

    [System.SerializableAttribute()]
    internal enum FontResources : short
    {
        nina48 = 1528,
        pescab = 4084,
        miramob = 8617,
        small = 13070,
        nina14 = 19188,
    }

Using this new font instead of the small font makes the labels more legible.
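For readers who have not loaded a font resource before, here is a minimal sketch of how the new font might be picked up in code. It assumes the generated class is named Resources (the default in a .NET Micro Framework project; yours may differ), that this code lives in the same namespace as that class, and that labels are built with the standard Text control. The LabelFactory helper is hypothetical and only here for illustration.

```csharp
using Microsoft.SPOT;
using Microsoft.SPOT.Presentation.Controls;

// Hypothetical helper, not part of the project described in this article.
internal static class LabelFactory
{
    // Builds a Text element that uses the converted Miramo Bold font.
    public static Text CreateLabel(string caption)
    {
        // Assumption: the generated resource class is called Resources and
        // exposes GetFont, as in the default project template.
        Font labelFont = Resources.GetFont(Resources.FontResources.miramob);

        return new Text(labelFont, caption);
    }
}
```

Because each TinyFont file holds one size, a second label size would mean a second .tinyfnt resource and a second entry in the FontResources enum.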

Adding Gestures (Touch)

Hardware buttons are the most common user input mechanism, but they are not very flexible since the number and labels are obviously ‘hardcoded’. Soft buttons are a little more flexible: you can change the number and labels to suit your needs. But either of these requires that you make some fairly exact movements and take your eyes off the road to coordinate the button press. Gestures, on the other hand, can be done anywhere on the 3.5 inch screen. There is also a simple semantic mapping that we can do with the gestures. I have several alternative screen presentations in mind, and with gestures you can scroll through them horizontally with a left or right gesture. The only other semantic that I think I need at this point is to start and stop things. A few screens that are not used while riding, like the setup and data screens, will use soft buttons.

So, how hard is it to add gestures? Not hard at all and it makes the devices we create much easier to use.

First, I initialize touch when I initialize the Controller. Initialization has application scope and I have one set of gestures for the whole UI, so I can hook my gesture event handler to the mainWindow. I do this in the constructor of my Controller class (ComputerController), which manages the state of the application. As you can see, near the end of the constructor I set up the ReadyView (the initial view of the application showing the title and the background bitmap). Then I call the Initialize method of the Touch class, hand it the application, and set up the TouchGestureChanged event handler, which is called whenever a gesture is detected. You may notice a very slight delay between the start of the gesture and the event. We have to wait until your finger has been lifted from the screen before we can determine exactly what the gesture was. We tuned this a little in version 4.1.

    public ComputerController(Program application, …)
    {
        _application = application;
        ….
        ChangeView(_readyView);
        Microsoft.SPOT.Touch.Touch.Initialize(_application);
        _mainWindow.TouchGestureChanged += new TouchGestureEventHandler(ComputerController_TouchGestureChanged);
    }

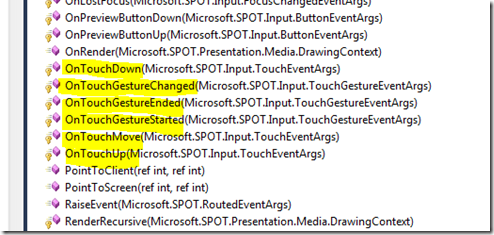

Touch is hooked into the UIElement.
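To make the reason for that slight delay concrete, here is a small, self-contained sketch of how a swipe direction could be worked out from the point where the finger went down and the point where it came up. This is only an illustration of why the end point is needed; it is not the framework's own gesture recognizer, and the SwipeClassifier name, the SwipeDirection enum, and the 20-pixel threshold are all assumptions of mine.

```csharp
using System;

public enum SwipeDirection { None, Left, Right, Up, Down }

// Hypothetical illustration; the .NET Micro Framework does this work for you.
public static class SwipeClassifier
{
    // Assumed threshold: shorter movements are treated as a tap, not a swipe.
    public const int MinDistance = 20;

    public static SwipeDirection Classify(int downX, int downY, int upX, int upY)
    {
        int dx = upX - downX;
        int dy = upY - downY;

        if (Math.Abs(dx) < MinDistance && Math.Abs(dy) < MinDistance)
        {
            return SwipeDirection.None;
        }

        // The larger component decides whether the swipe is horizontal or vertical.
        if (Math.Abs(dx) >= Math.Abs(dy))
        {
            return dx > 0 ? SwipeDirection.Right : SwipeDirection.Left;
        }

        // Screen coordinates grow downward, so a negative dy is an upward swipe.
        return dy < 0 ? SwipeDirection.Up : SwipeDirection.Down;
    }
}
```

Until the second point is known, neither the direction nor the distance can be computed, which is why the event can only fire once the finger has left the screen.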

OK - now I write the gesture handler. There are left and right gestures to change the display and up and down gestures to change the riding state. There are a number of other gestures available, but I want to keep the UI as simple as possible: every up gesture starts something, every down gesture stops something, and left and right change views. The displays that are available change with the riding state:

    void ComputerController_TouchGestureChanged(object sender, TouchGestureEventArgs e)
    {
        // This branch handles the gestures for the non-ride state.
        if (!_model.isInRide())
        {
            if (e.Gesture == TouchGesture.Right)
            {
                if (_mainWindow.Child == _readyView || _mainWindow.Child == _dataView)
                {
                    _mainWindow.Background = new SolidColorBrush(ColorUtility.ColorFromRGB(200, 250, 200));
                    ChangeView(_settingsView);
                }
                else
                {
                    ChangeView(_dataView);
                }
            }
            else if (e.Gesture == TouchGesture.Left)
            {
                if (_mainWindow.Child == _readyView || _mainWindow.Child == _settingsView)
                {
                    _mainWindow.Background = new SolidColorBrush(ColorUtility.ColorFromRGB(200, 250, 200));
                    ChangeView(_dataView);
                }
                else
                {
                    ChangeView(_settingsView);
                }
            }
            else if (e.Gesture == TouchGesture.Up)
            {
                // An up gesture starts the ride.
                _mainWindow.Background = new SolidColorBrush(ColorUtility.ColorFromRGB(200, 250, 200));
                ChangeView(_rideView);
                _model.ChangeRideState(gesture.Up);
            }
        } //end not in Ride
        else
        {
            if (e.Gesture == TouchGesture.Down)
            {
                // A down gesture pauses the ride.
                _model.ChangeRideState(gesture.Down);
                _rideView.PauseRide();
                _summaryView.PauseRide();
            }
            else if (e.Gesture == TouchGesture.Up)
            {
                // An up gesture resumes the ride.
                _model.ChangeRideState(gesture.Up);
                _rideView.ResumeRide();
                _summaryView.ResumeRide();
            }
            else if (e.Gesture == TouchGesture.Right || e.Gesture == TouchGesture.Left)
            {
                // Left and right toggle between the ride and summary views.
                if (_mainWindow.Child == _rideView)
                {
                    ChangeView(_summaryView);
                }
                else
                {
                    ChangeView(_rideView);
                }
            }
        }
    }
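The view transitions in the handler above can also be read as a small state table. The following self-contained sketch restates only the navigation part as a pure function, which can make the rules easier to check away from the device. The Gesture and View enums and the Navigator class are hypothetical names for this illustration; they are not types from the project or from the .NET Micro Framework.

```csharp
using System;

public enum Gesture { Left, Right, Up, Down }
public enum View { Ready, Settings, Data, Ride, Summary }

// Hypothetical restatement of the handler's view changes; it leaves out
// background colors and the pause/resume calls.
public static class Navigator
{
    public static View Next(View current, bool inRide, Gesture gesture)
    {
        if (!inRide)
        {
            switch (gesture)
            {
                case Gesture.Right:
                    return (current == View.Ready || current == View.Data)
                        ? View.Settings : View.Data;
                case Gesture.Left:
                    return (current == View.Ready || current == View.Settings)
                        ? View.Data : View.Settings;
                case Gesture.Up:
                    return View.Ride;   // starting a ride shows the ride view
                default:
                    return current;     // down does nothing outside a ride
            }
        }

        if (gesture == Gesture.Left || gesture == Gesture.Right)
        {
            // During a ride, left and right toggle between ride and summary.
            return current == View.Ride ? View.Summary : View.Ride;
        }

        return current;                 // up and down pause or resume; the view stays
    }
}
```

For example, Navigator.Next(View.Ready, false, Gesture.Right) yields View.Settings, matching the first branch of the handler.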

In the next article, I will continue the discussion of the UI by creating some custom controls for the Settings View.