How to Compile a Database Twice as Fast (or faster)

Compiling a complete NAV database can take quite a while. Even on powerful development machines with a lot of CPU cores this is still the case – the development environment wasn’t designed for the multi-core era. The only way to speed things up is to use separate instances of the development environment and have each compile a (distinct) subset of the objects in the database. With the new Development Environment Commands for PowerShell that were included in the Microsoft Dynamics NAV 2015 Development Shell, this has become a lot easier.

Before heating up those cores, let’s first introduce the command that we need for this: Compile-NAVApplicationObject. In the following examples we’ll assume that the database and the Development Shell reside on the same machine. To compile all non-compiled objects in a database named MyApp, the command simply takes a parameter that specifies the database and, optionally, a parameter that specifies if and how schema changes should be synchronized:

    Compile-NAVApplicationObject -DatabaseName MyApp -SynchronizeSchemaChanges No

To compile all objects in a database regardless of their current compiled state, use the Recompile switch:

    Compile-NAVApplicationObject -DatabaseName MyApp -SynchronizeSchemaChanges No -Recompile

The command also takes a filter, e.g.:

    Compile-NAVApplicationObject -DatabaseName MyApp -Filter ID=1..100

compiles all non-compiled objects with an ID in the range 1 to 100.

Now, to parallelize the compilation process we need to partition the set of objects into distinct sets that can be compiled in parallel. The most straightforward way to do this is based on object type. For each object type we can start a compilation job using the AsJob parameter. With this parameter, an instance of the development environment is started in the background and a handle to this background job is returned. PowerShell comes with a set of commands to work with jobs, for instance, to get the output of a job (Receive-Job) or to wait for a job to finish (Wait-Job). Occasionally, race conditions can occur while the object table is being updated, and as a result some objects may fail to compile. Therefore, after all background jobs have completed, we compile all objects that are still non-compiled in a final sweep. This is all we need to understand the following function, which compiles all objects in a database in seven parallel processes:

    function ParallelCompile-NAVApplicationObject
    (
        [Parameter(Mandatory=$true)]
        $DatabaseName
    )
    {
        $objectTypes = 'Table','Page','Report','Codeunit','Query','XMLport','MenuSuite'

        # Start one background compilation job per object type.
        $jobs = @()
        foreach($objectType in $objectTypes)
        {
            $jobs += Compile-NAVApplicationObject $DatabaseName -Filter Type=$objectType -Recompile -SynchronizeSchemaChanges No -AsJob
        }

        # Wait for all jobs to finish and collect their output.
        Receive-Job -Job $jobs -Wait

        # Final sweep: compile any objects that are still non-compiled (e.g., due to race conditions).
        Compile-NAVApplicationObject $DatabaseName -SynchronizeSchemaChanges No
    }
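The job commands used in this function are standard PowerShell and can be tried out without a NAV installation. The following is only a minimal sketch: the trivial script blocks are placeholders standing in for the compilation jobs, and the sleep duration is arbitrary.

```powershell
# Start three placeholder background jobs (stand-ins for compilation jobs).
$jobs = @()
foreach ($n in 1..3)
{
    $jobs += Start-Job -ArgumentList $n -ScriptBlock {
        param($n)
        Start-Sleep -Milliseconds 200
        "job $n done"
    }
}

# Block until every job has finished.
Wait-Job -Job $jobs | Out-Null

# Collect the state of each job, then its output.
$states = $jobs | ForEach-Object { $_.State }
$output = @(Receive-Job -Job $jobs)

# Clean up the finished jobs.
Remove-Job -Job $jobs
```

Checking the job state before the final sweep is one way to notice that a background process did not complete normally.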

Just for fun, let’s measure the performance gain. We can do this using Measure-Command:

    Measure-Command { Compile-NAVApplicationObject MyApp -SynchronizeSchemaChanges No -Recompile }
    Measure-Command { ParallelCompile-NAVApplicationObject MyApp }
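Measure-Command returns a TimeSpan, so the two results can be stored and divided to get a speedup factor. A minimal sketch; Get-Speedup is a hypothetical helper written for this illustration and is not part of the Development Shell. The lines that need a NAV database are left commented out.

```powershell
# Hypothetical helper: ratio of two elapsed times, rounded to two decimals.
function Get-Speedup
{
    param(
        [Parameter(Mandatory=$true)] [TimeSpan] $Baseline,
        [Parameter(Mandatory=$true)] [TimeSpan] $Improved
    )
    [math]::Round($Baseline.TotalSeconds / $Improved.TotalSeconds, 2)
}

# In the NAV Development Shell (requires the MyApp database):
# $serial   = Measure-Command { Compile-NAVApplicationObject MyApp -SynchronizeSchemaChanges No -Recompile }
# $parallel = Measure-Command { ParallelCompile-NAVApplicationObject MyApp }
# Get-Speedup -Baseline $serial -Improved $parallel
```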

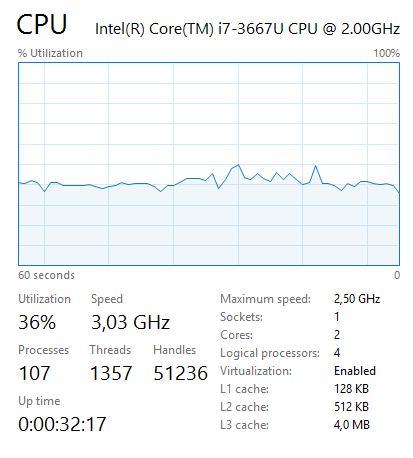

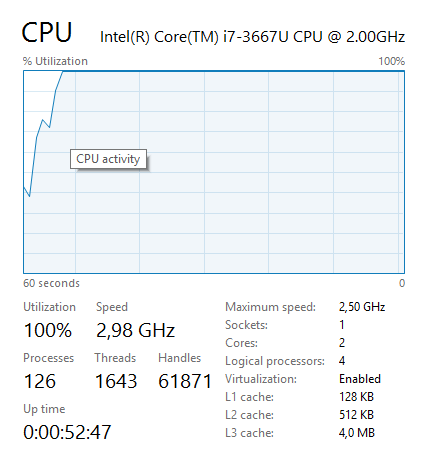

These two screenshots from Task Manager illustrate the difference in CPU utilization: while running the non-parallel version, CPU utilization hovers around 40%; while running the parallel version, CPU utilization is maxed out at 100%.


On my laptop (with 2 CPU cores) compilation of the 4148 objects in the W1 application takes 8 minutes and 46 seconds using the non-parallel version; using the parallel version it takes only 4 minutes and 32 seconds, a speedup of roughly 1.9 times. Machines with more CPU cores may produce even better results. Note that when parallelizing this way (i.e., based on object type) only four processes are active most of the time, because compilation of Queries, XMLports and MenuSuites finishes relatively quickly. So if you have a machine with a lot of cores (say 6 or 8) that you want to put to use, you need to find a way to partition the set of objects into a larger number of smaller sets.
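One possible way to get more, smaller sets is to combine the object type with an ID range in the filter. The sketch below is only an illustration under several assumptions: New-CompileFilter and ParallelCompile-NAVApplicationObjectByFilter are hypothetical names, the ID ranges are made up and would have to cover the IDs actually used in your database, and it assumes the filter accepts several fields separated by semicolons (as in 'Type=Table;ID=1..9999').

```powershell
# Hypothetical helper: one filter string per (object type, ID range) pair.
function New-CompileFilter
{
    param(
        [Parameter(Mandatory=$true)] [string[]] $ObjectType,
        [Parameter(Mandatory=$true)] [string[]] $IdRange
    )
    foreach ($type in $ObjectType)
    {
        foreach ($range in $IdRange)
        {
            "Type=$type;ID=$range"
        }
    }
}

# Hypothetical variant of the function above: one background job per filter.
# Requires the NAV Development Shell; it is only defined here, not invoked.
function ParallelCompile-NAVApplicationObjectByFilter
{
    param(
        [Parameter(Mandatory=$true)] $DatabaseName,
        [Parameter(Mandatory=$true)] [string[]] $Filter
    )
    $jobs = @()
    foreach ($f in $Filter)
    {
        $jobs += Compile-NAVApplicationObject $DatabaseName -Filter $f -Recompile -SynchronizeSchemaChanges No -AsJob
    }
    Receive-Job -Job $jobs -Wait

    # Final sweep for objects that are still non-compiled.
    Compile-NAVApplicationObject $DatabaseName -SynchronizeSchemaChanges No
}

# Example: split the two largest types into two ID ranges each (ranges are arbitrary).
$filters = New-CompileFilter -ObjectType 'Table','Page' -IdRange '1..9999','10000..99999'
```

Objects that fall outside every range are only picked up by the final sweep if they are non-compiled, so the ranges should be chosen to cover the whole ID space in use. Whether more jobs than cores actually helps is something to measure rather than assume.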

Who beats 4 minutes and 32 seconds? And please share how you did it!