Arrays are Row Major in C++ AMP

Hello my name is David Callahan, I am a Distinguished Engineer at Microsoft working in parallelism features including C++ AMP. In this post I’ll explain one of our design choices, and we’ll use it as a base for future posts to build on.

In C++ AMP, we chose to implement the storage order of concurrency::array using a style known as row major . In a two-dimensional problem, if we have an array:

array<float,2> x(2,3);

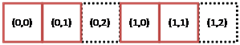

there are 6 total elements where there are 2 rows and 3 columns. In this case there are two options to layout the memory locations in a linear order. In the row-major style, rows are contiguous, as in:

In contrast, column major order has columns contiguous in memory:

When we generalize this idea to N dimensions, C++ AMP follows the same basic rule that C++ does for its multi-dimensional arrays: leading (or “leftmost”) index positions are more significant than trailing (or “rightmost”) index positions in terms of distance between elements in the linear order. Thus, when we layout

array<float,3> y(4,2,3);

which now has 24 elements, it looks in memory like 4 copies of “x” in a row so that the section of “y” described by the expression

y.section(index<3>(1,0,0), extent<3>(1,2,3))

consists of 6 elements which are contiguous in memory and laid out just like “x” above. The result of “section” is an array_view rather than an array. The array_view refers to the same underlying location and uses the same indexing rules so they are also effectively row-major.

This basic rule also allows what we call a projection, i.e. an overload of the indexing call operator where if you provide just single integer index value to an array or array_view with rank N greater than 1, that operation returns an array_view with rank N-1 which drops the most significant dimension.In this example we might have

array_view<float,2> z = y[1];

where the extent of “z” will be the same as the extent of the array “x”above, (2,3).

Lots of numerical programming was done in FORTRAN and there are still many FORTRAN libraries around. FORTRAN chose to use column-major order which illustrates that, at least mathematically, either choice seems reasonable. What matters is that you (the performance-minded programmer) are aware of the layout choice of your language because it has a performance implication. In both CPUs and GPUs, data is transferred from memory to processors in multi-word chunks. Furthermore, virtual memory is implemented almost universally now in terms of pages. It is very advantageous to read memory in the order in which it is laid out so that you read all of a chunk or all of a page before advancing to the next one. Otherwise you waste bandwidth and may thrash the virtual memory system.

Now that you know C++ AMP is row major you would prefer to craft your loop nests so that you iterate over elements of a row in the inner loops. Thus this loop structure

for(int i = 0; i < x.extent[0]; i++) for(int j = 0; j < x.extent[1]; j++) x(i,j) = y(1,i,j);

is preferable over this one:

for(int j = 0; j < x.extent[1]; j++) for(int i = 0; i < x.extent[0]; i++) x(i,j) = y(1,i,j);

The first one will march through “x” (and the section of “y”) in the linear order they are stored in memory while the second one will bounce around. This matters little with only a handful of values but becomes very important when arrays are many megabytes in size.

There is an important implementation detail impacted by storage order. On an accelerator like a GPU, a loop nest is collapsed into a compute domain that we represent in an extent object, and so we express a GPU computation like this with parallel_for_each:

parallel_for_each(x.extent, [=](index<2> idx) restrict(amp) { x[idx] = y[1][idx]; });

The loops here are gone and it is actually the case that they are recreated in essence by the hardware as it schedules threads and warps. The system is built to be row-major so that consecutive threads in a warp will mostly have consecutive least-significant index values (“idx[1]” in this example) and so data will be accessed in the most efficient contiguous manner.

An array view formed as a section of an array need not be contiguous when it uses multiple rows but not entire rows. However, we do guarantee that the least-significant dimension (rows in the rank 2 case) always refers to contiguous data. For example, the section

x.section(index<2>(0,0), extent<2>(2,2))

is an array view that would refer to these elements of the array

The array_view::data method which can be used for views of rank 1 can be used to get an underlying C++ pointer to the data. That pointer can be passed to pointer-based CPU interfaces or used to directly access the data. Continuing the example above we can project down to a single row of “y” and access the data, which in this case has 3 valid locations.

float * p = y[1][2].data();

Future blog posts will discuss other aspects of C++ AMP that rely on row-major order and also more aspects of performance tuning that depend on this as well. Your comments as usual are welcome below or in our forum.

![clip_image001[10] clip_image001[10]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/04/99/metablogapi/4527.clip_image00110_thumb_5D7AA95C.png)

![clip_image001[8] clip_image001[8]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/04/99/metablogapi/2772.clip_image0018_thumb_4F3C306C.png)