Concurrency::parallel_for and Concurrency::parallel_for_each

Marko Radmilac, Senior Developer for Microsoft’s Concurrency Runtime, offers the following discussion of parallel iteration in the Concurrency Runtime:

Let me say up front that this is my first blog, so I apologize if my writing format does not match what people have come to expect from a blog. I do hope, however, that this blog will contain enough information to provide some introductory information on the Parallel Patterns Library’s parallel_for and parallel_for_each constructs, when and how to use them. This blog has a Q and A format and contains both basic and advanced information, so feel free to jump to the section that is of most interest to you.

What is parallel_for and where do I find it? As you are probably aware (since you are reading this blog), the native C++ libraries in Visual Studio 2010 were extended to provide rich support for parallel programming. There are different layers at which users can interact with the parallel runtime, the highest of which is the Parallel Patterns Library (using the header file ppl.h). In it, users can find different constructs that allow them to quickly parallelize their programs without extensive knowledge of scheduling decisions, underlying threads, the surrounding environment, etc. One of these constructs is the parallel_for construct, which allows a user to parallelize a for-loop quickly. Its close cousin is parallel_for_each, which provides the same level of ease in parallelizing the std::for_each construct.

How do I use parallel_for and when? Parallel for is a construct which takes the body of a for-loop written by a user captured in a functor (for details on functors and lambdas please read Stephan’s excellent blog), divides the work (number of iterations) amongst the available computing resources (processors) and executes that work in parallel. Here’s a simple example of serial to parallel transformation:

    for (int i = 0; i < n; i++)
    {
        iter();
    }

becomes

    Concurrency::parallel_for(0, n,
        [] (int i)
        {
            iter();
        });

A naïve user might decide to go ahead and convert all for-loops in the program into parallel for-loops. That would be a mistake, because without an initial feasibility analysis this conversion might be detrimental to the program. Two aspects must be considered before a conversion is made, correctness and performance, and both are explained in the following few paragraphs.

I have converted my for-loops into parallel for-loops. Why doesn’t my code work properly? Converting all for-loops in a program into parallel for-loops may yield an incorrect program. There are quite a few algorithms in which the individual iterations of the loop are not independent. They often require another iteration to have been executed beforehand, so executing them in isolation would likely yield wrong results. A typical example would be a partial sum computation “in place”, on the original array (look at std::partial_sum):

    // start at 1: element 0 has no predecessor
    for (int i = 1; i < n; i++)
    {
        // Array "a" contains both the original sequence
        // and the end result
        a[i] += a[i-1];
    }

In order to compute the kth term of the resulting sequence, the (k-1)th term must be known. If one were to execute the iterations in parallel, the (k-1)th term might not be populated by the time the kth term is processed, yielding an incorrect result.

    Concurrency::parallel_for(1, n,
        [] (int i)
        {
            a[i] += a[i-1]; // incorrect! a[i-1] may not be final yet
        });
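One common way to remove this dependency is to split the array into blocks, compute a prefix sum inside each block independently, combine the block totals in a short serial step, and then shift each block by the total that precedes it. The following is a minimal sketch of that idea, not something taken from the PPL: the name blocked_prefix_sum and the parts parameter are hypothetical, and plain std::thread stands in for parallel_for so that the sketch stays portable.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// In-place inclusive prefix sum done in three phases.
// `parts` (hypothetical tuning knob) is the number of blocks.
void blocked_prefix_sum(std::vector<long long>& a, std::size_t parts)
{
    const std::size_t n = a.size();
    if (n == 0) return;
    parts = std::max<std::size_t>(1, std::min(parts, n));
    const std::size_t block = (n + parts - 1) / parts;
    // Start index of block p, clamped so trailing blocks may be empty.
    auto lo = [block, n](std::size_t p) { return std::min(p * block, n); };

    // Phase 1 (parallel): independent prefix sum inside each block.
    std::vector<std::thread> workers;
    for (std::size_t p = 0; p < parts; ++p)
        workers.emplace_back([&a, lo, p] {
            std::partial_sum(a.begin() + lo(p), a.begin() + lo(p + 1),
                             a.begin() + lo(p));
        });
    for (auto& w : workers) w.join();
    workers.clear();

    // Phase 2 (serial, only `parts` steps): total of all earlier blocks.
    std::vector<long long> offset(parts, 0);
    for (std::size_t p = 1; p < parts; ++p)
        offset[p] = offset[p - 1] + (lo(p) > lo(p - 1) ? a[lo(p) - 1] : 0);

    // Phase 3 (parallel): shift every block by the total before it.
    for (std::size_t p = 1; p < parts; ++p)
        workers.emplace_back([&a, &offset, lo, p] {
            for (std::size_t i = lo(p); i < lo(p + 1); ++i) a[i] += offset[p];
        });
    for (auto& w : workers) w.join();
}
```

Phases 1 and 3 touch disjoint blocks, so their iterations are independent and could each be expressed as a parallel loop over block indices. The price is roughly twice the additions of the serial loop, which only pays off for large arrays.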

I have converted my for-loops into parallel for-loops. Why does my code run slower than serial code? Converting all for-loops in a program into parallel for-loops may yield performance degradation instead of an improvement. Parallel for, as mentioned before, will divide the work amongst available processors and set it up for parallel execution. The initial division of work, setting up worker threads, and invoking a functor for each iteration, all incur a certain cost. That cost is not big, but it is not negligible either. If the work done in a single iteration and number of iterations itself are both small, this overhead is likely to show up as a less than expected speedup, sometimes even a slowdown.

    Concurrency::parallel_for(0, 100,
        [] (int i)
        {
            a[i] = b[i] + 1; // only a few cycles
        });

In the example above, a single iteration of the loop performs one memory read, one memory write and one add. On top of that, there are only 100 iterations in the entire loop. In this case, the cost of the function call made to perform one iteration is likely to be of the same order of magnitude as, if not greater than, the work of the iteration itself. Therefore, this loop is not a good candidate for parallelization.

I am frustrated! When should I use parallel_for then? You should analyze your program first and identify “hot spots”, i.e. places in your code that are computationally intensive. Identify the for-loops that control that computation and try to rewrite them in a way that eliminates dependencies between iterations. Also, in the presence of nested for-loops, it is often more beneficial to parallelize at the outermost loop level, in order to increase the amount of work being done per iteration. Once this analysis is complete, apply parallel for to the given loops and watch your program run faster :).

I was using OpenMP and I noticed that OpenMP executes my workload much faster than parallel_for does. Why is that? OpenMP uses a simple mechanism for scheduling the parallel iterations. It counts the number of iterations, divides them by the number of processors P, and then schedules P workers to execute the individual chunks of work. Each iteration in OpenMP consists of only a function call to the outlined function (in parallel for’s case this is a functor/lambda). Parallel for, on the other hand, is a more general mechanism: it does much more work while preparing for parallel invocation, and much more during each iteration. This raises the total amount of work (and the number of iterations in the loop) needed for parallel for to be profitable above what OpenMP needs. If you observe this difference, your for-loop must therefore have just enough work to make OpenMP profitable, but not enough to do the same for parallel for. With less work, both would be slower than serial execution; with more, both would be equally profitable because the overhead is amortized by the amount of work being done.
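The fixed division described above can be sketched in a few lines of portable C++. This is only an illustration of the idea, not the OpenMP runtime’s code: static_partition and static_parallel_for are hypothetical names, and std::thread stands in for the runtime’s worker threads.

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <utility>
#include <vector>

// Split [0, n) into at most p contiguous ranges whose sizes differ by at most one.
std::vector<std::pair<std::size_t, std::size_t>>
static_partition(std::size_t n, std::size_t p)
{
    std::vector<std::pair<std::size_t, std::size_t>> ranges;
    if (n == 0 || p == 0) return ranges;
    if (p > n) p = n;                       // never create empty ranges
    const std::size_t base = n / p, extra = n % p;
    std::size_t begin = 0;
    for (std::size_t w = 0; w < p; ++w) {
        const std::size_t len = base + (w < extra ? 1 : 0);
        ranges.emplace_back(begin, begin + len);
        begin += len;
    }
    return ranges;
}

// One worker per range; each iteration is nothing more than a call to body(i).
template <typename Body>
void static_parallel_for(std::size_t n, std::size_t p, Body body)
{
    std::vector<std::thread> workers;
    for (const auto& r : static_partition(n, p))
        workers.emplace_back([r, &body] {
            for (std::size_t i = r.first; i < r.second; ++i) body(i);
        });
    for (auto& w : workers) w.join();
}
```

Because the ranges are decided once, up front, there is no per-iteration bookkeeping, but a worker that finishes early has no way to take over part of another worker’s range.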

Why does it take so much work per iteration for parallel_for to turn profitable? OpenMP does it with far less work per iteration. As mentioned above, parallel for is a much more general construct than any construct in OpenMP. It should be noted that the Concurrency Runtime (ConcRT) is not less performant than the OpenMP runtime, but the parallel for construct tries to address many more issues than a simple OpenMP for-loop, and these come at a cost. It is possible to reduce the generality of parallel for to a minimum, at which point it becomes very close to the performance of the OpenMP counterpart. You can find a less generalized implementation of parallel_for in our sample deck, called parallel_for_fixed (it uses fixed-partitioning of iterations, without range stealing). The reason for generality of parallel for implementation is given in the following few paragraphs.

My workload inside the for-loop is uneven. Can parallel for help me distribute it properly? Yes, parallel for by default does range stealing and dynamic redistribution of work. That means that if any worker thread finishes its range, it will attempt to help other worker threads with their workloads. This ensures that all computing resources are fully utilized during parallel for execution. OpenMP does this to an extent with dynamic and guided scheduling, but parallel for does it on a per iteration level without using interlocked operations to mark the progress.
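To make the idea of dynamic redistribution concrete, here is a minimal sketch of the simpler, counter-based variant, in which idle workers claim the next chunk of iterations from a shared atomic counter. It is closer in spirit to OpenMP’s dynamic scheduling than to ConcRT’s range stealing, which, as noted above, avoids interlocked operations for tracking progress. The name dynamic_parallel_for and its parameters are hypothetical.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Workers repeatedly claim `chunk` iterations at a time until [0, n) is exhausted.
// Assumes n is small enough that next + chunk cannot wrap around.
template <typename Body>
void dynamic_parallel_for(std::size_t n, std::size_t workers,
                          std::size_t chunk, Body body)
{
    assert(chunk > 0);
    std::atomic<std::size_t> next{0};
    auto run = [&] {
        for (;;) {
            const std::size_t begin = next.fetch_add(chunk);  // claim a chunk
            if (begin >= n) return;                           // nothing left
            const std::size_t end = std::min(begin + chunk, n);
            for (std::size_t i = begin; i < end; ++i) body(i);
        }
    };
    std::vector<std::thread> pool;
    for (std::size_t w = 1; w < workers; ++w) pool.emplace_back(run);
    run();                                  // the calling thread helps too
    for (auto& t : pool) t.join();
}
```

A worker stuck on an expensive chunk simply claims fewer chunks, so uneven iterations even out on their own. A smaller chunk gives better balance at the cost of more atomic operations.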

Can parallel for support both unsigned and signed loop indices? Do I have to worry about overflow? Yes, parallel_for handles both signed and unsigned indices equally well. As long as the type supports the basic operations found on an integer type, parallel for will handle it well. It will also make sure that the range does not overflow a signed type. This was not the case with OpenMP before version 3.0.
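The overflow in question arises because last - first may not fit in the signed index type (consider a range from INT_MIN to INT_MAX). The source does not describe how parallel_for avoids this internally; the sketch below shows one standard technique, computing the iteration count in the matching unsigned type. The names iteration_count and split_point are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Number of iterations in [first, last), computed without signed overflow.
// Unsigned arithmetic wraps modulo 2^32, so the subtraction is always defined.
std::uint32_t iteration_count(std::int32_t first, std::int32_t last)
{
    if (last <= first) return 0;
    return static_cast<std::uint32_t>(last) - static_cast<std::uint32_t>(first);
}

// Midpoint used to split a range in two. count / 2 always fits in int32_t,
// and first + count / 2 never exceeds last, so this cannot overflow either.
std::int32_t split_point(std::int32_t first, std::int32_t last)
{
    return first + static_cast<std::int32_t>(iteration_count(first, last) / 2);
}
```

The naive alternative, (first + last) / 2 or last - first in int, is undefined behaviour for wide ranges, which is exactly the kind of bug a general-purpose loop construct has to rule out.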

Do I have to worry about overloading the system? What if there are other parts of a process using the Concurrency Runtime? Parallel for automatically detects system load and identifies available processors to use. Therefore, it will be cooperative with the rest of the process, and use only available resources. Once other, busy resources become available, parallel for will quickly find use for them (they will join the computation). OpenMP provides this through its dynamic mode; although, once a parallel region has started executing, any available resources arriving after that point would not be used in the computation.

Can I have blocking code in my loop iterations? What happens if I block? Yes, you can have blocking in your code as long as it is cooperative (using ConcRT blocking APIs, or using Win32 blocking APIs on user mode scheduling -- UMS). When blocking happens, parallel for and ConcRT ensure that another worker is scheduled to pick up where the previous one left off. As a result, parallel for enables users to write code that would not work properly as serial code. The prime example is a forward blocking dependency, where the ith iteration is blocked until the jth iteration executes, with j > i. This code would deadlock if run serially. OpenMP does not provide any support for blocking between iterations.

    Concurrency::event e;
    volatile LONG counter = 0;

    Concurrency::parallel_for(0, 64,
        [&] (int i)
        {
            InterlockedIncrement(&counter);

            if (i == 63)
            {
                // all other iterations blocked;
                // unblock them
                e.set();
            }
            else
            {
                // wait for iteration 63 to unblock
                e.wait();
            }
        });

// counter must be at 64 at this point

Can I cancel parallel for in the middle of computation? Yes, you can cancel parallel for at any point by throwing an exception from one of its iterations, or canceling its parent task group. This technique is very useful in performing long searches where computation can be stopped as soon as the result is found.

    Concurrency::parallel_for(0, n,
        [] (int i)
        {
            if (found(i))
            {
                // cancels work immediately
                throw ItemFound();
            }
        });

This can also be achieved by cancelling the parent task_group, thus enabling cancellation on the entire subtree of work.

Parallel for guarantees that only iterations that have already started will complete after cancellation is initiated. This ensures that resources are not wasted. OpenMP does not have any support for cancellation, although one can be built in using a flag.

    bool flag = false;

    #pragma omp parallel for
    for (int i = 0; i < n; i++)
    {
        if (flag)
        {
            // branching out of an OpenMP loop is not allowed,
            // so skip the remaining iterations instead
            continue;
        }
        else if (found(i))
        {
            flag = true; // cancels work
        }
    }

It should be noted that even with this hand-crafted cancellation scheme OpenMP does not support cancellation of the entire sub-tree of computation, which parallel for does.

Can I throw exceptions inside the parallel for? Will that tear down my application? Yes, you can throw exceptions in the parallel for functor, and that will not result in the termination of your application. An exception on a worker thread will safely be transported to the main thread that initiated the parallel for (if exception is not caught in the body of the functor, naturally) so the user will have an opportunity to catch the exception outside of parallel for. OpenMP has no exception handling support.
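The transport of an exception from a worker thread to the initiating thread can be illustrated with standard C++ facilities. This is a sketch of the general mechanism under stated assumptions, not ConcRT’s implementation: parallel_for_rethrow and caller_sees_exception are hypothetical names, and for brevity each iteration gets its own std::thread, which is only sensible for a handful of iterations.

```cpp
#include <cassert>
#include <cstddef>
#include <exception>
#include <mutex>
#include <stdexcept>
#include <thread>
#include <vector>

// Runs body(i) for i in [0, n) on worker threads. The first exception that
// escapes an iteration is captured and rethrown on the calling thread.
template <typename Body>
void parallel_for_rethrow(std::size_t n, Body body)
{
    std::exception_ptr first_error;
    std::mutex guard;
    std::vector<std::thread> workers;
    for (std::size_t i = 0; i < n; ++i)
        workers.emplace_back([&, i] {
            try {
                body(i);
            } catch (...) {
                // Letting the exception leave the thread would call std::terminate.
                std::lock_guard<std::mutex> lock(guard);
                if (!first_error) first_error = std::current_exception();
            }
        });
    for (auto& w : workers) w.join();
    if (first_error) std::rethrow_exception(first_error);
}

// Usage: the caller catches the exception outside the parallel loop.
bool caller_sees_exception()
{
    try {
        parallel_for_rethrow(8, [](std::size_t i) {
            if (i == 3) throw std::runtime_error("iteration 3 failed");
        });
    } catch (const std::runtime_error&) {
        return true;
    }
    return false;
}
```

Unlike parallel for, this sketch does not cancel the remaining iterations when an exception is thrown; it only shows how the exception reaches the caller intact.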

Can I use parallel for with STL iterators? This is why we have parallel_for_each. If your iterator supports random access, it will use the same mechanism used for parallel for. If your iterator only supports forward access, then we have provided code that reduces this case to random access, at which point the parallel for construct is reused.
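The source does not say how the reduction from forward access to random access is done. One simple possibility, shown here purely as an assumption, is to walk the sequence once serially, record an iterator to every element, and then run an index-based loop over those recorded positions. The name for_each_via_index is hypothetical and std::thread again stands in for parallel for.

```cpp
#include <cassert>
#include <cstddef>
#include <forward_list>
#include <thread>
#include <vector>

// Applies body to every element of [first, last) using index-based workers.
template <typename ForwardIt, typename Body>
void for_each_via_index(ForwardIt first, ForwardIt last,
                        std::size_t workers, Body body)
{
    // Serial pass: materialise the positions so they can be indexed.
    std::vector<ForwardIt> pos;
    for (; first != last; ++first) pos.push_back(first);

    if (workers == 0) workers = 1;
    std::vector<std::thread> pool;
    for (std::size_t w = 0; w < workers; ++w)
        pool.emplace_back([&pos, &body, w, workers] {
            // Worker w handles indices w, w + workers, w + 2 * workers, ...
            for (std::size_t i = w; i < pos.size(); i += workers)
                body(*pos[i]);
        });
    for (auto& t : pool) t.join();
}
```

The serial pass costs one traversal and one iterator of storage per element, so this approach only helps when the work done per element clearly outweighs the cost of walking the container.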

How many iterations, exactly, does it take to make parallel for better than serial? How many cycles should the functor contain in order for parallel for to beat the serial implementation? This is a difficult question to answer. It depends on the machine configuration, on whether there is other parallel work in the process, etc. Therefore, instead of giving a general and non-useful answer, I will attempt to give a concrete answer for the 4-core box that I am using, without any other work on it.

This is how I decided to measure it:

i) I will have an outer loop that repeats the experiment a given number of times. This loop amortizes the initial spin-up of threads in both OpenMP and ConcRT. Also, it magnifies the result to a level that is easily measurable (above 100 ms).

ii) I will have a dial that controls the amount of work being done per iteration.

iii) I will have a dial that controls the number of iterations in a loop.

When ii) is small and iii) is big we will measure the overhead per iteration (provided that the initial amount of work per iteration is small); when they are both small we will measure the overhead for the initial setup of threads, dividing work, etc.

The work being done will be a simple function call without any work in that function, and ii) will control how many times that function is called. This is what a simple version of this program would look like:

    #include <ppl.h>
    #include <windows.h>
    #include <stdio.h>
    #include <omp.h>

    using namespace Concurrency;

    #define RUN_TEST test_parallel_for
    #define SIZE_OF_ARRAY 10000   // iterations per loop
    #define REPEAT 10000          // repetitions of the experiment
    #define WORK 2                // units of work per iteration

    // current value of the high-resolution counter
    long long counter() {
        LARGE_INTEGER li;
        QueryPerformanceCounter(&li);
        return li.QuadPart;
    }

    // counter ticks per second
    long long frequency() {
        LARGE_INTEGER li;
        QueryPerformanceFrequency(&li);
        return li.QuadPart;
    }

    // compiled /Od in a separate object as {}
    // to avoid inlining and invariant code motion
    void noworkhelper();

    void work(int index)
    {
        for (int i = 0; i < WORK; i++)
        {
            noworkhelper();
        }
    }

    typedef void (*FNPTR) (int i);
    FNPTR Func = work;

    __declspec(noinline)
    void test_parallel_for()
    {
        parallel_for(0, SIZE_OF_ARRAY, Func);
    }

    __declspec(noinline)
    void test_omp_for()
    {
        #pragma omp parallel for
        for (int i = 0; i < SIZE_OF_ARRAY; i++)
        {
            Func(i);
        }
    }

    void run_data()
    {
        for (int i = 0; i < REPEAT; i++)
        {
            RUN_TEST();
        }
    }

    int main()
    {
        SchedulerPolicy policy(1, SchedulerKind, ThreadScheduler);
        Scheduler::SetDefaultSchedulerPolicy(policy);

        double etime = 0.0;
        long long start = counter();
        run_data();
        long long finish = counter();
        etime = (finish - start) * 1000.0 / frequency();

        printf("Elapsed time for test: %g ms\n", etime);

        Scheduler::ResetDefaultSchedulerPolicy();
        return 0;
    }
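For readers who want to try the same methodology outside Windows, the timing part of the harness can be sketched with std::chrono. This is an assumed portable equivalent, not the code used for the measurements that follow: Sample, measure and serial_run are hypothetical names, and steady_clock replaces QueryPerformanceCounter.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>

struct Sample {
    std::size_t repeats;   // how many times the experiment ran (dial R)
    double elapsed_ms;     // total wall-clock time in milliseconds
};

// Runs run_once() `repeats` times and reports the total elapsed time.
template <typename Runner>
Sample measure(std::size_t repeats, Runner run_once)
{
    const auto start = std::chrono::steady_clock::now();
    for (std::size_t r = 0; r < repeats; ++r) run_once();
    const auto finish = std::chrono::steady_clock::now();
    return {repeats,
            std::chrono::duration<double, std::milli>(finish - start).count()};
}

// Serial baseline: `iterations` loop iterations (dial I), each calling
// unit() `work` times (dial W).
template <typename Unit>
void serial_run(std::size_t iterations, std::size_t work, Unit unit)
{
    for (std::size_t i = 0; i < iterations; ++i)
        for (std::size_t w = 0; w < work; ++w) unit();
}
```

A call such as measure(1000, [&] { serial_run(500, 500, unit); }) corresponds to one cell of the serial row, provided that unit is an opaque function the compiler cannot inline or hoist, as the original program arranges with noworkhelper.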

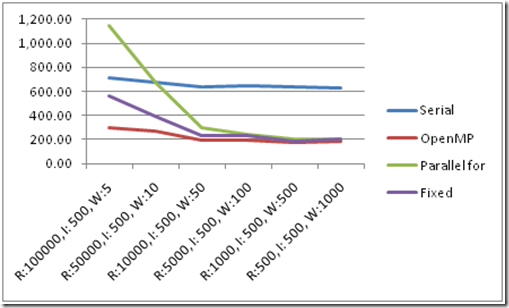

Before showing the results, it should be noted that these are pure overhead tests, designed to show costs related to creating, running and maintaining parallel regions. Here are the raw tables:

| | R:100000, I:500, W:5 | R:50000, I:500, W:10 | R:10000, I:500, W:50 | R:5000, I:500, W:100 | R:1000, I:500, W:500 | R:500, I:500, W:1000 |
| --- | --- | --- | --- | --- | --- | --- |
| Serial | 713.2 | 670.9 | 637.1 | 649.4 | 632.9 | 630.2 |
| OpenMP | 295.1 | 265.5 | 197.5 | 189.6 | 173.4 | 184.6 |
| Parallel for | 1142.1 | 671.7 | 293.7 | 241.3 | 202.5 | 200.9 |
| Fixed | 564.3 | 388.9 | 232.7 | 233.6 | 183.3 | 206.7 |

R – the number of repeats, I – the number of iterations, W – the number of units of work; all measured times are in milliseconds

Small number of iterations, small work

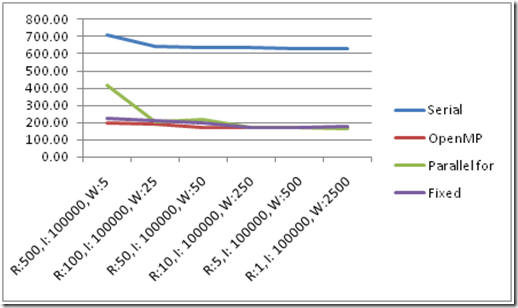

Now let’s look at what happens with a high number of iterations and a low amount of work. This will tell us how costly each iteration is to execute in OpenMP and parallel for.

| | R:500, I:100000, W:5 | R:100, I:100000, W:25 | R:50, I:100000, W:50 | R:10, I:100000, W:250 | R:5, I:100000, W:500 | R:1, I:100000, W:2500 |
| --- | --- | --- | --- | --- | --- | --- |
| Serial | 712.1 | 645.2 | 638.4 | 637.1 | 632.7 | 630.5 |
| OpenMP | 198.6 | 194.6 | 170.9 | 168.3 | 169 | 168.5 |
| Parallel for | 420.3 | 207.6 | 219.9 | 169.1 | 170.6 | 164.9 |
| Fixed | 226.6 | 213.6 | 196.8 | 169.1 | 172.9 | 179.5 |

R – the number of repeats, I – the number of iterations, W – the number of units of work; all measured times are in milliseconds

Large number of iterations, small work

Conclusion -- Parallel for is a general purpose mechanism for executing serial for-loops in parallel. It is up to the user to determine the legality and profitability of such a transformation. The parallel for construct increases the robustness and flexibility of parallelized code through built-in support for features such as cooperative blocking during iterations, immediate cancellation, signed integer iteration types with arbitrarily large ranges, dynamic redistribution of workload, and support for STL iterators. However, the generality of parallel for comes at a cost, and there are two types of overhead that make our parallel for implementation slower than OpenMP in some cases. The first is the initial setup of the worker threads and the range stealing mechanism; the second is the additional work done per iteration to support cancellation and range stealing. This may result in poor scaling of the parallel for-loop if the amount of work done per iteration is very small. If your program falls into that category and you would still like to see a speedup from executing in parallel, please use the specialized, fixed-partitioning version of parallel for, parallel_for_fixed, available in our samples collection. It will scale as well as OpenMP in cases where limiting overhead is essential.