Concurrency Runtime and Windows 7

Microsoft has recently released a beta of Windows 7, and a look at the official web site (https://www.microsoft.com/windows/windows-7/default.aspx) will show you a pretty impressive list of new features and usability enhancements. I encourage you to look them over for yourself, but in this article I’m going to focus on a couple of Win7 features that are particularly important for getting the most performance out of your parallel programs.

1. Support for more than 64 processors

2. User-Mode Scheduled Threads

Both of the new features I’m going to talk about will be supported in the Microsoft Concurrency Runtime, which will be delivered as part of Visual Studio 10.

An important note: both of these features are supported only on the 64-bit Windows 7 platform.

More Than 64 Processors

The most straightforward of these new features is Windows 7’s support for more than 64 processors. With earlier versions of Windows, even high-end servers could only schedule threads among a maximum of 64 processors. Windows 7 allows threads to run on more than 64 processors by letting a thread be affinitized to both a processor group and a processor index within that group. Each group can have up to 64 processors, and Windows 7 supports a maximum of 4 processor groups, for a total of 256 processors. The mechanics of this new OS support are detailed in a white paper which is available at https://www.microsoft.com/whdc/system/Sysinternals/MoreThan64proc.mspx.
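To make the group-plus-index addressing concrete, here is a minimal sketch in portable C++. The names `ProcessorAddress`, `to_address`, and `mask_in_group` are hypothetical and are not Windows API types, and the dense, in-order numbering of processors is an assumption made purely for illustration; Windows decides for itself how processors are assigned to groups.

```cpp
#include <cassert>
#include <cstdint>

// Limits taken from the text above: 64 processors per group, 4 groups.
const std::uint32_t kProcessorsPerGroup = 64;
const std::uint32_t kMaxGroups = 4;

// Hypothetical illustration type, not a Windows structure.
struct ProcessorAddress {
    std::uint32_t group;  // which processor group
    std::uint32_t index;  // processor index within that group, 0..63
};

// Maps a flat processor number (0..255) to a (group, index) pair,
// ASSUMING groups are filled densely and in order.
inline ProcessorAddress to_address(std::uint32_t flat) {
    assert(flat < kProcessorsPerGroup * kMaxGroups);
    return ProcessorAddress{flat / kProcessorsPerGroup,
                            flat % kProcessorsPerGroup};
}

// Builds a 64-bit affinity mask that selects a single processor
// inside its own group. One mask can never span two groups.
inline std::uint64_t mask_in_group(ProcessorAddress a) {
    assert(a.index < kProcessorsPerGroup);
    return std::uint64_t{1} << a.index;
}
```

Under these assumptions, flat processor 200 lands in group 3 at index 8. The point of the sketch is that a 64-bit mask alone cannot name a processor beyond the first 64, which is why the group number has to travel with it.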

However, unless you actively modify your application to affinitize work among the other processor groups, you’ll still be stuck with a maximum of 64 processors. The good news is that if you use the Microsoft Concurrency Runtime on Windows 7, you don’t need to be concerned at all with these gory details. As always, the runtime takes care of everything for you: it automatically determines the total amount of available concurrency (e.g., the total number of cores) and uses as many as it can during any parallel computation. This is an example of what we call a “light-up” scenario. Compile your Concurrency Runtime-enabled application once, and you can run it on everything from your Core 2 Duo up to your monster Win7 256-core server.
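The idea of discovering the available concurrency at run time, rather than baking a core count into the program, can be sketched with standard C++ threads. This is not the Concurrency Runtime and says nothing about how it is implemented; `parallel_sum` is a hypothetical helper, and the sketch assumes a C++11 compiler with thread support.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Sums `data` using one worker per hardware thread the platform reports.
// The same binary uses 2 workers on a dual-core machine and more on a
// larger one, without being recompiled.
long long parallel_sum(const std::vector<int>& data) {
    unsigned workers = std::thread::hardware_concurrency();
    if (workers == 0) workers = 1;  // the call may return 0 if the count is unknown

    std::vector<long long> partial(workers, 0);  // one result slot per worker
    std::vector<std::thread> pool;
    const std::size_t chunk = (data.size() + workers - 1) / workers;

    for (unsigned w = 0; w < workers; ++w) {
        const std::size_t begin = std::min(data.size(), w * chunk);
        const std::size_t end = std::min(data.size(), begin + chunk);
        pool.emplace_back([&data, &partial, w, begin, end] {
            // Each worker writes only to its own slot, so no locking is needed.
            partial[w] = std::accumulate(data.begin() + begin,
                                         data.begin() + end, 0LL);
        });
    }
    for (auto& t : pool) t.join();
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

A hand-rolled version like this spawns a fixed set of threads per call and does no load balancing; a runtime scheduler exists precisely so that application code does not have to manage those details.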

User Mode Scheduling

User Mode Scheduled Threads (UMS Threads) is another Windows 7 feature that “lights up” in the Concurrency Runtime.

As the name implies, UMS Threads are threads that are scheduled by a user-mode scheduler (like Concurrency Runtime’s scheduler), instead of by the kernel. Scheduling threads in user mode has a couple of advantages:

1. A UMS Thread can be scheduled without a kernel transition, which can provide a performance boost.

2. When a UMS Thread blocks for any reason, the scheduler can run other work on the same CPU, so the rest of the OS’s quantum is not wasted.

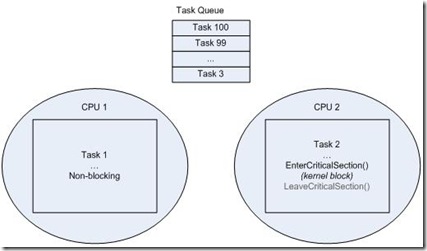

To illustrate the 2nd point, let’s assume a very simple scheduler with a single work queue. In this example, I’ll also assume that we have 100 tasks that can be run in parallel on 2 CPU’s.

Here we’ve started with 100 items in our task queue, and two threads have picked up Task 1 and Task 2 and are running them in parallel. Unfortunately, Task 2 is going to block on a critical section. Obviously, we would like the scheduler (i.e. the Concurrency Runtime) to use CPU 2 to run the other queued tasks while Task 2 is blocked. Alas, with ordinary Win32 threads, the scheduler cannot tell the difference between a task that is performing a very long computation and a task that is simply blocked in the kernel. The end result is that until Task 2 unblocks, the Concurrency Runtime will not schedule any more tasks on CPU 2. Our 2-core machine just became a 1-core machine, and in the worst case, all 99 remaining tasks will be executed serially on CPU 1.

This situation can be improved somewhat by using the Concurrency Runtime’s cooperative synchronization primitives (critical_section, reader_writer_lock, event) instead of Win32’s kernel primitives. These runtime-aware primitives will cooperatively block a thread, informing the Concurrency Runtime that other work can be run on the CPU. In the above example, Task 2 will cooperatively block, but Task 3 can be run on another thread on CPU 2. All this involves several trips through the kernel to block one thread and unblock another, but it’s certainly better than wasting the CPU.

The situation is improved even further on Windows 7 with UMS threads. When Task 2 blocks, the OS gives control back to the Concurrency Runtime. It can now make a scheduling decision and create a new thread to run Task 3 from the task queue. The new thread is scheduled in user mode by the Concurrency Runtime, not by the OS, so the switch is very fast. Both CPU 1 and CPU 2 can now be kept busy with the remaining 99 non-blocking tasks in the queue. When Task 2 gets unblocked, Win7 places its host thread back on a runnable list so that the Concurrency Runtime can schedule it, again from user mode, and Task 2 can be continued on any available CPU.
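The difference between the two behaviors can be put in numbers with a toy, tick-based simulation. Everything here is an assumption for illustration: `Task`, `simulate`, and `make_scenario` are hypothetical names, every task is given one tick of CPU work, Task 2 is assumed to block for 50 ticks, and context switches are treated as free. It models only the scheduling policy, not UMS itself.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

struct Task {
    int block_ticks;  // ticks spent blocked before the task can do its work
    int cpu_ticks;    // ticks of CPU work the task needs once unblocked
};

// Returns the number of ticks needed to finish every task.
// release_cpu_when_blocked == false: a blocked task keeps its CPU.
// release_cpu_when_blocked == true:  a blocked task is parked and the
//                                    CPU picks up the next queued task.
int simulate(std::vector<Task> tasks, int cpus, bool release_cpu_when_blocked) {
    assert(cpus > 0);
    std::deque<int> ready;  // single FIFO work queue of task indices
    for (int i = 0; i < static_cast<int>(tasks.size()); ++i) {
        assert(tasks[i].cpu_ticks > 0);
        ready.push_back(i);
    }
    std::vector<int> running(cpus, -1);  // task on each CPU, -1 means idle
    std::vector<int> parked;             // blocked tasks that gave up their CPU
    int remaining = static_cast<int>(tasks.size());
    int now = 0;

    while (remaining > 0) {
        // Hand queued tasks to idle CPUs.
        for (int c = 0; c < cpus; ++c) {
            while (running[c] == -1 && !ready.empty()) {
                int t = ready.front();
                ready.pop_front();
                if (release_cpu_when_blocked && tasks[t].block_ticks > 0) {
                    parked.push_back(t);  // CPU stays free for the next task
                } else {
                    running[c] = t;
                }
            }
        }
        // Advance parked tasks by one tick; unblocked ones rejoin the queue.
        for (std::size_t i = 0; i < parked.size();) {
            int t = parked[i];
            if (--tasks[t].block_ticks == 0) {
                ready.push_back(t);
                parked.erase(parked.begin() + i);
            } else {
                ++i;
            }
        }
        // Advance every CPU by one tick.
        for (int c = 0; c < cpus; ++c) {
            int t = running[c];
            if (t == -1) continue;
            if (tasks[t].block_ticks > 0) {
                --tasks[t].block_ticks;  // CPU is held but does no work
            } else if (--tasks[t].cpu_ticks == 0) {
                running[c] = -1;
                --remaining;
            }
        }
        ++now;
    }
    return now;
}

// The scenario from the text: 100 tasks on 2 CPUs, where Task 2 blocks.
std::vector<Task> make_scenario() {
    std::vector<Task> tasks(100, Task{0, 1});
    tasks[1].block_ticks = 50;  // assumed blocking time for Task 2
    return tasks;
}
```

With these assumed numbers, `simulate(make_scenario(), 2, false)` takes 75 ticks because CPU 2 sits idle behind the blocked task, while `simulate(make_scenario(), 2, true)` takes 51 ticks, which is simply the 50 ticks Task 2 spends blocked plus its one tick of work.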

You might say, “Hey, my task doesn’t do any kernel blocking, so does this still help me?” The answer is yes. First, it’s really difficult to know whether your task will block at all. If you call “new” or “malloc” you may block on a heap lock. Even if you don’t block, the operation might page-fault. An I/O operation will also cause a kernel transition. All of these occurrences can take significant time and can stall forward progress on the core on which they occur. They are opportunities for the scheduler to execute additional work on the now-idle CPU. Windows 7 UMS Threads enable these opportunities, and the result is greater task throughput and more efficient CPU utilization.

(Some readers may have noticed a superficial similarity between UMS Threads and Win32 Fibers. The key difference is that, unlike a Fiber, a UMS Thread is a bona fide thread, with a backing kernel thread, its own thread-local state, and the ability to run code that can call arbitrary Win32 APIs. Fibers have severe restrictions on the kinds of Win32 APIs they can call.)

Obviously the above example is highly simplified. A UMS Thread scheduler is an extremely complex piece of software to write, and managing state when an arbitrary page fault can swap you out is challenging to say the least. However, once again users of the Concurrency Runtime don’t have to be concerned with any of these gory details. Write your programs once using PPL or Agents, and your code will run using Win32 threads or UMS Threads.

For more details about UMS Threads, check out Inside Windows 7 - User Mode Scheduler (UMS) with Windows Kernel architect Dave Probert on Channel9.

Better Together

Windows 7 by itself is a great enabler of fine-grained parallelism, thanks to the two features described above as well as some other performance improvements made at the kernel level. The Concurrency Runtime helps put the full potential of Windows 7 into the hands of programmers in a very simple and powerful way.