Creating distributed workflows with Azure Durable Functions

Editor's note: The following post was written by Visual Studio and Development Technologies MVP Tamir Dresher as part of our Technical Tuesday series. Mia Chang of the MVP Award Blog Technical Committee served as the technical reviewer for this piece.

Creating a distributed system is not an easy task. Besides writing the code that implements the functionality you want, you must protect against the inevitable faults that a distributed system brings, such as network loss, VM shutdowns due to service upgrades, and latency, to name a few. You can probably think of more examples, many of them caused by the fallacies of distributed computing.

Azure Functions and the trend toward serverless architecture have opened the door to a new and exciting world of opportunities, allowing developers to create cloud applications easily and rapidly.

However, one area that serverless architecture doesn't make easier is the orchestration of long-running distributed processes. Orchestration, in this sense, is your process's ability to span multiple functions that might depend on one another (for example, when the output of one function must be sent as the input to another), while making sure that all the parts work together in a synchronized and consistent way. All this must happen while remaining resilient to the faults that can occur when you run in a cloud environment.

Developers have been struggling to create distributed workflows for decades. Examples of such workflows are billing processes, multi-factor authentication (MFA), and loan-request validation. All these workflows span multiple services and can last anywhere from a few seconds to a few days.

Patterns for building distributed workflows were introduced over the years, such as message-based communication and idempotent requests. Still, developers had to write the same boilerplate code to apply those concepts, which made their code harder to understand and more error-prone.

Azure Durable Functions

Azure Durable Functions is a new and exciting feature in the serverless computing sphere. Unlike traditional functions, which are stateless and run independently, Durable Functions lets you create workflows that span and coordinate multiple workers through two new trigger bindings, while still keeping your algorithm correct and your deployments agile.

Behind the scenes, Azure Durable Functions creates queues and tables on your behalf and hides that complexity from your code, so you can concentrate on the real problem you're trying to solve.

Disclaimer: At the time of writing, Azure Durable Functions is still in beta and is not production ready. Therefore, the things written in this article are subject to change.

To start using Azure Durable Functions, you need to follow the installation steps described here: https://azure.github.io/azure-functions-durable-extension/articles/installation.html

Hello Durable Functions

Let's look at the basic "Hello World" example, and then introduce you to the new concepts that Azure Durable Functions brings.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Host;

public static class HelloSequence
{
    // Orchestrator: defines the overall flow by calling the "Say" activity three times
    [FunctionName("HelloWorld")]
    public static async Task<List<string>> HelloWorld(
        [OrchestrationTrigger] DurableOrchestrationContext context,
        TraceWriter log)
    {
        var outputs = new List<string>();

        // Each call schedules the activity; the orchestrator resumes when the result arrives
        outputs.Add(await context.CallFunctionAsync<string>("Say", "Hello"));
        outputs.Add(await context.CallFunctionAsync<string>("Say", " "));
        outputs.Add(await context.CallFunctionAsync<string>("Say", "World!"));

        log.Info(string.Concat(outputs));

        // returns ["Hello", " ", "World!"]
        return outputs;
    }

    // Activity: logs the word it receives and returns it to the orchestrator
    [FunctionName("Say")]
    public static string Say(
        [ActivityTrigger] DurableActivityContext activityContext,
        TraceWriter log)
    {
        string word = activityContext.GetInput<string>();
        log.Info(word);
        return word;
    }
}
```

In this example, two Azure Functions interact with one another. The HelloWorld function is the orchestrator, which determines the overall flow: it calls the Say function three times to build the sentence "Hello World!", passing one part of the sentence in each call. The Say function is the activity: it logs the word it receives as input and then returns it to the caller.

What makes this code different from regular functions is what happens on each call to Say: the Say function starts executing on some worker node, and meanwhile the HelloWorld orchestrator is dormant and its resources are freed. When Say completes, the state of HelloWorld is restored and its execution resumes.

How to write durable functions

The Azure Durable Functions library adds two trigger bindings that the runtime uses to identify which functions should be treated as durable:

- OrchestrationTrigger – all orchestrator functions must use this trigger type. This input binding is connected to the DurableOrchestrationContext class, which the orchestrator uses to call durable activities and create durable control flow.

- ActivityTrigger – marks a function as an activity, which allows it to be called by an orchestrator function. This input binding is connected to the DurableActivityContext class, which allows the activity function to get its input and set its output.

These two triggers and their related classes are the basic elements you need to write durable functions, but there are a few guidelines to remember. First and foremost, the orchestrator function must be deterministic. This means the function must avoid non-deterministic code, such as reading DateTime.Now, and use the functionality provided by DurableOrchestrationContext instead (for example, CurrentUtcDateTime, which provides the current date and time in a safe and durable way). It also means you should avoid I/O operations (for example, using HttpClient or File.Open()) other than calling durable activities through DurableOrchestrationContext.CallFunctionAsync().

The correct way to run non-deterministic or I/O-bound code is to put it inside an activity function.
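Why does DateTime.Now break an orchestrator? Because the orchestrator is re-executed from the start every time it resumes, any value it reads must come out the same on every execution. The following self-contained C# sketch is a hypothetical simulation that does not use the Durable Functions library: the first execution records the clock value in an in-memory history, and a replay reads the recorded value back instead of querying the clock again. It only illustrates the idea behind a replay-safe clock like CurrentUtcDateTime; the names history, ReplaySafeUtcNow and RunOrchestrator, and the two reminder activities, are made up for the example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory history of clock readings (not the real storage format).
var history = new List<DateTime>();
int cursor = 0;

// Returns the recorded time during a replay; otherwise reads the real clock
// and records the value so that later replays see exactly the same time.
DateTime ReplaySafeUtcNow(Func<DateTime> realClock)
{
    if (cursor < history.Count)
        return history[cursor++];

    DateTime now = realClock();
    history.Add(now);
    cursor++;
    return now;
}

// A toy orchestrator body that branches on the current time.
string RunOrchestrator(Func<DateTime> realClock)
{
    cursor = 0; // every execution starts again from the beginning of the history
    DateTime now = ReplaySafeUtcNow(realClock);
    return now.Hour < 12 ? "SendMorningReminder" : "SendEveningReminder";
}

// First execution at 09:00; replay later, on another VM, at 15:00.
string first = RunOrchestrator(() => new DateTime(2017, 1, 1, 9, 0, 0, DateTimeKind.Utc));
string replay = RunOrchestrator(() => new DateTime(2017, 1, 1, 15, 0, 0, DateTimeKind.Utc));

Console.WriteLine($"{first} / {replay}"); // SendMorningReminder / SendMorningReminder
```

If RunOrchestrator called DateTime.UtcNow directly, the replay at 15:00 would take the evening branch and no longer match what the first execution had already decided.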

Executing the orchestrator function

So far I've shown you how to write durable functions; now I'll show you how to actually run them.

An orchestrator function is started by using the DurableOrchestrationClient. You obtain an instance of this class by applying the OrchestrationClient binding to a parameter of a regular Azure Function, for example one that is triggered by an HttpTrigger.

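```csharp
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;

public static class HelloSequence
{
    // HTTP-triggered starter function that launches the HelloWorld orchestrator
    [FunctionName("HelloWorldExecutor")]
    public static async Task<HttpResponseMessage> ExecuteHelloWorld(
        [HttpTrigger(AuthorizationLevel.Anonymous, methods: "post", Route = "HelloWorld")] HttpRequestMessage req,
        [OrchestrationClient] DurableOrchestrationClient client)
    {
        // The HelloWorld orchestrator does not need any input
        object functionInput = null;

        // Start a new orchestration instance; the returned ID identifies it
        string instanceId =
            await client.StartNewAsync(nameof(HelloWorld), functionInput);

        return req.CreateResponse(HttpStatusCode.OK,
            $"New HelloWorld instance created with ID = '{instanceId}'");
    }

    // rest of the class implementation
}
```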

In this example, I created an HTTP triggered function and bound one of its parameters to the DurableOrchestrationClient by specifying the OrchestrationClient attribute.

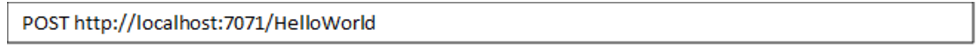

The function is invoked by sending an HTTP POST request to https://{host}/HelloWorld. The ExecuteHelloWorld function starts a new instance of the HelloWorld orchestrator function by calling the DurableOrchestrationClient.StartNewAsync() method, passing it the name of the function and an input, which is null in this case.

Here is a sample request:

Here’s the response:

Besides starting new orchestrator functions, the DurableOrchestrationClient provides the following operations:

- GetStatusAsync – returns the status of an orchestration instance, including its result if it completed successfully or the error message if it failed.

- TerminateAsync – abruptly stops a running orchestration instance.

- RaiseEventAsync – sends an event to an orchestration instance. This allows you to control the behavior of a function by signaling it from outside, for example with a human-driven interaction such as one received from a UI.

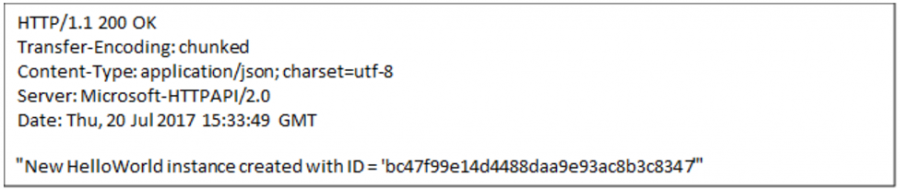

The operations above can also be exposed as REST APIs simply by calling the DurableOrchestrationClient.CreateCheckStatusResponse() method. If I change the return statement in the ExecuteHelloWorld function to return client.CreateCheckStatusResponse(req, instanceId), the response from executing the function changes to this (trimmed for brevity):

I've bolded the important parts for clarity. The Location header shows the URI of the instance of the HelloWorld orchestrator function. With this URI we can check the status of the execution (shown in statusQueryGetUri), signal the workflow with an event (shown in sendEventPostUri), or stop the execution (shown in terminatePostUri).

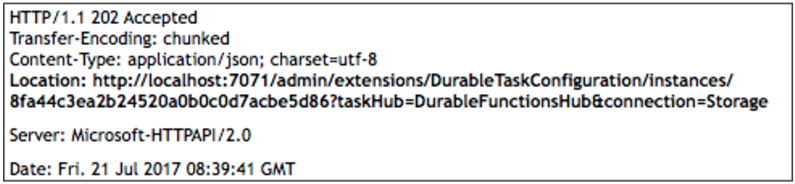

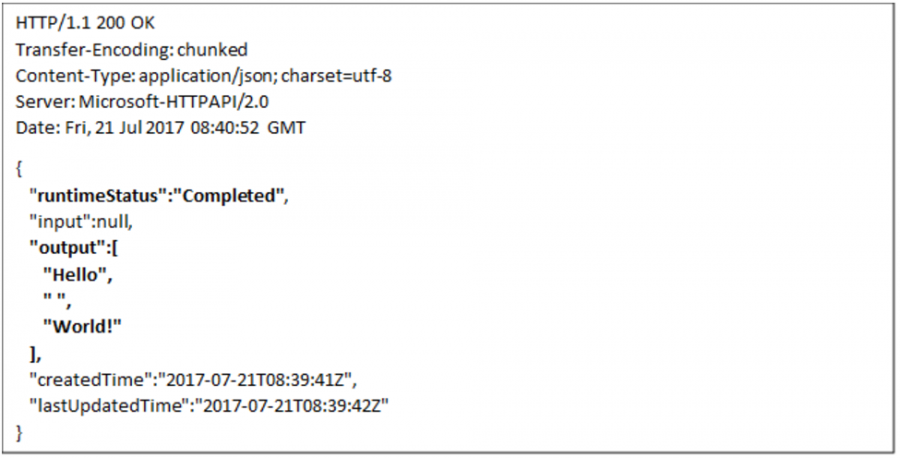

For example, sending an HTTP GET request to the statusQueryGetUri after the function has completed yields these results:

Request:

Response:

Looking under the hood: What makes Durable Functions durable?

Durable Communication

You might be wondering what "durable" stands for. To understand where the durability comes from, let's look under the hood of Azure Durable Functions.

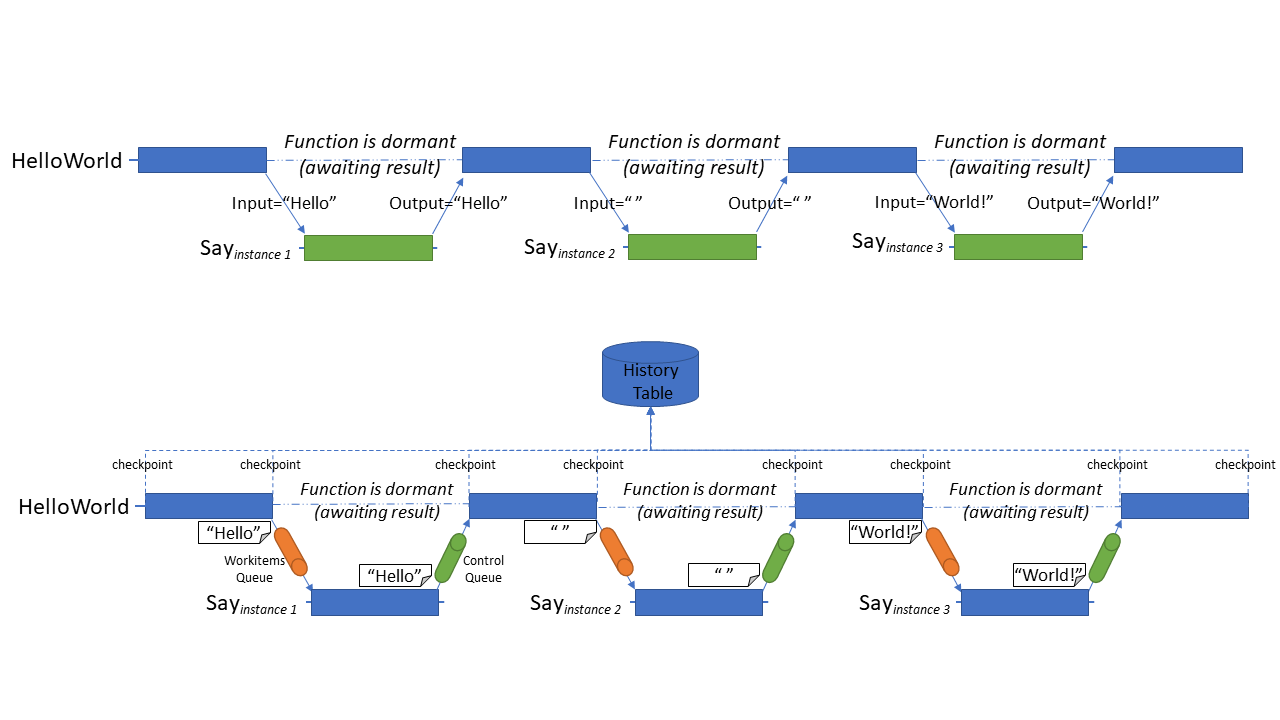

Although the HelloWorld function is very simple, what happens under the covers is quite interesting. Here's an illustration of what's really going on.

All communication between the orchestrator function and the activity functions is done through queues. Two types of queues are used:

- Work-item queue – used by the orchestrator to request processing by an activity function.

- Control queue – multiple instances of this queue type exist; they hold the messages that control orchestrator functions.

Whenever an activity function is called through the DurableOrchestrationContext.CallFunctionAsync() method, a message is enqueued to the work-item queue. The work-item queue is monitored by multiple workers to achieve scalability, and the message triggers the execution of an activity function.

When the activity function completes, the result is sent back to the orchestrator function through a control queue. More than one control queue might exist at the same time to achieve scalability, but the result is always sent to the queue associated with the orchestrator instance that originated the request.
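The routing described above can be pictured with a small in-memory model. The sketch below is hypothetical: plain Queue<T> objects stand in for the storage queues, the message shapes are invented, and an FNV-1a hash of the instance ID chooses the control queue. The extension's actual partitioning scheme may differ; the point is only that every activity result goes back to the control queue that owns the instance that requested it, no matter which worker ran the activity.

```csharp
using System;
using System.Collections.Generic;

const int ControlQueueCount = 4;

// Hypothetical in-memory stand-ins for the work-item queue and the control queues.
var workItems = new Queue<(string InstanceId, string Activity, string Input)>();
var controlQueues = new List<Queue<(string InstanceId, string Result)>>();
for (int i = 0; i < ControlQueueCount; i++)
    controlQueues.Add(new Queue<(string InstanceId, string Result)>());

// Stable FNV-1a hash, so an instance ID always maps to the same control queue.
// string.GetHashCode() is avoided because it is randomized per process in .NET Core.
int ControlQueueFor(string instanceId)
{
    uint hash = 2166136261;
    foreach (char c in instanceId)
    {
        hash ^= c;
        hash = unchecked(hash * 16777619);
    }
    return (int)(hash % ControlQueueCount);
}

// Orchestrator side: calling an activity enqueues a work item.
void CallActivity(string instanceId, string activity, string input) =>
    workItems.Enqueue((instanceId, activity, input));

// Worker side: run each activity and route its result to the control queue
// that owns the originating orchestration instance.
void ProcessWorkItems(Func<string, string, string> runActivity)
{
    while (workItems.Count > 0)
    {
        var item = workItems.Dequeue();
        string result = runActivity(item.Activity, item.Input);
        controlQueues[ControlQueueFor(item.InstanceId)].Enqueue((item.InstanceId, result));
    }
}

CallActivity("instance-a", "Say", "Hello");
CallActivity("instance-b", "Say", "World!");
ProcessWorkItems((activity, input) => input); // the "Say" activity echoes its input

for (int i = 0; i < ControlQueueCount; i++)
    foreach (var message in controlQueues[i])
        Console.WriteLine($"control queue {i}: {message.InstanceId} -> {message.Result}");
```

Because the mapping depends only on the instance ID, any worker can compute where a result belongs without coordinating with the orchestrator.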

Besides results from activity functions, the control queues carry other control messages to the orchestrator function, such as requests to start a new instance, timer messages, and external events.

One of the great benefits of message-based communication through queues is that if a fault stops a function while it is processing a message, the message isn't lost. Another instance can pick up the message and process it, and this is what makes the communication durable.
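This durability comes from a receive-then-delete protocol: receiving a message only hides it for a while, and the worker deletes it after processing succeeds. The following in-memory model is a hypothetical sketch of that protocol; Receive, Delete and ExpireInvisibleMessages are invented names, not the Azure Storage SDK API. Worker 1 crashes before deleting the message, the hidden message becomes visible again, and worker 2 processes it.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory model of a queue with receive-then-delete semantics.
var visible = new Queue<string>();   // messages any worker can receive
var invisible = new List<string>();  // received and hidden, but not yet deleted
var processed = new List<string>();

// Receiving hides the message instead of removing it.
string Receive()
{
    string message = visible.Dequeue();
    invisible.Add(message);
    return message;
}

// Deleting is the worker's last step, done only after processing succeeded.
void Delete(string message) => invisible.Remove(message);

// Stands in for the visibility timeout elapsing: unfinished messages reappear.
void ExpireInvisibleMessages()
{
    foreach (string message in invisible)
        visible.Enqueue(message);
    invisible.Clear();
}

void RunWorker(string name, bool crashBeforeDelete)
{
    string message = Receive();
    if (crashBeforeDelete)
    {
        Console.WriteLine($"{name} crashed while handling '{message}'");
        return; // Delete is never reached, so the message survives
    }
    processed.Add(message);
    Delete(message);
    Console.WriteLine($"{name} processed '{message}'");
}

visible.Enqueue("Say:Hello");
RunWorker("worker-1", crashBeforeDelete: true);  // worker-1 crashed while handling 'Say:Hello'
ExpireInvisibleMessages();
RunWorker("worker-2", crashBeforeDelete: false); // worker-2 processed 'Say:Hello'
```

A consequence of this design is at-least-once delivery: if a worker crashes after doing its work but before deleting the message, the work runs again. That is one reason the idempotency pattern mentioned earlier still matters for activity functions.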

Durable State with Checkpoints

I mentioned before that while the orchestrator function awaits a call to an activity function, its resources are freed, and after the result is received the orchestrator resumes.

This raises the question: how is the state maintained and restored if the VM that executed the function isn't there anymore?

Azure Durable Functions uses a technique called Checkpoint & Replay. In a nutshell, the framework uses a history table that tracks the progress of the function by adding a checkpoint whenever the state changes. Whenever the function needs to resume, it is executed again from the beginning and the recorded state changes are replayed.

The history table follows the Event Sourcing pattern: instead of saving the final state, every change to the state, including the results received from activity functions, is appended to the table. When the function is replayed and reaches a call to an activity function that has already completed, the activity is not executed again; its previous result is returned immediately from the history. This makes the orchestrator function durable and reliable, and, as long as the orchestrator code is deterministic, the replay reconstructs the same state regardless of whether the VM executing the function has changed.
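Checkpoint & Replay can be illustrated with the HelloWorld flow from earlier. The sketch below is a hypothetical, self-contained simulation rather than the extension's implementation: the history is an in-memory list that stores only activity results (the real history records richer events), the Say activity runs inline instead of on another worker, and RunEpisode stands for one execution of the orchestrator from the beginning. Each episode replays recorded results and stops at the first call whose result is not yet in the history.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical event-sourced history: one recorded result per completed activity.
var history = new List<string>();
int activityExecutions = 0;

// The "Say" activity from the HelloWorld example; counts real executions.
string Say(string word)
{
    activityExecutions++;
    return word;
}

// One episode of the orchestrator: it always starts from the beginning.
(bool Completed, List<string> Outputs) RunEpisode()
{
    var outputs = new List<string>();
    foreach (string word in new[] { "Hello", " ", "World!" })
    {
        int step = outputs.Count;
        if (step < history.Count)
        {
            outputs.Add(history[step]); // replay: reuse the recorded result
            continue;
        }
        // First time at this call: run the activity (inline here, on another
        // worker in the real system) and checkpoint its result.
        history.Add(Say(word));
        return (false, outputs);        // the orchestrator goes dormant
    }
    return (true, outputs);
}

int episodes = 0;
(bool Completed, List<string> Outputs) result;
do
{
    episodes++;
    result = RunEpisode();
} while (!result.Completed);

Console.WriteLine($"{string.Concat(result.Outputs)} | episodes: {episodes}, activity executions: {activityExecutions}");
// Hello World! | episodes: 4, activity executions: 3
```

The orchestrator body runs four times but each activity runs only once, because replayed calls are answered from the history. This is also why the body must be deterministic: a replay that took a different path would no longer line up with the recorded results.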

Summary

Developing distributed systems has always been challenging, and as developers we are happy to use tools that make our tasks easier. Azure Durable Functions makes developing distributed workflows almost as easy as if they were running sequentially in a single process.

Azure Durable Functions is still young, and some things (versioning, for example) still need to mature before it can be used in production, but its potential in the world of cloud applications is impressive. I encourage you to follow its development in the GitHub repository https://github.com/Azure/azure-functions-durable-extension and to participate in the discussions that can shape its future.

Tamir Dresher is a software architect, consultant, instructor and technology addict who works as Senior Architect at CodeValue Israel. Tamir is a Microsoft MVP in Visual Studio and Development Technologies and is the author of the book Rx.NET in Action, published by Manning. Tamir teaches software engineering at the Ruppin Academic Center and is a regular speaker at local user groups and international conferences. Follow him on Twitter @tamir_dresher
