ReFS: Log-Structured Performance And I/O Behavior

Anton Kolomyeytsev is the CEO, Chief Architect & Co-Founder of StarWind Inc. Anton is a Microsoft Most Valuable Professional [MVP], and a well-known virtualization expert. He is the person behind 99% of StarWind's technologies.


Follow him on Twitter @RedEvolutionIX

Microsoft introduced the Resilient File System (ReFS) with Windows Server 2012 as the intended successor to NTFS. ReFS is a local file system that provides advanced protection against common errors and silent data corruption. What makes it unique is that it is designed to deliver scalability and performance for constantly growing data sets and dynamic workloads. It can repair corrupted data on the fly, using the alternate copy provided by Microsoft Storage Spaces. If the damage cannot be repaired automatically, ReFS salvages the volume by isolating the corrupted area, which keeps the volume online and avoids downtime.

ReFS can provide metadata protection, and optional data protection, for every volume, directory, or file. It also features error scanning: a data-integrity scanner (scrubber) inspects the volume to identify latent corruption and initiates the repair process. The file system is also highly scalable, supporting file sizes of up to 16 exabytes and a maximum theoretical volume size of 1 yottabyte (one trillion terabytes).
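The scan-and-repair cycle can be illustrated with a toy model that uses a mirrored alternate copy, like the one Storage Spaces provides. This is a minimal sketch under stated assumptions and not the ReFS implementation. We assume a two-way mirror and one stored 64-bit checksum per block. BLAKE2b truncated to 8 bytes serves as a stand-in, because the actual ReFS algorithm is not covered here. A hypothetical `scrub()` function walks every block, detects latent corruption, and rewrites any bad copy from a good one.

```python
import hashlib


def checksum64(block: bytes) -> bytes:
    # Stand-in 64-bit checksum (BLAKE2b, 8-byte digest); not ReFS's actual algorithm.
    return hashlib.blake2b(block, digest_size=8).digest()


class MirroredVolume:
    """Toy two-way mirror: each block has two copies plus one stored checksum."""

    def __init__(self) -> None:
        self.copies = [{}, {}]   # one dict per mirror copy: block address -> data
        self.checksums = {}      # block address -> checksum, kept apart from the data

    def write(self, addr: int, data: bytes) -> None:
        for copy in self.copies:
            copy[addr] = data
        self.checksums[addr] = checksum64(data)

    def scrub(self) -> list:
        """Verify every copy of every block and repair bad copies from a good one.

        Returns the (address, copy index) pairs that were repaired.
        """
        repaired = []
        for addr, expected in self.checksums.items():
            good = [i for i, c in enumerate(self.copies) if checksum64(c[addr]) == expected]
            if not good:
                # No valid copy left; a real file system would have to salvage here.
                continue
            source = self.copies[good[0]][addr]
            for i, copy in enumerate(self.copies):
                if i not in good:
                    copy[addr] = source
                    repaired.append((addr, i))
        return repaired


if __name__ == "__main__":
    vol = MirroredVolume()
    vol.write(7, b"payload")
    vol.copies[1][7] = b"paylaod"  # simulate silent corruption in the second copy
    print(vol.scrub())             # [(7, 1)]
```

Verifying against a separately stored checksum, rather than comparing the two copies with each other, is what lets the scrubber tell which copy is the good one.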

All ReFS metadata is protected by a 64-bit checksum, which is stored separately from the file data. File data can also have its own checksum, kept in a separate integrity stream. With FileIntegrity on, ReFS automatically verifies these checksums to check data integrity. With ReFS's predecessors, by contrast, administrators had to run CHKDSK or similar utilities manually for this purpose. So, with FileIntegrity on, the system “knows” about any data corruption. With FileIntegrity off, it doesn't.
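The practical difference between FileIntegrity off and on can be illustrated with a toy model. In this minimal, hypothetical sketch, a volume with integrity enabled keeps a separate checksum per block and verifies it on every read, so silent corruption surfaces as an error. With integrity disabled, the corrupted bytes come back as if they were valid. The class names, the block-level granularity, and the BLAKE2b-based 64-bit checksum are all assumptions made for illustration. On a real system, integrity streams are configured per file or per volume, for example with the PowerShell `Set-FileIntegrity` cmdlet. This sketch does not model that.

```python
import hashlib


def checksum64(block: bytes) -> bytes:
    # Stand-in 64-bit checksum (BLAKE2b, 8-byte digest); not ReFS's actual algorithm.
    return hashlib.blake2b(block, digest_size=8).digest()


class IntegrityError(OSError):
    """Raised when stored data no longer matches its checksum."""


class ToyVolume:
    def __init__(self, file_integrity: bool) -> None:
        self.file_integrity = file_integrity
        self.blocks = {}      # block address -> data
        self.checksums = {}   # separate "integrity stream": address -> checksum

    def write(self, addr: int, data: bytes) -> None:
        self.blocks[addr] = data
        if self.file_integrity:
            self.checksums[addr] = checksum64(data)

    def read(self, addr: int) -> bytes:
        data = self.blocks[addr]
        # Only an integrity-enabled volume can notice that the data has changed.
        if self.file_integrity and checksum64(data) != self.checksums[addr]:
            raise IntegrityError(f"checksum mismatch at block {addr}")
        return data


if __name__ == "__main__":
    for mode in (False, True):
        vol = ToyVolume(file_integrity=mode)
        vol.write(0, b"important data")
        vol.blocks[0] = b"importent data"  # silent bit rot on the medium
        try:
            print(mode, vol.read(0))
        except IntegrityError as err:
            print(mode, "detected:", err)
```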

We were curious about how ReFS works and decided to explore it through testing. We looked at how well ReFS performs under a typical virtualization workload and ran the tests in several stages.

The first test was intended to study I/O behavior in ReFS with the FileIntegrity option off and on. Our aim was to explore how the scanning and repair processes influence it. The full testing procedure is described in the original article linked at the end of this post.

The second test was designed to explore ReFS performance, again with the FileIntegrity option off and on.

Test 1: I/O Behavior

We decided to check whether the FileIntegrity option makes a difference for random I/O, which is typical of virtualization workloads. We therefore examined how a ReFS-formatted disk handled random 4K block writes, first with FileIntegrity off and then with FileIntegrity on.
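For readers unfamiliar with the access pattern, the sketch below shows what "random 4K block writes" means in terms of the requests the disk receives. Each request is 4096 bytes long and starts at a random 4096-byte-aligned offset within the test file. The generator is a hypothetical stand-in. It does not reproduce Iometer's implementation or its configuration format, and the file size, request count, and seed are arbitrary assumptions.

```python
import random

BLOCK = 4096  # 4K request size in bytes


def random_4k_writes(file_size: int, count: int, seed: int = 0) -> list:
    """Return `count` (offset, length) write requests, 4K each, at random
    4K-aligned offsets inside a file of `file_size` bytes."""
    rng = random.Random(seed)
    slots = file_size // BLOCK  # number of 4K slots in the file
    return [(rng.randrange(slots) * BLOCK, BLOCK) for _ in range(count)]


if __name__ == "__main__":
    for offset, length in random_4k_writes(file_size=1 << 30, count=5):
        print(f"WRITE offset={offset:>12} length={length}")
```

Consecutive requests almost never land next to each other. That is why such a stream is hard on a disk unless something below the application reorders or merges it.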

To monitor the tests, we used two Sysinternals utilities: DiskMon, which logs and displays all HDD activity on a system, and ProcMon, which shows real-time file system, registry, and process/thread activity.

The workload was generated with Iometer.

The testing procedure was as follows:

- First, we formatted the disk with ReFS, with FileIntegrity off. We then started DiskMon and configured it to monitor the target HDD, and started ProcMon with a filter for the Iometer test file. Afterwards, we ran Iometer with a random 4K access pattern and collected the utility logs.

- Next, we formatted the disk with ReFS again, this time with FileIntegrity on. As in the previous step, we started DiskMon to monitor the target HDD and ProcMon with a filter for the Iometer test file. We then ran Iometer with a random 4K access pattern and collected the utility logs.

- Finally, we exported the logs into Microsoft Excel and compared the HDD activity in both scenarios.

What we observed during the testing:

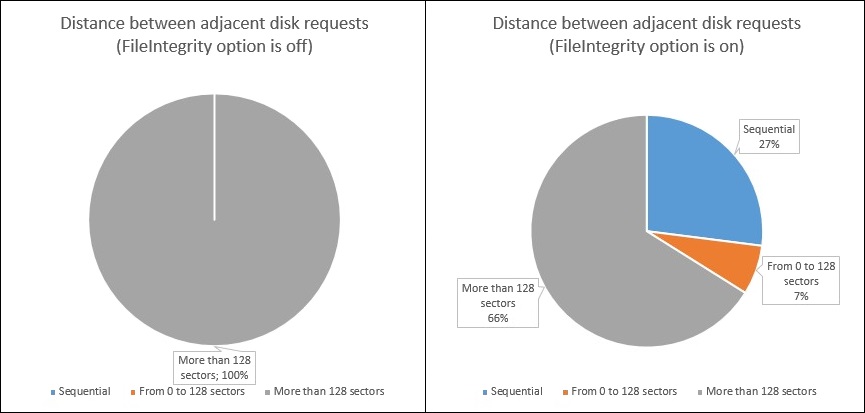

- With FileIntegrity off, all random-address requests reached the target physical disk in the same order in which they were issued. With FileIntegrity on, part of them (27%) were transformed into sequential requests.

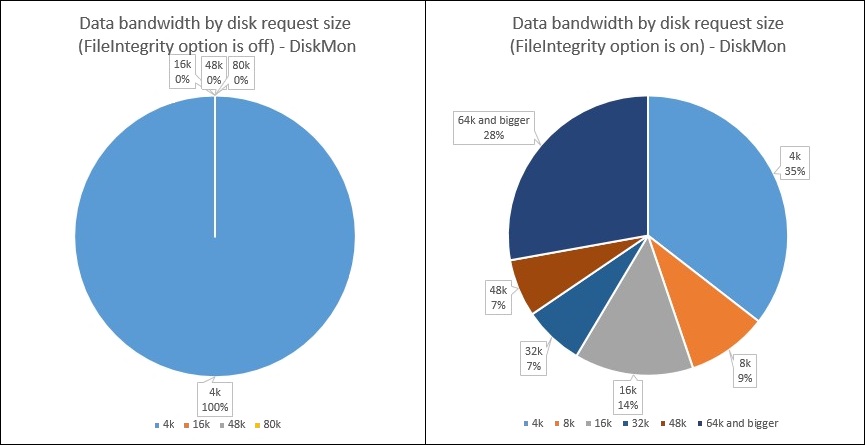

- With FileIntegrity off, the 4K write requests reached the target disk unchanged. With FileIntegrity on, 35% of the requests were passed through as is, and the rest were transformed into requests with larger block sizes.
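The two percentages above come from comparing request logs. Here is a minimal sketch of that kind of analysis. It assumes the DiskMon export has already been reduced to a list of (offset, length) pairs in bytes. The real export has more columns, and its format is not reproduced here. A request counts as sequential if it starts exactly where the previous one ended, and as a 4K request if its length is 4096 bytes. These definitions and the function names are assumptions, not the exact criteria used in the original spreadsheet analysis.

```python
def sequential_fraction(requests) -> float:
    """Share of requests (after the first) that start where the previous one ended."""
    if len(requests) < 2:
        return 0.0
    sequential = sum(
        1
        for (prev_off, prev_len), (off, _) in zip(requests, requests[1:])
        if off == prev_off + prev_len
    )
    return sequential / (len(requests) - 1)


def fraction_of_size(requests, size: int = 4096) -> float:
    """Share of requests whose length equals `size` bytes."""
    if not requests:
        return 0.0
    return sum(1 for _, length in requests if length == size) / len(requests)


if __name__ == "__main__":
    # Hypothetical log: two random 4K writes, then a 64K write followed by a
    # contiguous 32K write.
    log = [(819200, 4096), (40960, 4096), (1048576, 65536), (1114112, 32768)]
    print(f"sequential: {sequential_fraction(log):.0%}")  # sequential: 33%
    print(f"4K-sized:   {fraction_of_size(log):.0%}")     # 4K-sized:   50%
```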

For more details and the whole testing procedure, see the full article linked at the end of this post.


Test 2: Performance

To see how ReFS performs with FileIntegrity off and on, we tested the hard drive in the following scenarios:

- Unformatted (RAW)

- NTFS-formatted

- ReFS-formatted with FileIntegrity off

- ReFS-formatted with FileIntegrity on

As in the previous test, the workload was generated with Iometer.

The testing results showed:

- The NTFS-formatted disk and the RAW disk performed almost identically. Request sizes and request sequences were the same in both cases. The explanation is that NTFS, as a conventional file system, does not change the request sequence or the request pattern.

- With FileIntegrity off, the ReFS-formatted disk performed the same as the RAW and NTFS-formatted disk. The size of I/O blocks did not change, and there were no considerable changes in the pattern either. There were also no changes in free disk space, which is typical of a conventional file system.

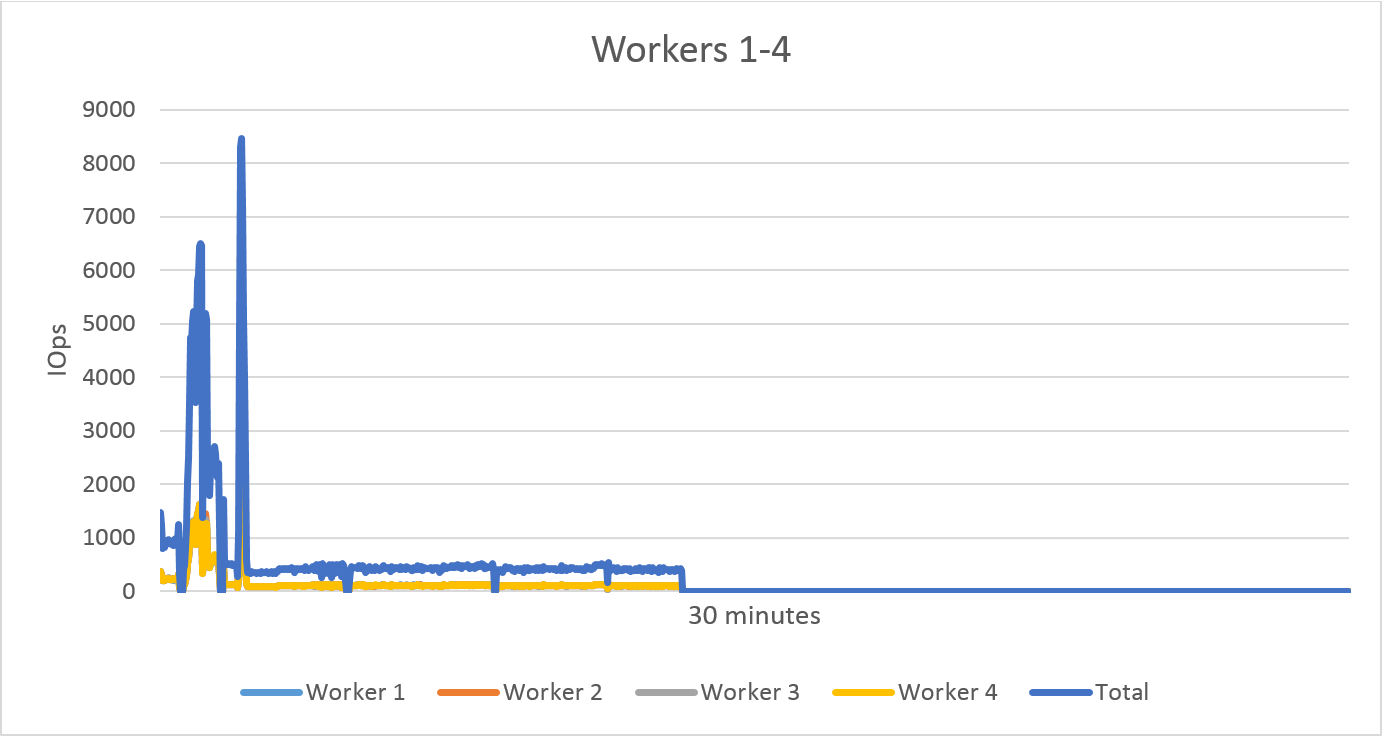

- With FileIntegrity on, the ReFS-formatted disk first showed a strong increase in performance. After a while, the values returned to normal, and in the end the system froze, with performance dropping to zero, as shown in the diagram above. Free disk space fluctuated (within 1 GB), which is characteristic of a log-structured file system.
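Why would free space fluctuate on a log-structured file system? A toy model helps. Every overwrite is appended at the head of the log instead of updating the block in place. The old version keeps occupying space until a cleaner (garbage collector) reclaims it. Free space therefore shrinks as overwrites accumulate and jumps back after each cleaning pass. This is a simplified, hypothetical model with made-up capacity and cleaning thresholds. It does not describe how ReFS actually allocates or reclaims space.

```python
import random


class ToyLog:
    """Minimal log-structured store: overwrites append; cleaning reclaims stale blocks."""

    def __init__(self, capacity_blocks: int, clean_below: int) -> None:
        self.capacity = capacity_blocks
        self.clean_below = clean_below  # run the cleaner when free space drops below this
        self.live = {}                  # logical block -> physical slot of its latest copy
        self.used = 0                   # physical slots consumed (live + stale)

    def free(self) -> int:
        return self.capacity - self.used

    def write(self, logical: int) -> None:
        if self.free() < self.clean_below:
            self.clean()
        if self.free() == 0:
            raise OSError("log full")
        self.live[logical] = self.used  # append at the log head; any older copy is now stale
        self.used += 1

    def clean(self) -> None:
        # Compact: move only live blocks to the start of the log, dropping stale copies.
        for new_slot, logical in enumerate(sorted(self.live, key=self.live.get)):
            self.live[logical] = new_slot
        self.used = len(self.live)


if __name__ == "__main__":
    rng = random.Random(42)
    log = ToyLog(capacity_blocks=1000, clean_below=100)
    samples = []
    for i in range(5000):
        log.write(rng.randrange(500))  # random overwrites of 500 logical blocks
        if i % 250 == 0:
            samples.append(log.free())
    print(samples)  # free space rises and falls instead of staying constant
```

An in-place file system would show constant free space in the same scenario, because an overwrite reuses the block it replaces.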

Conclusion

With FileIntegrity off, ReFS behaves much like the conventional file systems that preceded it, such as NTFS. Our testing confirmed this, both in the way random write requests were processed and in the unchanged free disk space. In this mode, ReFS is well suited to modern high-capacity disks and huge files, because neither chkdsk nor the scrubber is active.

With FileIntegrity on, ReFS starts to behave like a log-structured file system. Free disk space fluctuates, the order of commands changes, and the requests themselves change. The 4K writes change too: some become sequential and others become larger. This works well for virtualization workloads, because turning many small random writes into larger blocks improves performance and helps avoid the so-called “I/O blender” effect. The effect is typical of virtualization. Many virtualized workloads are merged into a single stream of random I/O, which severely degrades performance. Solving this problem used to be expensive. Now there are log-structured file systems (LSFS) such as WAFL and CASL, and, as we discovered, ReFS can help tackle the problem too.
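The I/O blender effect, and the way a log-structured design counters it, can be shown in a few lines. In the hypothetical model below, several virtual machines each write sequentially to their own region of a shared disk. Interleaving their requests turns the combined stream into what looks like random I/O; round-robin interleaving is a simplifying assumption. A log-structured layer then groups the incoming 4K writes and flushes each group as one large request appended at the log head. A mapping table records where each logical block now lives. Region sizes, the flush size, and all names are illustrative. This is not how ReFS, WAFL, or CASL are implemented.

```python
BLOCK = 4096


def blend(num_vms: int, writes_per_vm: int, region: int) -> list:
    """Round-robin interleave of per-VM sequential 4K writes (offsets in bytes)."""
    streams = [[vm * region + i * BLOCK for i in range(writes_per_vm)] for vm in range(num_vms)]
    return [stream[i] for i in range(writes_per_vm) for stream in streams]


def log_structured(offsets: list, flush_blocks: int):
    """Append each write at the log head, flushing `flush_blocks` writes per request.

    Returns the physical (offset, length) requests sent to disk and the
    logical-to-physical mapping table.
    """
    mapping, physical, head = {}, [], 0
    for start in range(0, len(offsets), flush_blocks):
        batch = offsets[start:start + flush_blocks]
        for j, logical in enumerate(batch):
            mapping[logical] = head + j * BLOCK  # remember the block's new location
        physical.append((head, len(batch) * BLOCK))  # one large sequential write
        head += len(batch) * BLOCK
    return physical, mapping


if __name__ == "__main__":
    blended = blend(num_vms=4, writes_per_vm=4, region=1 << 30)
    print("blended stream:", blended[:6], "...")
    requests, _ = log_structured(blended, flush_blocks=8)
    print("to disk:", requests)  # to disk: [(0, 32768), (32768, 32768)]
```

The disk now sees a few large, contiguous writes instead of 16 scattered small ones. The price is the mapping table, which every later read has to consult.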

Before we finish, however, there is another issue. When writes become sequential, reads become scrambled. If the application itself also writes a log on top of this, we have a problem, typically called “log-on-log”.
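The read-side cost can be illustrated with a simple logical-to-physical mapping. In this hypothetical model, a file of contiguous logical blocks is first written sequentially, and then a random subset of its blocks is overwritten. Each overwrite is appended at the log head, so a later sequential read of the file has to visit physical locations that are no longer contiguous. The function counts how many separate extents (runs of physically adjacent blocks) such a read touches. Block counts, the seed, and names are illustrative assumptions only.

```python
import random


def read_extents(file_blocks: int, overwrites: int, seed: int = 0) -> int:
    """Number of physically contiguous runs a logically sequential read must visit."""
    rng = random.Random(seed)
    # Initial sequential write: logical block i lives at physical slot i.
    location = list(range(file_blocks))
    head = file_blocks
    # Random overwrites are appended at the log head (out-of-place updates).
    for _ in range(overwrites):
        location[rng.randrange(file_blocks)] = head
        head += 1
    # Sequential read of logical blocks 0..N-1: count breaks in physical adjacency.
    extents = 1
    for prev, cur in zip(location, location[1:]):
        if cur != prev + 1:
            extents += 1
    return extents


if __name__ == "__main__":
    for n in (0, 10, 100):
        print(f"{n:>3} random overwrites -> {read_extents(1000, n)} extents for one sequential read")
```

If the application on top keeps its own log, its sequential reads and writes are remapped a second time by the file system's log. This double remapping is the “log-on-log” problem in a nutshell.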

Our main finding is that ReFS with FileIntegrity on works like a log-structured file system. Is this unquestionably good news? The catch is that, with FileIntegrity on, we don't know the exact amount of the workload. So there is more about ReFS to test and explore. We'll keep you posted.

*This post is a digest of a series of articles on ReFS testing. You can read the full versions here:

- https://www.starwindsoftware.com/blog/log-structured-file-systems-microsoft-refs-v2-short-insight
- https://www.starwindsoftware.com/blog/log-structured-file-systems-microsoft-refs-v2-investigation-part-1
- https://www.starwindsoftware.com/blog/refs-performance