Private Kubernetes Cluster in Azure (Government)

In previous blog posts I have discussed how to deploy Kubernetes clusters in Azure Government and how to configure an Ingress Controller for SSL termination. In those scenarios, the clusters had public endpoints. That may not be acceptable for government agencies that must comply with Trusted Internet Connections (TIC) requirements. In this blog post I will show how to make the cluster private and how to expose services outside the cluster on private IP addresses. If you have not read the previous blog posts, I recommend doing so before continuing.

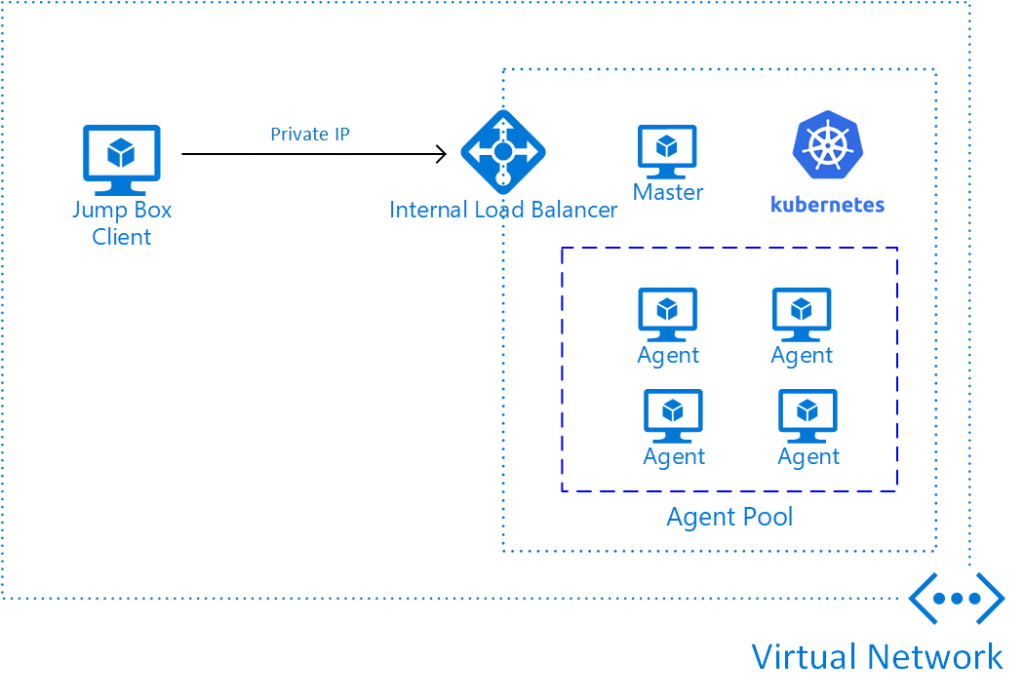

The topology we are aiming for would look something like this:

The entire Kubernetes cluster is within a virtual network, and the clients accessing the cluster do so from within that network. In the diagram above, we have deployed a jump box that we will use to interact with the cluster and the applications running in it. In a more practical scenario, the clients could be workstations deployed in the virtual network, machines in a virtual network peered with the Kubernetes network, or even on-premises clients accessing the virtual network through a VPN or ExpressRoute connection.

Deploying a private cluster

ACS Engine makes it easy to deploy a private cluster; you simply define the "privateCluster" section of the cluster definition (a.k.a. the API model). My acs-utils GitHub repository contains an example of a private cluster definition. To deploy this cluster:

[shell]
# Get the subscription ID. Make sure you are logged in (az login) with the
# CLI pointed at Azure Government (az cloud set --name AzureUSGovernment)
subscription=$(az account show | jq -r .id)

# Name the cluster resource group and choose a region
rgname=mykubernetes
loc=usgovvirginia

# Create the resource group and a service principal scoped to it
rg=$(az group create --name "$rgname" --location "$loc")
rgid=$(echo "$rg" | jq -r .id)
sp=$(az ad sp create-for-rbac --role contributor --scopes "$rgid")

# Convert the API model file to add the service principal, etc.
./scripts/convert-api.sh --file api-models/kubernetes-private.json --dns-prefix privateKube \
  -c "$(echo "$sp" | jq -r .appId)" -s "$(echo "$sp" | jq -r .password)" | jq -M . > converted.json

# Deploy the cluster
acs-engine deploy --api-model converted.json --subscription-id "$subscription" \
  --resource-group "$rgname" --location "$loc" --azure-env AzureUSGovernmentCloud
[/shell]
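For reference, the "privateCluster" setting lives in the kubernetesConfig object of the API model, which sits under properties.orchestratorProfile. The helper below is a minimal sketch, not the contents of my kubernetes-private.json: it prints such a kubernetesConfig fragment with a jump box profile. The function name, VM size and disk size are assumptions, so check the field names against the acs-engine cluster definition documentation for the version you use.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a kubernetesConfig fragment that enables a
# private cluster with a jump box. Field names follow the acs-engine
# privateCluster option; vmSize and osDiskSizeGB are assumed values.
# In the API model, the kubernetesConfig key belongs under
# properties.orchestratorProfile.
private_cluster_config() {
  local jb_name="$1" admin_user="$2" ssh_pubkey="$3"
  cat <<EOF
{
  "kubernetesConfig": {
    "privateCluster": {
      "enabled": true,
      "jumpboxProfile": {
        "name": "${jb_name}",
        "vmSize": "Standard_D2s_v3",
        "osDiskSizeGB": 30,
        "username": "${admin_user}",
        "publicKey": "${ssh_pubkey}"
      }
    }
  }
}
EOF
}

# Usage: private_cluster_config my-jumpbox azureuser "$(cat ~/.ssh/id_rsa.pub)"
```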

After deployment, you will not be able to reach the cluster from outside the virtual network. Unless you are deploying from a VM in the virtual network, log into the jump box with:

[shell]

ssh azureuser@DNS-NAME.REGION-NAME.cloudapp.usgovcloudapi.net

[/shell]

The kubeconfig file should already be on the jump box and kubectl should be installed. If you need information on setting this up, please see the first blog post on Kubernetes in Azure Government.
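If you script against the cluster from outside, you can build the jump box host name from its DNS name and region and run one-off kubectl commands over ssh instead of opening an interactive session. This is a minimal sketch: jumpbox_fqdn is a hypothetical helper, and it assumes the kubeconfig and kubectl are already in place on the jump box as described above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the public FQDN of a VM whose public IP has a
# DNS label in Azure Government (<label>.<region>.cloudapp.usgovcloudapi.net).
jumpbox_fqdn() {
  local dns_name="$1" region="$2"
  printf '%s.%s.cloudapp.usgovcloudapi.net\n' "$dns_name" "$region"
}

# Usage: run kubectl on the jump box, where the kubeconfig already lives.
# ssh "azureuser@$(jumpbox_fqdn DNS-NAME usgovvirginia)" kubectl get nodes
```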

Deploying a workload with private service endpoint

Now that we have a private cluster, we want services that are exposed outside the cluster to have private IP addresses. Here is a simple example manifest (manifests/web-service-internal.yaml) that does that:

[plain]
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-service-internal
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-service-internal
  template:
    metadata:
      labels:
        app: web-service-internal
    spec:
      containers:
      - name: dotnetwebapp
        image: hansenms/aspdotnet:0.1
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: frontend-service-internal
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: web-service-internal
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
[/plain]

The private IP address comes from the service.beta.kubernetes.io/azure-load-balancer-internal: "true" annotation on the Service resource, which tells the Azure cloud provider to create an internal load balancer rather than a public one. You can deploy it with:

[shell]

kubectl apply -f manifests/web-service-internal.yaml

[/shell]
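Since the annotation is the only Azure-specific part, internal Services for other apps could be generated from a small template and piped straight into kubectl. A sketch under stated assumptions: internal_lb_service and its arguments are hypothetical, and it assumes the Pods carry an app=<label> label, as in the manifest above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Service manifest that exposes Pods labeled
# app=<app> on an internal (private) Azure load balancer.
internal_lb_service() {
  local name="$1" app="$2" port="${3:-80}"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: ${app}
  ports:
  - name: http
    protocol: TCP
    port: ${port}
    targetPort: ${port}
EOF
}

# Usage ("-f -" makes kubectl read the manifest from stdin):
# internal_lb_service frontend-service-internal web-service-internal | kubectl apply -f -
```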

and verify the internal IP address with:

[shell]

kubectl get svc

[/shell]

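The EXTERNAL-IP column may show <pending> for a short while as Azure provisions the load balancer. Once an address is assigned, you will see that it is in fact a private IP address in the virtual network: it is external to the cluster, but not to the virtual network.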
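To check this in a script rather than by eye, you can test whether the address falls in one of the RFC 1918 private ranges. This is a minimal sketch; is_private_ipv4 is a hypothetical helper, and it assumes your virtual network uses RFC 1918 address space (10.0.0.0/8, 172.16.0.0/12 or 192.168.0.0/16).

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if $1 is an IPv4 address in an RFC 1918
# private range (10/8, 172.16/12, 192.168/16), 1 otherwise.
is_private_ipv4() {
  local a b c d octet
  IFS=. read -r a b c d <<<"$1"
  # Reject anything that is not exactly four decimal octets in 0-255.
  for octet in "$a" "$b" "$c" "$d"; do
    [[ "$octet" =~ ^[0-9]{1,3}$ ]] && (( 10#$octet <= 255 )) || return 1
  done
  # Force base 10 so an octet such as "08" is not parsed as octal.
  a=$((10#$a)) b=$((10#$b))
  (( a == 10 )) && return 0
  (( a == 172 && b >= 16 && b <= 31 )) && return 0
  (( a == 192 && b == 168 )) && return 0
  return 1
}

# Usage:
# ip=$(kubectl get svc frontend-service-internal -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
# is_private_ipv4 "$ip" && echo "private address: $ip"
```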

Ingress Controller with private IP address

In my previous blog post, I showed how to set up an Ingress Controller and use Ingress rules for SSL termination. You will want to do the same in your private cluster. The configuration is almost identical. First, deploy the Ingress Controller itself:

[shell]

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml

[/shell]

Next, instead of deploying the default Service that exposes the Ingress Controller outside the cluster, use my modified one:

[shell]

kubectl apply -f https://raw.githubusercontent.com/hansenms/acs-utils/master/manifests/ingress-loadbalancer-internal.yaml

[/shell]

I have reproduced that Service definition here:

[plain]
kind: Service
apiVersion: v1
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app: ingress-nginx
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  externalTrafficPolicy: Local
  type: LoadBalancer
  selector:
    app: ingress-nginx
  ports:
  - name: http
    port: 80
    targetPort: http
  - name: https
    port: 443
    targetPort: https
[/plain]

If you compare it with the generic one, you will notice that the only difference is the service.beta.kubernetes.io/azure-load-balancer-internal: "true" annotation we used before.

You can find the private IP address of the Ingress Controller with:

[shell]

kubectl get svc --all-namespaces -l app=ingress-nginx

[/shell]
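Because Azure assigns the address asynchronously, a script that needs the Ingress Controller IP may have to wait for it. Below is a minimal polling sketch using kubectl's jsonpath output; wait_for_lb_ip and its retry defaults (30 attempts, 10 seconds apart) are my own assumptions, not part of ingress-nginx.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a LoadBalancer Service until it has an address,
# then print it. Args: service, namespace, [attempts=30], [interval=10s].
wait_for_lb_ip() {
  local svc="$1" ns="$2" attempts="${3:-30}" interval="${4:-10}" ip i
  for (( i = 1; i <= attempts; i++ )); do
    # Empty while the load balancer is still being provisioned.
    ip="$(kubectl get svc "$svc" --namespace "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)" || ip=""
    if [[ -n "$ip" ]]; then
      printf '%s\n' "$ip"
      return 0
    fi
    # Do not sleep after the final attempt.
    if (( i < attempts )); then
      sleep "$interval"
    fi
  done
  echo "timed out waiting for an address on ${ns}/${svc}" >&2
  return 1
}

# Usage: ingress_ip=$(wait_for_lb_ip ingress-nginx ingress-nginx)
```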

Now we can create a TLS secret from your certificate and private key:

[shell]

kubectl create secret tls SECRET-NAME --cert /path/to/fullchain.pem --key /path/to/privkey.pem

[/shell]
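Before creating the secret, it can be worth confirming that the certificate and key actually belong together, since a mismatch only shows up later as TLS errors at the Ingress. This sketch compares the public key embedded in the certificate with the public key derived from the private key using openssl; cert_matches_key is a hypothetical helper. For a full-chain file, openssl x509 reads only the first certificate, which is assumed to be the server (leaf) certificate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the certificate's public key equals
# the public key derived from the private key (RSA or EC keys).
cert_matches_key() {
  local cert="$1" key="$2" cert_pub key_pub
  # First certificate in the file (the leaf, in a typical fullchain.pem).
  cert_pub="$(openssl x509 -in "$cert" -noout -pubkey)" || return 1
  key_pub="$(openssl pkey -in "$key" -pubout)" || return 1
  [[ "$cert_pub" == "$key_pub" ]]
}

# Usage:
# cert_matches_key /path/to/fullchain.pem /path/to/privkey.pem && \
#   kubectl create secret tls SECRET-NAME --cert /path/to/fullchain.pem --key /path/to/privkey.pem
```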

You can then use my example web-service-ingress.yaml to deploy a private web app with an SSL cert:

[shell]

kubectl apply -f manifests/web-service-ingress.yaml

[/shell]

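You can validate that the certificate has been installed correctly with the following command, where XX.XX.XX.XX is the private IP address of the Ingress Controller. The --resolve option makes curl send requests for mycrazysite.mydomain.us to that address, so no DNS record is needed yet: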

[shell]

curl --resolve mycrazysite.mydomain.us:443:XX.XX.XX.XX https://mycrazysite.mydomain.us

[/shell]
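To run the same check from a script, you can rely on curl's exit status instead of reading its output. A minimal sketch: check_tls is a hypothetical wrapper, and it assumes a reasonably recent curl, where exit code 60 signals that the server certificate failed verification.

```shell
#!/usr/bin/env bash
# Hypothetical helper: request https://<host>/ while forcing <host> to
# resolve to <ip> (the Ingress Controller's private address).
# Returns curl's exit status; 60 means certificate verification failed.
check_tls() {
  local host="$1" ip="$2" rc=0
  curl --silent --show-error --output /dev/null \
    --resolve "${host}:443:${ip}" "https://${host}/" || rc=$?
  if (( rc == 60 )); then
    echo "certificate for ${host} failed verification" >&2
  fi
  return "$rc"
}

# Usage: check_tls mycrazysite.mydomain.us XX.XX.XX.XX && echo "certificate OK"
```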

You should not see any certificate validation errors.

Conclusions

This blog post concludes a series of three posts in which we walked through setting up a Kubernetes cluster in Azure Government, setting up an Ingress Controller for reverse proxy and SSL termination, and finally demonstrated that all of this can be done in a private cluster, with applications exposed only on private IP addresses.

Let me know if you have questions/comments/suggestions.