Using Azure Traffic Manager for Private Endpoint Failover – Automation

In a recent blog post, I described how Azure Traffic Manager (ATM) can be useful in failover scenarios for applications with private endpoints, e.g. internal web apps running in an Internal Load Balancer (ILB) App Service Environment (ASE). The previous post showed how the failover can be done manually in the portal; in this blog post, I will describe how the failover can be automated. If you have not done so yet, please read a) the Azure Traffic Manager documentation and b) my previous blog post.

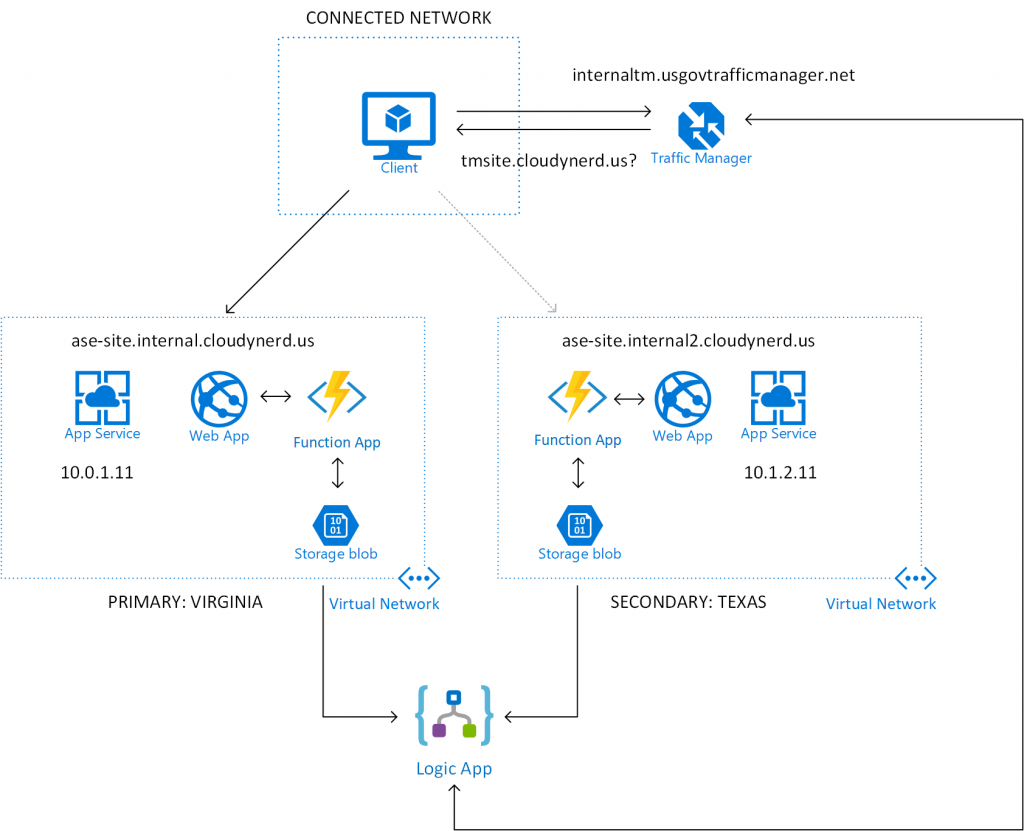

I have expanded the previous scenario to look something like this:

There is a lot going on here, but the basic principle is that I have added an Azure Function App in each of the ASEs. The function runs on a timer trigger and probes the web app to see if it is up. The result of this probe is written as a heart beat (more details below) to a blob storage container. An Azure Logic App then reads the heart beat information from blob storage, decides which endpoint should receive traffic, and updates the Traffic Manager endpoints accordingly. Let's take a look at some of the details of each component.

The Azure function code is running in both ASEs to probe each of the sites individually. The code is very simple:

[csharp]
#r "Microsoft.WindowsAzure.Storage"
#r "Newtonsoft.Json"

using System;
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Blob;
using Newtonsoft.Json;

// Reuse one HttpClient across invocations instead of creating one per run.
static readonly HttpClient client = new HttpClient();

public static async Task Run(TimerInfo myTimer, TraceWriter log)
{
    // Settings come from the Function App's application settings.
    // The connection string is a secret, so it is not written to the log.
    string probeEndpoint = GetEnvironmentVariable("ProbeEndpoint");
    string connectionString = GetEnvironmentVariable("StorageConnectionString");
    string heartBeatContainer = GetEnvironmentVariable("HeartBeatContainer");

    // Probe the web app and record the outcome; no decision is made here.
    HeartBeat beat = new HeartBeat();
    using (HttpResponseMessage response = await client.GetAsync(probeEndpoint))
    {
        beat.Time = DateTime.Now;
        beat.Status = (int)response.StatusCode;
        beat.Content = await response.Content.ReadAsStringAsync();
    }

    // Overwrite the heart beat blob with the latest probe result.
    CloudStorageAccount storageAccount = CloudStorageAccount.Parse(connectionString);
    CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
    CloudBlobContainer container = blobClient.GetContainerReference(heartBeatContainer);
    await container.CreateIfNotExistsAsync();

    CloudBlockBlob blockBlob = container.GetBlockBlobReference("beat.json");
    await blockBlob.UploadTextAsync(JsonConvert.SerializeObject(beat));

    log.Info($"C# Timer trigger function executed at: {DateTime.Now}");
}

public static string GetEnvironmentVariable(string name)
{
    return System.Environment.GetEnvironmentVariable(name, EnvironmentVariableTarget.Process);
}

public class HeartBeat
{
    public DateTime Time { get; set; }
    public int Status { get; set; }
    public string Content { get; set; }
}
[/csharp]

It uses an HTTP client to probe the endpoint. It does not make any decisions based on the result; it simply stores a heart beat in a storage account. The stored heart beat looks something like this:

[js]
{
  "Time": "2018-05-24T19:40:00.0492292+00:00",
  "Status": 200,
  "Content": "...RESPONSE..."
}
[/js]

In this scenario, the probe is placed inside the virtual network of the web app. One could argue that this does not test whether the application can be reached from some other point on the network, but the nice thing is that you get to define what "available" means. Specifically, you could place the probing code anywhere on your organization's network and update the blobs accordingly. It could even involve multiple probes if appropriate.

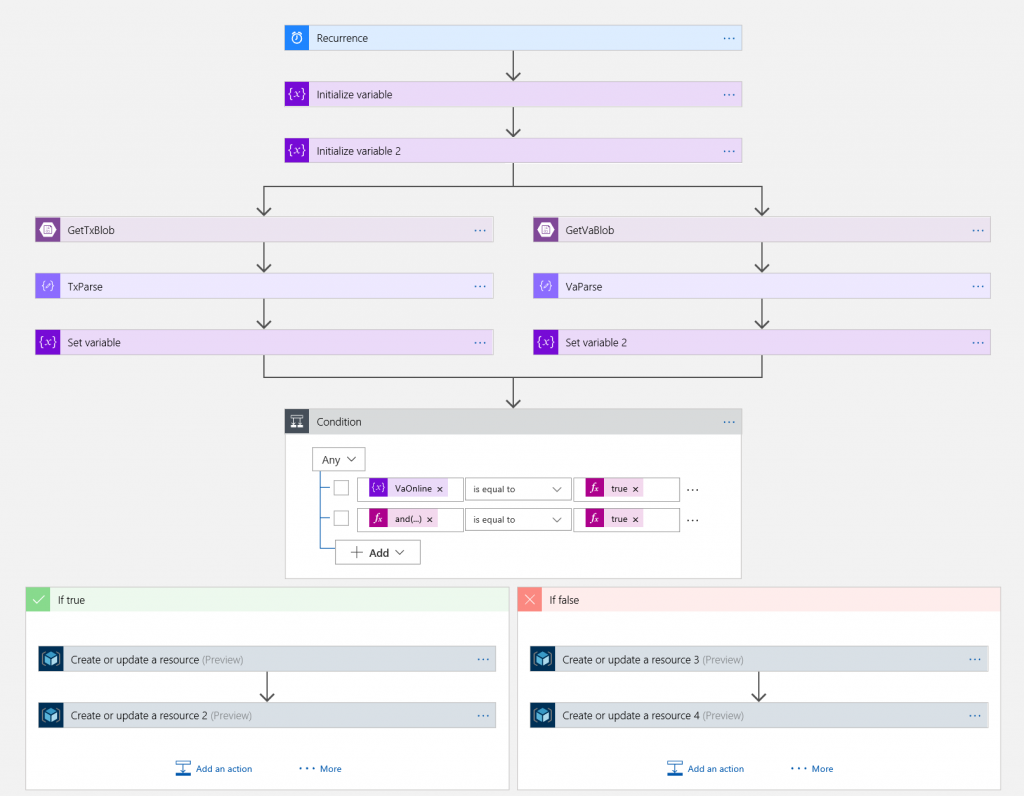

The failover orchestration itself is handled by a Logic App. Her is the overview of the flow:

It may look a little complicated, but it is actually fairly simple. The sequence is:

1. Set up Boolean variables (one for each region), indicating whether the region is up or not.

2. For each region, read the heart beat blob.

3. For each region, parse the JSON contents according to the schema.

4. For each region, determine if the region is up or down. This is where you have to define what that means; my definition is that there has to be a heart beat with an HTTP status code of 200 (OK) within the last 5 minutes. You can define such a condition with a combination of dynamic content (from the JSON parsing) and expressions, e.g. for the Texas region, it would look like:

[plain]
and(equals(body('TxParse')?['Status'],200),greater(ticks(addMinutes(body('TxParse')?['Time'],5)),ticks(utcNow())))
[/plain]

The two paths (one for each region) are combined with a logic expression. Again, the specific logic will depend on what makes sense for your application, but my logic is that if the Virginia region is up or both regions are down, I will point to Virginia. In the remaining case (only Texas is up), I will point to Texas.
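To make the decision rules easier to reason about (and to unit test), here is the same logic as a minimal C# sketch. It is not part of the Logic App; the names `FailoverLogic`, `IsRegionUp`, and `ChooseRegion` are hypothetical, and the heart beat type is reduced to the two fields the decision needs.

```csharp
using System;

// Reduced heart beat: only the fields the decision depends on.
public class HeartBeat
{
    public DateTimeOffset Time { get; set; }
    public int Status { get; set; }
}

public static class FailoverLogic
{
    // A region is up when its latest heart beat reports HTTP 200 and
    // Time + maxAge is still later than "now" (5 minutes in this post).
    public static bool IsRegionUp(HeartBeat beat, DateTimeOffset now, TimeSpan maxAge)
    {
        return beat != null
            && beat.Status == 200
            && beat.Time.Add(maxAge) > now;
    }

    // Virginia is chosen when it is up or when both regions are down;
    // Texas is chosen only when it is the sole healthy region.
    public static string ChooseRegion(bool virginiaUp, bool texasUp)
    {
        return (virginiaUp || !texasUp) ? "Virginia" : "Texas";
    }
}

public static class Program
{
    public static void Main()
    {
        var now = DateTimeOffset.Parse("2018-05-24T19:43:00+00:00");
        var maxAge = TimeSpan.FromMinutes(5);

        // Virginia's last heart beat is 13 minutes old; Texas reported 3 minutes ago.
        var virginia = new HeartBeat { Time = DateTimeOffset.Parse("2018-05-24T19:30:00+00:00"), Status = 200 };
        var texas = new HeartBeat { Time = DateTimeOffset.Parse("2018-05-24T19:40:00+00:00"), Status = 200 };

        bool virginiaUp = FailoverLogic.IsRegionUp(virginia, now, maxAge);
        bool texasUp = FailoverLogic.IsRegionUp(texas, now, maxAge);

        Console.WriteLine(FailoverLogic.ChooseRegion(virginiaUp, texasUp)); // prints "Texas"
    }
}
```

Note that a probe that stops writing heart beats is treated the same way as a probe that reports a failure: once the last heart beat is older than the allowed age, the region counts as down.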

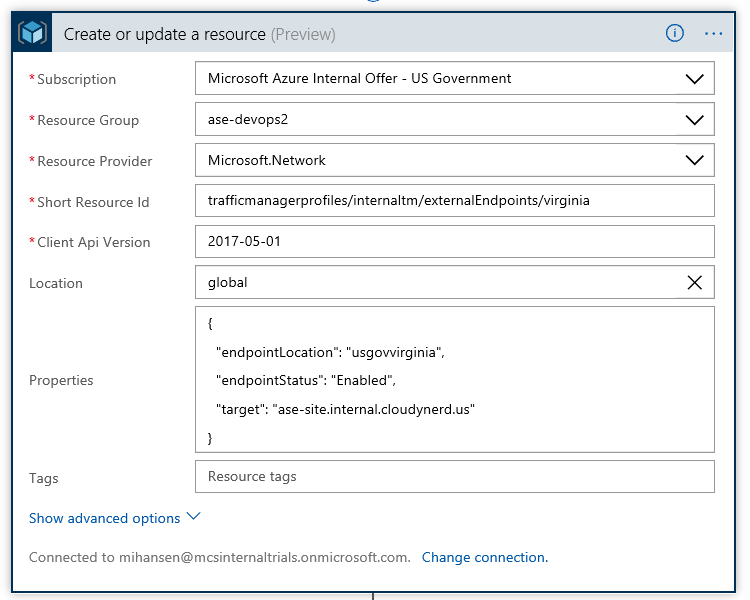

The Traffic Manager endpoints are updated using a "Create or update resource" action:

You can configure the Resource Manager connection with your Azure credentials or use a Service Principal.
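For readers who want to see what such an update looks like outside the Logic App designer, here is a rough C# sketch that builds (but does not send) an Azure Resource Manager request to enable or disable a single Traffic Manager endpoint. Several things here are assumptions on my part rather than details from the setup above: that failover is expressed by toggling `endpointStatus`, that the endpoints are of type `ExternalEndpoints`, the `2018-04-01` API version, and the `management.azure.com` host (Azure Government uses a different management host). The helper name and all argument values are hypothetical, and acquiring the bearer token is left out.

```csharp
using System;
using System.Net.Http;
using System.Text;

public static class TrafficManagerRequests
{
    // Builds an ARM PATCH request that sets endpointStatus on one endpoint.
    // The caller still has to add an Authorization header and send it.
    public static HttpRequestMessage BuildEndpointStatusRequest(
        string subscriptionId, string resourceGroup, string profileName,
        string endpointName, bool enabled)
    {
        // Assumed host and endpoint type; adjust both for your environment.
        string url = "https://management.azure.com"
            + $"/subscriptions/{subscriptionId}/resourceGroups/{resourceGroup}"
            + $"/providers/Microsoft.Network/trafficManagerProfiles/{profileName}"
            + $"/ExternalEndpoints/{endpointName}?api-version=2018-04-01";

        string status = enabled ? "Enabled" : "Disabled";
        string body = "{\"properties\":{\"endpointStatus\":\"" + status + "\"}}";

        var request = new HttpRequestMessage(new HttpMethod("PATCH"), url);
        request.Content = new StringContent(body, Encoding.UTF8, "application/json");
        return request;
    }
}

public static class Program
{
    public static void Main()
    {
        // Placeholder values for illustration only.
        HttpRequestMessage request = TrafficManagerRequests.BuildEndpointStatusRequest(
            "00000000-0000-0000-0000-000000000000", "my-rg", "my-profile", "texas", true);

        Console.WriteLine(request.Method + " " + request.RequestUri);
        Console.WriteLine(request.Content.ReadAsStringAsync().Result);
    }
}
```

In a setup like this one, the orchestration would issue one such update per endpoint, enabling the chosen region and disabling the other.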

You can validate this setup by verifying that a) traffic is routed to the primary site as long as it is up, and b) traffic flows to the secondary site if you turn off the primary web app (or the probe).

There are a number of improvements that one could add to the setup. The Logic App should be running in both regions to ensure proper behavior in the event of a complete regional failure. Storage account outages are another edge case that should perhaps be handled, but with geo-redundant storage, they would be exceedingly rare. Things have been kept simple here to make the scenario easier to digest, but you can add all the bells and whistles you would like.

In the Logic App, you can also include steps that send email alerts and/or push data to Log Analytics. This would create the needed visibility and alerting around failover events.

And that's it. Between this blog post and the previous one, we have looked at how to use Azure Traffic Manager to orchestrate failover for private endpoints, either manually or automatically. All the services used are managed services that are available in both Azure Government and Azure Commercial.