SSIS – Chunking number of inserts with a For Each container

My main customer utilizes SSIS for some SQL and Oracle integration requirements. One challenge they’ve had is timeouts from the Oracle side. We’ve tried everything to eliminate the issue in terms of extending timeout values within SSIS, but the problem appears to be that for very large data transfers into Oracle, Oracle simply gets tired of waiting and the connection gets closed. One of the developers working on this project has found a way around this by transferring data in smaller chunks to Oracle. He asked me about a way to automate this in SSIS so that the records are loaded in smaller chunks. I pointed him to the FOR LOOP construct and recommended creating a second table with an identity column that could be used as a means to ensure that there was a key value associated with the record range.

That’s about all I did, the rest of this is his work. My thanks to Robert Skinner, HP for sharing the finished package with me. I’ve modified the package to remove the application-specific data/fields and reduced down to just a simple table with a couple of fields, so we can focus on the approach and not get bogged down in the schema. For our example, we will use a flat file for import and just a SQL database for output, the destination connectors can be changed as needed to support exporting to another OLE DB destination such as Oracle without changing the actual components. Let’s walk through the design and implementation.

There are 3 main steps to the process:

- Ensure that a staging table exists and is prepared to store the imported rows that will need to be exported to Oracle. The table needs to be empty prior to importing. The staging table has the following attributes:

- All of the columns from the imported table

- A primary identity key.

- Load the imported data into the staging table.

- Prepare the destination (in this scenario, this requires truncating the data in the destination).

- Cycle through the staging table selecting the range of keys associated with the “chunking” number and copying to the destination table. For example, if only 50,000 rows are being loaded per insertion, each selection would be the next 50,000 rows – for example: 1 – 50,000, 50,0001 to 100,000, etc.

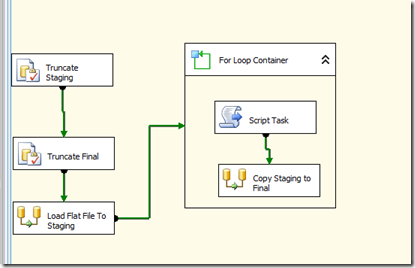

Here is a screen snapshot of the package.

Before loading the import data, we want to have a clean table to load into and make sure the identity range is reset. The easiest way to do this is with a TRUNCATE table if it already exists, otherwise simply create it. Robert actually uses the same logic as is generated by scripting using the SQL Server tasks/script that will check for the table, truncate it if empty, and if not actually create the table. For our scenario, we just truncate the 2 tables in the first 2 steps using a OLE DB Source with a SQL Command:

- Truncate Table Quote_Staging

- Truncate Table Quote

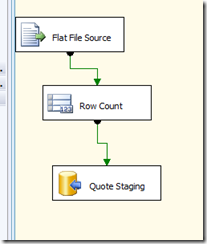

Next, we load from the flat file into the Staging Table. This will result in all of the original rows being assigned to a sequential identifier. Since we truncated the table, we are guaranteed that each number is sequential. Note the use of the Row Count component, this will capture the total number of rows in the flat file that get loaded into the staging table.

Here are the first few rows after the load step.

| StagingId | Symbol | Date | Open | High | Low | Close | Volume |

| 1 | A | 00:00.0 | 16.52 | 17.07 | 16.21 | 16.9 | 2790900 |

| 2 | AA | 00:00.0 | 9.03 | 9.28 | 8.92 | 9.14 | 28107000 |

| 3 | AAI | 00:00.0 | 7.5 | 7.52 | 7.08 | 7.39 | 5395300 |

| 4 | AAP | 00:00.0 | 44.19 | 44.39 | 42.5 | 42.63 | 1818700 |

| 5 | AAR | 00:00.0 | 11.68 | 11.95 | 11.61 | 11.92 | 7700 |

| 6 | AAV | 00:00.0 | 2.89 | 2.96 | 2.86 | 2.93 | 703600 |

| 7 | AB | 00:00.0 | 16.11 | 16.62 | 15.88 | 16.29 | 700000 |

| 8 | ABA | 00:00.0 | 25.87 | 26.05 | 25.87 | 25.97 | 2800 |

| 9 | ABB | 00:00.0 | 14.77 | 15.18 | 14.69 | 15 | 4501200 |

| 10 | ABC | 00:00.0 | 35.41 | 36.06 | 34.15 | 34.58 | 3436700 |

| 11 | ABD | 00:00.0 | 2.19 | 2.29 | 2.04 | 2.22 | 452400 |

| 12 | ABG | 00:00.0 | 8.2 | 8.29 | 7.57 | 7.8 | 563900 |

| 13 | ABK | 00:00.0 | 1 | 1.02 | 0.95 | 1.01 | 4878800 |

| 14 | ABM | 00:00.0 | 17.06 | 17.72 | 16.9 | 17.45 | 253800 |

| 15 | ABN-E | 00:00.0 | 7.41 | 7.55 | 7.35 | 7.37 | 47300 |

| 16 | ABN-F | 00:00.0 | 8 | 8.24 | 7.25 | 8.08 | 14100 |

| 17 | ABN-G | 00:00.0 | 7.39 | 7.65 | 7.26 | 7.51 | 105400 |

| 18 | ABR | 00:00.0 | 1.35 | 1.69 | 1.35 | 1.48 | 372000 |

| 19 | ABT | 00:00.0 | 43.57 | 43.9 | 42.88 | 43.09 | 9309500 |

| 20 | ABV | 00:00.0 | 54.12 | 54.73 | 53.61 | 53.81 | 772900 |

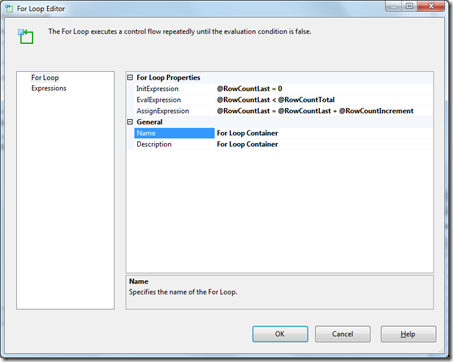

Let’s look more closely at how the FOR LOOP works with the script task.

The For Loop Properties controls the initialization, evaluation, and assignment. For this scenario, we have the following variables:

- RowCountLast: The identity of the last row loaded.

- RowCountIncrement: The amount of rows to load in each chunk.

- RowCountTotal: The total number of rows in the table

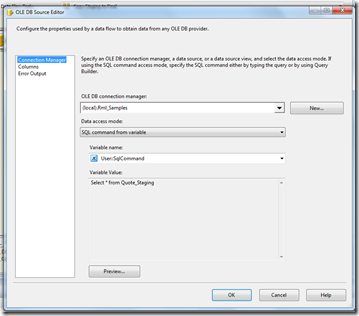

- SqlCommand: Contains the SQL command to execute on each iteration of the loop to “chunk” the data rows. Initially this should be set to just “Select * from the staging table – this is “Select * from Quote_Staging” for our example.

The script is used to build the SQL command variable that selects on the range identified for each iteration of the for loop. For example, on iteration 1, using an increment of 500, the SQL command would be set to query all of the rows between 0 and 500. On the next iteration, the last row would be set to 500 and the SQL Command altered to load from 501 – 1000. This continues until the number of rows loaded exceeds the total row count. Each iteration of the for loop establishes a new connection and only load the desired number of rows. Although, this is normally not optimal for most loading, this works around the Oracle issue. This is also useful if there are a huge number of rows, and monitoring of the process at a lower granularity is needed than for the whole table.

Here is the script. Note that this script is highly reusable, the only item that needs to be changed for another package would be the name of the table and the identity column. To make this even more reusable, variables could be created.

Code Snippet

- ' Microsoft SQL Server Integration Services Script Task

- ' Write scripts using Microsoft Visual Basic 2008.

- ' The ScriptMain is the entry point class of the script.

- Imports System

- Imports System.Data

- Imports System.Math

- Imports Microsoft.SqlServer.Dts.Runtime

- <System.AddIn.AddIn("ScriptMain", Version:="1.0", Publisher:="", Description:="")> _

- <System.CLSCompliantAttribute(False)> _

- Partial Public Class ScriptMain

- Inherits Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase

- Enum ScriptResults

- Success = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Success

- Failure = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Failure

- End Enum

- ' The execution engine calls this method when the task executes.

- ' To access the object model, use the Dts object. Connections, variables, events,

- ' and logging features are available as static members of the Dts class.

- ' Before returning from this method, set the value of Dts.TaskResult to indicate success or failure.

- '

- ' To open Code and Text Editor Help, press F1.

- ' To open Object Browser, press Ctrl+Alt+J.

- Public Sub Main()

- Dim RowCountLast As Integer

- RowCountLast = CType(ReadVariable("RowCountLast"), Integer)

- Dim RowCountIncrement As Integer

- RowCountLast += RowCountIncrement

- RowCountIncrement = CType(ReadVariable("RowCountIncrement"), Integer)

- Dim SqlCommand As String

- SqlCommand = "Select * from Quote_Staging where StagingId >= " & RowCountLast & " and StagingId < " & RowCountLast + RowCountIncrement

- WriteVariable("SqlCommand", SqlCommand)

- End Sub ' Main

- Private Function ReadVariable(ByVal varName As String) As Object

- Dim result As Object

- Try

- Dim vars As Variables

- Dts.VariableDispenser.LockForRead(varName)

- Dts.VariableDispenser.GetVariables(vars)

- Try

- result = vars(varName).Value

- Catch ex As Exception

- Throw ex

- Finally

- vars.Unlock()

- End Try

- Catch ex As Exception

- Throw ex

- End Try

- Return result

- End Function

- Private Sub WriteVariable(ByVal varName As String, ByVal varValue As Object)

- Try

- Dim vars As Variables

- Dts.VariableDispenser.LockForWrite(varName)

- Dts.VariableDispenser.GetVariables(vars)

- Try

- vars(varName).Value = varValue

- Catch ex As Exception

- Throw ex

- Finally

- vars.Unlock()

- End Try

- Catch ex As Exception

- Throw ex

- End Try

- End Sub

- End Class

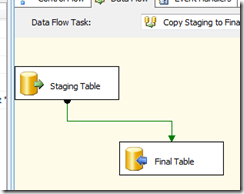

The data flow task in the for loop simply uses the SQL Command variable for the source and the final table for the destination.

Attached is a zip file containing a sample database schema, flat file with import data, and SSIS package.

My thanks again to Robert Skinner for providing me a sample SSIS package for using this including the script.

Technorati Tags: SSIS,Oracle,Chunking,SSIS For Loop