From the MVPs: Being a UC Superhero with Lync QoE Superpowers

This is the 49th in our series of guest posts by Microsoft Most Valued Professionals (MVPs). You can click the “MVPs” tag in the right column of our blog to see all the articles.

Since the early 1990s, Microsoft has recognized technology champions around the world with the MVP Award. MVPs freely share their knowledge, real-world experience, and impartial and objective feedback to help people enhance the way they use technology. Of the millions of individuals who participate in technology communities, around 4,000 are recognized as Microsoft MVPs. You can read more original MVP-authored content on the Microsoft MVP Award Program Blog.

This post is by Lync MVPs Andrew Morpeth and Curtis Johnstone. Thanks, Andrew and Curtis!

Being a UC Superhero with Lync QoE Superpowers

Microsoft Lync, by virtue of being a Unified Communications (UC) solution, relies on an ecosystem of infrastructure to ensure a good user experience for rich real-time communication features such as voice and video. A simple Lync audio call, for example, depends on the network, end-user devices, and many other components, any of which can jeopardize quality.

Trying to pinpoint the underlying cause of poor user experiences in this ecosystem is very challenging; so much so that a new set of superpowers is required. This article introduces you to the Lync Quality of Experience (QoE) data, and gets you started on learning how to leverage it to determine the causes of poor Lync media calls so you can take action and be a true UC superhero.

How can Lync QoE data help you?

Unlike a traditional phone system, where a problem is typically isolated to a single system, Lync depends on many components. The most common dependencies to consider when troubleshooting quality issues are:

- Network Connectivity (including the status of routers and switches)

- Lync client operating system hardware and software

- Lync server operating system hardware and software

- Endpoint devices

- Voice gateways (to an IP-PBX or PSTN breakout)

- Internet Telephony Service Providers (ITSPs)

Fortunately, Lync has in-depth reporting capability built in out of the box (provided you have installed the Lync monitoring service). Endpoints involved in a conversation (not only clients, but also phones, servers and gateways) report QoE data back to the monitoring server as part of the SIP protocol (specifically a SIP SERVICE request) at the end of the call. This is very powerful because it gives administrators the ability to view quality metrics about a particular Lync conversation from multiple vantage points without the need for complex end-to-end monitoring of routers, switches, etc.

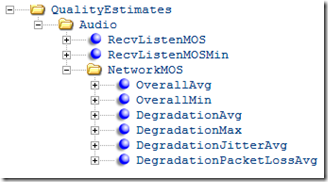

A sample of the quality data collected by the Lync client and sent back to the Lync server for storage in the Lync QoE database is shown here in Figure A:

Figure A - Example of QoE data stored as XML that the client collects and sends back to the server

Note: if you did want more in-depth end-to-end monitoring (e.g. more network related metrics and metrics for the portion of a call outside of the Lync environment) the new Software-Defined Networking (SDN) API opens up even greater possibilities to monitoring solution vendors, allowing network monitoring information to be combined with the Lync QoE data.

What do all those Numbers Mean?

In order to truly get the most out of QoE metrics you need to understand what the metrics are, and what constitutes a good and bad Lync experience.

The QoE data can be viewed in several ways – directly in the database or in a Lync Reporting solution. Lync ships with a set of default reports which span usage and quality data. These are referred to as the Lync Monitoring reports and are part of the Monitoring service installation. These reports are not integrated with Active Directory and contain only the data that exists in the QoE database at the time (which depends on the QoE retention settings) but are still valuable with some knowledge and a little digging.

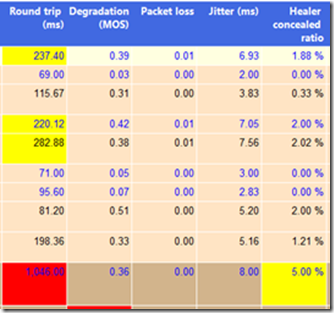

When you access one of the monitoring reports with QoE information, the quality metrics will likely be overwhelming. One of the most basic and easiest ways to determine which metrics you need to pay attention to is by looking at the metrics highlighted in Yellow and Red. A Yellow means this metric is likely ‘not good’, and a Red means the value exceeded a threshold such that it contributed to a poor Lync experience. An example of a native monitoring report with the highlighted QoE metrics is shown in Figure B here:

Figure B – A real example of good and bad QoE metrics highlighted in the monitoring reports

Before we can continue interpreting the numbers we need to clarify some terminology. It is essential that you understand the difference between “Sessions”, “Calls”, and “Streams” (at this point even Superman might be confused).

The terms “Session” and “Call” are sometimes used interchangeably, but a “session” refers to the whole interactive communication experience between users at the Lync application layer; technically, it is a SIP protocol session between endpoints from start to finish. A “call” refers more specifically to a media exchange within a session (typically audio or video). For example, in one peer-to-peer session between User A and User B, there might be an audio call, a video call added 12 minutes into the session, and desktop sharing at some point.

A Stream refers specifically to the media flowing in one direction (e.g. from User A to User B) in a session. This is an extremely important concept. Take a simple peer to peer audio call between User A and User B. There is one Lync audio call, but two streams: audio flowing from User A to B, and another from User B to A.

This is important because in the Monitoring Reports, the Lync QoE metrics you see reported usually apply to Streams.
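To make the terminology concrete, here is a minimal Python sketch of how a session, its calls, and their one-directional streams relate to each other. The class and function names are our own illustration and do not correspond to anything in the Lync QoE database schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Stream:
    """Media flowing in one direction, e.g. audio from User A to User B."""
    sender: str
    receiver: str
    media: str


@dataclass
class Call:
    """One media exchange inside a session (e.g. the audio call)."""
    media: str
    streams: List[Stream] = field(default_factory=list)


@dataclass
class Session:
    """The whole communication experience between the participants."""
    participants: List[str]
    calls: List[Call] = field(default_factory=list)


def two_way_call(media: str, a: str, b: str) -> Call:
    """Build a call with one stream in each direction (hypothetical helper)."""
    return Call(media, [Stream(a, b, media), Stream(b, a, media)])


# One peer-to-peer session with an audio call and a video call added later.
session = Session(["User A", "User B"])
session.calls.append(two_way_call("audio", "User A", "User B"))
session.calls.append(two_way_call("video", "User A", "User B"))

stream_count = sum(len(call.streams) for call in session.calls)
print(len(session.calls), "calls,", stream_count, "streams")
```

One session, two calls, four streams: each stream gets its own set of QoE metrics.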

A much smaller set of QoE metrics also applies to Calls. Arguably the most important is ClassifiedPoorCall. This metric is a binary value which by default is set to “1” (i.e. “Yes, this is a poor call”) if any of the following conditions is met:

- RatioConcealedSamplesAvg > 7%

- PacketLossRate > 10%

- JitterInterArrival > 30ms

- RoundTrip > 500ms

- DegradationAvg > 1.0
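The rule above can be sketched in a few lines of Python. This is only an illustration of the "any condition met" logic using the default thresholds listed here; the field names and units (percent and milliseconds) are our own assumptions and not the column names or storage units of the QoE database.

```python
from dataclasses import dataclass


@dataclass
class StreamMetrics:
    # Hypothetical field names; units chosen for readability.
    ratio_concealed_samples_avg_pct: float
    packet_loss_rate_pct: float
    jitter_inter_arrival_ms: float
    round_trip_ms: float
    degradation_avg: float


def classified_poor_call(m: StreamMetrics) -> int:
    """Return 1 if any default poor-call condition is met, otherwise 0."""
    conditions = [
        m.ratio_concealed_samples_avg_pct > 7.0,
        m.packet_loss_rate_pct > 10.0,
        m.jitter_inter_arrival_ms > 30.0,
        m.round_trip_ms > 500.0,
        m.degradation_avg > 1.0,
    ]
    return 1 if any(conditions) else 0


# Illustrative values, not taken from a real report.
healthy = StreamMetrics(1.0, 0.5, 8.0, 120.0, 0.2)
jittery = StreamMetrics(1.0, 0.5, 42.0, 120.0, 0.2)
print(classified_poor_call(healthy), classified_poor_call(jittery))
```

Note that a single metric over its threshold is enough to flag the stream, which is why the flag is best treated as a starting point rather than a verdict.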

This is a simplistic determination of ‘call quality’ but nonetheless it can be a very useful starting point to investigate which calls need attention. Note that in Lync Server 2010 the ClassifiedPoorCall metric applied to the whole call. In Lync Server 2013 it is applied to each stream in the call, and was deprecated at the call level.

Tip: For more information about ClassifiedPoorCall and all the other QoE metrics, and how they can be used in the broader topic of “infrastructure health”, Appendix B of the Network Planning, Monitoring, and Troubleshooting with Lync Server guide published by Microsoft is an essential read.

Now back to QoE metrics highlighted as Yellow or Red in the monitoring reports. A call highlighted in Yellow is classified as a “moderate” call, and calls highlighted in Red are classified as “bad” calls. These highlights are useful guideposts but are not to be taken as the whole story. Superheroes regularly see calls that were classified as moderate but were perceived as perfect by both the sender and the receiver.

The topic of “perceived” quality is a good introduction to Mean Opinion Scores, the subject of our next section.

Mean Opinion Scores (MOS)

MOS scores are one of the first things that you should look at when trying to identify a problem. Lync uses a mathematical algorithm to determine a MOS score between 1 and 5, to indicate how a real user might have rated the call.

| Score | Quality | Impairment |
| --- | --- | --- |
| 5 | Excellent | Imperceptible |
| 4 | Good | Perceptible but not annoying |
| 3 | Fair | Slightly annoying |
| 2 | Poor | Annoying |
| 1 | Bad | Very annoying |

There are four different types of MOS scores that you need to be aware of.

Listening MOS

This metric is a prediction of the wideband Listening Quality (MOS-LQ) of the audio stream that is played to the user. The Listening MOS varies depending on:

- The codec used for the call

- Whether the selected codec is wideband or narrowband

- The characteristics of the audio capture device used by the person speaking (person sending the audio).

- Any codec transcoding or mixing that occurred

- Defects from packet loss or packet loss concealment

- The speech level and background noise of the person speaking (person sending the audio)

Sending MOS

This is a prediction of the wideband Listening Quality (MOS-LQ) of the audio stream that is being sent from the user.

The Sending MOS varies depending on the:

- User’s audio capture device characteristics

- Speech level and background noise of the user’s device

Because so many factors influence the Listening and Sending MOS, each gives a good indication of the overall call quality for the listener or sender, but both are most useful when viewed statistically over a period of time to identify widespread network issues.

Network MOS

This is a prediction of the wideband Listening Quality (MOS-LQ) of audio that is played to the user. This value only considers network factors such as codec used, packet loss, packet reorder, packet errors and jitter.

It is important to note that as a result of codec compression, the maximum MOS score that is achievable will vary depending on the codec selected for the call; therefore it is usually more interesting to look at the Average Network MOS Degradation values, which include the amount of MOS value lost to network jitter and packet loss.

Here are the maximum achievable Network MOS scores for specific codecs in some common scenarios:

| Scenario | Codec | Maximum Achievable Network MOS |
| --- | --- | --- |
| PC-PC call | RTAudio WB | 4.10 |
| Conference call | Siren | 3.72 |
| PC-PSTN call | RTAudio NB | 2.95 |
| PC-PSTN call | Siren | 3.72 |

Avg. network MOS degradation

Network MOS degradation is a value that represents the amount of the MOS value lost to network effects.

< 0.5 Acceptable degradation

> 1.0 Bad

NMOS degradation (jitter)

Network MOS degradation percentage as a result of Jitter. By examining this field, you can determine if jitter was the major contributor to Network MOS degradation. This value will contribute to Avg. network MOS degradation.

NMOS degradation (packet loss)

Network MOS degradation percentage as a result of Packet Loss. By examining this field, you can determine if packet loss was the major contributor to Network MOS degradation. This value will contribute to Avg. network MOS degradation.
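As a rough illustration of how these three fields can be read together, the Python sketch below rates the average degradation against the two thresholds quoted above and then reports which factor contributed more. The function names are hypothetical, and the "in between" label for values from 0.5 to 1.0 is our own placeholder, since no label is given for that range.

```python
def rate_degradation(avg_degradation: float) -> str:
    """Rate Avg. network MOS degradation using the thresholds quoted above."""
    if avg_degradation < 0.5:
        return "acceptable"
    if avg_degradation > 1.0:
        return "bad"
    # Assumption: the article does not label the 0.5 to 1.0 range.
    return "in between"


def main_contributor(jitter_pct: float, packet_loss_pct: float) -> str:
    """Compare the jitter and packet loss shares of the degradation."""
    if jitter_pct > packet_loss_pct:
        return "jitter"
    if packet_loss_pct > jitter_pct:
        return "packet loss"
    return "both equally"


# Illustrative values, not taken from a real report.
print(rate_degradation(1.3), main_contributor(jitter_pct=80.0, packet_loss_pct=20.0))
```

A stream rated "bad" with jitter as the main contributor points the investigation toward congestion or queuing rather than dropped packets.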

Conversational MOS

This is a prediction of the narrowband Conversational Quality (MOS-CQ) of the audio stream that is played to the user.

The Conversational MOS varies depending on the same factors as Listening MOS, as well as the following:

- Echo

- Network delay

- Delay due to jitter buffering

- Delay due to devices

Call Legs

Earlier we described the difference between Lync Calls, Sessions, and Streams. There is one additional important definition: call legs. A call leg is simply an identifiable route between two endpoints along the call path. Consider driving a car from New York City to Washington. Instead of doing the drive in one day you might break the drive up into two legs: Leg #1 could be from New York to Philadelphia, and Leg #2 might be from Philadelphia to Washington. Legs along a Lync call path usually represent points when the call passes through a gateway (or server) en route to another system (e.g. when an outbound call transfers from a Lync audio call to an IP-PBX).

Where applicable, the QoE data is split into call legs, and each call leg has two streams (in & out). Remember that earlier we defined a stream as a media path going in a particular direction. From the vantage point of one endpoint there is typically a media stream coming in (i.e. audio from the other endpoint) and another stream going out (i.e. to the other endpoint).

QoE metrics for inbound and outbound streams are shown in the Monitoring Reports for those call legs that Lync knows about. When the call path leaves the Lync system (e.g. it leaves the Lync Mediation server outbound for an IP-PBX), Lync cannot natively capture the QoE information as it can for media paths under its control. For example, in an outbound call to the PSTN, the call from the Lync Client to the Lync Mediation Server is the 1st leg, and the 2nd leg is from the Mediation Server to the Voice Gateway (that connects the call to the PSTN). The streams in each call leg are separated and identified in the monitoring reports as follows:

1. The outbound stream = “caller -> callee”

2. The inbound stream = “callee -> caller”

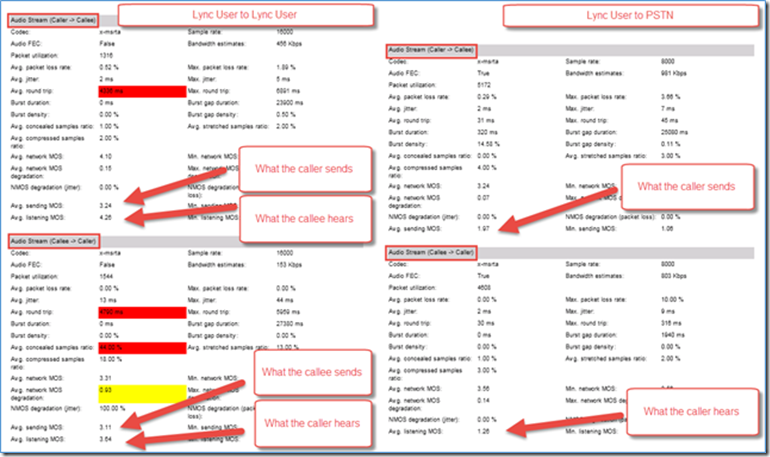

With these definitions in place let’s look at interpreting the sending and receiving MOS metrics in two examples: a Lync to Lync call, and a Lync to PSTN call. To make things a little easier to follow, the examples will use User A and User B terminology, instead of Caller and Callee.

A Lync to Lync Audio Call

A Lync client (User A) audio call to another Lync client (User B) on the same network will typically have a single call leg. This leg will have two streams (in & out) and a sending and listening MOS score for each stream as follows:

Lync User A to Lync User B Example

Stream A to B (“caller -> callee”)

- Avg. Sending MOS - The MOS Lync user A is sending

- Avg. Listening MOS - The MOS Lync user B is hearing

Stream B to A (“callee -> caller”)

- Avg. Sending MOS - The MOS Lync user B is sending

- Avg. Listening MOS - The MOS Lync user A is hearing

A Lync to PSTN Call

For the outbound PSTN call you will notice that there is only information on the caller’s MOS score. This is normal and is a result of the PSTN gateway not providing QoE metrics to the Lync Monitoring Server. Compare this to the Lync to Lync call scenario above, which had data provided by both ends of the call.

Lync User A to PSTN User B Example

Stream A to B (“caller -> callee”)

- Avg. Sending MOS - The MOS Lync user A is sending

- Avg. Listening MOS - The MOS PSTN user B is hearing (Lync does not know this)

Stream B to A (“callee -> caller”)

- Avg. Sending MOS - The MOS PSTN user B is sending (Lync does not know this)

- Avg. Listening MOS - The MOS Lync user A is hearing

The image below (Figure C) shows a real snapshot of both the examples we just covered:

Figure C. A monitoring report snapshot showing QoE metrics for a Lync-to-Lync audio call, and a Lync outbound call to a PSTN endpoint.
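The difference between the two examples can also be expressed as data. In the Python sketch below, a missing MOS value is modelled as `None`, and a small helper lists the values Lync has no report for. The type, the helper name, and the MOS numbers are all made up for illustration.

```python
from typing import List, NamedTuple, Optional


class StreamMos(NamedTuple):
    direction: str
    avg_sending_mos: Optional[float]    # None when no endpoint reported it
    avg_listening_mos: Optional[float]  # None when no endpoint reported it


def unreported(streams: List[StreamMos]) -> List[str]:
    """List the MOS values that are missing from a call's streams."""
    gaps = []
    for s in streams:
        if s.avg_sending_mos is None:
            gaps.append(f"{s.direction}: Avg. Sending MOS")
        if s.avg_listening_mos is None:
            gaps.append(f"{s.direction}: Avg. Listening MOS")
    return gaps


# Illustrative numbers only.
lync_to_lync = [
    StreamMos("caller -> callee", 3.9, 3.6),
    StreamMos("callee -> caller", 3.8, 3.5),
]
lync_to_pstn = [
    StreamMos("caller -> callee", 3.9, None),  # PSTN user B's listening MOS is unknown
    StreamMos("callee -> caller", None, 3.1),  # PSTN user B's sending MOS is unknown
]

print(unreported(lync_to_lync))
print(unreported(lync_to_pstn))
```

Treating the gaps as "unknown" rather than as zero keeps them from being mistaken for poor scores when calls are aggregated.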

Familiar Network Measures

Some of the QoE metrics are familiar network performance measures, and are very useful in determining if network conditions could be affecting call quality.

There are a couple of important notes to keep in mind while looking at these numbers:

- The criteria (e.g. “good” vs “bad”) stated for each metric are based on several references and experiences in the field.

- These are network measurements – they are useful guideposts in determining what potential issues affected Lync performance - but they are network measurements and are most useful to the network engineer.

- The absolute metrics numbers should be evaluated in the context of the other metrics and environment they are being reported from (e.g. geographic location and network type).

- The numbers stated below are generally for “unmanaged” networks. The thresholds for a “bad” or “poor” call will be considerably lower on a managed network, given that managed networks generally perform better than unmanaged ones and allow Lync to leverage QoS on the network.

- The net effect for each metric on the Lync end-user experience will also depend on the codec being used.

Here are the metrics:

Avg. packet loss rate: Packet Loss (%) represents the percentage of packets that did not make it to their destination. Packet loss will cause the audio to be distorted or missing.

< 3% Good

> 7% Poor

> 10% Bad

Avg. jitter: Jitter (ms) measures the variability of packet delay. VoIP packets are sent at regular intervals from the sender to the receiver, but because of network latency the interval in which packets are received at their destination can vary, resulting in distorted or choppy audio.

< 20ms Good

> 30ms Poor

> 45ms Bad

Avg. round trip: Average Round Trip Time (RTT) is the most common measure of latency and is measured in milliseconds (ms). This measure is the round trip time for VoIP packets between endpoints. When latency is high, users will likely hear the words, but there will be delays in sentences and words.

< 200ms Good

> 200ms Poor

> 500ms Bad

Compensating For Poor Network Conditions

Lync uses a number of methods to compensate for poor network conditions. The rate at which some of these methods are utilised is included in the QoE data, and can give a good indication of whether a user’s call was audibly affected.

Avg. concealed samples ratio: Average ratio of concealed audio samples to the total number of samples. Concealment is a technique used to smooth out the abrupt transition that would usually be caused by dropped network packets. High values indicate that significant levels of loss concealment were applied, resulting in distorted or lost audio.

< 2% Good

> 7% Bad

Avg. compressed samples ratio: Average ratio of compressed audio samples to the total number of samples. Compressed audio is audio that has been compressed to help maintain call quality when a dropped network packet has been detected. High values indicate significant levels of sample compression, and result in audio sounding accelerated or distorted.

Avg. stretched samples ratio: Average ratio of stretched audio samples to the total number of samples. Stretched audio is audio that has been expanded to help maintain call quality when a dropped network packet has been detected. High values indicate significant levels of sample stretching caused by jitter, and result in audio sounding robotic or distorted.
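The guideline thresholds quoted in this section and the previous one can be collected into a small lookup table for quick triage. The Python sketch below is only one way to do that: the metric keys are our own names, and the "Unrated" label covers the ranges for which no rating is stated (for example, packet loss between 3% and 7%).

```python
from typing import Dict, Optional, Tuple

# metric -> (good below, poor above, bad above); None where no "Poor" line is quoted.
THRESHOLDS: Dict[str, Tuple[float, Optional[float], float]] = {
    "packet_loss_pct": (3.0, 7.0, 10.0),
    "jitter_ms": (20.0, 30.0, 45.0),
    "round_trip_ms": (200.0, 200.0, 500.0),
    "concealed_samples_pct": (2.0, None, 7.0),
}


def rate(metric: str, value: float) -> str:
    """Rate one averaged metric against the guideline thresholds."""
    good_below, poor_above, bad_above = THRESHOLDS[metric]
    if value > bad_above:
        return "Bad"
    if poor_above is not None and value > poor_above:
        return "Poor"
    if value < good_below:
        return "Good"
    # Assumption: values that fall between the quoted thresholds get no label.
    return "Unrated"


# Illustrative values, not taken from a real report.
sample = {
    "packet_loss_pct": 8.0,
    "jitter_ms": 12.0,
    "round_trip_ms": 620.0,
    "concealed_samples_pct": 1.0,
}
report = {name: rate(name, value) for name, value in sample.items()}
print(report)
```

Because these numbers are guideposts for unmanaged networks, the table is kept as plain data so the thresholds can be tightened for a managed network.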

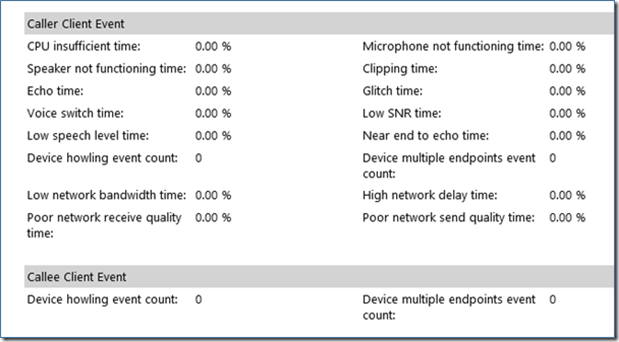

Client Conditions

As mentioned at the beginning of this guide, the client endpoint is a common variable which can affect Lync call quality. It is very useful to understand the client conditions around the call that you are investigating. The Quality of Experience data contains some useful client statistics to help you better understand a user’s endpoint connectivity, location and audio device.

Bandwidth estimates: Available bandwidth estimated on the client-side. Absolute thresholds are not that helpful, but when the client detects bandwidth is low (< 100 Kbps) audio quality can easily be impacted by other applications or network congestion.

Connection Type: Network connection type e.g. Ethernet (wired), WiFi

Caller inside: Whether the caller was inside the network (internal). e.g. True/False

Caller VPN: Whether the caller connected over a virtual private network e.g. True/False

Capture device: Capture (Mic) device name e.g. Polycom CX300

Render device: Render (Speaker) device name e.g. Polycom CX300

What triggers client warnings about call quality?

It’s also useful to know the events that can trigger a visual indicator of network conditions in the Lync client.

Here are the types of metrics that trigger the visual indicators followed by a high level explanation of those triggers:

- CPU insufficient time: Percentage of time there was insufficient CPU for processing current modalities and applications, causing audio distortion

- Speaker not functioning time: Percentage of time the speaker currently used is not functioning correctly, causing one-way audio issues. Check render buffer status

- Echo time: Percentage of time the device or setup is causing echo beyond the ability of the system to compensate:

  - Timestamp noise

  - Dynamic and Adaptive NLP attenuation

  - Post-AEC echo percentage

  - Microphone clipping due to far-end signal

- Voice switch time: Percentage of the call that had to be conducted in half duplex mode in order to prevent echo. In half duplex mode, communication can travel in only one direction at a time, similar to the way users take turns when communicating with a walkie-talkie. Flag the event when device is in voice switch mode

- Low speech level time: Percentage of time the user's speech is too low

- Device howling event count: Audio feedback loop detected (caused by multiple endpoints sharing an audio path). Check for howling/screeching from other endpoints in the room.

- Low network bandwidth time: Percentage of time the available bandwidth is insufficient for acceptable voice/video experience. Bandwidth limits dynamic based on codec

- Poor network receive quality time: Percentage of time the Concealed Packet Ratio on send stream is severe and introducing distortion

  - Concealed Packet Ratio: Good <2%, Bad >3%

- Microphone not functioning time: Percentage of time the Microphone currently used is not functioning correctly, causing one-way audio issues. Check capture buffer status

- Clipping time: Percentage of time the user's speech level is too high for the system to handle and is causing distortion. Microphone clipping during near end-only portions.

- Glitch time: Percentage of time there were severe glitches in audio rendering, causing distortion; can be caused by driver issues, deferred procedure call (DPC) storm (drivers), high CPU usage. Look for glitches after adaptive render buffer.

- Low SNR time: Percentage of time there was poor capture quality from user; distortion from noise or user being too far from microphone.

- Near end to echo time: Percentage of time the user's speech is too low compared to the echo being captured, limits ability to interrupt a user

- Device multiple endpoints event count: Multiple audio endpoints detected in the same session, system compensates by reducing render (speaker) volume.

- High network delay time: Percentage of time the network latency is severe and preventing interactive communication.

  - RTT: Good <300ms, Bad >500ms

- Poor network send quality time: Percentage of time the packet loss and jitter on receive stream is severe and introducing distortion.

  - Jitter: Good <20ms, Bad >30ms

  - Packet Loss: Good <3%, Bad >7%

Summary

This quick reference guide has provided an introduction to the Lync quality of experience data, what it means, and how to use it to pinpoint quality issues and start resolving them.

The next time a quality issue is reported, use your new superpower to dig into the Lync Monitoring Reports, find the associated Lync call and use the QoE data to your advantage. With your newfound understanding you will be surprised at what you will find and how useful it is.

After you are comfortable with the material in this reference continue your Superhero journey and read the fantastic Microsoft Networking Guide - Network Planning, Monitoring, and Troubleshooting with Lync Server (by Jigar Dani, Craig Hill, Jack Wight, Wei Zhong). This guide not only gives you deeper knowledge of the native Lync reports and how to identify and resolve specific quality issues in the broader UC ecosystem, but presents a model to prevent them in the first place! Superman would be proud.

References

- Networking Guide - Network Planning, Monitoring, and Troubleshooting with Lync Server

- Troubleshooting Lync Call Quality Locally with Snooper

- Microsoft Lync Server 2010: Work Smart Guide for Monitoring Server Reports

- Voice Quality Improvements in Lync Server 2010

- Key Tips to Get Started with Lync Monitoring & Reporting

- A Primer on Lync Audio Quality Metrics

- Lync Call Quality Methodology in Lync Server 2013

- Media Quality Summary Report in Lync Server 2013

- Mean Opinion Score and Metrics

- Call List Report in Lync Server 2013

- Media Traffic Network Usage

- Server Performance Report in Lync Server 2013

- Device Report in Lync Server 2013

- AudioSignal table in Lync Server 2013

- MediaLine view in Lync Server 2013

- Understanding Lync Video Quality Reports