Why building highly scalable applications is hard – Part 1

In this first part of a two-part article (click here for part 2) I’m going to discuss what causes applications to perform poorly and what you need to look at to enable them to process more work, faster and more efficiently. This discussion is relevant to ALL applications, from the smallest phone app to the largest web site hosted on-premises or in the cloud, and the biggest weather forecasting supercomputer.

The first thing to understand is that ALL applications have a Primary Bottleneck - unless you have infinite resources you can’t avoid it. The Primary Bottleneck is the first resource in an application that hits a hard limit as you increase the volume of work you want it to process. The application will normally still work after it hits this bottleneck, but any further attempt to increase the volume of work will normally just increase the time it takes to complete that work.
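To make that last point concrete, here is a minimal sketch of a resource that has hit its limit. It is a toy model, not a description of any real system: the function name `completion_time` is hypothetical, as are the limit of 4 operations at once and the 0.1 second service time, and it assumes the work is processed in full "waves".

```python
import math


def completion_time(operations: int, slots: int, service_time_s: float) -> float:
    """Time to finish a batch when at most `slots` operations can run at once.

    Toy model (assumption): every operation takes exactly `service_time_s`,
    and operations run in waves of up to `slots` at a time.
    """
    if operations <= 0:
        return 0.0
    waves = math.ceil(operations / slots)  # how many full or partial waves are needed
    return waves * service_time_s


if __name__ == "__main__":
    # Hypothetical resource: 4 operations at once, 0.1 s per operation.
    for n in (1, 4, 5, 8, 40):
        elapsed = completion_time(n, slots=4, service_time_s=0.1)
        print(f"{n:>3} operations -> {elapsed:.1f} s")
```

Up to the limit, adding work costs no extra time. Past the limit the application still works, but every extra batch of work simply makes the total time longer.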

Even massive web sites, like those run by Google and Amazon, have a Primary Bottleneck. If you manage to generate enough traffic against them they will hit their Primary Bottleneck and they will slow down - they have just done a really good job of making sure that the volume of traffic required to hit the Primary Bottleneck is so high that you will never normally be able to reach it. To find out what the Primary Bottleneck is, and to be sure it isn’t hit until the application is handling a greater volume of traffic than it needs to support in peak use, you need to undertake performance testing and analysis to find and remove as many bottlenecks as possible. This article will discuss the causes of bottlenecks. How you do the testing and analysis to find and fix bottlenecks will be the subject of some future articles.

To understand what causes bottlenecks and why it is actually quite hard to create highly responsive applications that can also scale to handle high volumes of work, we need to understand exactly what latency, throughput and concurrency are and how they are interdependent. We’ll define these terms by using an analogy of water flowing through a pipe:

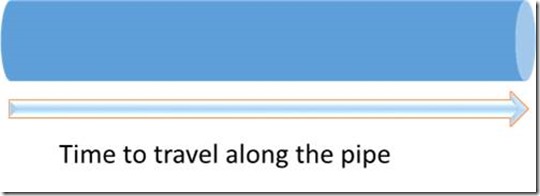

Latency

Latency is a measure of how long it takes an operation to complete and is normally measured in seconds. An operation could be an HTTP web request, a database transaction, a message or any other measurable activity – an example of latency for a web site is “It takes 200ms to load a web page”. In terms of a water pipe, it’s how long it takes the water to get from one end of the pipe to the other:

Latency is affected by the length of the pipe (the shorter the pipe, the lower the latency) and the speed that the water is travelling along it (the faster the water is travelling, the lower the latency).

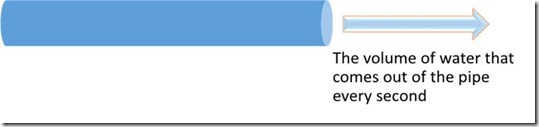

Throughput

Throughput is a measure of the rate at which work can be completed and is measured in operations per second. An example of throughput for a web site is “The web site can process 500 pages per second”. Using the pipe analogy, it’s the volume of water that comes out of the end of the pipe every second:

Throughput is affected by the diameter of the pipe (a wider pipe allows a greater volume of water to traverse the pipe at any moment in time) and the speed at which the water travels through the pipe (the higher the speed of the water, the more water can come out of the pipe every second).

Hold on! We can begin to see that there is a relationship between latency and throughput - they are both affected by the speed the water travels through the pipe. The thing that ties them together and allows us to define an equation to measure this relationship is…

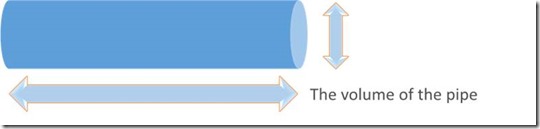

Concurrency

Concurrency is the number of operations that are being processed at any moment in time. An example of concurrency for a web site is “The web site can process 1000 HTTP requests simultaneously”. Using the pipe analogy, it is the volume of water that is in the pipe at any moment in time and is therefore determined by the length and diameter of the pipe:

Interesting… so Concurrency has a relationship with both Latency (affected by the length of the pipe) and Throughput (affected by the diameter of the pipe) and it turns out there is a simple equation that defines this interaction:

Concurrency = Latency x Throughput.

If you want to dig into this more, this is actually known as “Little’s Law” and forms part of queueing theory. As an example of how this works, if your web site has a requirement that HTTP requests have an execution time (latency) of 0.1 seconds and that it maintains a throughput of 1000 requests per second, then the application must be able to process 0.1 x 1000 = 100 concurrent active requests at any moment in time. If it can’t achieve this level of concurrency then the required latency and/or throughput CANNOT be met!
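The arithmetic above can be sketched in a few lines of Python. The function names are hypothetical and chosen only for this illustration, and the limit of 50 concurrent requests in the second half is an assumed figure used to show what happens when the required concurrency can’t be reached.

```python
def required_concurrency(latency_s: float, throughput_per_s: float) -> float:
    """Little's Law: Concurrency = Latency x Throughput."""
    return latency_s * throughput_per_s


def achievable_throughput(max_concurrency: float, latency_s: float) -> float:
    """Little's Law rearranged: Throughput = Concurrency / Latency."""
    return max_concurrency / latency_s


def resulting_latency(max_concurrency: float, throughput_per_s: float) -> float:
    """Little's Law rearranged: Latency = Concurrency / Throughput."""
    return max_concurrency / throughput_per_s


if __name__ == "__main__":
    # The example from the text: 0.1 s latency at 1000 requests per second.
    print(f"{required_concurrency(0.1, 1000):.0f} concurrent requests needed")

    # Assumption for illustration: the application can only hold 50
    # requests in flight at once. One of the two targets has to give.
    print(f"{achievable_throughput(50, 0.1):.0f} requests/s if latency stays at 0.1 s")
    print(f"{resulting_latency(50, 1000):.2f} s latency if throughput stays at 1000/s")
```

With only half of the required concurrency available, either the throughput falls to about half of its target or the latency has to shrink to about half, which is why the latency and throughput targets cannot both be met.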

So we now know that for our application to achieve low latency and high throughput we must also support high levels of concurrency. Let’s look at the things that affect throughput, latency and concurrency in any application using current IT technologies.

Core system resources

The performance characteristics of any application are ultimately defined by its use of five resources:

1. CPU

2. Memory

3. Disk

4. Network

5. Artificial resources

The maximum concurrency (and therefore throughput and latency) is going to be constrained by whichever one of these resources hits its limit first and becomes the Primary Bottleneck in the application. In the next part of this article we’ll look at each of these resources, understand what determines their latency, throughput and concurrency, and talk about what we try to do to avoid them becoming a bottleneck.
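As a rough illustration of “whichever resource hits its limit first”, the sketch below picks the Primary Bottleneck from a set of per-resource limits. Everything in it is an assumption made for the example: the function name `primary_bottleneck` is hypothetical, and the figures are invented numbers for the maximum operations per second each resource could sustain, not measurements of any real system.

```python
def primary_bottleneck(limits: dict) -> tuple:
    """Return (resource, limit) for the resource with the lowest limit.

    `limits` maps a resource name to the maximum number of operations per
    second the application could sustain if that resource were the only
    constraint.
    """
    name = min(limits, key=limits.get)  # the first limit reached as load rises
    return name, limits[name]


if __name__ == "__main__":
    # Invented figures, purely for illustration.
    limits = {
        "CPU": 5000,
        "Memory": 8000,
        "Disk": 1200,
        "Network": 3000,
        "Artificial resources": 2500,
    }
    resource, limit = primary_bottleneck(limits)
    print(f"Primary Bottleneck: {resource} at {limit} operations per second")
```

Raising the limit of the lowest resource does not remove the Primary Bottleneck, it just moves it to the resource with the next-lowest limit.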

Written by Richard Florance