Why building highly scalable applications is hard – Part 2

In the first part of this 2 part article I described what a Primary bottleneck is, defined what Latency, Throughput and Concurrency are and showed that they are entirely dependent on each other using an analogy of a pipe. In this part we’ll look at each of the core resources in current computer systems and discuss how they can affect the Latency, Throughput and Concurrency of an application.

CPU utilisation

CPU becomes a bottleneck at the point it becomes 100% utilised. Once you run out of spare CPU capacity, throughput and concurrency cannot increase anymore, so latency starts going up (it takes longer to get stuff done as more work is trying to share a fixed amount of CPU capacity). Historically this was resolved by making CPUs faster (reducing latency, which increases throughput). Nowadays it is more likely to be resolved by adding more CPUs (or cores) which doesn’t reduce latency but does increase concurrency and therefore throughput – assuming latency stays constant and the application can successfully distribute itself across multiple CPUs.

In theory each CPU core provides a concurrency of one (i.e. to process 100 concurrent operations you would need 100 CPU cores, as one core can only be processing one operation at any moment in time). However in practice this ends up not being the case because most operations don’t just need CPU and actually spend most of their time waiting for other (much slower!) resources like disk or network, which frees the CPU up to work on other things. It’s not uncommon for one CPU core to be able to handle hundreds of concurrent operations before it’s fully utilised, because over 99% of its time is spent waiting for other things to happen.

Making CPUs do large quantities of unnecessary work (like repeatedly executing the same compute intensive work rather than doing it once and caching it) reduces the CPUs ability to make itself free to process other operations and is a common cause of CPU bottlenecks.

In summary:

· CPU Speed determines the minimum latency.

· CPU utilisation determines the maximum throughput.

· Adding more CPU cores increases concurrency.

Memory capacity and speed

When memory runs out one of two things tend to happen – the application crashes or throughput falls and latency increases massively as memory is swapped out to (much slower) page files on disk. In some compute intensive applications with very low latency requirements (in the order of a few microseconds), the access time of main memory itself can start becoming a factor. However for most applications, memory is plentiful and fast and therefore rarely a bottleneck to performance – in fact caching resources in memory to avoid the higher latency or low throughput of other resources is the most common way of boosting application performance!

In summary:

· Memory access speed determines the minimum latency.

· Memory capacity determines the maximum throughput and concurrency.

Disk throughput and latency

Currently disk storage tends to be the poorest performing resource in most applications – it has the highest latency and lowest throughput of any component in a server. However it is improving all the time with the advent of Solid State Drives (SSDs) which almost eliminate the latency issues of mechanical drives (because they’re not actually drives, they’re just persistent random access memory) and generally have much better throughput as well.

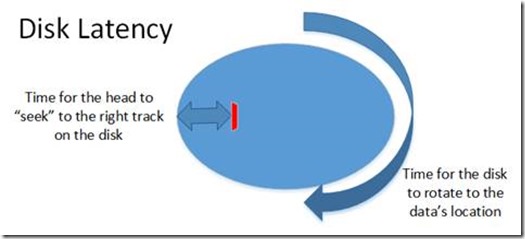

Mechanical hard drives are much slower than people tend to realise and many applications suffer from bad performance because of a lack of understanding of the performance characteristics of hard disks. On average the time to access any data (latency) on a mechanical drive is about 5 milliseconds (this is the average time it takes for the drive head to move to the required track on the disk platter and the disk to spin to the required point on the track) which limits a hard drives throughput to about 200 read or write requests a second. This is partially offset by the fact that if the data you need is all located in one place on the drive, then the drive head doesn’t need to move and the data can be streamed onto\off the disk at over 20Megabytes a second (which is why your PCs hard disk can stream high resolution videos without stuttering).

High end systems reduce these fairly severe performance limitations by grouping together several hard disks into an array and effectively treating them as a single unit by accessing them in parallel, which increases concurrency and throughput and therefore reduces the average latency.

In contrast, the latency of a current, enterprise class SSD is measured in microseconds, it has a throughput that can exceed over 40,000 reads\writes per second and has streaming rates that can achieve over a Gigabyte per second. Ultimately SSDs will end up as fast as main memory at which point disk performance issues will become a distant memory, but until then, and especially while mechanical drives are still around, disk will remain the slowest resource in most systems.

In summary:

· Disk seek time determines the minimum latency.

· Disk read\write rates determine the maximum throughput.

· Putting several disks in an array increases concurrency.

Network throughput and latency

Within data centres, network performance tends to be pretty good where the servers are locally connected and it not uncommon to obtain throughput of 1 or 10 Gigabits per second with a latency of less than 1ms. That being said, applications still want to avoid making unnecessary network round trips between servers to avoid adding too much latency. For example, many applications are guilty of making far more trips to a database server than they need to, when all the data needed could be obtained in one or two database calls, avoiding lots of unnecessary network latency and making better use of the available throughput.

However both throughput and latency become a much bigger deal over the internet where consumers may have poor DSL lines that only download at 1 or 2 Mbit\sec (and upload at an even lower rate!) and there is an end-to-end latency that can easily be many tens of milliseconds.

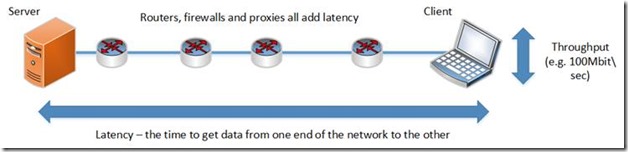

Latency tends to get overlooked when talking about networks. Just because a network has a throughput of 1Gbit\sec doesn’t necessarily mean it’s going to be fast (remember from the pipe analogy - throughput doesn’t take into account how long it takes to get through the pipe, just the rate that it comes out the end). On the internet, it’s not uncommon for a request to have to pass through several routers, and possibly a couple of firewalls on its journey between the server and the final recipient of the data. Each of these hops adds latency and it could easily take tens of milliseconds for a packet to get from one end to the other. (I’ve just pinged www.microsoft.com in the US from my desk in the UK and the request passed through 12 routers and had a roundtrip of 144ms – which means it took 72ms for my request to get to the server in the US and another 72ms to get the response back).

The way many websites try to reduce the effect of this high latency is by making use of the fact that often only part of the available network bandwidth (throughput) is utilised. If a browser downloads one request at a time (i.e. has a concurrency of one) it might only utilise 10% of the available throughput, so by increasing the concurrency we can more fully utilise the available throughput, which in turn reduces the average latency. This is achieved by getting the browser to make several HTTP requests simultaneously.

For example, say it takes 12ms to download an image from a web site and this uses 2Mbits\sec bandwidth. If we download six images one at a time then it will take a total of 72ms and use 2Mbit\sec bandwidth. If instead we download all six in parallel then, assuming we have 12Mbit\sec bandwidth available, we can now download the same six files in just 12ms. Even if the available throughput is less than 12Mbits\sec we’re still better off – say we actually only have 8Mbit\sec bandwidth available, we will make full use of our available bandwidth and we will still download all the content in around 24ms - about 1/3rd of the time compared to downloading one at a time.

In summary:

· Network bandwidth determines the maximum throughput and effective concurrency.

· Network length and intermediate devices determine the latency of travelling along the network.

Artificial resource limits

The first thing to clarify here is what exactly are “artificial resources”? These don’t necessarily have a physical manifestation but are usually software resources managed by an application which have an upper limit, or throttle, on their usage. Typical examples of these are:

· Locks to control concurrent access to an area of memory (e.g. critical sections, semaphores, events). These can affect throughput and latency significantly as they commonly reduce concurrency to one.

· Limits on the number of connections that can be made to a service (e.g. Database connections, TCP sockets).

· Limits on the creation of key resources (e.g. thread pools, limits on the number of active requests\transactions\operations).

All these artificially created throttles put a limit on the maximum concurrency that an application can support, and as we now know, if concurrency can’t increase, throughput will go down and\or latency will increase. These limits are normally in place for very good reasons: locks stop data from being corrupted. Other limits are because of design decisions (e.g. the number of possible TCP sockets is limited to 64K because it’s a 16 bit number) or are in place to protect a resource from hitting throughput limits when bad things might happen.

Overcoming concurrency throttling because of memory locks is generally one of the hardest of these problems to fix. It will require code changes and probably a redesign of the application to get rid of them. Often the best you can do is minimise the scope of the lock, to keep the time spent holding the lock to a minimum (reduce the locks latency and therefore increase the throughput).

Hard limits (like TCP sockets) are normally worked around in a couple of ways. The simplest is to scale out, to multiply the quantity of the resource available (increasing concurrency). The harder, but ultimately more efficient way, is to make better use of the available resource (e.g. reuse existing sockets rather than recycle, or put multiple sessions\requests over a single socket, which should increase throughput).

It’s the last case, where an artificial cap is in place to protect a resource which, in theory, should be the easiest to fix – because these limits are normally configurable and therefore all you should need to do is increase the limit until enough of the resource can be created so that it’s no longer a bottleneck. In reality it turns out that these can be some of the hardest performance issues to find, because:

· Often the limits are not obvious (they are system limits which you didn’t implement and aren’t even aware of), so it can be hard to find out what artificial limits even exist and what their impact is.

· The System doesn’t actually let you know that the limit has been hit. Commonly these limits get hit silently, with no tracing or logging being generated to indicate they have come into effect. All you know is that the performance isn’t very good for some reason. Quite often you can end up looking in the wrong place for the source of the problem, as it creates symptoms in other parts of the application that look like they might be the cause of the bottleneck, but aren’t!

· There are often several related limits that could be causing the problem, so even if you know which limits exist it can be hard to work out which one needs changing. (So you end up significantly increasing all of them, which gets rid of the bottleneck, but then you wonder why the limits were there in the first place…)

The first sign that you normally see that an artificial limit is being hit is when, regardless of what volume of traffic your application tries to process, you never hit a limit in one of the other major resources (CPU, Memory, Disk or Network) but latency gets worse as you try to increase the throughput. There is often no simple way of finding or resolving these issues other than lots of research, detective work and debugging. The ideal solution is to have good logging or monitoring which tells you that a limit is being hit and what it is.

In summary:

· Artificial limits are normally implemented by fixing the maximum concurrent access to a resource.

· The effect of them is that they put a cap on Throughput and therefore cause Latency to increase when that throughput limit is hit.

· They’re generally harder to find and fix than they should be!

So why does all this matter?

The fundamental problem is that the overall throughput of the solution is only as fast as the Primary Bottleneck. This can be demonstrated using our pipe analogy again. Imagine our pipe doesn’t have the same diameter along the whole of its length, but there is a narrower section in the middle…

It doesn’t matter how large the ends of the pipe are, water is only going to be able to flow from end-to-end as fast as it can get through the narrow constriction (the bottleneck!) in the middle, so the overall throughput and concurrency is going to be determined by the diameter of the narrow section, not the diameter of the end of the pipe.

So if an application is to have good scalability and remain responsive, every resource used by it has to be able to maintain high throughput and low latency as traffic volumes increase. The ability to stay highly responsive under load is limited by the Primary Bottleneck, but there are many things in large, complex applications which can cause bottlenecks and they all need to be understood and managed. It doesn’t matter if 99% of the execution path can support 10000 requests per second, if the other 1% only supports 10 - the application as a whole WILL only support 10 requests per second before response times start suffering and your customers start going elsewhere.

Finding the primary bottleneck is where performance testing comes in. You could try and guess where the primary bottleneck will be, but I can guarantee that at least 80% of the time you will guess wrong – and even if you guess right, you’ll have no idea at what level of load it’s going to start causing problems and what its impact will be. The only way to be sure where the primary bottleneck is, when it will hit and what its effect will be, is to put realistic load through the application, monitor it to see where problems start to occur and fix the problem to push the bottleneck further away. How you go about doing that will be the subject of future articles.

Written by Richard Florance