Code profiling IIS sites in deployed environments with Visual Studio code profiler

So, you’ve run a load test against your web site (you are performance testing your site, aren’t you?…), on your system test rig and you’ve found that the performance isn’t what you were hoping for. What do you do next to improve the sites performance? You could try guessing where the problems are and randomly start hacking bits of your code in the hope that it will speed up; or, you can take the slightly more scientific approach and measure your code to find out exactly where the bottlenecks are and fix them knowing it WILL improve the performance. The weapon of choice for this task is the code profiler.

There are several code profilers on the market for the .NET environment, all of which work in a fairly similar way, and much of the discussion here will apply to all of them. However being a Microsoft person, the one I’m going to talk about is the Profiler which ships with Visual Studio 2010 Premium and Ultimate editions (and previously with VS 2005\2008 Developer and Team System editions).

The challenge now is that I need to profile the web site on my test rig which doesn’t have Visual Studio (VS) installed on it (and the project’s build\deploy meister has just turned red and is fit to explode when I suggest installing a full copy of VS on his pre production server!). The answer is to install the lightweight, command line driven version of the VS profiler instead.

The msi installers for the 32 bit and 64 bit versions of the command line profiler can be found on the VS DVD in the folder “ \Standalone Profiler”, or they can be downloaded from here:

https://www.microsoft.com/downloads/en/details.aspx?FamilyID=c6db79bf-b076-413b-90c7-ea6457025ebd

Note that this only installs the profiler capture engine. You still need a full copy of Visual Studio 2010 premium or ultimate to view and analyse the output.

Get the right msi for the bit-ness (32 bit or 64 bit) of the server the profiler is to be installed on, copy it onto the server and install it. It gets installed to “c:\Program Files\Microsoft Visual Studio 9.0\Team Tools\Performance Tools”. It is a good idea to add this path onto your system PATH environment variable so that the profiler tools can be accessed from anywhere. Note that the 64 bit msi should always be installed on 64bit OS’s even if the binaries to be profiled are 32 bit.

If you want to profile on Windows Vista, Windows 7 or Server 2008 or later it will also be necessary to launch an administrator command prompt in which to run the profiler.

Which profiling mode to use?

The Visual Studio profiler can work in two very different modes, Sampling and Instrumentation\Tracing. It is important to understand the distinction between the two and what the benefits and limitations of each mode are.

Sample profiling

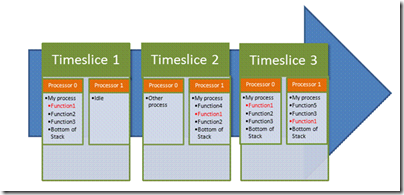

Sample profiling is effectively “statistical” profiling. This means the quality (or accuracy) of the data it produces is directly dependant on number of times the code executes during the profiler run. It works by time slicing the process(es) being profiled at regular intervals (by default every 10 million clock cycles) and takes a snapshot of the call stack of each thread executing at the time.

In the above example, the PC has two processor cores and the profiler has been setup to profile “My process”. Function1 appears in four stack traces and therefore has an ‘inclusive’ count of four. It’s at the top of the stack (i.e. it is running now) in two of the stacks and therefore has an ‘exclusive’ count of two.

The profiler then counts up how many times a function appears in a stack trace during the entire profile run and assumes (reasonably) that if one function appears in more snapshots than another function then it was probably either running for longer or being called more frequently than the other function (you can’t tell which when using the sample profiler). It should also be noted that it only tracks threads which were actually consuming CPU during the time slice. It does NOT track threads that were waiting on locks or for I\O to complete. Its usefulness is therefore limited to scenarios where the process is CPU bound. It is however very lightweight (it has minimal performance impact on the system), requires no instrumentation and is easy to use.

Trace (Instrumentation) profiling

Within the Visual Studio profiler, the terms “Instrumentation profiling” and “Trace profiling” are used all over the place. However these are just two different terms for same thing. I will refer to Trace profiling from now on, but feel free to substitute “Instrumentation” for “Trace” at any time.

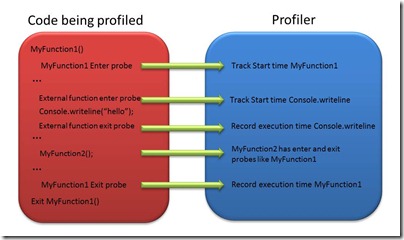

For Trace profiling to work, the binaries to be profiled must be instrumented first (hence the alternative name). This instrumentation involves inserting probes into the target code at the start and end of each function being instrumented, and before and after calls to external functions not being directly instrumented, so that the entry into and exit from every function call can be tracked. The following diagram demonstrates this.

This means that when the code is run under the profiler, the exact number of times a function is called and how long it takes to execute can be exactly measured. However, capturing this detail comes at a cost. There is a fairly significant processing overhead being introduced on every function call (usually at least 10% and can easily be 100% or more, but is more or less dependent on the size of the functions being profiled). The increase in code size and execution of additional code in the profiler can also cause some adverse CPU caching effects. This can be partially offset by not profiling small functions, where the profiler overhead is at its greatest, (this is an option of the profiler’s instrumenter – see later) at the cost of a loss of some detail in the profiler output.

The key benefit of Trace profiling is that an accurate measure of the CPU and wall clock (Elapsed) time spent in functions. This means we can accurately track the time spent waiting on locks, web service calls, SQL Server calls, disk I\O and any other events the code may stall on – which are extremely interesting in a distributed solution like a web site. Also all processes that load the instrumented binaries will get profiled automatically, i.e. you don’t need to explicitly attach to the processes you want to profile, which makes cold start profiling much easier. In my opinion these benefits are worth the extra effort of getting Trace profiling working. The main additional complexity in using Trace profiling is the effort in instrumenting the code to be profiled, especially if it is required to profile signed or GACed binaries.

The steps for sample profiling and trace profiling are very different, which is why there are separate sections below for the two modes. Sampling is much simpler to setup than Tracing. This is the main reason why it is generally a good idea to start with sample profiling and then move onto trace profiling when all avenues for analysis using sampling have been exhausted.

Sample profiling

Setting up for sample profiling

To prepare the environment for sample profiling IIS processes, start a command prompt, ensure the profiler folder is on the correct path, and run the following command:

Vsperfclrenv /globalsampleon

This command simply sets some system environment variables informing the .NET framework that we wish to attach the sampling profiler to the framework. Then reboot the system to ensure these settings are picked up by all .NET processes (this may not be necessary for sample profiling, but if you find the profiler isn’t working as expected rebooting is the first thing to try).

This is all the setup needed to enable sample profiling.

Capturing a sample profile

The Sample profiler will only profile processes it is specifically attached to. This means the profiler must either be used to launch the target process (which is hard with IIS as w3wp.exe needs to be started with some fairly esoteric command line parameters) or it must be attached to one or more existing processes. In the case of IIS, this will generally mean attaching to a running instances of w3wp.exe, so the web site needs to be warmed up by issuing an http request against it before it can be sample profiled.

The other key thing to think about with sample profiling is that, due to its statistical nature, it is necessary for the activity being profiling to either be long running or for it to repeat a large number of times, so that many instances of the activity can be sampled. The easiest way of doing this with a web based application is to run a load test against the site, or failing that, walk the sequence of pages you want to profile several times in your favourite browser (if it is just one page, then use F5 several times). In a low volume scenario it is probably worth increasing the sampling frequency of the profiler, so that more frequent samples are captured using the /timer:n switch on the vsperfcmd command line; where n is the number of clock ticks between samples, the default being 10,000,000.

All this means that sample profiling is not really up for profiling web site cold starts as most start up activity only occurs once (which is bad for the accuracy of the data). Even if you manage to attach to it fairly quickly after the process launches you will probably miss most of the interesting activity! If you need to understand why a web site takes a long time to start up, you will need to invest in trace profiling (or look at the xperf profiler https://msdn.microsoft.com/en-us/performance/cc825801.aspx, which is beyond the scope of this blog article).

To start sample profiling of a w3wp process, ensure your web site is warmed up (ideally your loadtest should already be executing, otherwise browsing to the sites home page will do the job) then enter the following command line:

Vsperfcmd –start:sample –output:<mysamplefile> -attach:<PID>

Where <PID> is the process Id of the process you want to profile (Task Manager is the easiest place to obtain this Id – add the PID column onto the Processes tab) and <mysamplefile> is the name of the output file to be created.

The profiler is now capturing data. If it is necessary to capture data from more than one process simultaneously (bear in mind each app pool will have its own w3wp process) then determine the processes PIDs and enter additional commands like the following to include profiling of them:

Vsperfcmd –attach:<PID2>

Once you think you have profiled for long enough (if you are profiling a load test leave it running for a couple of minutes) then run the following commands to stop the profiler:

Vsperfcmd –detach

Vsperfcmd –shutdown

This will leave the target processes running but you will now have a saved profile named <mysamplefile>.vsp. Before copying the .vsp file to the machine with VS installed on to analyse it, there is one more step needed to ensure all the relevant debug symbols are embedded in the .vsp file. Run the following command to embed the symbols into the vsp file:

vsperfreport –packsymbols –summary:all <mysamplefile>.vsp

<mysamplefile>.vsp can now be loaded into Visual Studio for analysis. Make sure symbols for any unmanaged binaries referenced in the vsp file are referenced in the symbol path.

Trace Profiling

Setting up for trace profiling

On the server to be profiled, to prepare the environment for trace profiling the IIS processes, start a command prompt and run the following command:

Vsperfclrenv /globaltraceon

Then reboot the system to ensure this is picked up by all .NET processes. This command simply sets some system environment variables informing the .NET framework that we wish to attach the trace profiler to the framework.

It is now necessary to instrument all the binaries that are to be profiled. This is where one or two gotchas can be hit. When a binary is instrumented, probes are inserted into it which changes the file. This means that if the binary is signed, the signature becomes invalid; this affects all strong named managed binaries. There are two ways to resolve this. Either resign the binaries after instrumentation, or (my preference, because it’s easier!) disable strong name verification using the sn.exe tool which ships with Visual Studio.

Also, it needs to be borne in mind that the profiler will only capture data for binaries that are instrumented. Anything not instrumented will be entirely “invisible” in the profile viewer. Therefore make sure that everything that might be relevant to the investigation is instrumented.

Vsinstr.exe is the command line tool used for instrumenting binaries. In its simplest form it takes the name of the binary to be instrumented. However I usually use the following command line:

Vsinstr /EXCLUDESMALLFUNCS /NOWARN:2001;2006;2013 FileToBeInstrumented.dll

This avoids instrumenting small functions (for reasons discussed earlier) and hides some common warnings that aren’t interesting. I usually create a batch file which instruments all the binaries in one go. This is useful for when a code refresh is deployed and you want to re-instrument the new release for profiling. The result of running this command is that the original binary is renamed “filename.dll.orig” and a new instrumented copy of the binary is left with the original filename. This means that to revert to the original binary you simply need to delete the instrumented binary and rename the .orig files to remove the .orig extension.

If I need to profile signed binaries (which includes anything in the GAC), rather than going through the pain of instrumenting the binaries and then having to get the build master to resign them for me, I simply disable signing verification for the duration of my profiling session using the command:

sn –Vr *,*

sn.exe comes as part of Visual Studio (any .NET based version). Obtain this from a machine with Visual Studio installed and copy it onto the server being profiled. Note that there are 32 bit and 64 bit versions of sn.exe (the 64 bit version is in an “x64” folder under the folder containing the 32 bit version) and there are separate strong name exclusion lists for 32 and 64 bit (use “sn –Vl” to see the current strong name settings). Make sure you use the right version of sn.exe for the bitness of your binaries on the machine being profiled.

Profiling GAC’ed binaries creates an additional challenge as only signed binaries can be dropped in the GAC (even with signing verification disabled). Therefore the way I handle that is to ensure the normal, uninstrumented binaries are successfully placed in the GAC and then instrument the binaries, in place, in the GAC.

To do this, start a command prompt and run “cd c:\windows\assembly\gac_msil”. Then “cd” into the folder with the name of the binary to be instrumented and then “cd” into the folder under this. This folder name will be based on the assembly version number. The binary that needs instrumenting will be in this folder. Use vsinstr (with the same syntax used above) on this DLL. This will work on any managed binaries in the GAC - you can just as easily instrument the .NET Framework itself or binaries forming part of applications such as BizTalk.

Capturing a Trace profile

There are a few things that you need to be aware of to successfully capture a trace profile of your instrumented code.

- If you are connecting to the server you are profiling on using a remote desktop connection then you need to make sure you use the /crosssession switch (can be abbreviated to /cs) on the vsperfcmd command line. I tend to always include this regardless as it doesn’t hurt to have this switch in place even if you aren’t using Terminal services.

- The user accounts that the process(es) you want to profile are making use of, need to be given permission to call the profiler. For IIS processes this includes the user accounts specified for the app pools the web sites are running under. Add a /user:<useraccount> switch on the vsperfcmd command line for every app pool account being used; add as many instances of this switch as necessary.

- To successfully capture a trace profile, the processes to be profiled must start AFTER the trace profiler has been initialised. However it is not necessary to actually start profiling immediately and the capturing of profile data can be turned on and off at will so that only specific scenarios are captured. Therefore unless you want to profile the cold start of IIS, you will want to add the /globaloff switch to the vsperfcmd command line to tell it not to immediately start capturing data.

Given these caveats, your command line to launch the instrumentation profiler will normally look something like this:

Vsperfcmd –start:Trace –output:<mytracefile> -cs –globaloff –user:<domain>\<user1> -user:<domain>\<user2>

I usually put this command line into a batch file...

After running this command, perform an iisreset to ensure all the IIS processes are restarted and will be profiled. This is ultra important if the web site was already started before running this command!

At any time, run “vsperfcmd –status” to check the profiler engine is running and which processes it is currently attached to. It is reassuring to run this after the IIS processes have restarted to verify that the profiler has attached to all the IIS processes successfully.

Now start running the scenario to be exercised (typically a load test of some sort) and when everything is warmed up and you’ve used “vsperfcmd –status” to make sure all the processes you are interested in have been picked up by the profiler, execute the command:

vsperfcmd –globalon

The profiler is now capturing data. Unlike sample profiling, it isn’t necessary to leave the profiler capturing for very long (in fact you don’t want to because the vsp file will get massive…). Ideally keep profiling for a bit longer than the total execution time of a single instance of your test scenario and then issue the command:

Vsperfcmd –globaloff

The vsp file for trace profiling can grow very large very quickly. On a busy server, even profiling for only 30 seconds can generate a multi-gigabyte trace file!

It is possible to issue the -globalon and -globaloff commands several times if required during the profile session.

Once you have captured all the profiling data you need, shutdown the profiler and stop the processes being profiled. To stop the profiler, enter:

Vsperfcmd –shutdown

You will now be told to stop or kill the processes being profiled before the profiler shutdown can complete. If you’re profiling IIS processes, the easiest way to do this is to run iisreset. If you’re profiling a windows service then stop the service.

Once all the processes being profiled have stopped, vsperfcmd will exit. You should now have a (potentially very large) .vsp file which can be analysed by loading it into Visual Studio. As with Sample profiling it is necessary to embed the debug symbols into the .vsp file before it is copied by executing the following command:

vsperfreport –packsymbols –summary:all <mytracefile>.vsp

<mytracefile>.vsp can now be loaded into Visual Studio for analysis.

Analysing the output

I’ll produce a future blog article on how I go about analysing the output of the profilers in Visual Studio. But there are some good articles out there already. Here are links to a couple to get you started:

https://msdn.microsoft.com/en-us/magazine/cc337887.aspx

https://msdn.microsoft.com/en-us/library/ms182372.aspx

Written by Richard Florance