Using WebGL to Render Kinect Webserver Image Data

Our 1.8 release includes a sample called WebserverBasics-WPF that shows how HTML5 web applications can leverage our webserver component and JavaScript API to get data from Kinect sensors. Among other things, the client-side code demonstrates how to bind Kinect image streams (specifically, the user viewer and background removal streams) to HTML canvas elements in order to render the sequence of images as they arrive so that users see a video feed of Kinect data.

In WebserverBasics-WPF, image data arriving from the server is first sent to a web worker thread, which copies it pixel by pixel into a canvas image data structure that can then be rendered via the canvas “2d” context. This solution was adequate for our needs, since it could process more than the minimum required 30 frames per second of Kinect image data. However, we would start dropping image frames and get a kind of stutter in the video feed when we attempted to display data from more than one image stream (e.g., user viewer + background removal streams) simultaneously. Even when displaying only one image stream at a time, there was a noticeable load on the computer's CPU, which reduced the amount of multitasking that the system could perform.

Now that the latest version of every major browser supports the WebGL API, we can get even better performance without requiring a dedicated background worker thread (which can occupy a full CPU core). While you should definitely test on your own hardware, using WebGL gave me more than a 3x improvement in image fps capability, and I don’t even have a high-end GPU!

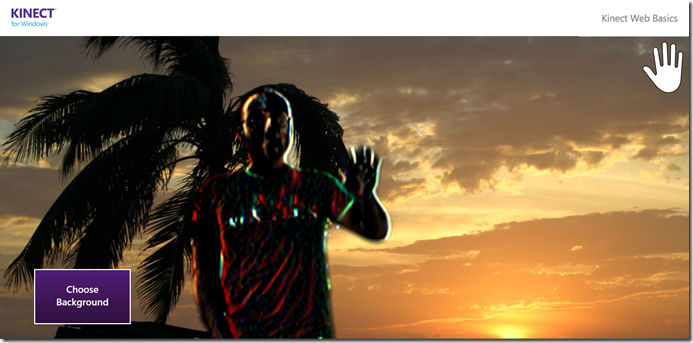

Also, once we are using WebGL, it is very easy to apply additional image processing or perform other kinds of tasks without adding latency or burdening the CPU. For example, we can use convolution kernels to perform edge detection on the image.

So let’s send some Kinect data over to WebGL!

Getting Started

- Make sure you have the Kinect for Windows v1.8 SDK and Toolkit installed

- Make sure you have a WebGL-compatible web browser installed

- Get the WebserverWebGL sample code, project and solution from CodePlex. To compile this sample you will also need Microsoft.Samples.Kinect.Webserver (also available via CodePlex and Toolkit Browser) and Microsoft.Kinect.Toolkit components (available via Toolkit Browser).

Note: This post focuses entirely on JavaScript code that runs in the browser client. It is meant to provide a quick overview of how to use WebGL functionality to render Kinect data. For more comprehensive material on WebGL itself, see the WebGL API Reference listed under Additional Resources.

Encapsulating WebGL Functionality

WebGL requires a non-trivial amount of setup code so, to avoid cluttering the main sample code in SamplePage.html, we defined a KinectWebGLHelper object constructor in the KinectWebGLHelper.js file. This object exposes 3 functions:

- bindStreamToCanvas(DOMString streamName, HTMLCanvasElement canvas)

  Binds the specified canvas element with the specified image stream.

  This function mirrors the KinectUIAdapter.bindStreamToCanvas function, but uses the canvas “webgl” context rather than the “2d” context.

- unbindStreamFromCanvas(DOMString streamName)

  Unbinds the specified image stream from the previously bound canvas element, if any.

  This function mirrors the KinectUIAdapter.unbindStreamFromCanvas function.

- getMetadata(DOMString streamName)

  Allows clients to access the “webgl” context managed by the KinectWebGLHelper object.

The code modifications (relative to code in WebserverBasics-WPF) necessary to get code in SamplePage.html to start using this helper object are fairly minimal. We replaced

    uiAdapter.bindStreamToCanvas(
        Kinect.USERVIEWER_STREAM_NAME,
        userViewerCanvasElement);
    uiAdapter.bindStreamToCanvas(
        Kinect.BACKGROUNDREMOVAL_STREAM_NAME,
        backgroundRemovalCanvasElement);

with

    glHelper = new KinectWebGLHelper(sensor);
    glHelper.bindStreamToCanvas(
        Kinect.USERVIEWER_STREAM_NAME,
        userViewerCanvasElement);
    glHelper.bindStreamToCanvas(
        Kinect.BACKGROUNDREMOVAL_STREAM_NAME,
        backgroundRemovalCanvasElement);

Additionally, we used the glHelper instance for general housekeeping such as clearing the canvas state whenever it’s supposed to become invisible.

The KinectWebGLHelper further encapsulates the logic to actually set up and manipulate the WebGL context within an “ImageMetadata” object constructor.

Setting up the WebGL context

The first step is to get the webgl context and set up the clear color. For historical reasons, some browsers still use “experimental-webgl” rather than “webgl” as the WebGL context name:

    var contextAttributes = { premultipliedAlpha: true };
    var glContext = imageCanvas.getContext('webgl', contextAttributes) ||
        imageCanvas.getContext('experimental-webgl', contextAttributes);
    glContext.clearColor(0.0, 0.0, 0.0, 0.0); // Set clear color to black, fully transparent

Defining a Vertex Shader

Next we define a geometry to render plus a corresponding vertex shader. When rendering a 3D scene to a 2D screen, the vertex shader would typically transform a 3D world-space coordinate into a 2D screen-space coordinate. Since we’re rendering 2D image data coming from a Kinect sensor onto a 2D screen, we just need to define a rectangle using 2D coordinates and map the Kinect image onto this rectangle as a texture, so the vertex shader ends up being pretty simple:

    // vertices representing entire viewport as two triangles which make up the whole
    // rectangle, in post-projection/clipspace coordinates
    var VIEWPORT_VERTICES = new Float32Array([
        -1.0, -1.0,
        1.0, -1.0,
        -1.0, 1.0,
        -1.0, 1.0,
        1.0, -1.0,
        1.0, 1.0]);
    var NUM_VIEWPORT_VERTICES = VIEWPORT_VERTICES.length / 2;

    // Texture coordinates corresponding to each viewport vertex
    var VERTEX_TEXTURE_COORDS = new Float32Array([
        0.0, 1.0,
        1.0, 1.0,
        0.0, 0.0,
        0.0, 0.0,
        1.0, 1.0,
        1.0, 0.0]);

    var vertexShader = createShaderFromSource(
        glContext.VERTEX_SHADER,
        "\
        attribute vec2 aPosition;\
        attribute vec2 aTextureCoord;\
        \
        varying highp vec2 vTextureCoord;\
        \
        void main() {\
            gl_Position = vec4(aPosition, 0, 1);\
            vTextureCoord = aTextureCoord;\
        }");

We specify the shader program as a literal string that gets compiled by the WebGL context. Note that you could instead choose to get the shader code from the server as a separate resource from a designated URI.
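The sample's createShaderFromSource helper and the creation of the `program` object used in the following snippets are not shown in this post. A minimal sketch of what such helpers could look like, using only standard WebGL calls, follows. The names `compileShader` and `linkProgram` and the explicit `gl` parameter are assumptions made for illustration, not the sample's actual code.

```javascript
// Hypothetical helper (assumed name): compile a single shader from source.
// `gl` is a WebGLRenderingContext such as the glContext obtained earlier.
function compileShader(gl, shaderType, source) {
    var shader = gl.createShader(shaderType);
    gl.shaderSource(shader, source);
    gl.compileShader(shader);
    if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
        var compileLog = gl.getShaderInfoLog(shader);
        gl.deleteShader(shader);
        throw new Error("Shader compilation failed: " + compileLog);
    }
    return shader;
}

// Hypothetical helper (assumed name): link both shaders into a program and
// make it the current program.
function linkProgram(gl, vertexShader, fragmentShader) {
    var program = gl.createProgram();
    gl.attachShader(program, vertexShader);
    gl.attachShader(program, fragmentShader);
    gl.linkProgram(program);
    if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
        var linkLog = gl.getProgramInfoLog(program);
        gl.deleteProgram(program);
        throw new Error("Program linking failed: " + linkLog);
    }
    // Uniform uploads and draw calls operate on the current program.
    gl.useProgram(program);
    return program;
}
```

With helpers like these, `program` could be obtained by calling linkProgram(glContext, vertexShader, fragmentShader) once both shaders have been compiled.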

We also need to let the shader know how to find its input data:

    var positionAttribute = glContext.getAttribLocation(
        program,
        "aPosition");
    glContext.enableVertexAttribArray(positionAttribute);

    var textureCoordAttribute = glContext.getAttribLocation(
        program,
        "aTextureCoord");
    glContext.enableVertexAttribArray(textureCoordAttribute);

    // Create a buffer used to represent whole set of viewport vertices
    var vertexBuffer = glContext.createBuffer();
    glContext.bindBuffer(
        glContext.ARRAY_BUFFER,
        vertexBuffer);
    glContext.bufferData(
        glContext.ARRAY_BUFFER,
        VIEWPORT_VERTICES,
        glContext.STATIC_DRAW);
    glContext.vertexAttribPointer(
        positionAttribute,
        2,
        glContext.FLOAT,
        false,
        0,
        0);

    // Create a buffer used to represent whole set of vertex texture coordinates
    var textureCoordinateBuffer = glContext.createBuffer();
    glContext.bindBuffer(
        glContext.ARRAY_BUFFER,
        textureCoordinateBuffer);
    glContext.bufferData(
        glContext.ARRAY_BUFFER,
        VERTEX_TEXTURE_COORDS,
        glContext.STATIC_DRAW);
    glContext.vertexAttribPointer(
        textureCoordAttribute,
        2,
        glContext.FLOAT,
        false,
        0,
        0);

    // Create a texture to contain images from Kinect server
    // Note: TEXTURE_MIN_FILTER, TEXTURE_WRAP_S and TEXTURE_WRAP_T parameters need to be set
    // so we can handle textures whose width and height are not a power of 2.
    var texture = glContext.createTexture();
    glContext.bindTexture(
        glContext.TEXTURE_2D,
        texture);
    glContext.texParameteri(
        glContext.TEXTURE_2D,
        glContext.TEXTURE_MAG_FILTER,
        glContext.LINEAR);
    glContext.texParameteri(
        glContext.TEXTURE_2D,
        glContext.TEXTURE_MIN_FILTER,
        glContext.LINEAR);
    glContext.texParameteri(
        glContext.TEXTURE_2D,
        glContext.TEXTURE_WRAP_S,
        glContext.CLAMP_TO_EDGE);
    glContext.texParameteri(
        glContext.TEXTURE_2D,
        glContext.TEXTURE_WRAP_T,
        glContext.CLAMP_TO_EDGE);
    glContext.bindTexture(
        glContext.TEXTURE_2D,
        null);
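Looking back at the VIEWPORT_VERTICES and VERTEX_TEXTURE_COORDS arrays defined earlier in this section, one way to read them is that each texture coordinate is the clip-space position rescaled from [-1, 1] to [0, 1], with the vertical axis flipped so that the first row of the uploaded image data (texture v = 0) lands at the top of the viewport (clip-space y = 1). The sketch below reproduces the texture coordinates from the vertices under that reading. The function name `clipToTextureCoord` is hypothetical and not part of the sample.

```javascript
// Hypothetical helper (not in the sample): map a clip-space position in
// [-1, 1] x [-1, 1] to a texture coordinate in [0, 1] x [0, 1], flipping
// the vertical axis.
function clipToTextureCoord(x, y) {
    return [(x + 1) / 2, (1 - y) / 2];
}

// Same two-triangle rectangle as in the sample.
var VIEWPORT_VERTICES = new Float32Array([
    -1.0, -1.0,
    1.0, -1.0,
    -1.0, 1.0,
    -1.0, 1.0,
    1.0, -1.0,
    1.0, 1.0]);

// Derive one texture coordinate per vertex instead of listing them by hand.
var derivedTextureCoords = new Float32Array(VIEWPORT_VERTICES.length);
for (var v = 0; v < VIEWPORT_VERTICES.length; v += 2) {
    var uv = clipToTextureCoord(VIEWPORT_VERTICES[v], VIEWPORT_VERTICES[v + 1]);
    derivedTextureCoords[v] = uv[0];
    derivedTextureCoords[v + 1] = uv[1];
}
```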

Defining a Fragment Shader

Fragment Shaders (also known as Pixel Shaders) are used to compute the appropriate color for each geometry fragment. This is where we’ll sample color values from our texture and also apply the chosen convolution kernel to process the image.

    // Convolution kernel weights (blurring effect by default)
    var CONVOLUTION_KERNEL_WEIGHTS = new Float32Array([
        1, 1, 1,
        1, 1, 1,
        1, 1, 1]);
    var TOTAL_WEIGHT = 0;
    for (var i = 0; i < CONVOLUTION_KERNEL_WEIGHTS.length; ++i) {
        TOTAL_WEIGHT += CONVOLUTION_KERNEL_WEIGHTS[i];
    }

    var fragmentShader = createShaderFromSource(
        glContext.FRAGMENT_SHADER,
        "\
        precision mediump float;\
        \
        varying highp vec2 vTextureCoord;\
        \
        uniform sampler2D uSampler;\
        uniform float uWeights[9];\
        uniform float uTotalWeight;\
        \
        /* Each sampled texture coordinate is 2 pixels apart rather than 1,\
        to make filter effects more noticeable. */ \
        const float xInc = 2.0/640.0;\
        const float yInc = 2.0/480.0;\
        const int numElements = 9;\
        const int numCols = 3;\
        \
        void main() {\
            vec4 centerColor = texture2D(uSampler, vTextureCoord);\
            vec4 totalColor = vec4(0,0,0,0);\
            \
            for (int i = 0; i < numElements; i++) {\
                int iRow = i / numCols;\
                int iCol = i - (numCols * iRow);\
                float xOff = float(iCol - 1) * xInc;\
                float yOff = float(iRow - 1) * yInc;\
                vec4 colorComponent = texture2D(\
                    uSampler,\
                    vec2(vTextureCoord.x+xOff, vTextureCoord.y+yOff));\
                totalColor += (uWeights[i] * colorComponent);\
            }\
            \
            float effectiveWeight = uTotalWeight;\
            if (uTotalWeight <= 0.0) {\
                effectiveWeight = 1.0;\
            }\
            /* Premultiply colors with alpha component for center pixel. */\
            gl_FragColor = vec4(\
                totalColor.rgb * centerColor.a / effectiveWeight,\
                centerColor.a);\
        }");

Again, we specify the shader program as a literal string that gets compiled by the WebGL context and, again, we need to let the shader know how to find its input data:

    // Associate the uniform texture sampler with TEXTURE0 slot
    var textureSamplerUniform = glContext.getUniformLocation(
        program,
        "uSampler");
    glContext.uniform1i(textureSamplerUniform, 0);

    // Since we're only using one single texture, we just make TEXTURE0 the active one
    // at all times
    glContext.activeTexture(glContext.TEXTURE0);
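The fragment shader also declares the uWeights and uTotalWeight uniforms, whose setup is not shown in this post. A minimal sketch of how they could be uploaded with the standard uniform1fv and uniform1f calls follows. The function name `setConvolutionKernel` and its parameters are assumptions, not part of the sample, and the program is assumed to already be the current one (via useProgram).

```javascript
// Hypothetical helper (assumed name): upload a 3x3 kernel and the sum of its
// weights to the uWeights and uTotalWeight uniforms declared in the shader.
// `program` must already be the current program (gl.useProgram).
function setConvolutionKernel(gl, program, weights) {
    if (weights.length !== 9) {
        throw new Error("Expected 9 kernel weights, got " + weights.length);
    }
    var totalWeight = 0;
    for (var i = 0; i < weights.length; ++i) {
        totalWeight += weights[i];
    }

    // "uWeights[0]" names the first element of the uniform array; uniform1fv
    // then fills as many consecutive elements as the typed array provides.
    var weightsUniform = gl.getUniformLocation(program, "uWeights[0]");
    gl.uniform1fv(weightsUniform, new Float32Array(weights));

    var totalWeightUniform = gl.getUniformLocation(program, "uTotalWeight");
    gl.uniform1f(totalWeightUniform, totalWeight);
    return totalWeight;
}
```

In the sample's terms, this could be called as setConvolutionKernel(glContext, program, CONVOLUTION_KERNEL_WEIGHTS) after the program has been linked.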

Drawing Kinect Image Data to Canvas

After getting the WebGL context ready to receive data from Kinect, we still need to let WebGL know whenever we have a new image to be rendered. So, every time that the KinectWebGLHelper object receives a valid image frame from the KinectSensor object, it calls the ImageMetadata.processImageData function, which looks like this:

    this.processImageData = function(imageBuffer, width, height) {
        if ((width != metadata.width) || (height != metadata.height)) {
            // Whenever the image width or height changes, update tracked metadata and canvas
            // viewport dimensions.
            this.width = width;
            this.height = height;
            this.canvas.width = width;
            this.canvas.height = height;
            glContext.viewport(0, 0, width, height);
        }

        glContext.bindTexture(
            glContext.TEXTURE_2D,
            texture);
        glContext.texImage2D(
            glContext.TEXTURE_2D,
            0,
            glContext.RGBA,
            width,
            height,
            0,
            glContext.RGBA,
            glContext.UNSIGNED_BYTE,
            new Uint8Array(imageBuffer));

        glContext.drawArrays(
            glContext.TRIANGLES,
            0,
            NUM_VIEWPORT_VERTICES);

        glContext.bindTexture(
            glContext.TEXTURE_2D,
            null);
    };
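When uploading RGBA data as UNSIGNED_BYTE, texImage2D needs the buffer to hold at least width * height * 4 bytes, and it generates an error if the buffer is smaller. A small guard like the one below could be run before the upload. The function name `isCompleteRgbaFrame` is hypothetical, the check is a sketch rather than something the sample is known to do, and it assumes imageBuffer is an ArrayBuffer, as the `new Uint8Array(imageBuffer)` call above suggests.

```javascript
var BYTES_PER_RGBA_PIXEL = 4;

// Hypothetical guard (assumed name): returns true only when imageBuffer is an
// ArrayBuffer large enough to hold a width x height RGBA image.
function isCompleteRgbaFrame(imageBuffer, width, height) {
    if (!(imageBuffer instanceof ArrayBuffer)) {
        return false;
    }
    if (!(width > 0) || !(height > 0)) {
        return false;
    }
    return imageBuffer.byteLength >= width * height * BYTES_PER_RGBA_PIXEL;
}
```

A frame that fails this check could simply be skipped, leaving the previously rendered image on the canvas.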

Customizing the Processing Effect Applied to Kinect Image

You might have noticed while reading this post that the default value for CONVOLUTION_KERNEL_WEIGHTS provided by this WebGL sample maps to the following 3x3 convolution kernel:

    1 1 1
    1 1 1
    1 1 1

and this corresponds to a blurring effect. The following table shows additional examples of effects that can be achieved using 3x3 convolution kernels:

| Effect | Kernel Weights | Resulting Image |
|---|---|---|
| Original | | |
| Blurring | | |
| Sharpening | | |
| Edge Detection | | |

It is very easy to experiment with different weights of a 3x3 kernel to apply these and other effects: change the CONVOLUTION_KERNEL_WEIGHTS coefficients and reload the application in the browser. Other kernel sizes can also be supported by changing the fragment shader and its associated setup code.
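As a starting point for such experiments, the sketch below lists some commonly used 3x3 kernels together with a CPU reference that mirrors the shader's weighted sum for a single-channel image. The sharpening and edge detection weights are widely used textbook values and are not necessarily the ones from the table in this post. The names `EXAMPLE_KERNELS` and `convolvePixel` are hypothetical, and the reference samples adjacent pixels rather than pixels 2 apart, to keep it short.

```javascript
// Commonly used 3x3 kernels, listed row by row (assumed values, see above).
var EXAMPLE_KERNELS = {
    original: [0, 0, 0, 0, 1, 0, 0, 0, 0],
    blurring: [1, 1, 1, 1, 1, 1, 1, 1, 1],
    sharpening: [0, -1, 0, -1, 5, -1, 0, -1, 0],
    edgeDetection: [-1, -1, -1, -1, 8, -1, -1, -1, -1]
};

// CPU reference for one output pixel of a single-channel image stored row by
// row in `pixels`. Like the shader, it divides the weighted sum by the total
// weight, or by 1 when the total weight is not positive. Out-of-range
// neighbors are clamped to the nearest edge pixel.
function convolvePixel(pixels, width, height, x, y, weights) {
    var total = 0;
    var totalWeight = 0;
    for (var i = 0; i < 9; ++i) {
        var row = Math.floor(i / 3);
        var col = i - 3 * row;
        var sampleX = Math.min(width - 1, Math.max(0, x + col - 1));
        var sampleY = Math.min(height - 1, Math.max(0, y + row - 1));
        total += weights[i] * pixels[sampleY * width + sampleX];
        totalWeight += weights[i];
    }
    return total / (totalWeight > 0 ? totalWeight : 1);
}
```

Any of these arrays could be wrapped in a Float32Array and used as the value of CONVOLUTION_KERNEL_WEIGHTS.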

Summary

The new WebserverWebGL sample is very similar in user experience to WebserverBasics-WPF, but the fact that it uses the WebGL API to leverage the power of your GPU means that your web applications can perform powerful kinds of Kinect data processing without burdening the CPU or adding latency to your user experience. We didn't add WebGL functionality previously because it was only recently that WebGL became supported in all major browsers. If you're not sure if your clients will have a WebGL-compatible browser but still want to guarantee they can display image-stream data, you should implement a hybrid approach that uses "webgl" canvas context when available and falls back to using "2d" context otherwise.
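A minimal sketch of that fallback choice is shown below. The function name `getBestCanvasContext` and the shape of its return value are assumptions made for illustration; only the standard canvas getContext call is used.

```javascript
// Hypothetical helper (assumed name): try the WebGL context names first and
// fall back to the "2d" context when neither is available.
function getBestCanvasContext(canvas, contextAttributes) {
    var webglNames = ["webgl", "experimental-webgl"];
    for (var i = 0; i < webglNames.length; ++i) {
        var gl = null;
        try {
            gl = canvas.getContext(webglNames[i], contextAttributes);
        } catch (e) {
            // Defensive: treat an exception the same as an unsupported context.
            gl = null;
        }
        if (gl) {
            return { kind: "webgl", context: gl };
        }
    }
    return { kind: "2d", context: canvas.getContext("2d") };
}
```

A caller could then bind streams through KinectWebGLHelper when `kind` is "webgl" and through KinectUIAdapter otherwise. The probe should run on the canvas that will actually be rendered to, because a canvas stays tied to the first context type it hands out.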

Happy coding!

Additional Resources

- WebserverWebGL sample code

- Kinect for Windows Programming Guide for Web Applications

- Kinect for Windows Sample Description for WebserverBasics-WPF

- Kinect for Windows JavaScript API Reference

- WebGL API Reference

Eddy Escardo-Raffo

Senior Software Development Engineer

Kinect for Windows