Using Kinect Background Removal with Multiple Users

Introduction: Background Removal in Kinect for Windows

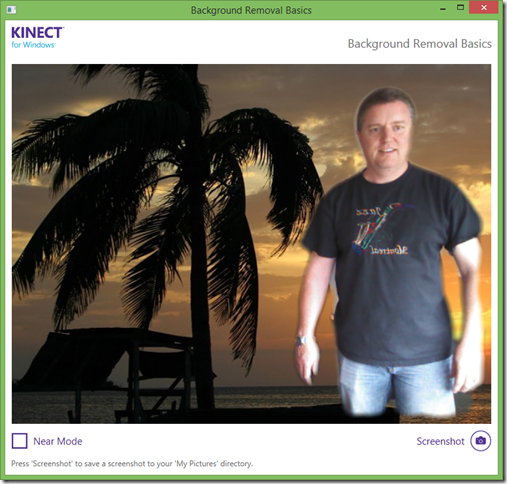

The 1.8 release of the Kinect for Windows Developer Toolkit includes a component for isolating a user from the background of the scene. The component is called the BackgroundRemovedColorStream. This capability has many possible uses, such as simulating chroma-key or “green-screen” replacement of the background – without needing to use an actual green screen; compositing a person’s image into a virtual environment; or simply blurring out the background, so that video conference participants can’t see how messy your office really is.

To use this feature in an application, you create the BackgroundRemovedColorStream, and then feed it each incoming color, depth, and skeleton frame when they are delivered by your Kinect for Windows sensor. You also specify which user you want to isolate, using their skeleton tracking ID. The BackgroundRemovedColorStream produces a sequence of color frames, in BGRA (blue/green/red/alpha) format. These frames are identical in content to the original color frames from the sensor, except that the alpha channel is used to distinguish foreground pixels from background pixels. Pixels that the background removal algorithm considers part of the background will have an alpha value of 0 (fully transparent), while foreground pixels will have their alpha at 255 (fully opaque). The foreground region is given a smoother edge by using intermediate alpha values (between 0 and 255) for a “feathering” effect. This image format makes it easy to combine the background-removed frames with other images in your application.
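
To illustrate why this format is convenient, here is a minimal sketch of blending one background-removed BGRA buffer over an opaque backdrop. It assumes straight (non-premultiplied) alpha and two byte arrays of equal length. The names AlphaCompositor and BlendOver are hypothetical and are not part of the toolkit; in a WPF application you would more likely let the framework do this blending by stacking Image elements.

```csharp
using System;

public static class AlphaCompositor
{
    // Blends a BGRA foreground over a BGRA backdrop, writing the result into backdrop.
    // Assumption: straight (non-premultiplied) alpha and an opaque backdrop.
    public static void BlendOver(byte[] foreground, byte[] backdrop)
    {
        if (foreground == null)
        {
            throw new ArgumentNullException("foreground");
        }

        if (backdrop == null)
        {
            throw new ArgumentNullException("backdrop");
        }

        if (foreground.Length != backdrop.Length || foreground.Length % 4 != 0)
        {
            throw new ArgumentException("Buffers must be equal-length BGRA arrays.");
        }

        for (int i = 0; i < foreground.Length; i += 4)
        {
            int alpha = foreground[i + 3];

            // Blend the blue, green, and red channels; adding 127 rounds to nearest.
            for (int c = 0; c < 3; ++c)
            {
                int blended = (foreground[i + c] * alpha) + (backdrop[i + c] * (255 - alpha));
                backdrop[i + c] = (byte)((blended + 127) / 255);
            }

            // The composited result stays fully opaque.
            backdrop[i + 3] = 255;
        }
    }
}
```

With alpha 0 the backdrop pixel is left unchanged, with alpha 255 it is replaced by the foreground pixel, and intermediate values produce the feathered edge.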

As a developer, you get the choice of which user you want in the foreground. The BackgroundRemovalBasics-WPF sample has some simple logic that selects the user nearest the sensor, and then continues to track the same user until they are no longer visible in the scene.

    private void ChooseSkeleton()
    {
        var isTrackedSkeltonVisible = false;
        var nearestDistance = float.MaxValue;
        var nearestSkeleton = 0;

        foreach (var skel in this.skeletons)
        {
            if (null == skel)
            {
                continue;
            }

            if (skel.TrackingState != SkeletonTrackingState.Tracked)
            {
                continue;
            }

            if (skel.TrackingId == this.currentlyTrackedSkeletonId)
            {
                isTrackedSkeltonVisible = true;
                break;
            }

            if (skel.Position.Z < nearestDistance)
            {
                nearestDistance = skel.Position.Z;
                nearestSkeleton = skel.TrackingId;
            }
        }

        if (!isTrackedSkeltonVisible && nearestSkeleton != 0)
        {
            this.backgroundRemovedColorStream.SetTrackedPlayer(nearestSkeleton);
            this.currentlyTrackedSkeletonId = nearestSkeleton;
        }
    }

Wait, only one person?

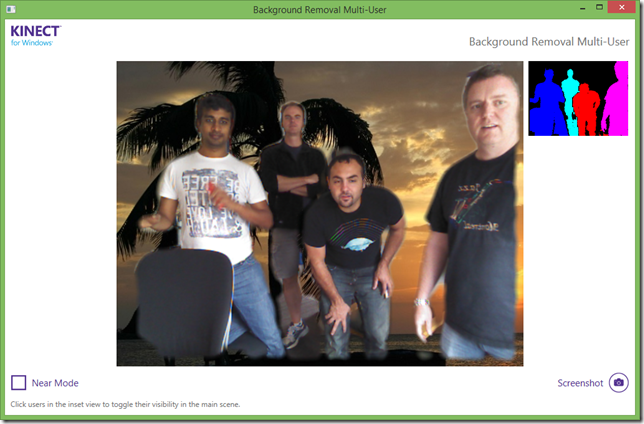

If you wanted to select more than one person from the scene to appear in the foreground, it would seem that you’re out of luck, because the BackgroundRemovedColorStream’s SetTrackedPlayer method accepts only one tracking ID. But you can work around this limitation by running two separate instances of the stream, and sending each one a different tracking ID. Each of these streams will produce a separate color image, containing one of the users. These images can then be combined into a single image, or used separately, depending on your application’s needs.

Wait, only two people?

In the most straightforward implementation of the multiple stream approach, you’d be limited to tracking just two people, due to an inherent limitation in the skeleton tracking capability of Kinect for Windows. Only two skeletons at a time can be tracked with full joint-level fidelity. The joint positions are required by the background removal implementation in order to perform its job accurately.

However, there is an additional trick we can apply, to escape the two-skeleton limit. This trick relies on an assumption that the people in the scene will not be moving at extremely high velocities (generally a safe bet). If a particular skeleton is not fully tracked for a frame or two, we can instead reuse the most recent frame in which that skeleton actually was fully tracked. Since the skeleton tracking API lets us choose which two skeletons to track at full fidelity, we can choose a different pair of skeletons each frame, cycling through up to six skeletons we wish to track, over three successive frames.
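
The cycling idea can be sketched on its own, without any sensor. The example below is only an illustration: SkeletonPairScheduler and PairForFrame are hypothetical names, and 0 is assumed to mean "no skeleton" for an unused slot. Given the list of tracking IDs we care about and a frame counter, it returns the pair to request at full fidelity for that frame.

```csharp
using System;

public static class SkeletonPairScheduler
{
    // Returns the two tracking IDs to request at full fidelity for a given frame.
    // Assumption: a value of 0 marks an empty slot.
    public static int[] PairForFrame(int[] wantedIds, long frameNumber)
    {
        if (wantedIds == null)
        {
            throw new ArgumentNullException("wantedIds");
        }

        if (frameNumber < 0)
        {
            throw new ArgumentOutOfRangeException("frameNumber");
        }

        var pair = new int[2];
        if (wantedIds.Length == 0)
        {
            return pair;
        }

        // Six IDs form three pairs, so each ID comes around once every three frames.
        int pairCount = (wantedIds.Length + 1) / 2;
        int first = (int)(frameNumber % pairCount) * 2;

        pair[0] = wantedIds[first];
        pair[1] = (first + 1 < wantedIds.Length) ? wantedIds[first + 1] : 0;
        return pair;
    }
}
```

For six IDs, frames 0, 1, and 2 select the first, second, and third pair, and frame 3 starts over with the first pair. With fewer IDs the cycle is shorter, so each user is refreshed more often.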

Each additional instance of BackgroundRemovedColorStream will place increased demands on CPU and memory. Depending on your own application’s needs and your hardware configuration, you may need to dial back the number of simultaneous users you can process in this way.

Wait, only six people?

Demanding, aren’t we? Sorry, the Kinect for Windows skeleton stream can monitor at most six people simultaneously (two at full fidelity, and four at lower fidelity). This is a hard limit.

Introducing a multi-user background removal sample

We’ve created a new sample application, called BackgroundRemovalMultiUser-WPF, to demonstrate how to use the technique described above to perform background removal on up to six people. We started with the code from the BackgroundRemovalBasics-WPF sample, and changed it to support multiple streams, one per user. The output from each stream is then overlaid on the backdrop image.

Factoring the code: TrackableUser

The largest change to the original sample was refactoring the application code that interacts with the BackgroundRemovedColorStream, so that we can have multiple copies of it running simultaneously. This code, in the new sample, resides in a new class named TrackableUser. Let’s take a brief tour of the interesting parts of this class.

The application can instruct TrackableUser to track a specific user by setting the TrackingId property appropriately.

    public int TrackingId
    {
        get
        {
            return this.trackingId;
        }

        set
        {
            if (value != this.trackingId)
            {
                if (null != this.backgroundRemovedColorStream)
                {
                    if (InvalidTrackingId != value)
                    {
                        this.backgroundRemovedColorStream.SetTrackedPlayer(value);
                        this.Timestamp = DateTime.UtcNow;
                    }
                    else
                    {
                        // Hide the last frame that was received for this user.
                        this.imageControl.Visibility = Visibility.Hidden;
                        this.Timestamp = DateTime.MinValue;
                    }
                }

                this.trackingId = value;
            }
        }
    }

The Timestamp property indicates when the TrackingId was most recently set to a valid value. We’ll see later how this property is used by the sample application’s user-selection logic.

    public DateTime Timestamp { get; private set; }

Whenever the application is notified that the default Kinect sensor has changed (at startup time, or when the hardware is plugged in or unplugged), it passes this information along to each TrackableUser by calling OnKinectSensorChanged. The TrackableUser, in turn, sets up or tears down its BackgroundRemovedColorStream accordingly.

    public void OnKinectSensorChanged(KinectSensor oldSensor, KinectSensor newSensor)
    {
        if (null != oldSensor)
        {
            // Remove sensor frame event handler.
            oldSensor.AllFramesReady -= this.SensorAllFramesReady;

            // Tear down the BackgroundRemovedColorStream for this user.
            this.backgroundRemovedColorStream.BackgroundRemovedFrameReady -=
                this.BackgroundRemovedFrameReadyHandler;
            this.backgroundRemovedColorStream.Dispose();
            this.backgroundRemovedColorStream = null;
            this.TrackingId = InvalidTrackingId;
        }

        this.sensor = newSensor;

        if (null != newSensor)
        {
            // Set up a new BackgroundRemovedColorStream for this user.
            this.backgroundRemovedColorStream = new BackgroundRemovedColorStream(newSensor);
            this.backgroundRemovedColorStream.BackgroundRemovedFrameReady +=
                this.BackgroundRemovedFrameReadyHandler;
            this.backgroundRemovedColorStream.Enable(
                newSensor.ColorStream.Format,
                newSensor.DepthStream.Format);

            // Add an event handler to be called when there is new frame data from the sensor.
            newSensor.AllFramesReady += this.SensorAllFramesReady;
        }
    }

Each time the Kinect sensor produces a matched set of depth, color, and skeleton frames, we forward each frame’s data along to the BackgroundRemovedColorStream.

    private void SensorAllFramesReady(object sender, AllFramesReadyEventArgs e)
    {
        ...
        if (this.IsTracked)
        {
            using (var depthFrame = e.OpenDepthImageFrame())
            {
                if (null != depthFrame)
                {
                    // Process depth data for background removal.
                    this.backgroundRemovedColorStream.ProcessDepth(
                        depthFrame.GetRawPixelData(),
                        depthFrame.Timestamp);
                }
            }

            using (var colorFrame = e.OpenColorImageFrame())
            {
                if (null != colorFrame)
                {
                    // Process color data for background removal.
                    this.backgroundRemovedColorStream.ProcessColor(
                        colorFrame.GetRawPixelData(),
                        colorFrame.Timestamp);
                }
            }

            using (var skeletonFrame = e.OpenSkeletonFrame())
            {
                if (null != skeletonFrame)
                {
                    // Save skeleton frame data for subsequent processing.
                    CopyDataFromSkeletonFrame(skeletonFrame);

                    // Locate the most recent data in which this user was fully tracked.
                    bool isUserPresent = UpdateTrackedSkeletonsArray();

                    // If we have an array in which this user is fully tracked,
                    // process the skeleton data for background removal.
                    if (isUserPresent && null != this.skeletonsTracked)
                    {
                        this.backgroundRemovedColorStream.ProcessSkeleton(
                            this.skeletonsTracked,
                            skeletonFrame.Timestamp);
                    }
                }
            }
        }
        ...
    }

The UpdateTrackedSkeletonsArray method implements the logic to reuse skeleton data from an older frame when the newest frame contains the user’s skeleton, but not in a fully-tracked state. It also informs the caller whether the user with the requested tracking ID is still present in the scene.

    private bool UpdateTrackedSkeletonsArray()
    {
        // Determine if this user is still present in the scene.
        bool isUserPresent = false;
        foreach (var skeleton in this.skeletonsNew)
        {
            if (skeleton.TrackingId == this.TrackingId)
            {
                isUserPresent = true;
                if (skeleton.TrackingState == SkeletonTrackingState.Tracked)
                {
                    // User is fully tracked: save the new array of skeletons,
                    // and recycle the old saved array for reuse next time.
                    var temp = this.skeletonsTracked;
                    this.skeletonsTracked = this.skeletonsNew;
                    this.skeletonsNew = temp;
                }

                break;
            }
        }

        if (!isUserPresent)
        {
            // User has disappeared; stop trying to track.
            this.TrackingId = TrackableUser.InvalidTrackingId;
        }

        return isUserPresent;
    }
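
The buffer swap above can be tried in isolation. The sketch below models the same pattern with plain types so that it runs without a sensor; SkeletonInfo and TrackedFrameCache are hypothetical stand-ins, not SDK or sample types, and the Z field exists only to make the retained frame observable.

```csharp
using System;

// Hypothetical stand-in for the SDK's Skeleton type, reduced to what the sketch needs.
public struct SkeletonInfo
{
    public int TrackingId;
    public bool IsFullyTracked;
    public float Z;
}

public sealed class TrackedFrameCache
{
    private SkeletonInfo[] newest;
    private SkeletonInfo[] lastTracked;
    private bool hasTrackedFrame;

    public TrackedFrameCache(int capacity)
    {
        this.newest = new SkeletonInfo[capacity];
        this.lastTracked = new SkeletonInfo[capacity];
    }

    // The most recent frame in which the user was fully tracked, or null if none yet.
    public SkeletonInfo[] LastTracked
    {
        get { return this.hasTrackedFrame ? this.lastTracked : null; }
    }

    // Copies a new frame in, then swaps buffers if the user is fully tracked in it.
    // Returns false when the user is absent from the new frame.
    public bool Update(SkeletonInfo[] frame, int trackingId)
    {
        if (frame == null || frame.Length != this.newest.Length)
        {
            throw new ArgumentException("Frame must match the cache capacity.");
        }

        Array.Copy(frame, this.newest, frame.Length);

        foreach (var skeleton in this.newest)
        {
            if (skeleton.TrackingId != trackingId)
            {
                continue;
            }

            if (skeleton.IsFullyTracked)
            {
                // Keep the new frame; recycle the old buffer for the next copy.
                var temp = this.lastTracked;
                this.lastTracked = this.newest;
                this.newest = temp;
                this.hasTrackedFrame = true;
            }

            return true;
        }

        return false;
    }
}
```

Swapping the two arrays avoids allocating a new buffer per frame, which matters when up to six of these caches are updated thirty times per second.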

Whenever the BackgroundRemovedColorStream produces a frame, we copy its BGRA data to the bitmap that is the underlying Source for an Image element in the MainWindow. This causes the updated frame to appear within the application’s window, overlaid on the background image.

    private void BackgroundRemovedFrameReadyHandler(
        object sender,
        BackgroundRemovedColorFrameReadyEventArgs e)
    {
        using (var backgroundRemovedFrame = e.OpenBackgroundRemovedColorFrame())
        {
            if (null != backgroundRemovedFrame && this.IsTracked)
            {
                int width = backgroundRemovedFrame.Width;
                int height = backgroundRemovedFrame.Height;

                WriteableBitmap foregroundBitmap =
                    this.imageControl.Source as WriteableBitmap;

                // If necessary, allocate new bitmap. Set it as the source of the Image
                // control.
                if (null == foregroundBitmap ||
                    foregroundBitmap.PixelWidth != width ||
                    foregroundBitmap.PixelHeight != height)
                {
                    foregroundBitmap = new WriteableBitmap(
                        width,
                        height,
                        96.0,
                        96.0,
                        PixelFormats.Bgra32,
                        null);

                    this.imageControl.Source = foregroundBitmap;
                }

                // Write the pixel data into our bitmap.
                foregroundBitmap.WritePixels(
                    new Int32Rect(0, 0, width, height),
                    backgroundRemovedFrame.GetRawPixelData(),
                    width * sizeof(uint),
                    0);

                // A frame has been delivered; ensure that it is visible.
                this.imageControl.Visibility = Visibility.Visible;
            }
        }
    }

Limiting the number of users to track

As mentioned earlier, the maximum number of trackable users may have a practical limit, depending on your hardware. To specify the limit, we define a constant in the MainWindow class:

    private const int MaxUsers = 6;

You can modify this constant to have any value from 2 to 6. (Values larger than 6 are not useful, as Kinect for Windows does not track more than 6 users.)

Selecting users to track: The User View

We want to provide a convenient way to choose which users will be tracked for background removal. To do this, we present a view of the detected users in a small inset. By clicking on the users displayed in this inset, we can select which of those users are associated with our TrackableUser objects, causing them to be included in the foreground.

We update the user view each time a depth frame is received by the sample’s main window.

    private void UpdateUserView(DepthImageFrame depthFrame)
    {
        ...
        // Store the depth data.
        depthFrame.CopyDepthImagePixelDataTo(this.depthData);
        ...
        // Write the per-user colors into the user view bitmap, one pixel at a time.
        this.userViewBitmap.Lock();

        unsafe
        {
            uint* userViewBits = (uint*)this.userViewBitmap.BackBuffer;
            fixed (uint* userColors = &this.userColors[0])
            {
                // Walk through each pixel in the depth data.
                fixed (DepthImagePixel* depthData = &this.depthData[0])
                {
                    DepthImagePixel* depthPixel = depthData;
                    DepthImagePixel* depthPixelEnd = depthPixel + this.depthData.Length;
                    while (depthPixel < depthPixelEnd)
                    {
                        // Look up a pixel color based on the player index.
                        // Store the color in the user view bitmap's buffer.
                        *(userViewBits++) = *(userColors + (depthPixel++)->PlayerIndex);
                    }
                }
            }
        }

        this.userViewBitmap.AddDirtyRect(new Int32Rect(0, 0, width, height));
        this.userViewBitmap.Unlock();
    }

This code fills the user view bitmap with solid-colored regions representing each of the detected users, as distinguished by the value of the PlayerIndex field at each pixel in the depth frame.
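
For readers who prefer to avoid unsafe code, the same palette lookup can be sketched with ordinary arrays. This is an illustration rather than the sample's implementation: UserViewPalette and Fill are hypothetical names, the player indices are assumed to have already been copied into a short array, and unknown indices fall back to palette entry 0, which is assumed to be the background color.

```csharp
using System;

public static class UserViewPalette
{
    // Writes one color per pixel into output, chosen by that pixel's player index.
    public static void Fill(short[] playerIndices, uint[] palette, uint[] output)
    {
        if (playerIndices == null || palette == null || output == null)
        {
            throw new ArgumentNullException();
        }

        if (palette.Length == 0 || output.Length < playerIndices.Length)
        {
            throw new ArgumentException("Palette must be non-empty and output large enough.");
        }

        for (int i = 0; i < playerIndices.Length; ++i)
        {
            int index = playerIndices[i];

            // Out-of-range indices use entry 0 instead of reading past the palette.
            output[i] = (index >= 0 && index < palette.Length) ? palette[index] : palette[0];
        }
    }
}
```

The pointer-based loop in the sample avoids per-element bounds checks, which is a reasonable trade for a loop that touches every depth pixel on every frame.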

The main window responds to a mouse click within the user view by locating the corresponding pixel in the most recent depth frame, and using its PlayerIndex to look up the user’s TrackingId in the most recent skeleton data. The TrackingId is passed along to the ToggleUserTracking method, which will attempt to toggle that user between the tracked and untracked states.

    private void UserViewMouseLeftButtonDown(object sender, MouseButtonEventArgs e)
    {
        // Determine which pixel in the depth image was clicked.
        Point p = e.GetPosition(this.UserView);
        int depthX =
            (int)(p.X * this.userViewBitmap.PixelWidth / this.UserView.ActualWidth);
        int depthY =
            (int)(p.Y * this.userViewBitmap.PixelHeight / this.UserView.ActualHeight);
        int pixelIndex = (depthY * this.userViewBitmap.PixelWidth) + depthX;
        if (pixelIndex >= 0 && pixelIndex < this.depthData.Length)
        {
            // Find the player index in the depth image. If non-zero, toggle background
            // removal for the corresponding user.
            short playerIndex = this.depthData[pixelIndex].PlayerIndex;
            if (playerIndex > 0)
            {
                // playerIndex is 1-based, skeletons array is 0-based, so subtract 1.
                this.ToggleUserTracking(this.skeletons[playerIndex - 1].TrackingId);
            }
        }
    }
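
The coordinate mapping in this handler can be sketched as a small pure function. UserViewHitTest and ToPixelIndex are hypothetical names. Unlike the handler above, this version checks each axis separately, on the assumption that a click exactly on the right or bottom edge should be rejected rather than mapped to a pixel on a neighboring row.

```csharp
using System;

public static class UserViewHitTest
{
    // Maps a click position in a scaled view to an index into a row-major pixel array.
    // Returns -1 when the click falls outside the image or the view has no size.
    public static int ToPixelIndex(
        double clickX,
        double clickY,
        double viewWidth,
        double viewHeight,
        int pixelWidth,
        int pixelHeight)
    {
        if (viewWidth <= 0 || viewHeight <= 0)
        {
            return -1;
        }

        // Scale from view coordinates to pixel coordinates.
        int x = (int)Math.Floor(clickX * pixelWidth / viewWidth);
        int y = (int)Math.Floor(clickY * pixelHeight / viewHeight);

        if (x < 0 || x >= pixelWidth || y < 0 || y >= pixelHeight)
        {
            return -1;
        }

        return (y * pixelWidth) + x;
    }
}
```

For example, a 320 by 240 depth image shown in a 160 by 120 inset maps a click at (80, 60) to pixel (160, 120), which is index 120 * 320 + 160 = 38560.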

Picking which users will be tracked

When MaxUsers is less than 6, we need some logic to handle a click on an untracked user when we are already tracking the maximum number of users. We choose to stop tracking the user who was tracked earliest (based on timestamp), and start tracking the newly chosen user immediately. This logic is implemented in ToggleUserTracking.

    private void ToggleUserTracking(int trackingId)
    {
        if (TrackableUser.InvalidTrackingId != trackingId)
        {
            DateTime minTimestamp = DateTime.MaxValue;
            TrackableUser trackedUser = null;
            TrackableUser staleUser = null;

            // Attempt to find a TrackableUser with a matching TrackingId.
            foreach (var user in this.trackableUsers)
            {
                if (user.TrackingId == trackingId)
                {
                    // Yes, this TrackableUser has a matching TrackingId.
                    trackedUser = user;
                }

                // Find the "stale" user (the trackable user with the earliest timestamp).
                if (user.Timestamp < minTimestamp)
                {
                    staleUser = user;
                    minTimestamp = user.Timestamp;
                }
            }

            if (null != trackedUser)
            {
                // User is being tracked: toggle to not tracked.
                trackedUser.TrackingId = TrackableUser.InvalidTrackingId;
            }
            else
            {
                // User is not currently being tracked: start tracking, by reusing
                // the "stale" trackable user.
                staleUser.TrackingId = trackingId;
            }
        }
    }
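
To see the eviction behavior without a sensor, the toggle logic can be modeled with a plain slot type. TrackingSlot and SlotToggler are hypothetical stand-ins for TrackableUser and MainWindow, and the current time is passed in explicitly so that the ordering is deterministic. Because an empty slot carries DateTime.MinValue as its timestamp, empty slots are always reused before any occupied slot is evicted.

```csharp
using System;

// Hypothetical stand-in for TrackableUser: a tracking ID plus the time it was assigned.
public sealed class TrackingSlot
{
    public const int InvalidTrackingId = 0;

    public TrackingSlot()
    {
        this.TrackingId = InvalidTrackingId;
        this.Timestamp = DateTime.MinValue;
    }

    public int TrackingId { get; private set; }

    public DateTime Timestamp { get; private set; }

    public void Assign(int trackingId, DateTime now)
    {
        this.TrackingId = trackingId;
        this.Timestamp = (trackingId == InvalidTrackingId) ? DateTime.MinValue : now;
    }
}

public static class SlotToggler
{
    // Stops tracking trackingId if a slot holds it; otherwise reuses the stalest slot.
    public static void Toggle(TrackingSlot[] slots, int trackingId, DateTime now)
    {
        if (slots == null || slots.Length == 0 || trackingId == TrackingSlot.InvalidTrackingId)
        {
            return;
        }

        TrackingSlot match = null;
        TrackingSlot stale = slots[0];

        foreach (var slot in slots)
        {
            if (slot.TrackingId == trackingId)
            {
                match = slot;
            }

            if (slot.Timestamp < stale.Timestamp)
            {
                stale = slot;
            }
        }

        if (match != null)
        {
            match.Assign(TrackingSlot.InvalidTrackingId, now);
        }
        else
        {
            stale.Assign(trackingId, now);
        }
    }
}
```

With two slots, toggling users 5, 6, and 7 in that order fills both slots and then evicts user 5, the earliest assignment; toggling 6 again frees its slot.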

Once we’ve determined which users will be tracked by the TrackableUser objects, we need to ensure that those users are being targeted for tracking by the skeleton stream on a regular basis (at least once every three frames). UpdateChosenSkeletons implements this using a round-robin scheme.

    private void UpdateChosenSkeletons()
    {
        KinectSensor sensor = this.sensorChooser.Kinect;
        if (null != sensor)
        {
            // Choose which of the users will be tracked in the next frame.
            int trackedUserCount = 0;
            for (int i = 0; i < MaxUsers && trackedUserCount < this.trackingIds.Length; ++i)
            {
                // Get the trackable user for consideration.
                var trackableUser = this.trackableUsers[this.nextUserIndex];
                if (trackableUser.IsTracked)
                {
                    // If this user is currently being tracked, copy its TrackingId to the
                    // array of chosen users.
                    this.trackingIds[trackedUserCount++] = trackableUser.TrackingId;
                }

                // Update the index for the next user to be considered.
                this.nextUserIndex = (this.nextUserIndex + 1) % MaxUsers;
            }

            // Fill any unused slots with InvalidTrackingId.
            for (int i = trackedUserCount; i < this.trackingIds.Length; ++i)
            {
                this.trackingIds[i] = TrackableUser.InvalidTrackingId;
            }

            // Pass the chosen tracking IDs to the skeleton stream.
            sensor.SkeletonStream.ChooseSkeletons(this.trackingIds[0], this.trackingIds[1]);
        }
    }

Combining multiple foreground images

Now that we can have multiple instances of TrackableUser, each producing a background-removed image of a user, we need to combine those images on-screen. We do this by creating multiple overlapping Image elements (one per trackable user), each parented by the MaskedColorImages element, which itself is a sibling of the Backdrop element. Wherever the background has been removed from each image, the backdrop image will show through.

As each image is created, we associate it with its own TrackableUser.

    public MainWindow()
    {
        ...
        // Create one Image control per trackable user.
        for (int i = 0; i < MaxUsers; ++i)
        {
            Image image = new Image();
            this.MaskedColorImages.Children.Add(image);
            this.trackableUsers[i] = new TrackableUser(image);
        }
    }

To capture and save a snapshot of the current composited image, we create two VisualBrush objects, one for the Backdrop and one for MaskedColorImages. We draw a rectangle with each of these brushes into a DrawingVisual, render that visual into a bitmap, and then write the bitmap to a file.

    private void ButtonScreenshotClick(object sender, RoutedEventArgs e)
    {
        ...
        var dv = new DrawingVisual();
        using (var dc = dv.RenderOpen())
        {
            // Render the backdrop.
            var backdropBrush = new VisualBrush(Backdrop);
            dc.DrawRectangle(
                backdropBrush,
                null,
                new Rect(new Point(), new Size(colorWidth, colorHeight)));

            // Render the foreground.
            var colorBrush = new VisualBrush(MaskedColorImages);
            dc.DrawRectangle(
                colorBrush,
                null,
                new Rect(new Point(), new Size(colorWidth, colorHeight)));
        }

        renderBitmap.Render(dv);
        ...
    }

Summary

While the BackgroundRemovedColorStream is limited to tracking only one user at a time, the new BackgroundRemovalMultiUser-WPF sample demonstrates that you can run multiple stream instances to track up to six users simultaneously. When using this technique, you should consider – and measure – the increased resource demands (CPU and memory) that the additional background removal streams will have, and determine for yourself how many streams your configuration can handle.

We hope that this sample opens up new possibilities for using background removal in your own applications.

John Elsbree

Principal Software Development Engineer

Kinect for Windows