Using Kinect Webserver to Expose Speech Events to Web Clients

In our 1.8 release, we made it easy to create Kinect-enabled HTML5 web applications. This is possible because we added an extensible webserver for Kinect data, along with a JavaScript API that gives developers some great functionality right out of the box:

- Interactions: hand pointer movements, plus press and grip events, useful for controlling a cursor, buttons and other UI

- User Viewer: visual representation of the users currently visible to the Kinect sensor. Uses different colors to indicate different user states

- Background Removal: “Green screen” image stream for a single person at a time

- Skeleton: standard skeleton data such as tracking state, joint positions, joint orientations, etc.

- Sensor Status: Events corresponding to sensor connection/disconnection

This is enough functionality to write a compelling application, but it doesn’t represent the whole range of Kinect sensor capabilities. In this article I will show you, step by step, how to extend the WebserverBasics-WPF sample (see C# code in CodePlex or documentation in MSDN), available from the Kinect Toolkit Browser, so that web applications can respond to speech commands, with the active speech grammar configurable by the web client.

A solution containing the full, final sample code is available on CodePlex. To compile this sample you will also need Microsoft.Samples.Kinect.Webserver (available via CodePlex and Toolkit Browser) and Microsoft.Kinect.Toolkit components (available via Toolkit Browser).

Getting Started

To follow along step-by-step:

- If you haven’t done so already, install the Kinect for Windows v1.8 SDK and Toolkit

- Launch the Kinect Toolkit Browser

- Install the WebserverBasics-WPF sample in a local directory

- Open the WebserverBasics-WPF.sln solution in Visual Studio

- Go to line 136 in the MainWindow.xaml.cs file

You should see the following TODO comment which describes exactly how we’re going to expose speech recognition functionality:

    //// TODO: Optionally add factories here for custom handlers:
    //// this.webserver.SensorStreamHandlerFactories.Add(new MyCustomSensorStreamHandlerFactory());
    //// Your custom factory would implement ISensorStreamHandlerFactory, in which the
    //// CreateHandler method would return a class derived from SensorStreamHandlerBase
    //// which overrides one or more of its virtual methods.

We will replace this comment with the functionality described below.

So, What Functionality Are We Implementing?

More specifically, on the server side we will:

- Create a speech recognition engine

- Bind the engine to a Kinect sensor’s audio stream whenever a sensor is connected or disconnected

- Allow a web client to specify the speech grammar to be recognized

- Forward speech recognition events generated by the engine to the web client

- Register a factory for the speech stream handler with the Kinect webserver

This will be accomplished by creating a class called SpeechStreamHandler, derived from Microsoft.Samples.Kinect.Webserver.Sensor.SensorStreamHandlerBase. SensorStreamHandlerBase is an implementation of ISensorStreamHandler that frees us from writing boilerplate code. ISensorStreamHandler is an abstraction that gets notified whenever a Kinect sensor is connected or disconnected, when color, depth and skeleton frames become available, and when web clients request to view or update configuration values. In response, our speech stream handler will send event messages to web clients.

On the web client side we will:

- Configure speech recognition stream (enable and specify the speech grammar to be recognized)

- Modify the web UI in response to recognized speech events

All new client-side code is in SamplePage.html

Creating a Speech Recognition Engine

In the constructor for SpeechStreamHandler you’ll see the following code:

    RecognizerInfo ri = GetKinectRecognizer();
    if (ri != null)
    {
        this.speechEngine = new SpeechRecognitionEngine(ri.Id);

        if (this.speechEngine != null)
        {
            // disable speech engine adaptation feature
            this.speechEngine.UpdateRecognizerSetting("AdaptationOn", 0);
            this.speechEngine.UpdateRecognizerSetting("PersistedBackgroundAdaptation", 0);
            this.speechEngine.AudioStateChanged += this.AudioStateChanged;
            this.speechEngine.SpeechRecognitionRejected += this.SpeechRecognitionRejected;
            this.speechEngine.SpeechRecognized += this.SpeechRecognized;
        }
    }

This code snippet will be familiar if you’ve looked at some of our other speech samples, such as SpeechBasics-WPF. Basically, we get the metadata corresponding to the Kinect acoustic model and use it to create a speech engine. (GetKinectRecognizer is hardcoded to use the English-language acoustic model in this sample, but this can be changed by installing additional language packs and modifying GetKinectRecognizer to look for the desired culture name.) We then turn off two settings related to the audio adaptation feature, which makes the speech engine better suited for long-running scenarios, and register to receive events when speech is recognized or rejected, or when the audio state changes (e.g., from silence to someone speaking).

Binding the Speech Recognition Engine to a Kinect Sensor’s Audio Stream

In order to do this, we override SensorStreamHandlerBase’s implementation of OnSensorChanged, so we can find out about sensors connecting and disconnecting.

    public override void OnSensorChanged(KinectSensor newSensor)
    {
        base.OnSensorChanged(newSensor);

        if (this.sensor != null)
        {
            if (this.speechEngine != null)
            {
                this.StopRecognition();
                this.speechEngine.SetInputToNull();
                this.sensor.AudioSource.Stop();
            }
        }

        this.sensor = newSensor;

        if (newSensor != null)
        {
            if (this.speechEngine != null)
            {
                this.speechEngine.SetInputToAudioStream(
                    newSensor.AudioSource.Start(), new SpeechAudioFormatInfo(EncodingFormat.Pcm, 16000, 16, 1, 32000, 2, null));
                this.StartRecognition(this.grammar);
            }
        }
    }

The main thing we need to do here is start the AudioSource of the newly connected Kinect sensor in order to get an audio stream that we can hook up as the input to the speech engine. We also need to specify the format of the audio stream, which is a single-channel, 16-bit-per-sample Pulse Code Modulation (PCM) stream, sampled at 16 kHz.
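The two remaining numeric arguments passed to SpeechAudioFormatInfo (32000 average bytes per second and a block alignment of 2) follow directly from the sample rate, bit depth and channel count. As a quick sanity check, the arithmetic can be sketched in JavaScript. The pcmFormat helper below is a name made up for this illustration and is not part of any Kinect or speech API.

```javascript
// Illustrative helper (hypothetical, not part of any Kinect API): derive the
// dependent PCM format values from sample rate, bit depth and channel count.
function pcmFormat(samplesPerSecond, bitsPerSample, channelCount) {
    var bytesPerSample = bitsPerSample / 8;

    // One "block" holds one sample for every channel.
    var blockAlign = bytesPerSample * channelCount;

    return {
        samplesPerSecond: samplesPerSecond,
        bitsPerSample: bitsPerSample,
        channelCount: channelCount,
        blockAlign: blockAlign,
        averageBytesPerSecond: samplesPerSecond * blockAlign
    };
}

// The format used for the Kinect audio stream in this article.
var kinectAudio = pcmFormat(16000, 16, 1);
console.log(kinectAudio.averageBytesPerSecond, kinectAudio.blockAlign);
```

If you ever change one of the first three values, the last two have to change with it, otherwise the speech engine is told about a format that does not match the bytes it receives.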

Allow Web Clients to Specify Speech Grammar

We will let clients send us the whole speech grammar that they want recognized, as XML that conforms to the W3C Speech Recognition Grammar Specification format version 1.0. To do this, we will expose a configuration property called “grammarXml”.

Let’s backtrack a little bit because earlier we glossed over the bit of code in the SpeechStreamHandler constructor where we register the handlers for getting and setting stream configuration properties:

this.AddStreamConfiguration(SpeechEventCategory, new StreamConfiguration(this.GetProperties, this.SetProperty));

Now, in the SetProperty method, we call the LoadGrammarXml method whenever a client sets the “grammarXml” property:

    case GrammarXmlPropertyName:
        this.LoadGrammarXml((string)propertyValue);
        break;

And in the LoadGrammarXml method we do the real work of updating the speech grammar:

    private void LoadGrammarXml(string grammarXml)
    {
        this.StopRecognition();

        if (!string.IsNullOrEmpty(grammarXml))
        {
            using (var memoryStream = new MemoryStream(Encoding.UTF8.GetBytes(grammarXml)))
            {
                Grammar newGrammar;
                try
                {
                    newGrammar = new Grammar(memoryStream);
                }
                catch (ArgumentException e)
                {
                    throw new InvalidOperationException("Requested grammar might not contain a root rule", e);
                }
                catch (FormatException e)
                {
                    throw new InvalidOperationException("Requested grammar was specified with an invalid format", e);
                }

                this.StartRecognition(newGrammar);
            }
        }
    }

We first stop speech recognition because we don’t yet know whether the specified grammar is going to be valid, and then we try to create a new Microsoft.Speech.Recognition.Grammar object from the specified property value. If the property value does not represent a valid grammar, the Grammar constructor throws and we rethrow the error as an InvalidOperationException, leaving recognition stopped. If the value is null or empty, recognition simply stays stopped. Otherwise, we call the StartRecognition method, which loads the grammar into the speech engine (provided a sensor is connected), remembers the grammar so it can be reloaded when the sensor changes, and tells the speech engine to start recognizing, and keep recognizing, speech phrases until we explicitly tell it to stop.

    private void StartRecognition(Grammar g)
    {
        if ((this.sensor != null) && (g != null))
        {
            this.speechEngine.LoadGrammar(g);
            this.speechEngine.RecognizeAsync(RecognizeMode.Multiple);
        }

        this.grammar = g;
    }

Send Speech Recognition Events to Web Client

When we created the speech recognition engine, we registered for three events: AudioStateChanged, SpeechRecognized and SpeechRecognitionRejected. Whenever any of these events happens, we just want to forward the event to the web client. Since the code ends up being very similar for all three, we will focus on the SpeechRecognized event handler:

    private async void SpeechRecognized(object sender, SpeechRecognizedEventArgs args)
    {
        var message = new RecognizedSpeechMessage(args);
        await this.ownerContext.SendEventMessageAsync(message);
    }

To send messages to web clients we use functionality exposed by ownerContext, an instance of the SensorStreamHandlerContext class that was passed to us in the constructor. The messages are sent to clients over a web socket channel and fall into one of two kinds:

- Stream messages: Messages that are generated continuously, at a predictable rate (e.g.: 30 skeleton stream frames are generated every second), where the data from each message replaces the data from the previous message. If we drop one of these messages every so often there is no major consequence because another will arrive shortly thereafter with more up-to-date data, so the framework might decide to drop one of these messages if it detects a bottleneck in the web socket channel.

- Event messages: Messages that are generated sporadically, at an unpredictable rate, where each event represents an isolated incident. As such, it is not desirable to drop any message of this kind.

Given the nature of speech recognition, we chose to communicate with clients using event messages. Specifically, we created the RecognizedSpeechMessage class, which is a subclass of EventMessage that serves as a representation of SpeechRecognizedEventArgs which can be easily serialized as JSON and follows the JavaScript naming conventions.
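To make the difference between the two kinds of messages concrete, here is a toy model of a send queue that applies the policy described above. It is only an illustration under our own assumptions: OutgoingQueue and its methods are hypothetical names, and this is not how the Kinect webserver is actually implemented.

```javascript
// Toy model of the drop policy described above. Hypothetical code: it is
// NOT the Kinect webserver's implementation.
function OutgoingQueue() {
    this.pending = [];
}

// Stream messages: a newer frame of the same stream overwrites a pending,
// not-yet-sent frame, so a slow channel never accumulates stale frames.
OutgoingQueue.prototype.enqueueStream = function (streamName, data) {
    for (var i = 0; i < this.pending.length; i++) {
        var message = this.pending[i];
        if (message.kind === "stream" && message.name === streamName) {
            message.data = data;
            return;
        }
    }
    this.pending.push({ kind: "stream", name: streamName, data: data });
};

// Event messages: always appended, never replaced or dropped.
OutgoingQueue.prototype.enqueueEvent = function (category, data) {
    this.pending.push({ kind: "event", name: category, data: data });
};

// Called when the channel is ready to send; returns the messages in order.
OutgoingQueue.prototype.drain = function () {
    var batch = this.pending;
    this.pending = [];
    return batch;
};

var queue = new OutgoingQueue();
queue.enqueueStream("skeleton", { frame: 1 });
queue.enqueueEvent("speech", { text: "Show" });
queue.enqueueStream("skeleton", { frame: 2 }); // overwrites frame 1
queue.enqueueEvent("speech", { text: "Hide" });
console.log(queue.drain());
```

In this model the client would receive only the latest skeleton frame but both speech events, which is the behavior we want for recognized phrases.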

You might have noticed the usage of the “async” and “await” keywords in this snippet. They are described in much more detail in MSDN but, in summary, they enable an asynchronous programming model so that long-running operations don’t block thread execution while not necessarily using more than one thread. The Kinect webserver uses a single thread to schedule tasks so the consequence for you is that ISensorStreamHandler implementations don’t need to be thread-safe, but should be aware of potential re-entrancy due to asynchronous behavior.
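JavaScript happens to have the same combination of a single thread and async/await, so it is a convenient place to see what re-entrancy means in practice. The sketch below is purely illustrative: createHandler, unsafeNotify, safeNotify and recordingSender are hypothetical names and do not correspond to anything in the Kinect webserver. It shows how a second call can observe stale state while the first call is suspended at an await, and one way to serialize calls if that matters.

```javascript
// Hypothetical handler: all names here are illustrative only.
// `send` stands in for any asynchronous operation that returns a promise.
function createHandler(send) {
    var lastId = 0;
    var tail = Promise.resolve();

    // Re-entrant: lastId is read before the await and written after it,
    // so a second call that starts in between sees the stale value.
    async function unsafeNotify(text) {
        var id = lastId + 1;
        await send({ id: id, text: text });
        lastId = id;
    }

    // Serialized: each call starts only after the previous one has finished.
    function safeNotify(text) {
        var run = tail.then(function () { return unsafeNotify(text); });
        tail = run.catch(function () {}); // keep the chain alive after errors
        return run;
    }

    return { unsafeNotify: unsafeNotify, safeNotify: safeNotify };
}

// Fake sender that records each message and completes asynchronously.
function recordingSender(log) {
    return function (message) {
        log.push(message);
        return Promise.resolve();
    };
}
```

Calling unsafeNotify twice without waiting sends two messages with the same id, while two safeNotify calls send ids 1 and 2. No locks are involved in either case, because only one piece of code ever runs at a time. The only hazard is state that changes across an await.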

Registering a Speech Stream Handler Factory with the Kinect Webserver

The Kinect webserver can be started, stopped and restarted, and each time it is started it creates ISensorStreamHandler instances in a thread dedicated to Kinect data handling, which is the only thread that ever calls these objects. To facilitate this behavior, the server doesn’t allow for direct registration of ISensorStreamHandler instances and instead expects ISensorStreamHandlerFactory instances to be registered in KinectWebserver.SensorStreamHandlerFactories property.

For the purposes of this sample, we declared a private factory class that is exposed as a static singleton instance directly from the SpeechStreamHandler class:

    public class SpeechStreamHandler : SensorStreamHandlerBase, IDisposable
    {
        ...

        static SpeechStreamHandler()
        {
            Factory = new SpeechStreamHandlerFactory();
        }

        ...

        public static ISensorStreamHandlerFactory Factory { get; private set; }

        ...

        private class SpeechStreamHandlerFactory : ISensorStreamHandlerFactory
        {
            public ISensorStreamHandler CreateHandler(SensorStreamHandlerContext context)
            {
                return new SpeechStreamHandler(context);
            }
        }
    }

Finally, back at line 136 of MainWindow.xaml.cs, we replace the TODO comment mentioned above with:

    // Add speech stream handler to the list of available handlers, so web client
    // can configure speech grammar and receive speech events
    this.webserver.SensorStreamHandlerFactories.Add(SpeechStreamHandler.Factory);
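To see why the server asks for factories rather than handler instances, consider the toy model below. It is a sketch under our own assumptions, written in JavaScript for brevity: ToyWebserver, handlerFactories and createHandler are hypothetical names that only mimic the shape of the registration described above, not the real KinectWebserver class.

```javascript
// Toy model with hypothetical names; it mimics only the shape of the
// factory registration, not the real Kinect webserver.
function ToyWebserver() {
    this.handlerFactories = [];
    this.handlers = [];
    this.startCount = 0;
}

// Every start builds a fresh set of handlers from the registered factories.
ToyWebserver.prototype.start = function () {
    this.startCount += 1;
    var context = { startNumber: this.startCount };
    this.handlers = this.handlerFactories.map(function (factory) {
        return factory.createHandler(context);
    });
};

// Stopping discards the handlers; the factories stay registered.
ToyWebserver.prototype.stop = function () {
    this.handlers = [];
};

var speechHandlerFactory = {
    createHandler: function (context) {
        return { name: "speech", startNumber: context.startNumber };
    }
};

var server = new ToyWebserver();
server.handlerFactories.push(speechHandlerFactory);

server.start();
var firstHandler = server.handlers[0];
server.stop();
server.start();
var secondHandler = server.handlers[0];

console.log(firstHandler === secondHandler);
```

The single registered factory outlives every restart, while each start gets its own handler object. That is what lets the real server create handlers on the thread that will use them.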

Configure Speech Recognition Stream in Web Client

The sample web client distributed with WebserverBasics-WPF already configures a couple of other streams in the function called updateUserState in SamplePage.html, so we will add the following code to this function:

    var speechGrammar = '\
    <grammar version="1.0" xml:lang="en-US" tag-format="semantics/1.0-literals" root="DefaultRule" xmlns="http://www.w3.org/2001/06/grammar">\
        <rule id="DefaultRule" scope="public">\
            <one-of>\
                <item>\
                    <tag>SHOW</tag>\
                    <one-of><item>Show Panel</item><item>Show</item></one-of>\
                </item>\
                <item>\
                    <tag>HIDE</tag>\
                    <one-of><item>Hide Panel</item><item>Hide</item></one-of>\
                </item>\
            </one-of>\
        </rule>\
    </grammar>';

    immediateConfig["speech"] = { "enabled": true, "grammarXml": speechGrammar };

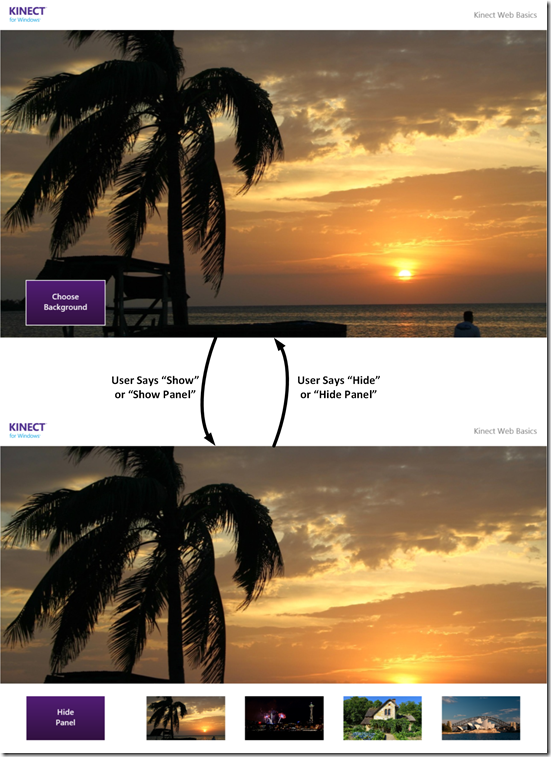

This code enables the speech stream and specifies a grammar that

- triggers a recognition event with “SHOW” as semantic value whenever a user utters the phrases “Show” or “Show Panel”

- triggers a recognition event with “HIDE” as semantic value whenever a user utters the phrases “Hide” or “Hide Panel”
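If you plan to add more commands, writing the XML by hand gets tedious. One option is to generate the grammar string from a plain object that maps each semantic value to its phrases. The helper below is a sketch and not part of the sample: buildSpeechGrammar and escapeXml are names we made up, and the output assumes the same single-rule layout as the grammar above.

```javascript
// Hypothetical helpers (not part of the sample): build an SRGS 1.0 grammar
// string from a map of semantic value -> array of phrases.
function escapeXml(text) {
    return String(text)
        .replace(/&/g, "&amp;")
        .replace(/</g, "&lt;")
        .replace(/>/g, "&gt;")
        .replace(/"/g, "&quot;");
}

function buildSpeechGrammar(commands) {
    var items = Object.keys(commands).map(function (semanticValue) {
        var phrases = commands[semanticValue].map(function (phrase) {
            return "<item>" + escapeXml(phrase) + "</item>";
        }).join("");

        return "<item><tag>" + escapeXml(semanticValue) + "</tag>" +
            "<one-of>" + phrases + "</one-of></item>";
    }).join("");

    return '<grammar version="1.0" xml:lang="en-US" ' +
        'tag-format="semantics/1.0-literals" root="DefaultRule" ' +
        'xmlns="http://www.w3.org/2001/06/grammar">' +
        '<rule id="DefaultRule" scope="public"><one-of>' +
        items +
        '</one-of></rule></grammar>';
}

var generatedGrammar = buildSpeechGrammar({
    SHOW: ["Show Panel", "Show"],
    HIDE: ["Hide Panel", "Hide"]
});
console.log(generatedGrammar);
```

The resulting string can be assigned to the “grammarXml” property in the same way as the hand-written grammar, for example immediateConfig["speech"] = { "enabled": true, "grammarXml": generatedGrammar }.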

Modify the web UI in response to recognized speech events

The sample web client already registers an event handler function, so we just need to update it to respond to speech events in addition to user state events:

    function onSpeechRecognized(recognizedArgs) {
        if (recognizedArgs.confidence > 0.7) {
            switch (recognizedArgs.semantics.value) {
                case "HIDE":
                    setChoosePanelVisibility(false);
                    break;
                case "SHOW":
                    setChoosePanelVisibility(true);
                    break;
            }
        }
    }

    ...

    sensor.addEventHandler(function (event) {
        switch (event.category) {
            ...
            case "speech":
                switch (event.eventType) {
                    case "recognized":
                        onSpeechRecognized(event.recognized);
                        break;
                }
                break;
        }
    });
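As the number of commands grows, the nested switch statements can be replaced by a lookup table. The sketch below is one possible arrangement, not part of the sample. The event fields it reads (category, eventType, recognized.confidence and recognized.semantics.value) are the ones used by the handler above, while createSpeechDispatcher is a hypothetical name and setChoosePanelVisibility is stubbed so the snippet can run without a sensor or the sample page.

```javascript
// Sketch: a command table in place of the nested switch statements.
// createSpeechDispatcher is a hypothetical helper, not part of the sample.
function createSpeechDispatcher(commands, minConfidence) {
    return function (event) {
        if (event.category !== "speech" || event.eventType !== "recognized") {
            return false;
        }

        var args = event.recognized;
        if (!args || !(args.confidence > minConfidence)) {
            return false;
        }

        var value = args.semantics && args.semantics.value;
        if (!Object.prototype.hasOwnProperty.call(commands, value)) {
            return false;
        }

        commands[value]();
        return true;
    };
}

// Stand-in for the sample page's function, so this runs without a sensor.
var panelVisible = true;
function setChoosePanelVisibility(visible) {
    panelVisible = visible;
}

var dispatchSpeech = createSpeechDispatcher({
    SHOW: function () { setChoosePanelVisibility(true); },
    HIDE: function () { setChoosePanelVisibility(false); }
}, 0.7);

// Simulated event with the same shape the handler above expects.
dispatchSpeech({
    category: "speech",
    eventType: "recognized",
    recognized: { confidence: 0.9, semantics: { value: "HIDE" } }
});
console.log(panelVisible);
```

In the real page you would drop the stub and pass the dispatcher to sensor.addEventHandler alongside the existing handling for other event categories. Feeding it hand-made events like the one above is also a simple way to test UI reactions without speaking to the sensor.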

Party Time!

At this point you can rebuild the updated solution and run it to see the server UI. From this UI you can click on the link that reads “Open sample page in default browser” and play with the sample UI. It will look the same as before the code changes, but will respond to the speech phrases “Show”, “Show Panel”, “Hide” and “Hide Panel”. Now try changing the grammar to include more phrases and update the UI in different ways in response to speech events.

Happy coding!

Additional Resources

- Kinect for Windows Programming Guide for Web Applications

- Kinect for Windows Sample Description for WebserverBasics-WPF

- Kinect for Windows JavaScript API Reference

- Microsoft Speech Platform SDK 11 Documentation

- HTML5 Specification

- WebSocket API Reference