Updated SDK, with HTML5, Kinect Fusion improvements, and more

I am pleased to announce that today we released the Kinect for Windows software development kit (SDK) 1.8. This is the fourth update to the SDK since we first released it commercially a year and a half ago. Since then, numerous companies worldwide have adopted Kinect for Windows, and our SDK has been downloaded more than 700,000 times.

We build each version of the SDK with our customers in mind. We listen to what the developer community and business leaders tell us they want, and we travel around the globe to see what these dedicated teams do, how they do it, and what they most need from our software development kit.

The new background removal API is useful for advertising, augmented reality gaming, training and simulation, and more.

Kinect for Windows SDK 1.8 includes some key features and samples that the community has been asking for, including:

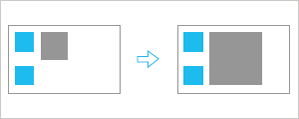

- New background removal. An API removes the background behind the active user so that it can be replaced with an artificial background. This green-screening effect was one of the top requests we’ve heard in recent months. It is especially useful for advertising, augmented reality gaming, training and simulation, and other immersive experiences that place the user in a different virtual environment.

- Realistic color capture with Kinect Fusion. A new Kinect Fusion API captures the color of the scene along with its depth information, so it can record an object’s color as well as its three-dimensional (3D) model. The API also produces a texture map for the mesh created from the scan. The result is a full-fidelity 3D model of the scan, including color, which can be used for full-color 3D printing or to create accurate 3D assets for games, CAD, and other applications.

- Improved tracking robustness with Kinect Fusion. An improved tracking algorithm makes it easier to scan a scene. With this update, Kinect Fusion is better able to maintain its lock on the scene as the camera moves, yielding more reliable and consistent scans.

- HTML interaction sample. This sample demonstrates how to implement Kinect-enabled buttons and simple user engagement, and how to use a background removal stream in HTML5. It allows developers to use HTML5 and JavaScript to build Kinect-enabled user interfaces, which was not previously possible. This makes it easier for developers to work in whichever programming languages they prefer and to integrate Kinect for Windows into their existing solutions.

- Multiple-sensor Kinect Fusion sample. This sample shows developers how to use two sensors simultaneously to scan a person or object from both sides, making it possible to construct a 3D model without having to move either the sensor or the object! It demonstrates how to calibrate two Kinect for Windows sensors relative to each other and how to use the Kinect Fusion APIs with multiple depth snapshots. It is ideal for retail experiences and other public kiosks that do not have an attendant available to scan by hand.

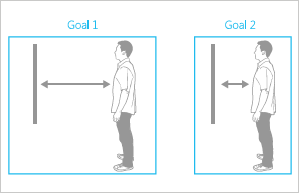

- Adaptive UI sample. This sample demonstrates how to build an application that adapts itself to the distance between the user and the screen, from gesturing at a distance to touching a touchscreen. The algorithm in this sample uses the physical dimensions and positions of the screen and sensor to determine the best ergonomic position on the screen for touch controls, as well as how the UI can adapt as the user approaches the screen or moves farther away from it. As a result, the touch interface and visual display adapt to the user’s position and height, which lets users interact comfortably with large touchscreen displays. The display can also be adapted for more than one user.
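At its core, the green-screening effect of the new background removal feature is a compositing step: pixels that belong to the user are kept, and every other pixel is taken from the replacement scene. The Python sketch below illustrates only that final step and does not call the Kinect for Windows SDK. It assumes, hypothetically, that you already have a color frame, a per-pixel opacity (alpha) map for the user with values from 0 to 255, and a same-sized replacement background, all stored as plain nested lists. The function name `composite_over` is illustrative, not part of any Kinect API.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each channel 0-255
Image = List[List[Pixel]]      # rows of pixels
AlphaMap = List[List[int]]     # hypothetical per-pixel user opacity, 0-255


def composite_over(foreground: Image, alpha: AlphaMap, background: Image) -> Image:
    """Blend the user (foreground) over a replacement background.

    alpha == 255 keeps the foreground pixel, alpha == 0 shows the
    background, and values in between mix the two (useful at soft edges).
    """
    if not (len(foreground) == len(alpha) == len(background)):
        raise ValueError("frames must have the same height")
    result: Image = []
    for fg_row, a_row, bg_row in zip(foreground, alpha, background):
        if not (len(fg_row) == len(a_row) == len(bg_row)):
            raise ValueError("frames must have the same width")
        out_row: List[Pixel] = []
        for fg, a, bg in zip(fg_row, a_row, bg_row):
            # Integer "over" blend per channel, rounded to the nearest value.
            out_row.append(tuple((a * f + (255 - a) * b + 127) // 255
                                 for f, b in zip(fg, bg)))
        result.append(out_row)
    return result


# Usage: a 1x2 frame where the left pixel is the user and the right is not.
frame = [[(200, 50, 50), (10, 10, 10)]]
user_alpha = [[255, 0]]
studio = [[(0, 0, 255), (0, 0, 255)]]
print(composite_over(frame, user_alpha, studio))
```

Blending with a fractional alpha, rather than a hard on/off mask, softens the edges around the user’s silhouette; when the alpha map contains only 0 and 255, the function reduces to a simple cut-out.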

We also have updated our Human Interface Guidelines (HIG) with guidance to complement the new Adaptive UI sample, including the following:

- Design a transition that reveals or hides additional information without obscuring the anchor points in the overall UI.

- Design UI where users can accomplish all tasks for each goal within a single range.
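One way to apply the two guidelines above is for an application to track which distance range the user is in and to change its layout only when the user crosses a range boundary. The Python sketch below illustrates that idea under stated assumptions. The three range names, the boundary distances in metres, and the hysteresis margin (which keeps the UI from flickering when a user stands right at a boundary) are all hypothetical values chosen for illustration. They are not taken from the Adaptive UI sample, which also accounts for the physical dimensions and positions of the screen and sensor.

```python
from enum import Enum


class Range(Enum):
    TOUCH = "touch"  # within arm's reach: touch controls
    NEAR = "near"    # a few steps back: large targets, gestures
    FAR = "far"      # across the room: overview or attract content


# Hypothetical boundaries (metres from the screen) and hysteresis margin.
TOUCH_MAX_M = 0.8
NEAR_MAX_M = 2.0
MARGIN_M = 0.15

# Distance interval (lower, upper] covered by each range.
BOUNDS = {
    Range.TOUCH: (float("-inf"), TOUCH_MAX_M),
    Range.NEAR: (TOUCH_MAX_M, NEAR_MAX_M),
    Range.FAR: (NEAR_MAX_M, float("inf")),
}


def classify(distance_m: float) -> Range:
    """Map a raw user-to-screen distance to a range, with no hysteresis."""
    if distance_m <= TOUCH_MAX_M:
        return Range.TOUCH
    if distance_m <= NEAR_MAX_M:
        return Range.NEAR
    return Range.FAR


def next_range(current: Range, distance_m: float) -> Range:
    """Stay in the current range until the user is MARGIN_M past its edges."""
    lower, upper = BOUNDS[current]
    if lower - MARGIN_M < distance_m <= upper + MARGIN_M:
        return current
    return classify(distance_m)


# Usage: a user walking toward the screen from across the room.
state = Range.FAR
for d in (3.0, 1.9, 1.7, 0.75, 0.6):
    state = next_range(state, d)
    print(d, state.value)
```

Because every task for a goal lives within one range, the UI only has to animate a transition at these boundaries, and the margin keeps a user who hovers near a boundary from triggering repeated transitions.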

My team and I believe that communicating naturally with computers means being able to gesture and speak, just as you do when communicating with people. We believe this is important to the evolution of computing, and we are committed to bringing this future sooner by giving our customers the tools they need to build truly innovative solutions. Many exciting applications are being created with Kinect for Windows, and we hope these new features will make those applications better and easier to build. Keep up the great work, and keep us posted!

Bob Heddle, Director

Kinect for Windows

Key links

- Download Kinect for Windows SDK 1.8

- Download Kinect for Windows Toolkit 1.8

- Download Kinect for Windows Runtime 1.8

- Find out more about Kinect for Windows

- See what others are doing with Kinect for Windows

- Read what people are saying about Kinect for Windows

- Tell us about your solutions on Facebook and Twitter

- Kinect for Windows Human Interface Guidelines (PDF, 14.4 MB)

- FAQ