Joshua Blake on Kinect and the Natural User Interface Revolution (Part 2)

The following blog post was guest authored by K4W MVP, Joshua Blake. Josh is the Technical Director of the InfoStrat Advanced Technology Group in Washington, D.C., where he and his team work on cutting-edge Kinect and NUI projects for their clients. You can find him on Twitter @joshblake or at his blog, https://nui.joshland.org .

Josh recently recorded several videos for our Kinect for Windows Developer Center. This is the second of three posts he will be contributing this month to the blog.

In part 1, I shared videos covering the core natural user interface concepts and a sample application that I use to control presentations called Kinect PowerPoint Control. In this post, I’m going to share videos of two more of my sample applications, one of which is brand new and has never been seen before publicly!

When I present at conferences or workshops about Kinect, I usually demonstrate several sample applications that I’ve developed. These demos enable me to illustrate how various NUI design scenarios and challenges are addressed by features of the Kinect. This helps the audience see the Kinect in action and gets them thinking about the important design concepts used in NUI. (See the Introduction to Natural User Interfaces and Kinect video in part 1 for more on NUI design concepts.)

Below, you will find overviews and videos of two of my open source sample applications: Kinect Weather Map and Face Fusion. I use Kinect Weather Map in most developer presentations and will be using the new Face Fusion application for future presentations.

I must point out that these are still samples - they are perhaps 80% solutions to the problems they approach and lack the polish (and complexity!) of a production system. This means they still have rough edges in places, but they are also easier for a developer to look through and learn from.

Kinect Weather Map

This application lets you play the role of a broadcast meteorologist and puts your image in front of a live, animated weather map. Unlike a broadcast meteorologist, you won’t need a green screen or any special background due to the magic of the Kinect! The application demonstrates background removal, custom gesture recognition, and a gesture design that is appropriate to this particular scenario. This project source code is available under an open source license at https://kinectweather.codeplex.com/.

Here are three videos covering different aspects of the Kinect Weather Map sample application:

Community Sample: Kinect Weather Map – Design (1:45)

Community Sample: Kinect Weather Map – Code Walkthrough – Gestures (2:40)

Community Sample: Kinect Weather Map – Code Walkthrough – Background Removal (5:40)

I made Kinect Weather Map a while ago, but it still works great for presentations and is a good reference for new developers for getting started with real-time image manipulation and background removal. This next application, though, is brand new and I have not shown it publicly until today!

Face Fusion

The Kinect for Windows SDK recently added the much-awaited Kinect Fusion feature. Kinect Fusion lets you integrate data across multiple frames to create a 3D model, which can be exported to a 3D editing program or used in a 3D printer. As a side effect, Kinect Fusion also tracks the position of the Kinect relative to the reconstruction volume, the 3D box in the real world that is being scanned.

I wanted to try out Kinect Fusion so I was thinking about what might make an interesting application. Most of the Kinect Fusion demos so far have been variations of scanning a room or small area by moving the Kinect around with your hands. Some demos scanned a person, but required a second person to move the Kinect around. This made me think – what would it take to scan yourself without needing a second person? Voila! Face Fusion is born.

Face Fusion lets you make a 3D scan of your own head using a fixed Kinect sensor. You don’t need anyone else to help you and you don’t need to move the Kinect at all. All you need to do is turn your head while in view of the sensor. This project source code is available under an open source license at https://facefusion.codeplex.com.

Here are two videos walking through Face Fusion’s design and important parts of the source code. Watch them first to see what the application does, then join me again below for a more detailed discussion of a few technical and user experience design challenges.

Community Sample: Face Fusion – Design (2:34)

Community Sample: Face Fusion – Code Walkthrough (4:29)

I’m pretty satisfied with how Face Fusion ended up in terms of the ease of use and discoverability. In fact, at one point while setting up to record these videos, I took a break but left the application running. While I wasn’t looking, the camera operator snuck over and started using the application himself and successfully scanned his own head. He wasn’t a Kinect developer and didn’t have any training except watching me practice once or twice. This made me happy for two reasons: it was easy to learn, and it worked for someone besides myself!

Face Fusion Challenges

Making the application work well while keeping it easy to use and learn isn’t as easy as it sounds, though. In this section I’m going to share a few of the challenges I came across and how I solved them.

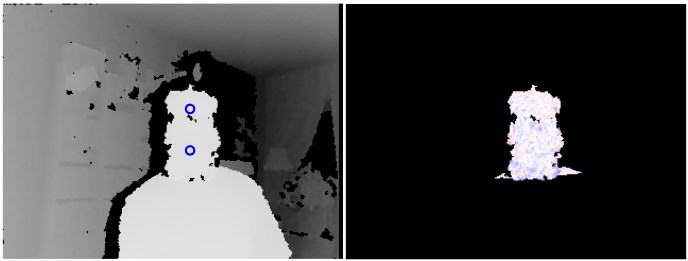

Figure 1: Cropped screenshots from Face Fusion: Left, the depth image showing a user and background, with the head and neck joints highlighted with circles. Right, the Kinect Fusion residual image illustrates which pixels from this depth frame were used in Kinect Fusion.

Scanning just the head

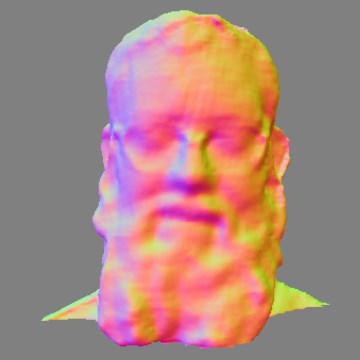

Kinect Fusion tracks the movement of the sensor (or the movement of the reconstruction volume – it is all relative!) by matching up the new data to previously scanned data. The position of the sensor relative to the reconstruction volume is critical because that is how it knows where to add the new depth data in the scan. Redundant data from non-moving objects just reinforces the scan and improves the quality. On the other hand, anything that changes or moves during the scan is slowly dissolved and re-scanned in the new position.

This is great for scanning an entire room when everything is fixed, but doesn’t work if you scan your body and turn your head. Kinect Fusion tends to lock onto your shoulders and torso, or anything else visible around you, while your head just dissolves away and you don’t get fully scanned. The solution here was to reduce the size of the reconstruction volume from “entire room” to “just enough to fit your head” and then center the reconstruction volume on your head using Kinect SDK skeleton tracking.

Kinect Fusion ignores everything outside of the real-world reconstruction volume, even if it is visible in the depth image. This causes Kinect Fusion to only track the relative motion between your head and the sensor. The sensor can now be left in one location and the user can move more freely and naturally because the shoulders and torso are not in the volume.

Figure 2: A cropped screenshot of Face Fusion scanning only the user’s head in real-time. The Face Fusion application uses Kinect Fusion to render the reconstruction volume. Colors represent different surface normal directions.
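The idea of a small, head-centered volume can be illustrated with plain geometry. The sketch below is not the Kinect Fusion API and is not taken from the Face Fusion source; the `Volume` class, the `head_volume` helper, the 0.4 m side length, and the sample coordinates are all assumptions made for illustration. It only shows why data outside a cube centered on the head joint (the torso, the background) no longer takes part in the scan.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Volume:
    """Axis-aligned cube in sensor space, measured in meters (hypothetical type)."""
    center: tuple
    side: float

    def contains(self, point):
        # A point is inside when it is within half a side length on every axis.
        half = self.side / 2.0
        return all(abs(p - c) <= half for p, c in zip(point, self.center))


def head_volume(head_joint, side=0.4):
    """Center a small cube on the tracked head joint (0.4 m is an assumed size)."""
    return Volume(center=tuple(head_joint), side=side)


def points_in_volume(points, volume):
    """Keep only the 3D points that fall inside the volume."""
    return [p for p in points if volume.contains(p)]


# Hypothetical head joint position and depth points, as (x, y, z) in meters.
head = (0.0, 0.3, 1.5)
cloud = [
    (0.05, 0.35, 1.45),  # on the face
    (0.0, -0.1, 1.5),    # torso, 0.4 m below the head joint
    (0.5, 0.3, 2.5),     # background wall
]

volume = head_volume(head)
kept = points_in_volume(cloud, volume)
print(kept)  # only the point on the face remains
```

In a real application the volume would be re-centered from skeleton tracking before the scan starts, so the user does not have to stand in an exact spot.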

Controlling the application

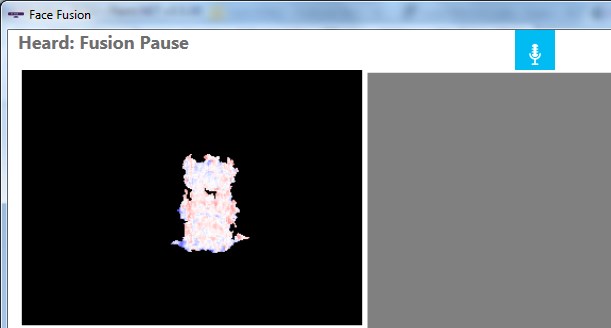

Since the scanning process requires the user to stand (or sit) in view of the sensor rather than at the computer, it is difficult to use mouse or touch to control the scan. This is a perfect scenario for voice control! The user can say “Fusion Start”, “Fusion Pause”, or “Fusion Reset” to control the scan process without needing to look at the screen or be near the computer. (Starting the scan just starts streaming data to Kinect Fusion, while resetting the scan clears the data and resets the reconstruction volume.)
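The three commands above amount to a very small state machine. Here is a minimal sketch of that logic, assuming the speech recognizer hands the application a recognized phrase as a string. The `ScanController` class and its method names are hypothetical, and whether a reset also pauses the scan is not stated here, so this sketch leaves the scanning state unchanged on reset.

```python
class ScanController:
    """Tracks whether depth frames are being streamed into the reconstruction."""

    def __init__(self):
        self.scanning = False
        self.frames_integrated = 0

    def handle_phrase(self, phrase):
        """Apply a recognized phrase; return True if it was a known command."""
        command = phrase.strip().lower()
        if command == "fusion start":
            self.scanning = True
        elif command == "fusion pause":
            self.scanning = False
        elif command == "fusion reset":
            # Clear the accumulated data. Assumption: the scanning state is
            # left as it was, since the post does not say otherwise.
            self.frames_integrated = 0
        else:
            return False
        return True

    def on_depth_frame(self):
        """Count a frame only while the scan is running."""
        if self.scanning:
            self.frames_integrated += 1


controller = ScanController()
controller.on_depth_frame()               # ignored: scan not started yet
controller.handle_phrase("Fusion Start")
controller.on_depth_frame()
controller.on_depth_frame()
controller.handle_phrase("Fusion Pause")
controller.on_depth_frame()               # ignored: scan is paused
print(controller.frames_integrated)       # 2
```

Returning a boolean from `handle_phrase` gives the UI a hook for confirming on screen that a command was heard.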

Voice control was a huge help, but I found that when testing the application, I still tended to try to watch the screen during the scan to see how the scan was doing and if the scan had lost tracking. This affected my ability to turn my head far enough for a good scan. If I ignored the screen and slowly turned all the way around, I would often find the scan had failed early on because I moved too quickly and I wasted all that time for nothing. I realized that in this interaction, we needed to have both control of the scan through voice and feedback on the scan progress and quality through non-visual means. Both channels in the interaction are critical.

Figure 3: A cropped Face Fusion screenshot showing the application affirming that it heard the user’s command, top left. In the top right, the KinectSensorChooserUI control shows the microphone icon so the user knows the application is listening.

Providing feedback to the user

Since I already had voice recognition, one approach might have been to use speech synthesis to let the computer guide the user through the scan. I quickly realized this would be difficult to implement and would be a sub-optimal solution. Speech is discrete but the scan progress is continuous. Mapping the scan progress to speech would be challenging.

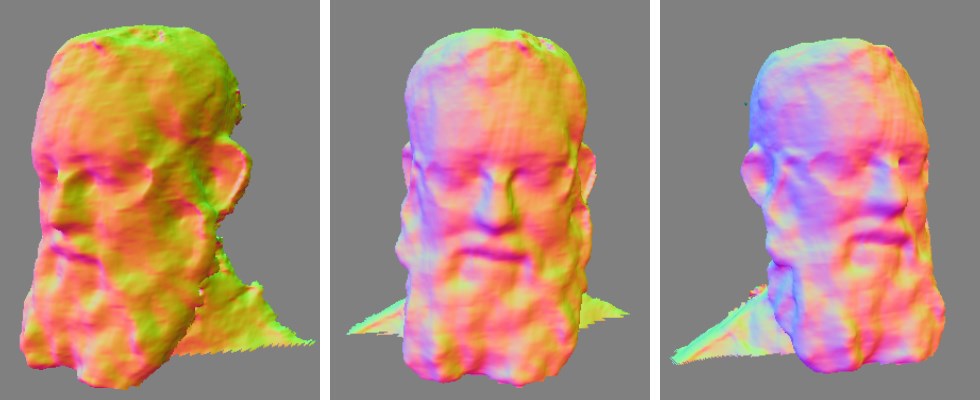

At some point I got the idea of making the computer sing, instead. Maybe the pitch or tonality could provide a continuous audio communication channel. I tried making a sine wave generator using the NAudio open source project and bending the pitch based upon the average error in the Kinect Fusion residual image. After testing a prototype, I figured out that this worked well; it greatly improved my confidence in the scan progress without seeing the screen. Even better, it gave me more feedback than I had before so I knew when to move or hold still, resulting in better scan results!

Face Fusion plays a pleasant triad chord when it has fully integrated the current view of the user's head, and otherwise continuously slides a single note downward, by up to an octave, based upon the average residual error. This continuous feedback lets me decide how far to turn my head and when I should stop to let it catch up. This is easier to understand when you hear it, so I encourage you to watch the Face Fusion videos above. Better yet, download the code and try it yourself!
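The mapping from residual error to sound can be sketched roughly as follows. This is an illustration in Python using only the standard library, not the NAudio-based code in Face Fusion. The base pitch, the error scale, the "locked" threshold, and the choice of a major triad are all assumptions, as are the function names.

```python
import math

BASE_HZ = 440.0      # assumed pitch when the error is near zero
MAX_ERROR = 1.0      # assumed error value that maps to a full octave drop
LOCKED_ERROR = 0.05  # assumed threshold for "fully integrated"


def feedback_frequencies(avg_error):
    """Return the frequencies (Hz) to play for a given average residual error."""
    if avg_error <= LOCKED_ERROR:
        # Major triad (an assumption): root, +4 semitones, +7 semitones.
        return [BASE_HZ * 2 ** (s / 12) for s in (0, 4, 7)]
    # Clamp the error, then bend a single note down by up to one octave.
    fraction = min(avg_error, MAX_ERROR) / MAX_ERROR
    return [BASE_HZ * 2 ** (-fraction)]


def sine_samples(frequencies, duration_s=0.01, sample_rate=44100):
    """Mix equal-amplitude sine waves into a list of samples in [-1, 1]."""
    count = round(duration_s * sample_rate)
    return [
        sum(math.sin(2 * math.pi * f * n / sample_rate) for f in frequencies)
        / len(frequencies)
        for n in range(count)
    ]


for error in (0.0, 0.25, 0.5, 1.0):
    tones = feedback_frequencies(error)
    print(error, [round(f, 1) for f in tones])

samples = sine_samples(feedback_frequencies(0.5))
print(len(samples))
```

Because the pitch is a continuous function of the error, small improvements in tracking are audible immediately, which is the property that discrete spoken prompts lack.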

The end result may be a little silly to listen to at first, but if you try it out you’ll find that you are having an interesting non-verbal conversation with the application through the Kinect sensor – you moving your head in specific ways and it responding with sound. It helps you get the job done without needing a second person.

This continuous audio feedback technique would also be useful for other Kinect Fusion applications where you move the sensor with your hands. It would let you focus on the object being scanned rather than looking away at a display.

Figure 4: A sequence of three cropped Face Fusion screenshots showing the complete scan. When the user pauses the scan by saying “Fusion Pause”, Face Fusion rotates the scan rendering for user review.

Keep watching this blog next week for part three, where I will share one more group of videos that break down the designs of several early-childhood educational applications we created for our client, Kaplan Early Learning Company. Those videos will take you behind the scenes of our design process and show you how we approached the various challenging aspects of designing fun and educational Kinect applications for small children.

-Josh

@joshblake | joshb@infostrat.com | mobile +1 (703) 946-7176 | https://nui.joshland.org