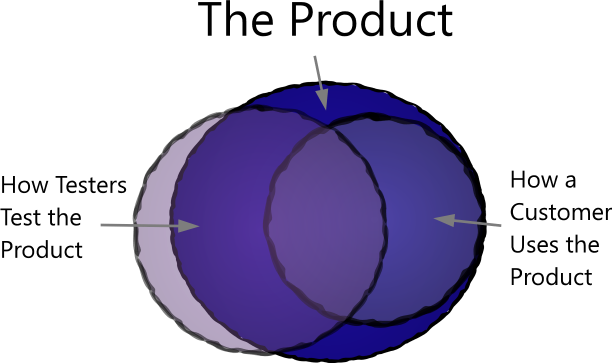

How Testers Test versus How Users Use

With the growing emphasis on SDETs and generic Engineering teams, I've seen a widening gap between how products are tested and how users actually use them. Because it is often very easy to crank out more test cases (especially ones that just iterate on existing tests), testers often lose sight of what is actually important to a customer.

When looking at your automation ask yourself these questions:

- Are my tests focused on the functionality which users care the most about? Figure out what matters the most to users. If users spend 80% of their time in one area of your product, that is where 80% of your testing should be as well.

- How much time (both development-time and execution-time) is invested in edge/corner cases versus actual user scenarios? A finely-tuned unit-test suite is a great thing to have, but it is not a substitute for actually testing the software. It is all too easy to keep adding variations and test cases that offer no additional benefit.

- Does the automation hit the same code paths, under the same environmental conditions, that users do? If your automation relies too heavily on internal hooks at lower levels, it might not be exercising the software the way a user would. Any deviation reduces the effectiveness of the tests.

- Are test cases executing in a "pristine" state? Often tests are run on a clean system, after a fresh install, utilizing perfectly scripted steps over clean data. Users don't work like that. Run tests under a heavily loaded system. Intermix test scenarios to mimic the user moving chaotically through the software. Use real-world data sets. Then on top of all that, throw in some task-switches, network fluctuations, etc.
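
As one way to picture the "intermix test scenarios" suggestion above, here is a minimal Python sketch. It assumes each scenario is just an ordered list of step names; the function name `interleave` and the sample scenarios are hypothetical, not part of any particular test framework. The steps of several scenarios are shuffled together while each scenario's own step order is preserved, and a seed keeps a chaotic run reproducible when it finds a bug.

```python
import random


def interleave(scenarios, seed):
    """Mix the steps of several scenarios into one run order.

    scenarios: dict mapping a scenario name to its ordered list of steps.
    seed: fixed seed so a failing order can be replayed exactly.
    Each scenario's steps keep their relative order; only the
    switching between scenarios is randomized.
    """
    rng = random.Random(seed)
    iterators = {name: iter(steps) for name, steps in scenarios.items()}
    order = []
    while iterators:
        # Pick which scenario the simulated user jumps to next.
        name = rng.choice(sorted(iterators))
        try:
            step = next(iterators[name])
        except StopIteration:
            # This scenario is finished; stop choosing it.
            del iterators[name]
            continue
        order.append((name, step))
    return order


# Hypothetical scenarios, for illustration only.
SCENARIOS = {
    "edit_document": ["open", "type", "save"],
    "export": ["choose_format", "export"],
    "settings": ["open_settings", "toggle_option"],
}

if __name__ == "__main__":
    for scenario, step in interleave(SCENARIOS, seed=1234):
        print(f"{scenario}: {step}")
```

Logging the seed alongside each run is the important design choice here: randomized ordering is only useful if a failure can be reproduced.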

To make customers happy, you need a way to quantify how Customerized your tests are. If the "how testers test the product" circle fully overlaps the "how a customer uses the product" circle, then your tests are highly Customerized, and you will have a better chance at satisfying your customers.
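
One rough way to put a number on that overlap is sketched below. This is an assumption on my part rather than an established metric: it treats "how users use the product" and "how testers test the product" as time shares per feature area and sums the smaller share in each area, so a score of 1.0 means the two circles coincide and 0.0 means they do not touch. The function names and the sample figures are hypothetical.

```python
def normalize(weights):
    """Convert raw time (hours, runs, sessions) into shares that sum to 1."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {area: value / total for area, value in weights.items()}


def customerization_score(user_time, test_time):
    """Overlap between the usage and testing distributions, from 0.0 to 1.0."""
    users = normalize(user_time)
    tests = normalize(test_time)
    areas = set(users) | set(tests)
    # For each area, only the share covered by BOTH circles counts.
    return sum(min(users.get(a, 0.0), tests.get(a, 0.0)) for a in areas)


def coverage_gaps(user_time, test_time):
    """Areas sorted from most under-tested to most over-tested.

    A positive gap means users spend a larger share of their time
    in that area than the tests do.
    """
    users = normalize(user_time)
    tests = normalize(test_time)
    areas = set(users) | set(tests)
    gaps = {a: users.get(a, 0.0) - tests.get(a, 0.0) for a in areas}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


# Made-up numbers, for illustration only.
USER_TIME = {"editor": 80, "export": 15, "settings": 5}
TEST_TIME = {"editor": 30, "export": 20, "settings": 50}

if __name__ == "__main__":
    print(round(customerization_score(USER_TIME, TEST_TIME), 2))
    for area, gap in coverage_gaps(USER_TIME, TEST_TIME):
        print(f"{area}: {gap:+.2f}")
```

With these made-up numbers, users spend 80% of their time in the editor while only 30% of the testing effort goes there, so the editor shows up as the largest gap. The usage shares would have to come from real telemetry or customer research for the score to mean anything.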