Synchronize two tables using SQL Server Integration Services (SSIS)–Part I of II

There are situations where a SQL Server DBA needs to keep two different tables in sync. Usually, the DBA needs to keep a copy of a table in a data warehouse repository that is used for archiving and/or reporting.

SQL Server provides a robust method for keeping data synchronized across databases using Replication, but there are situations when all we need is an online copy of a single table for archiving or reporting purposes, and we would prefer not to tinker with SQL Server replication.

This post is divided into two parts: Part I explains how to update a destination table with the records that are added to a source table, while Part II explains how to replicate any change made to existing records in the source table into the destination. Please take into account that this is just one way of doing this. There are many other possibilities, but the one described here is probably one of the fastest to implement and to modify on demand.

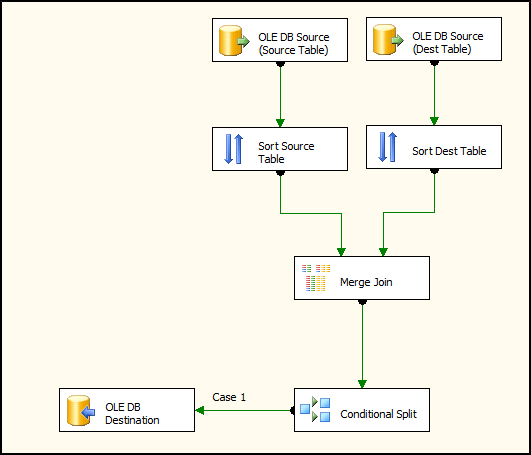

This procedure is based on the following scenario: A “table A” on “database A” is periodically replicated using SSIS into “table B” on “database B”. “Table A” is updated with new records and we need to copy those records into “table B” too. Our final implementation will look like this in SQL Server Business Intelligence Development Studio:

Let’s see how this works:

1. “Source Table” is “table A” in “database A”, while “Dest Table” is the destination “table B” in “database B”. We start by creating two OLE DB connections in the Data Flow component in SSIS, using the source and destination tables as data sources.

2. We need to perform a JOIN operation on the two data sources to find the information we need to copy from one table into the other. For this JOIN to work correctly, the data has to be sorted, as described in the MSDN documentation:

In Integration Services, the Merge and Merge Join transformations require sorted data for their inputs. The input data must be sorted physically, and sort options must be set on the outputs and the output columns in the source or in the upstream transformation. If the sort options indicate that the data is sorted, but the data is not actually sorted, the results of the merge or merge join operation are unpredictable.
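To see why the sort requirement matters, here is a minimal Python sketch of a merge-style left outer join over two key lists. It is not the SSIS implementation, only an illustration of the general technique; the function name `merge_left_join` and the sample keys are made up for this example, and it assumes unique keys on both sides.

```python
def merge_left_join(left_keys, right_keys):
    """Left outer merge join over two key lists.

    Assumes both lists are sorted ascending and contain unique keys.
    Returns (left_key, matching_right_key_or_None) pairs.
    """
    result = []
    j = 0  # cursor into right_keys; it only ever moves forward
    for key in left_keys:
        # Skip right-side keys that are smaller than the current left key.
        while j < len(right_keys) and right_keys[j] < key:
            j += 1
        if j < len(right_keys) and right_keys[j] == key:
            result.append((key, right_keys[j]))
        else:
            result.append((key, None))  # no match on the right side
    return result


# Both inputs sorted: only key 4 is reported as missing on the right.
sorted_result = merge_left_join([1, 2, 3, 4], [1, 2, 3])

# Right input NOT sorted: keys 1 and 2 are wrongly reported as missing.
unsorted_result = merge_left_join([1, 2, 3, 4], [3, 1, 2])
```

Because the cursor on the right input never moves backwards, an unsorted input makes the join miss matches that do exist, which in our scenario would mean inserting records that are already in the destination table.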

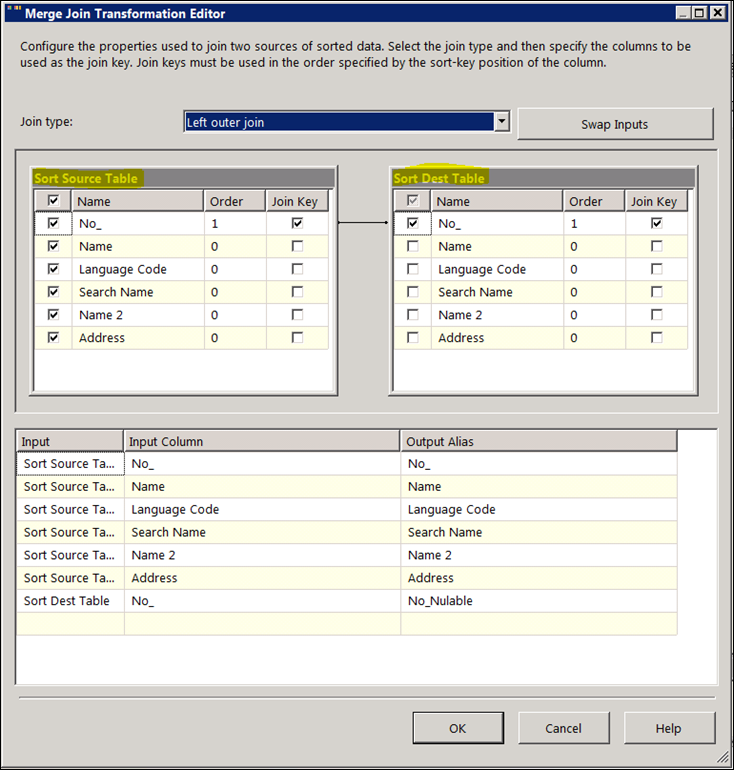

The “Merge Join” operation is where we filter the data that has been added in the source table (the one we need) and the data that has not been added (the one we do not need). In our case the source table (on the left) contains all the columns we want to copy while on the destination table (on the right) contains only the record that corresponds to the Primary Key column “No_”. Here is the description of this task:

Here is the key part of the process: the Left Outer Join retrieves all the records in the source table, but for those that do not exist in the destination table, the join key column “No_” coming from the destination is returned as NULL. This is also described in the product documentation:

To include all products, regardless of whether a review has been written for one, use an ISO left outer join. The following is the query:

    USE AdventureWorks2008R2;
    GO
    SELECT p.Name, pr.ProductReviewID
    FROM Production.Product p
    LEFT OUTER JOIN Production.ProductReview pr
    ON p.ProductID = pr.ProductID

The LEFT OUTER JOIN includes all rows in the Product table in the results, whether or not there is a match on the ProductID column in the ProductReview table. Notice that in the results where there is no matching product review ID for a product, the row contains a null value in the ProductReviewID column.
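Applied to the scenario in this post, the same kind of join can be tried out with a small Python script against an in-memory SQLite database. This is only a sketch of the join logic, not SSIS or SQL Server itself; the table names `source_table` and `dest_table`, the `Description` column, and the sample rows are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE source_table (No_ TEXT PRIMARY KEY, Description TEXT);
    CREATE TABLE dest_table   (No_ TEXT PRIMARY KEY, Description TEXT);
    INSERT INTO source_table VALUES ('A001', 'first'), ('A002', 'second'), ('A003', 'third');
    INSERT INTO dest_table   VALUES ('A001', 'first'), ('A002', 'second');
""")

# All source rows are returned; dest_no is NULL (None in Python)
# for rows that do not exist in the destination yet.
rows = conn.execute("""
    SELECT s.No_, s.Description, d.No_ AS dest_no
    FROM source_table AS s
    LEFT OUTER JOIN dest_table AS d ON s.No_ = d.No_
    ORDER BY s.No_
""").fetchall()

for row in rows:
    print(row)
```

The row for `A003` comes back with `None` in the last position, which is exactly the marker the next step relies on to recognise new records.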

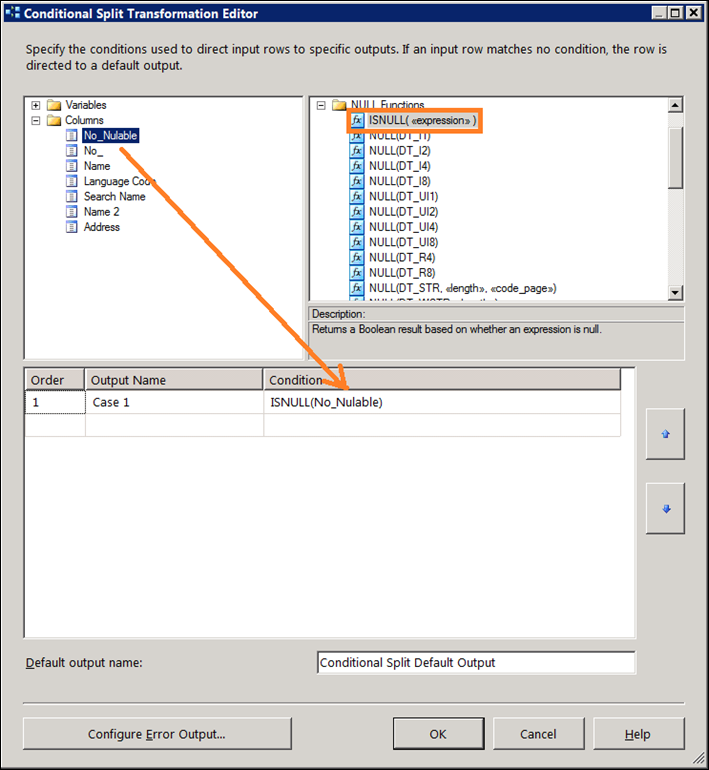

3. Then we have to split the data we need from the one we do not need. For this we use a “Conditional Split” task that takes care of saving the information for those records where the Join Key “No_” was NULL, i.e. saving the information only of the new records. Here is a description of this conditional split task:
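The logic of the split can be sketched in a few lines of Python. In SSIS itself the condition would normally be written with the `ISNULL` expression function on the destination key column; the dictionary key `dest_no` below is a hypothetical alias for that column, and the sample rows are invented for the illustration.

```python
def conditional_split(joined_rows):
    """Route each joined row to one of two outputs.

    Rows whose destination key is None have no match in the
    destination table, so they are the new records to insert.
    """
    new_rows, existing_rows = [], []
    for row in joined_rows:
        if row["dest_no"] is None:
            new_rows.append(row)
        else:
            existing_rows.append(row)
    return new_rows, existing_rows


# Hypothetical output of the Merge Join step.
joined_rows = [
    {"No_": "A001", "Description": "first", "dest_no": "A001"},
    {"No_": "A002", "Description": "second", "dest_no": "A002"},
    {"No_": "A003", "Description": "third", "dest_no": None},
]

new_rows, existing_rows = conditional_split(joined_rows)
```

Only `new_rows` is passed on to the destination; `existing_rows` is simply discarded in Part I.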

4. Finally, we INSERT the output of the Conditional Split task into the destination table, which is the same table we used as the second data source at the very beginning.

In this example the Join task is configured so that you can rerun the package as many times as you want: records in the destination table will not be duplicated, and only the new records from the source table will be inserted.
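The whole flow, and the fact that it can be rerun safely, can be checked with a small Python sketch against an in-memory SQLite database. It collapses the Merge Join, Conditional Split and INSERT steps into a single SQL statement, so it is an equivalent of the logic rather than a copy of the SSIS package; `source_table`, `dest_table`, `Description` and the helper names are assumptions made for the example.

```python
import sqlite3


def count_dest(conn):
    """Number of rows currently in the destination table."""
    return conn.execute("SELECT COUNT(*) FROM dest_table").fetchone()[0]


def sync_new_rows(conn):
    """Copy rows that exist in source_table but not in dest_table.

    Returns how many rows were added by this run.
    """
    before = count_dest(conn)
    conn.execute("""
        INSERT INTO dest_table (No_, Description)
        SELECT s.No_, s.Description
        FROM source_table AS s
        LEFT OUTER JOIN dest_table AS d ON s.No_ = d.No_
        WHERE d.No_ IS NULL
    """)
    conn.commit()
    return count_dest(conn) - before


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE source_table (No_ TEXT PRIMARY KEY, Description TEXT);
    CREATE TABLE dest_table   (No_ TEXT PRIMARY KEY, Description TEXT);
    INSERT INTO source_table VALUES ('A001', 'first'), ('A002', 'second'), ('A003', 'third');
    INSERT INTO dest_table   VALUES ('A001', 'first'), ('A002', 'second');
""")

first_run = sync_new_rows(conn)   # copies the one missing row
second_run = sync_new_rows(conn)  # nothing new, so nothing is copied

conn.execute("INSERT INTO source_table VALUES ('A004', 'fourth')")
third_run = sync_new_rows(conn)   # picks up the row added in between
```

Running the function twice in a row adds nothing the second time, which mirrors the behaviour described above: only records missing from the destination are ever inserted.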