What is Surface?

Well, by now I imagine many folks have heard a little bit about Surface -- Microsoft's latest computing innovation. There's a reason Surface is getting a lot of buzz: it really is groundbreaking! If you haven't seen it yet, you owe it to yourself to check it out. See www.microsoft.com/surface for videos that show Surface in action.

Also, my colleague Jon Box has done a very nice job putting together a list of links with more info and press coverage of the Surface announcement.

But beyond the very cool demo videos, what really is Surface?

Scott Guthrie points out that the UI experience is built completely in .NET with WPF (Windows Presentation Foundation), and mentions a couple of good WPF books if you're interested in learning it yourself. Surface, along with Interknowlogy's 3D Collaborator for CAD drawings and the Northface kiosk, is another example that really shows the power of WPF to create amazing, compelling user experiences! BTW, Scott also points to some very good Popular Mechanics content that includes this video:

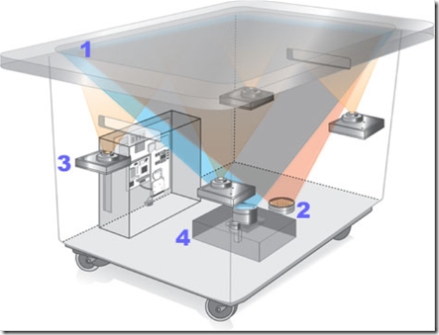

and the descriptive text for this diagram, as well as an article.

Basically, the diagram shows that there are 5 cameras (including near-infrared), a DLP light engine, and a modified Vista PC inside the table. As you would expect from a PC, many networking options are available. It appears that RFID is not deployed in the released prototype, but it certainly could be. So those are some of the key bits, but the bits by themselves don't really tell the whole story.

From my perspective, what really makes Microsoft Surface special is that it pulls together advances in two disparate fields of computer science, machine vision and multitouch computing, in an elegant, useful way. Machine vision is just what it sounds like: machines (computers) that can process visual information, such as recognizing objects and motion. Learn more about machine vision.

Multitouch computing is a method of human-computer interaction that allows one or more users to use multiple touch inputs simultaneously. For example, with a traditional tablet PC, you can only draw one line at a time. With multitouch computing, you can draw many lines at once. This opens the path for touch-based gesture interactions and for "chords" of movement, where several simultaneous touches together carry a meaning. By tying multitouch computing and machine vision together, Microsoft Surface provides a new UI paradigm that is more intuitive, more experiential, more intimate, and in some cases more desirable than pointing and clicking.
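To make the idea of a multitouch gesture a bit more concrete, here's a rough sketch in Python of how two simultaneous touch points could be turned into a "pinch" (zoom) factor. To be clear, this is not Surface's API or how Surface does it; the `Touch` class and the `pinch_scale` function are names I made up purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Touch:
    """One finger contact (hypothetical type, not a real Surface class)."""
    touch_id: int
    x: float
    y: float


def distance(a: Touch, b: Touch) -> float:
    """Straight-line distance between two touch points."""
    return math.hypot(a.x - b.x, a.y - b.y)


def pinch_scale(start: dict, end: dict) -> float:
    """Return how much the gap between two fingers grew or shrank.

    `start` and `end` map touch_id -> Touch for the same two contacts
    at the beginning and end of the gesture. A result above 1.0 means
    the fingers moved apart; below 1.0 means they moved together.
    """
    ids = sorted(set(start) & set(end))
    if len(ids) != 2:
        raise ValueError("a pinch needs exactly two tracked touches")
    d0 = distance(start[ids[0]], start[ids[1]])
    d1 = distance(end[ids[0]], end[ids[1]])
    if d0 == 0:
        raise ValueError("starting touches are at the same point")
    return d1 / d0


# Two fingers start 5 units apart and end 10 units apart.
start = {1: Touch(1, 0.0, 0.0), 2: Touch(2, 3.0, 4.0)}
end = {1: Touch(1, 0.0, 0.0), 2: Touch(2, 6.0, 8.0)}
scale = pinch_scale(start, end)
```

The point is simply that a single-touch device never sees both contacts at once, so a measurement like this (which depends on two fingers at the same moment) only becomes possible with multitouch input.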

One of the guys working on multitouch computing is an NYU researcher named Jeff Han. Phil Bogle has more on Jeff and his company Perceptive Pixel, including a Perceptive Pixel promotional video and a Wikipedia entry on Jeff. Of the videos I've seen, my favorite is this one of Jeff doing a multitouch computing demo in front of a live audience. For a lot more on multitouch computing, check out Bill Buxton's multitouch overview: "Multi-Touch Systems that I Have Known and Loved".

I'll leave the many, many new capabilities and uses of Surface computing to a future post. But by bringing together two of the most exciting topics in computer science, machine vision and multitouch computing, Microsoft Surface opens a new chapter in computational history -- one that is likely to accelerate the blending of the formerly distinct virtual and physical worlds around us.