Agile Performance Engineering

Note: This article is updated at Agile Performance Engineering.

In this post, I’ll share the model we used successfully for years in Microsoft patterns & practices to bake performance into an Agile Life Cycle.

One of the key challenges in building software is how to bake quality into your process. Some teams try to do it all up front. Some try to do it all at the end. Some try to do it all in the middle. Some only do it when something bad happens.

The real key is to do some up front, more in the middle, and some at the end. Anything you do up front is about reducing high risk. So the up-front exploration, testing, and spiking should concentrate on the significant usage scenarios that are high risk or will be used often.

When it comes to baking performance into the life-cycle, teams tend to struggle with the how -- how do you actually do this in the real world? They especially struggle if they are using Agile methodologies. The reality is it’s easy, *if* you know the model. If you don’t know the model, you can be lost in the woods forever. We struggled early on, but found a groove that served us well, and we tuned and improved the approach over time.

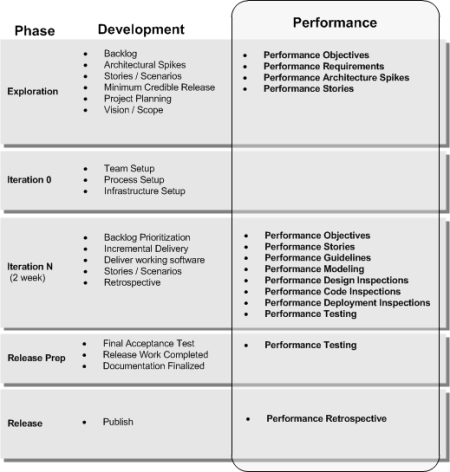

Here is how we did Agile Performance Engineering in patterns & practices:

What's important about the figure is that it shows an example of how you can overlay performance-specific techniques onto an existing life cycle. In this case, we simply overlay some performance activities on top of an Agile software cycle.

Rather than treating performance as a big up-front design effort, doing it all at the end, or following other approaches that don't work, we baked performance into the life cycle. The key here is integrating performance into your iterations.

Key Performance Activities

Here is a summary of the key performance activities and how they play in an agile development cycle:

- Performance Objectives -- This is about getting clarity on your goals, objectives, and constraints so that you effectively prioritize and invest accordingly.

- Performance Spikes -- In Agile, a spike is simply a quick experiment in code for the developer to explore potential solutions. A performance spike is focused on exploring potential performance solutions with the goal of reducing technical risk. During exploration, you can spike on some of the cross-cutting performance concerns for your solution.

- Performance Stories -- In Agile, a story is a brief description of the steps a user takes to perform a goal. A performance story is simply a performance-focused scenario. This might be an existing "user" story to which you apply a performance lens, or it might be a new "system" story that focuses on a performance goal, requirement, or constraint. Identify performance stories during exploration and during your iterations.

- Performance Guidelines -- To help guide the performance practices throughout the project, you can create a distilled set of relevant performance guidelines for the developers. You can fine-tune them and make them more relevant for your particular performance stories.

- Performance Modeling -- Use performance modeling to shape your software design. A performance model is a depiction of potential threats to the performance of your solution, along with vulnerabilities. Think of a threat as a potential negative effect and a vulnerability as a weakness that exposes your solution to the threat or attack. You can threat model at the story level during iterations, or you can threat model at the macro level during exploration.

- Performance Design Inspections -- Similar to a general architecture and design review, this is a focus on the performance design. Performance questions and criteria guide the inspection. The design inspection is focused on higher-end, cross-cutting, and macro-level concerns.

- Performance Code Inspections -- Similar to a general code review, this is a focus on inspecting the code for performance issues. Performance questions and criteria guide your inspection.

- Performance Deployment Inspections -- Similar to a general deployment review, this is a focus on inspecting for performance issues of your deployed solution. Physical deployment is where the rubber meets the road and this is where runtime behaviors might expose performance issues that you didn't catch earlier in your design and code inspections.

The sum of these performance activities is more than the parts. Using a collection of proven, lightweight activities that you can weave into your cycle helps you stack the deck in your favor. This is in direct contrast to relying on one big silver bullet.
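As an illustration of the performance spike activity described above, here is a minimal sketch in Python of a throwaway experiment that asks whether caching is worth pursuing for a repeated lookup. The names `slow_lookup`, `cached_lookup`, and `run_spike`, the simulated latency, and the mix of keys are all assumptions made up for this example; they are not from the patterns & practices guidance.

```python
import time
from functools import lru_cache

# Counts how often the expensive call is really made.
CALLS = {"slow_lookup": 0}


def slow_lookup(key: str) -> str:
    """Hypothetical stand-in for an expensive call, such as a remote lookup."""
    CALLS["slow_lookup"] += 1
    time.sleep(0.001)  # simulated latency (an assumption, not a measurement)
    return key.upper()


@lru_cache(maxsize=128)
def cached_lookup(key: str) -> str:
    """Candidate solution under test: the same lookup behind an in-memory cache."""
    return slow_lookup(key)


def run_spike(keys, lookup):
    """Run one variant; return its results, expensive-call count, and elapsed seconds."""
    CALLS["slow_lookup"] = 0
    start = time.perf_counter()
    results = [lookup(key) for key in keys]
    elapsed = time.perf_counter() - start
    return results, CALLS["slow_lookup"], elapsed


if __name__ == "__main__":
    keys = ["a", "b", "a", "c", "a", "b"] * 50
    cached_lookup.cache_clear()
    for name, lookup in (("uncached", slow_lookup), ("cached", cached_lookup)):
        _, calls, elapsed = run_spike(keys, lookup)
        print(f"{name}: {calls} expensive calls, {elapsed * 1000:.1f} ms")
```

The point of a spike like this is the answer, not the code: it gives you a quick read on whether an approach reduces risk before you commit a story to it.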

Integrating Performance into Iterations

There are two keys to chunking up performance so that you can effectively focus on it during iterations:

- Performance Stories

- Performance Frame

In terms of stories, you should focus on both user and system stories. Stories are a great way to chunk up and deliver incremental value. Each story represents a user or the system performing a useful goal. As such, you can also chunk up your performance work by focusing on the performance concerns of a story.
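One way to make a performance story concrete is to attach a measurable budget to it and check measurements against that budget during the iteration. The sketch below is a minimal example under stated assumptions: the `PerformanceStory` class, the story title, the 200 ms budget, and the sample timings are all hypothetical, and the nearest-rank percentile is just one simple choice of statistic.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceStory:
    """A story with a performance objective attached (hypothetical shape)."""
    title: str
    percentile: float  # for example, 95 means "95th percentile"
    budget_ms: float   # response-time budget for that percentile


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_budget(story, samples_ms):
    """True if the measured percentile is within the story's budget."""
    return percentile(samples_ms, story.percentile) <= story.budget_ms


if __name__ == "__main__":
    # Made-up timings in milliseconds, including one slow outlier.
    samples = [120, 130, 125, 140, 135, 128, 132, 138, 122, 900]
    story = PerformanceStory("Customer searches the catalog", 95, 200)
    print(story.title, "meets budget:", meets_budget(story, samples))
```

Writing the objective next to the story keeps the performance concern the same size as the story itself, so it can be checked within the iteration.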

A performance frame is a lens for performance. It's simply a set of categories or "hot spots" (e.g. Caching, Communication, Concurrency, Coupling/Cohesion, Data Access, Data Structures / Algorithms, Exception Management, Resource Management, State Management). By grouping your performance practices into these buckets, you can more effectively consolidate and leverage your performance know-how during each iteration. For example, one iteration might have stories that involve caching and resource pooling, while another iteration might have stories that involve data and storage strategies.

Together, stories and performance frames help you chunk up performance and bake it into the life cycle, while learning and responding along the way.
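To show how stories and a performance frame can work together, here is a small sketch that tags stories with hot spots and summarizes which hot spots each iteration touches. The category names come from the frame described above; the `HotSpot` enum, the `BACKLOG` entries, and the `hot_spots_by_iteration` helper are hypothetical and exist only for illustration.

```python
from collections import defaultdict
from enum import Enum


class HotSpot(Enum):
    """Categories of the performance frame."""
    CACHING = "Caching"
    COMMUNICATION = "Communication"
    CONCURRENCY = "Concurrency"
    COUPLING_COHESION = "Coupling/Cohesion"
    DATA_ACCESS = "Data Access"
    DATA_STRUCTURES_ALGORITHMS = "Data Structures / Algorithms"
    EXCEPTION_MANAGEMENT = "Exception Management"
    RESOURCE_MANAGEMENT = "Resource Management"
    STATE_MANAGEMENT = "State Management"


# Hypothetical backlog: (iteration, story title, hot spots the story touches).
BACKLOG = [
    (1, "Browse product catalog", {HotSpot.CACHING, HotSpot.RESOURCE_MANAGEMENT}),
    (1, "View product details", {HotSpot.CACHING}),
    (2, "Place an order", {HotSpot.DATA_ACCESS, HotSpot.STATE_MANAGEMENT}),
]


def hot_spots_by_iteration(backlog):
    """Map each iteration to the sorted hot-spot names its stories touch."""
    grouped = defaultdict(set)
    for iteration, _title, hot_spots in backlog:
        grouped[iteration] |= hot_spots
    return {
        iteration: sorted(spot.value for spot in spots)
        for iteration, spots in sorted(grouped.items())
    }


if __name__ == "__main__":
    for iteration, names in hot_spots_by_iteration(BACKLOG).items():
        print(f"Iteration {iteration}: {', '.join(names)}")
```

A summary like this tells you which guidelines and inspection questions to pull out for a given iteration, instead of reviewing every category every time.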

For more information on performance engineering, see patterns & practices Performance Testing Guidance for Web Applications.

Additional Resources

- Performance Modeling

- Managing an Agile Performance Test Cycle

- Types of Performance Testing

- Risks Addressed Through Performance Testing

- Determining Performance Test Objectives

- MSF for Agile Software Development Projects
