Photo Mosaics Part 9: Caching Analysis

When originally drafting the previous post in this series, I’d intended to include a short write-up comparing the performance and time-to-completion of a photo mosaic when using the default in-memory method versus Windows Azure Caching. As I got further into it, though, I realized there’s a spectrum of options here, and that cost, in addition to performance, is something to consider. So, at the last minute, I decided to devote a separate blog post to some of the tradeoffs and observations I made while trying out various options in my Azure Photo Mosaics application.

Methodology

While I’d stop short of calling my testing ‘scientific,’ I did make every attempt to carry out the tests in a consistent fashion and eliminate as many variables in the executions as possible. To abstract the type of caching (ranging from none to in-memory), I added a configuration parameter, named CachingMechanism, to the ImageProcessor Worker Role. The parameter takes one of four values, defined by the following enumeration in Tile.vb:

```vb
Public Enum CachingMechanism
    None = 0
    BlobStorage = 1
    AppFabric = 2
    InRole = 3
End Enum
```

The values are interpreted as follows:

- None: no caching used, every request for a tile image requires pulling the original image from blob storage and re-creating the tile in the requested size.

- BlobStorage: a ‘temporary’ blob container is created to store all tile images in the requested tile size. Once a tile has been generated, subsequent requests for that tile are drawn from the secondary container. The tile thumbnail is generated only once; however, each request for the thumbnail does still require a transaction to blob storage.

- AppFabric: Windows Azure Caching is used to store the tile thumbnails. Whether the local cache is used depends on the cache configuration within the app.config file.

- InRole: tile thumbnail images are stored in memory within each instance of the ImageProcessor Worker Role. This was the original implementation and is bounded by the amount of memory within the VM hosting the role.

To simplify the analysis, the ColorValue of each tile (a 32-bit integer representing the average color of that tile) is calculated just once and then always cached “in-role” within the collection of Tile objects owned by the ImageLibrary.

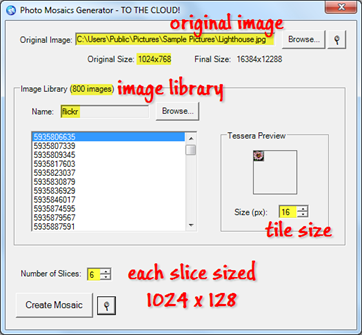

For each request, the same inputs were provided from the client application and are shown in the annotated screen shot to the right:

- Original image: Lighthouse.jpg from the Windows 7 sample images (size: 1024 x 768)

- Image library: 800 images pulled from Flickr’s ‘interestingness’ feed. Images were requested as square thumbnails (default 75 pixels square)

- Tile size: 16 x 16 (yielding output image of size 16384 x 12288)

- Original image divided into six (6) slices to be apportioned among the three ImageProcessor roles (small VMs); each slice is the same size, 1024 x 128.
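The geometry above follows from simple arithmetic: each source pixel becomes one tile, and the source is cut into full-width horizontal strips. The Python sketch below (the function name is hypothetical, not from the application) reproduces those numbers. It also assumes slices are handed out round-robin across the three instances, which matches the pairing observed in these runs (instance 0 took slices 0 and 3, and so on) but is not something a queue-based dispatcher guarantees.

```python
def mosaic_geometry(width, height, tile_size, slices):
    """Output size and per-slice size when the source is cut into full-width strips."""
    if height % slices:
        raise ValueError("image height must divide evenly into the slice count")
    slice_height = height // slices
    return {
        "output": (width * tile_size, height * tile_size),  # one tile per source pixel
        "slice": (width, slice_height),
        "pixels_per_slice": width * slice_height,           # tile lookups per slice
    }

geometry = mosaic_geometry(1024, 768, tile_size=16, slices=6)
print(geometry)

# Hypothetical round-robin assignment of the six slices to three instances.
assignment = {s: f"AzureImageProcessor_IN_{s % 3}" for s in range(6)}
print(assignment[0], assignment[3])
```

The `pixels_per_slice` value (131,072) is also the number of tile lookups each slice performs, which becomes important once the cache starts counting transactions.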

These inputs were submitted four times each for the various CachingMechanism values, including twice for the AppFabric value (Windows Azure Caching), once leveraging local (VM) cache with a time-to-live value of six hours and once without using the local cache.

All image generations were completed sequentially, and each ImageProcessor Worker Role was rebooted prior to running the test series for a new CachingMechanism configuration. This approach was used to yield values for a cold-start (first execution) versus a steady-state configuration (fourth execution).

Performance Results

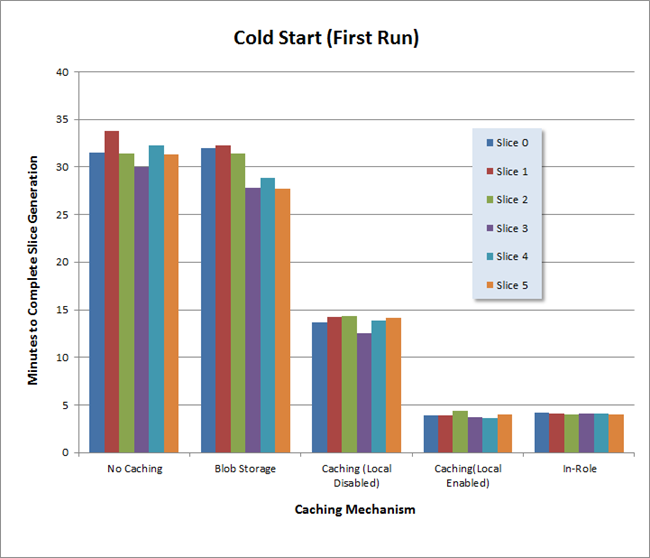

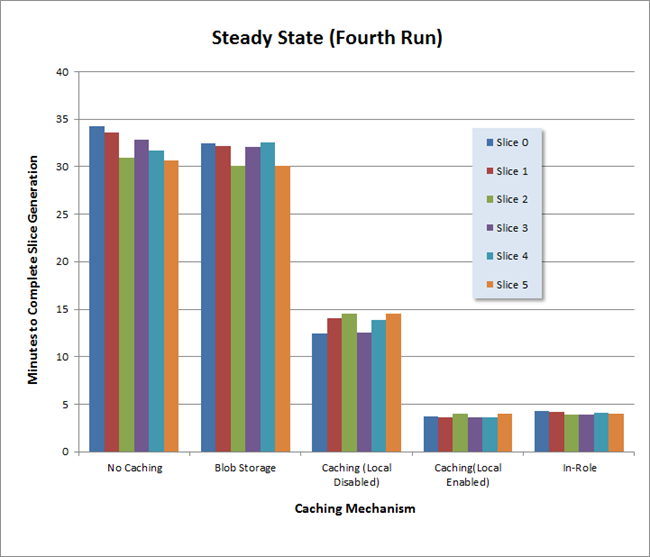

The existing code already records status (in Windows Azure tables) as each slice is completed, so I created two charts displaying that information. The first chart shows the time-to-completion of each slice for the “cold start” execution for each CachingMechanism configuration, and the second chart shows the “steady state” execution.

In each series, the same role VM is shown in the same relative position. For example, the first bar in each series represents the original image slice processed by the role identified by AzureImageProcessor_IN_0, the second by AzureImageProcessor_IN_1, and the third by AzureImageProcessor_IN_2. Since there were six slice requests, each role instance processes two slices of the image, so the fourth bar represents the second slice processed by AzureImageProcessor_IN_0, the fifth bar corresponds to the second slice processed by AzureImageProcessor_IN_1, and the sixth bar represents the second slice processed by AzureImageProcessor_IN_2.

There is, of course, additional processing in the application that is not accounted for by the ImageProcessor Worker Role. The splitting of the original image into slices, the dispatch of tasks to the various queues, and the stitching of the final photo mosaic are the most notable. Those activities are carried out in an identical fashion regardless of the CachingMechanism used, so to simplify the analysis their associated costs (both economic and performance) are largely factored out of the following discussion.

Costs

System at Rest

There are a number of costs for storage and compute that are consistent across the five different scenarios. The costs in the table below occur when the application is completely inactive.

| Service | Usage | Amount | Rate | Cost (monthly) |
|---|---|---|---|---|
| Compute | ClientInterface, JobController, and ImageProcessor roles | 5 small instances | $0.12 per hour | ~$430 |
| Blob storage | Image library (800 images from Flickr) | ~11 MB | $0.14/GB per month | negligible |
| Storage transactions | Worker Roles polling queues for tasks | 4 roles each polling at 10 sec intervals | $0.01 per 10,000 | ~$1.04 (~$0.26 per role) |
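The monthly figures can be reproduced with a few lines of arithmetic. This Python sketch assumes a 30-day month and treats each queue poll as one storage transaction. On those assumptions, polling costs about $0.26 per role, or roughly $1.04 across all four polling roles, and one extra small instance (six instead of five, per the SLA note that follows) adds about $86.40.

```python
HOURS_PER_MONTH = 24 * 30        # assumption: 30-day month
SMALL_INSTANCE_RATE = 0.12       # $ per small instance per hour
TXN_RATE = 0.01 / 10_000         # $ per storage transaction

def compute_cost(instances):
    """Monthly compute charge for always-on small instances."""
    return instances * SMALL_INSTANCE_RATE * HOURS_PER_MONTH

def polling_cost(roles, interval_seconds):
    """Monthly transaction charge when each role polls once per interval."""
    polls_per_role = HOURS_PER_MONTH * 3600 / interval_seconds
    return roles * polls_per_role * TXN_RATE

print(f"compute, 5 instances: ${compute_cost(5):.2f}")
print(f"polling, 1 role:      ${polling_cost(1, 10):.2f}")
print(f"polling, 4 roles:     ${polling_cost(4, 10):.2f}")
print(f"6th instance for SLA: ${compute_cost(6) - compute_cost(5):.2f}")
```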

Note, there are some optimizations that could be made:

- Three instances of ImageProcessor are not needed if the system is idle, so using some dynamic scaling techniques could lower the cost. That said, there is only one instance each of ClientInterface and JobController, and for this system to qualify for the Azure Compute SLA there would need to be a minimum of six instances, two of each type, bringing the compute cost up about another $87 per month.

- Although the costs to poll the queues are rather insignificant, one optimization technique is to use an exponential back-off algorithm rather than polling at a fixed 10-second interval.
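An exponential back-off loop is short to write. The Python sketch below is one common variant, not code from the application. The queue read, message handler, and sleep function are passed in as plain callables (hypothetical stand-ins for the storage client). The delay starts at the current 10-second interval, doubles after each empty poll up to a cap, and snaps back to the base interval as soon as a message arrives.

```python
import itertools

def backoff_delays(base=10.0, factor=2.0, cap=60.0):
    """Yield sleep intervals for consecutive empty polls: base, base*factor, ... up to cap."""
    delay = base
    while True:
        yield delay
        delay = min(delay * factor, cap)

def poll_loop(get_message, handle, sleep, max_polls):
    """Poll a queue, backing off while it is empty and resetting when work arrives."""
    delays = backoff_delays()
    for _ in range(max_polls):
        message = get_message()
        if message is not None:
            handle(message)
            delays = backoff_delays()   # work arrived: return to the base interval
        else:
            sleep(next(delays))

print(list(itertools.islice(backoff_delays(), 6)))
```

With a 60-second cap, an idle role settles at one poll per minute, one sixth of the fixed 10-second rate, at the price of up to a minute of extra latency for the first task after a quiet period.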

Client Request Costs

Each request to create a photo mosaic brings in additional costs for storage and transactions. Some of the storage needs are very short term (queue messages) while others are more persistent, so the cost for one image conversion is a bit difficult to ascertain. To arrive at an average cost per request, I’d want to estimate usage on the system over a period of time, say a week or month, to determine the number of requests made, the average original image size, and the time for processing (and therefore storage retention). The table below lists the more significant factors I would need to consider given an average image size and Windows Azure service configuration.

| Service | Usage | Amount |
|---|---|---|
| Blob storage | Storage of original image, slices, and final image | ~$(2z^2 + 1)$ × original image size, where $z$ is the tile size selected when submitting the job |
| Queue storage | Messages for mosaic generation | ~2(s + 1) messages of < 1KB, where s is the number of slices into which the original image was partitioned |
| Table storage | Status and job entries | ~14s entities of ~1KB, where s is the number of slices into which the original image was partitioned |
| Storage transactions | Transactions supporting use of blobs, queues, and tables | queues: ≤ 6r transactions per minute, where r is the number of ImageProcessor roles; tables: ~(14s + 5) transactions per image request, where s is the number of slices into which the original image was partitioned; blobs: dependent on caching method (read on!) |
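Plugging this run's parameters into the table's formulas gives a feel for the per-request footprint. The Python sketch below simply encodes those approximations (the function and key names are mine, for illustration). Since the table expresses blob storage as a multiple of the original image size, passing an original size of 1 returns that multiple directly.

```python
def per_request_footprint(original_size, z, s, r):
    """Approximate per-request storage and transaction counts from the table.

    original_size: size of the submitted image (any unit)
    z: tile size, s: number of slices, r: number of ImageProcessor roles
    """
    return {
        "blob_storage": (2 * z**2 + 1) * original_size,
        "queue_messages": 2 * (s + 1),
        "table_entities": 14 * s,
        "table_transactions": 14 * s + 5,
        "max_queue_transactions_per_minute": 6 * r,
    }

print(per_request_footprint(original_size=1, z=16, s=6, r=3))
```

For the Lighthouse run (z = 16, six slices, three roles), blob storage comes to roughly 513 times the original image size, which is dominated by the 16384 x 12288 output.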

Note there are no bandwidth costs, since the compute and storage resources are collocated in the same Windows Azure data center (and there is never a charge for data ingress, such as submitting the original image for processing). The only bandwidth costs would be attributable to downloading the finished product.

I have also enabled storage analytics, so that’s an additional cost not included above, but since it’s tangential to the topic at hand, I factored that out of the discussion as well.

Image Library (Tile) Access Costs

Now let’s take a deeper look at the costs for the various caching scenarios, which draws our focus to the storage of tile images and the associated transaction costs. The table below summarizes the requirements for our candidate Lighthouse image, where:

- p is the number of pixels in the original image (786,432),

- t is the number of image tiles in the library (800), and

- s is the number of slices into which the original image was partitioned (6).

| Caching Mechanism | Number of Blob Storage Transactions | Transaction Costs ($0.01 per 10,000) | Storage Costs (monthly) |
|---|---|---|---|
| No Caching | p | $0.79 | $0.0015 |
| Blob Storage | p + t | $0.79 | $0.0015 |
| Caching without local cache | ≥ t | ≥ $0.0008¹ | ≥ $45.0015² |
| Caching with local cache | ≥ t | ≥ $0.0008¹ | ≥ $45.0015² |
| In-Role | s * t | $0.0048 | $0.0015 |

¹ Caching transaction costs cover the initial loading of the tile images from blob storage into the cache, which technically needs to occur only once regardless of the number of source images processed. Keep in mind, though, that cache expiration and eviction policies also apply, so images may need to be periodically reloaded into the cache, but at nowhere near the rate of directly accessing blob storage.

² Assumes a 128MB cache, plus the minuscule amount of persistent blob container storage. While 128MB fulfills space needs, other resource constraints may necessitate a larger cache size (as discussed below).
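The transaction column can be checked with a toy simulation. The Python sketch below is a simplified model, not the application's code: a counting stand-in replaces blob storage, every slice is assumed to use every tile at least once, existence checks against the thumbnail container are assumed to be free, and cache expiration and eviction are ignored (which is why the caching rows read "≥ t"). The in-role dictionary is rebuilt for every slice. It runs with a small pixel count so it finishes instantly; the counts still come out as p, p + t, t, and s * t.

```python
class CountingBlobStore:
    """Stand-in for blob storage that only counts transactions."""
    def __init__(self):
        self.transactions = 0

    def read(self, key):
        self.transactions += 1
        return ("image", key)

    def write(self, key, value):
        self.transactions += 1

def blob_transactions(mechanism, slices, pixels_per_slice, t):
    blobs = CountingBlobStore()
    thumbs = {}        # BlobStorage: contents of the secondary thumbnail container
    shared = {}        # AppFabric: distributed cache (hits are not blob transactions)
    for _ in range(slices):
        in_role = {}   # InRole: memory of the instance handling this slice
        for pixel in range(pixels_per_slice):
            tile = pixel % t          # assumption: each slice touches every tile
            if mechanism == "None":
                blobs.read(tile)      # re-read the original for every pixel
            elif mechanism == "BlobStorage":
                if tile in thumbs:
                    blobs.read(("thumb", tile))
                else:                 # first use: read original, write thumbnail
                    thumbs[tile] = blobs.read(tile)
                    blobs.write(("thumb", tile), thumbs[tile])
            elif mechanism == "AppFabric":
                if tile not in shared:
                    shared[tile] = blobs.read(tile)
            elif mechanism == "InRole":
                if tile not in in_role:
                    in_role[tile] = blobs.read(tile)
            else:
                raise ValueError(mechanism)
    return blobs.transactions

s, t = 6, 800
for m in ("None", "BlobStorage", "AppFabric", "InRole"):
    print(m, blob_transactions(m, s, pixels_per_slice=2000, t=t))

# Costs at $0.01 per 10,000 transactions, using the formulas for the full image.
p = 786_432
for name, txns in (("None", p), ("BlobStorage", p + t), ("AppFabric", t), ("InRole", s * t)):
    print(f"{name}: {txns} transactions, ${txns * 0.01 / 10_000:.4f}")
```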

Observations

1: Throttling is real

The storage requirements for the 800 Flickr images, each resized to 16 x 16, amount to 560KB. Even with the serialization overhead in Windows Azure Caching (empirically a factor of three in this case), there is plenty of space to store all of the resized tiles in a 128MB cache.

Consider that each original image slice (1024 x 128) yields 131,072 pixels, each of which will be replaced in the generated image with one of the 800 tiles in the cache. That’s 131,072 transactions to the cache for each slice, and a total of 786,432 (768K) transactions to the cache to generate just one photo mosaic.

Now take a look at the hourly limits for each cache size, and you can probably imagine what happened when I initially tested with a 128MB cache without local cache enabled.

| Cache Size | Transactions (1000s) per Hour | Bandwidth (MB) per Hour | Concurrent Connections | Monthly Cost |
|---|---|---|---|---|
| 128 MB | 400 | 1400 | 10 | $45 |
| 256 MB | 800 | 2800 | 10 | $55 |
| 512 MB | 1600 | 5600 | 20 | $75 |
| 1 GB | 3200 | 11200 | 40 | $110 |
| 2 GB | 6400 | 22400 | 80 | $180 |
| 4 GB | 12800 | 44800 | 160 | $325 |

The first three slices generated fine, and on the other three slices (which were running concurrently on different ImageProcessor instances) I received the following exception:

ErrorCode<ERRCA0017>:SubStatus<ES0009>:There is a temporary failure. Please retry later. (The request failed, because you exceeded quota limits for this hour. If you experience this often, upgrade your subscription to a higher one). Additional Information : Throttling due to resource : Transactions.
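The failure pattern is easy to reproduce on paper. The Python sketch below models the cache's hourly transaction allowance as a simple counter (a hypothetical stand-in, not a faithful model of the caching service) and runs the six slices in order. Because the first three slices together need 3 x 131,072 = 393,216 lookups, just under the 400,000 allowance of a 128MB cache, the ordering within each batch of three concurrent slices does not change the outcome.

```python
class QuotaExceeded(Exception):
    """Hypothetical stand-in for the ERRCA0017/ES0009 throttling error."""

class HourlyQuotaCache:
    """Counts transactions and refuses them once the hourly allowance is used up."""
    def __init__(self, hourly_limit):
        self.hourly_limit = hourly_limit
        self.used = 0

    def get(self, key):
        if self.used >= self.hourly_limit:
            raise QuotaExceeded(key)
        self.used += 1
        return key

def run_slices(cache, slices, lookups_per_slice):
    outcome = []
    for slice_id in range(slices):
        try:
            for pixel in range(lookups_per_slice):
                cache.get((slice_id, pixel))
            outcome.append((slice_id, "ok"))
        except QuotaExceeded:
            outcome.append((slice_id, "throttled"))
    return outcome

print(run_slices(HourlyQuotaCache(400_000), slices=6, lookups_per_slice=131_072))
```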

In my last post, I mentioned this possibility and the need to code defensively, but of course I hadn’t taken my own advice! At this point, I had three options:

- reissue the request directly to the original storage location whenever the exception is caught (recall a throttling exception can be determined by checking the DataCacheException SubStatus for the value DataCacheErrorSubStatus.QuotaExceeded [9]),

- wait until the transaction ‘odometer’ is reset the next hour (essentially bubble the throttling up to the application level), or

- provision a bigger cache.
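The first option amounts to a small wrapper around each tile lookup. The Python sketch below shows its shape. `QuotaExceeded` and `DictCache` are hypothetical stand-ins (in the .NET client the check is on `DataCacheException` with a `SubStatus` of `DataCacheErrorSubStatus.QuotaExceeded`), and `blob_get` and `make_thumbnail` represent the blob read and the resize. A throttled call goes straight to the source without trying to repopulate the cache, since that write would be throttled too.

```python
class QuotaExceeded(Exception):
    """Hypothetical stand-in for a throttled cache call."""

class DictCache:
    """Toy cache that can be switched into a throttled state."""
    def __init__(self):
        self.data = {}
        self.throttled = False

    def get(self, key):
        if self.throttled:
            raise QuotaExceeded(key)
        return self.data.get(key)

    def put(self, key, value):
        if self.throttled:
            raise QuotaExceeded(key)
        self.data[key] = value

def get_tile(key, cache, blob_get, make_thumbnail):
    """Serve a tile from cache, falling back to blob storage when throttled."""
    try:
        tile = cache.get(key)
    except QuotaExceeded:
        return make_thumbnail(blob_get(key))   # option 1: reissue against the source
    if tile is None:                            # ordinary miss: load once, then cache
        tile = make_thumbnail(blob_get(key))
        cache.put(key, tile)
    return tile

blob_reads = []
def blob_get(key):
    blob_reads.append(key)
    return f"original-{key}"

def make_thumbnail(image):
    return f"16x16 {image}"

cache = DictCache()
get_tile("a", cache, blob_get, make_thumbnail)   # miss: one blob read
get_tile("a", cache, blob_get, make_thumbnail)   # hit: no blob read
cache.throttled = True
get_tile("a", cache, blob_get, make_thumbnail)   # throttled: falls back to blob
print(blob_reads)
```

The trade-off is that every throttled lookup now costs a blob transaction plus a resize, so under sustained throttling this degrades toward the "None" row in the cost table.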

Well, given the goal of the experiment, the third option was what I went with – a 512MB cache, not for the space but for the concomitant transactions-per-hour allocation. That’s not free “in the real world” of course, and would result in a 66% monthly cost increase (from $45 to $75), and that’s just to process a single image! That observation alone should have you wondering whether using Windows Azure Caching in this way for this application is really viable.

Or should it??… The throttling limitation comes into play when the distributed cache itself is accessed, not if the local cache can fulfill the request. With the local cache option enabled and the current data set of 800 images of about 1.5MB total in size, each ImageProcessor role can service the requests intra-VM, from the local cache, with the result that no exceptions are logged even when using a 128MB cache. Each role instance does have to hit the distributed cache at least once in order to populate its local cache, but that’s only 800 transactions per instance, far below the throttling thresholds.
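A simplified model shows why the local cache sidesteps throttling. In the Python sketch below (hypothetical classes, not the caching client's API), each instance keeps its own time-limited copy of entries, and only a miss or an expired entry reaches the distributed cache, which is the only place transactions are counted. The objectCount limit and eviction are not modeled. Replaying this run's workload, with each of three instances handling two slices of 131,072 lookups over 800 tiles and a clock that never advances past the TTL, costs 800 distributed transactions per instance.

```python
class DistributedCache:
    """Shared cache; every call here counts against the hourly transaction quota."""
    def __init__(self, data):
        self.data = data
        self.transactions = 0

    def get(self, key):
        self.transactions += 1
        return self.data.get(key)

class LocalCache:
    """Per-instance copy of entries, each valid for ttl_seconds after it is fetched."""
    def __init__(self, backing, ttl_seconds, clock):
        self.backing, self.ttl, self.clock = backing, ttl_seconds, clock
        self.entries = {}                      # key -> (value, expires_at)

    def get(self, key):
        now = self.clock()
        cached = self.entries.get(key)
        if cached is not None and now < cached[1]:
            return cached[0]                   # served from VM memory: no quota cost
        value = self.backing.get(key)          # miss or expired: one quota transaction
        self.entries[key] = (value, now + self.ttl)
        return value

TILES = 800
distributed = DistributedCache({i: f"tile-{i}" for i in range(TILES)})
instances = [LocalCache(distributed, ttl_seconds=21_600, clock=lambda: 0.0) for _ in range(3)]

for local in instances:                        # each instance processes two slices
    for _ in range(2):
        for pixel in range(131_072):
            local.get(pixel % TILES)

print(distributed.transactions)
```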

2: Local caching is pretty efficient

I was surprised at how well local caching compares to the “in-role” mechanism in terms of performance; the raw values when utilizing local caching are almost always better (although perhaps not to a statistically significant level). While both are essentially in-memory caches within the same memory space, I would have expected a little overhead for the local cache, for deserialization if nothing else, when compared to direct access from within the application code.

The other bonus here of course is that hits against the local cache do not count toward the hourly transaction limit, so if you can get away with the additional staleness inherent in the local cache, it may enable you to leverage a lower tier of cache size and, therefore, save some bucks!

How much can I store in the local cache? There are no hard limits on the local cache size (other than the available memory on the VM). The size of the local cache is controlled by an objectCount property configurable in the app.config or web.config of the associated Windows Azure role. That property has a default of 10,000 objects, so you will need to do some math to determine how many objects you can cache locally within the memory available for the selected role size.

In my current application configuration, each ImageProcessor instance is a Small VM with 1.75GB of memory, and the space requirement for the serialized cache data is about 1.5MB, so there’s plenty of room to store the 800 tile images (and then some) without worrying about evictions due to space constraints.
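The "do some math" step for objectCount might look like the following Python sketch. The 10% share of VM memory set aside for the local cache is an arbitrary assumption for illustration, and the per-object size is simply the observed ~1.5MB of serialized data divided across the 800 tiles.

```python
def object_count_budget(vm_memory_bytes, cache_share_percent, avg_object_bytes):
    """Objects that fit in the share of VM memory reserved for the local cache."""
    return (vm_memory_bytes * cache_share_percent // 100) // avg_object_bytes

SMALL_VM_BYTES = 1792 * 1024 * 1024                # 1.75GB Small VM
avg_tile_bytes = (3 * 1024 * 1024 // 2) // 800     # ~1.5MB serialized / 800 tiles

budget = object_count_budget(SMALL_VM_BYTES, cache_share_percent=10,
                             avg_object_bytes=avg_tile_bytes)
print(avg_tile_bytes, budget)
```

Even with that conservative share, the budget is far above both the 800 tiles and the default objectCount of 10,000, so space-driven evictions are not a concern in this configuration.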

3: Be cognizant of cache timeouts

The default time-to-live on a local cache is 300 seconds (five minutes). In my first attempts to analyze behavior, I was a bit puzzled by what seemed to be inconsistent performance. In running the series of tests that exercise the local cache feature, I had not been circumspect in the amount of time between executions, so in some cases the local cache had expired (resulting in a request back to the distributed cache) and in some cases the image tile was served directly from the VM’s memory. This all occurs transparently, of course, but you may want to experiment with the TTL property of your caches depending on the access patterns demonstrated by your applications.

Below is what I ultimately set it to for my testing: 21600 seconds = 6 hours.

```xml
<localCache isEnabled="true" ttlValue="21600" objectCount="10000"/>
```

Timeout policy indirectly affects throttling. A short (aggressive) ttlValue will tend to increase the number of transactions to the distributed cache (which count against your hourly transaction limit), so you will want to balance the need for local cache currency with the economic and programmatic complexities introduced by throttling limits.
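To put rough numbers on that tradeoff, assume every tile is requested continuously, so each instance re-fetches each object from the distributed cache once per TTL. The Python sketch below (a steady-state approximation, not a measurement) compares the five-minute default with one hour and the six hours used here, for three instances and 800 tiles.

```python
def refresh_transactions_per_hour(instances, objects, ttl_seconds):
    """Distributed-cache transactions per hour spent re-populating local caches."""
    return instances * objects * 3600 / ttl_seconds

for ttl in (300, 3_600, 21_600):
    print(f"ttlValue={ttl:>6}: {refresh_transactions_per_hour(3, 800, ttl):>8.0f} per hour")
```

For this small workload even the default TTL stays well inside the 128MB tier's 400,000 transactions per hour; the TTL matters far more as the instance count or the size of the tile library grows.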

4: The second use of a role instance performs better

Recall that the role with ID 0 is responsible for both Slice 0 and Slice 3, ID 1 for Slices 1 and 4, and ID 2 for Slices 2 and 5. Looking at the graphs (see callout to the right), note that the second use of each role instance within a specific single execution almost always results in a shorter execution time. This is the case for the first run, where you might attribute the difference to a warm-up cost for each role instance (seeding the cache, for example), but it’s also found in the steady state run and also on scenarios where caching was not used and where the role processing should have been identical.

Unfortunately, I didn’t take the time to do any profiling of my Windows Azure Application while running these tests, but it may be something I revisit to solve the mystery.

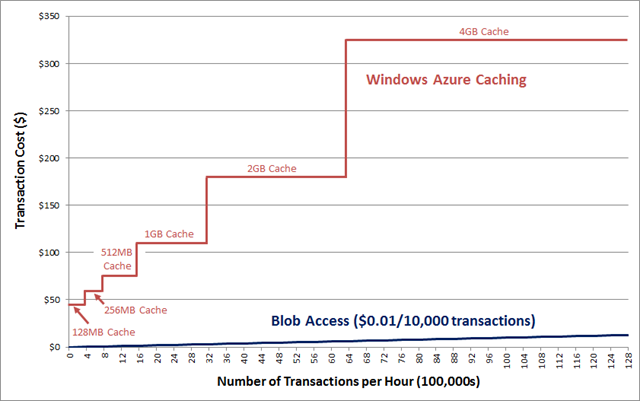

5: Caching pits economics versus performance

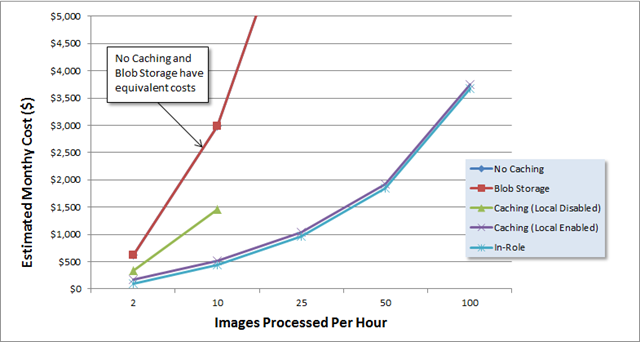

Let’s face it, caching looks expensive; the entry level of 128MB cache is $45 per month. Furthermore, each cache level has a hard limit on number of transactions per hour, so you may find (as I did) that you need to upgrade cache sizes not because of storage needs, but to gain more transactional capacity (or bandwidth or connections, each of which may also be throttled). The graph below highlights the stair-step nature of the transaction allotments per cache size in contrast with the linear charges for blob transactions.

With blob storage, the rough throughput is about 60MB per second, and given even the largest tile blob in my example, one should conservatively get 1000 transactions per second before seeing any performance degradation (i.e., throttling). In the span of an hour, that’s about 3.6 million blob transactions – over four times as many as needed to generate a single mosaic of the Lighthouse image. While that’s more than any of the cache options would seem to support, four images an hour doesn’t provide any scale to speak of!

BUT, that’s only part of the story: local cache utilization can definitely turn the tables! For the sake of discussion, assume that I've configured a local cache ttlValue of at least an hour. With the same parameters as I’ve used throughout this article, each ImageProcessor role instance will need to make only 800 requests per hour to the distributed cache. Those requests are to refresh the local cache from which all of the other 786K+ tile requests are served.

Without local caching, we couldn’t support the generation of even one mosaic image with a 128MB cache. With local caching and the same 128MB cache, we can sustain 400,000 / 800 = 500 ImageProcessor role ‘refreshes’ per hour. That essentially means (barring restarts, etc.) there can be 500 ImageProcessor instances in play without exceeding the cache transaction constraints. And by simply increasing the ttlValue and staggering the instance start times, you can eke out even more scalability (e.g., 1000 roles with a ttlValue of two hours, where 500 roles refresh their cache in the first hour, and the other 500 roles refresh in the second hour).
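The capacity figure is the refresh calculation run in reverse: the hourly allowance times the TTL in hours, divided by the objects each instance must fetch. This Python sketch assumes refreshes are spread evenly (the staggering described above) and that each instance fetches every tile exactly once per TTL.

```python
def max_instances(hourly_txn_limit, objects_per_instance, ttl_hours):
    """Instances whose local-cache refreshes fit in a cache tier's hourly allowance."""
    return hourly_txn_limit * ttl_hours // objects_per_instance

print(max_instances(400_000, 800, ttl_hours=1))   # 128MB tier, one-hour TTL
print(max_instances(400_000, 800, ttl_hours=2))   # 128MB tier, two-hour TTL, staggered
```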

So, let’s see how that changes the scalability and economics. Below I’ve put together a chart that shows how much you might expect to spend monthly to provide a given throughput of images per hour, from 2 to 100. This assumes an average image size of 1024 x 768 and processing times roughly corresponding to the experiments run earlier: a per-image duration of 200 minutes when CachingMechanism = {0|1}, 75 minutes when CachingMechanism = 2 with no local cache configured, and 25 minutes when CachingMechanism = {2|3} with local cache configured.

The costs assume the minimum number of ImageProcessor Role instances required to meet the per-hour throughput and do not include the costs common across the scenarios (such as blob storage for the images, the cost of the JobController and ClientInterface roles, etc.). The costs do include blob storage transaction costs as well as cache costs.

For the “Caching (Local Disabled)” scenario, the 2 images/hour goal can be met with a 512MB cache ($75), and the 10 images/hour goal can be met with a 4GB cache ($325). Above 10 images/hour there is no viable option (because of the transaction limitations), other than provisioning additional caches and load balancing among them. For the “Caching (Local Enabled)” scenario with a ttlValue of one hour, a 128MB ($45) cache is more than enough to handle both the memory and transaction requirements for even more than 100 images/hour.
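These tier choices can be checked against the quota table. The Python sketch below picks the smallest cache whose hourly transaction allowance covers the load. Without local cache, every tile lookup (786,432 per 1024 x 768 image) is a cache transaction; with a one-hour local cache TTL, only each instance's 800 refreshes count. The instance count passed in for the local-cache case is a hypothetical figure for illustration, and bandwidth and connection limits are ignored.

```python
TIERS = [  # (size, transactions per hour, monthly cost in $), from the quota table
    ("128 MB", 400_000, 45),
    ("256 MB", 800_000, 55),
    ("512 MB", 1_600_000, 75),
    ("1 GB", 3_200_000, 110),
    ("2 GB", 6_400_000, 180),
    ("4 GB", 12_800_000, 325),
]
LOOKUPS_PER_IMAGE = 1024 * 768   # one cache lookup per source pixel

def cache_txn_per_hour(images_per_hour, local_cache, instances=0, tiles=800, ttl_hours=1):
    """Distributed-cache transactions per hour for a given throughput."""
    if local_cache:
        # Only local-cache refreshes reach the distributed cache; throughput
        # enters through the number of instances needed to sustain it.
        return instances * tiles / ttl_hours
    return images_per_hour * LOOKUPS_PER_IMAGE

def smallest_tier(txn_per_hour):
    """Cheapest tier whose hourly transaction allowance covers the load, or None."""
    for size, limit, monthly_cost in TIERS:
        if txn_per_hour <= limit:
            return size, monthly_cost
    return None

for rate in (2, 10, 20):
    print(rate, smallest_tier(cache_txn_per_hour(rate, local_cache=False)))
print(100, smallest_tier(cache_txn_per_hour(100, local_cache=True, instances=50)))
```

Note how little headroom the local-disabled case has: two images per hour need 1,572,864 transactions against the 512MB tier's 1,600,000.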

Clearly the two viable options here are Windows Azure Caching with local cache enabled or using the original in-role mechanism. Performance-wise that makes sense since they are both in-memory implementations, although Windows Azure Caching has a few more bells-and-whistles. The primary benefit of Windows Azure Caching is that the local cache can be refreshed (from the distributed cache) without incurring additional blob storage transactions and latency; the in-role mechanism requires re-initializing the “local cache” from the blob storage directly each time an instance of the ImageProcessor handles a new image slice. The number of those additional blob transactions (800) is so low though that it’s overshadowed by the compute costs. If there were a significantly larger number of images in the tile library, that could make a difference, but then we might end up having to bump up the cache or VM size, which would again bring the costs back up.

In the end though, I’d probably give the edge to Windows Azure Caching for this application, since its in-memory implementation is more robust and likely more efficient than my home-grown version. It’s also backed up by the distributed cache, so I’d be able to grow into a cache-expiration plan and additional scalability pretty much automatically. The biggest volatility factor here would be the threshold for staleness of the tile images: if they need to be refreshed more frequently than, say, hourly, the economics could be quite different given the need to balance the high-transactional requirements for the cache with the hourly limits set by the Windows Azure Caching service.

Final Words

Undoubtedly, there’s room for improvement in my analysis, so if you see something that looks amiss or doesn’t make sense, let me know. Windows Azure Caching is not a ‘one-size-fits-all’ solution, and your applications will certainly have requirements and data access patterns that may lead you to completely different conclusions. My hope is that by walking through the analysis for this specific application, you’ll be cognizant of what to consider when determining how (or if) you leverage Windows Azure Caching in your architecture.

As timing would have it, I was putting the finishing touches on this post when I saw that the Forecast: Cloudy column in the latest issue of MSDN Magazine has as its topic Windows Azure Caching Strategies. Thankfully, it’s not a pedantic deep dive like this post, and it explores some other considerations for caching implementations in the cloud. Check it out!