Photo Mosaics Part 4: Tables

In this continuation of my deep-dive look at the Azure Photo Mosaics application I built earlier this year, we’ll take a look at the use of Windows Azure Table storage. If you’re just tuning in, you may want to catch up on the other posts of the series or at least the overview of the application.

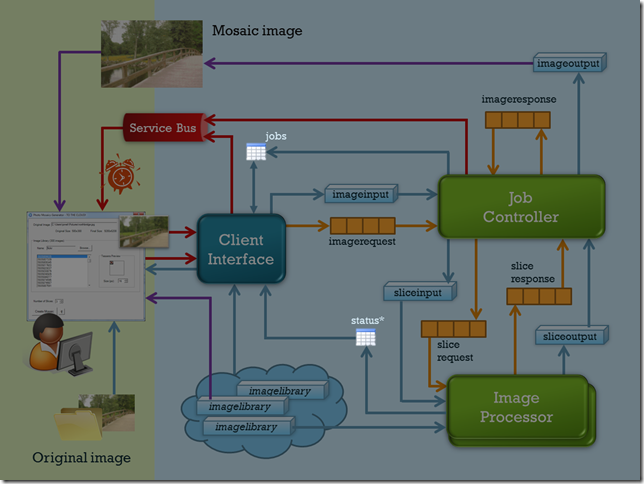

In the application, Windows Azure Tables are the least prevalent aspect of storage and primarily address the cross-cutting concerns of logging/diagnostics and maintaining a history of application utilization. In the diagram above, the two tables – jobs and status – are highlighted, and the primary integration points are shown. The status table in particular is accessed from just about every method in the roles running on Azure.

Windows Azure Table Storage Primer

Before I talk about the specific tables in use for the application, let’s review the core concepts of Windows Azure tables.

Size and performance limits

- max of 100TB (this is the maximum for a Windows Azure Storage account, and can be subdivided into blobs, queues, and tables as needed). If 100TB isn’t enough, simply create an additional storage account!

- unlimited number of tables per storage account

- up to 252 user-defined properties per entity

- maximum of 1MB per entity (referencing blob storage is the most common way to overcome this limitation).

- up to 5,000 entities per second across a given table or tables in a storage account

- up to 500 entities per second in a single table partition (where partition is defined by the PartitionKey value, read on!)

- latency of about 100ms when access is via services in the same data center (a code-near scenario)
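The two throughput targets lend themselves to a quick back-of-the-envelope check. The following sketch is plain C# with no Azure libraries. The MinPartitions helper is hypothetical, and the constants are simply the figures quoted in the list. It computes the minimum number of partitions a sustained write rate has to be spread across to stay under the per-partition target.

```csharp
using System;

// Targets quoted in the list above (check current documentation before relying on them).
const int PerPartitionTarget = 500;  // entities per second in a single partition
const int PerAccountTarget = 5000;   // entities per second across the storage account

// Minimum number of partitions needed for a sustained write rate (integer ceiling
// division). Rates above the account target call for additional storage accounts.
int MinPartitions(int entitiesPerSecond)
{
    if (entitiesPerSecond < 0 || entitiesPerSecond > PerAccountTarget)
        throw new ArgumentOutOfRangeException(nameof(entitiesPerSecond));
    return (entitiesPerSecond + PerPartitionTarget - 1) / PerPartitionTarget;
}

Console.WriteLine(MinPartitions(2000)); // 4
Console.WriteLine(MinPartitions(450));  // 1
```

This only counts partitions. The keys you choose still have to spread the writes evenly across them.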

Structured but non-schematized. This means that tables have a structure, rows and columns if you will, but ‘rows’ are really entities and ‘columns’ are really properties. Properties are strongly typed, but there is no requirement that each entity in a table have the same properties, hence non-schematized. This is a far cry from the relational world many of us are familiar with; in fact, Windows Azure Table Storage is an example of a key-value implementation of NoSQL. I often compare it to an Excel spreadsheet: the row and column structure is clear, but there’s no rule that says column two has to contain integers or that row four has to have the same number of columns as row three.

Singly indexed. Every entity in a Windows Azure table must have three properties:

- PartitionKey – a string value defining the partition to which the data is associated,

- RowKey – a string value, which when combined with the PartitionKey, provides the one unique index for the table, and

- Timestamp – a read-only DateTime value, maintained by the service.

The selection of the partition key (and row key) is the most important decision you can make regarding the scalability of your table. Data in each partition is serviced by a single processing node, so extensive concurrent reads and writes against the same partition can become a bottleneck for your application. For guidance, I recommend consulting the whitepaper on Windows Azure Tables authored by Jai Haridas, Niranjan Nilakantan, and Brad Calder. Jai also has a number of presentations on the subject from past Microsoft conferences that are available online.
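To make the "one unique index" idea concrete, here is a toy in-memory model in C#. It is hypothetical and not the Storage Client API. Entities live in a sorted dictionary keyed only by the (PartitionKey, RowKey) pair. As a result, a duplicate pair is rejected, the same RowKey may reappear in another partition, and enumeration comes back ordered by PartitionKey and then RowKey. Ordinal string comparison is an assumption standing in for the service's lexical ordering.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy table: the composite (PartitionKey, RowKey) pair is the only index.
var table = new SortedDictionary<(string PartitionKey, string RowKey), string>(
    Comparer<(string PartitionKey, string RowKey)>.Create((a, b) =>
    {
        int byPartition = string.CompareOrdinal(a.PartitionKey, b.PartitionKey);
        return byPartition != 0 ? byPartition : string.CompareOrdinal(a.RowKey, b.RowKey);
    }));

// An insert fails if an entity with the same composite key already exists.
bool TryInsert(string partitionKey, string rowKey, string payload)
{
    var key = (partitionKey, rowKey);
    if (table.ContainsKey(key))
        return false;
    table.Add(key, payload);
    return true;
}

TryInsert("job-B", "0002", "second message");
TryInsert("job-A", "0001", "first message");
TryInsert("job-B", "0001", "first message");          // same RowKey, different partition: fine
bool duplicate = TryInsert("job-A", "0001", "oops");   // same composite key: rejected

foreach (var entry in table)
    Console.WriteLine($"{entry.Key.PartitionKey} / {entry.Key.RowKey}: {entry.Value}");
Console.WriteLine($"duplicate accepted: {duplicate}"); // duplicate accepted: False
```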

REST API. Access to Windows Azure Storage is via a REST API that is further abstracted for developer consumption by the Windows Azure Storage Client API and the WCF Data Services Client Library. All access to table storage must be authenticated via Shared Key or Shared Key Lite authentication.
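The Storage Client library computes the authentication header for you, but it helps to see what Shared Key Lite signing involves. The sketch below follows the Table service's Shared Key Lite scheme as I understand it. The string to sign is the request date, a newline, and the canonicalized resource (/account/path). The signature is a Base64-encoded HMAC-SHA256 of that string, keyed with the decoded account key. The account name, key, and resource path are placeholders. The same date value would also have to be sent in the request's x-ms-date (or Date) header.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

// Shared Key Lite for the Table service (as sketched here):
//   StringToSign = Date + "\n" + CanonicalizedResource
//   Signature    = Base64(HMAC-SHA256(decoded account key, UTF-8(StringToSign)))
string SignSharedKeyLite(string accountName, string base64Key, string date, string canonicalizedResource)
{
    string stringToSign = date + "\n" + canonicalizedResource;
    using var hmac = new HMACSHA256(Convert.FromBase64String(base64Key));
    byte[] signature = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
    return $"SharedKeyLite {accountName}:{Convert.ToBase64String(signature)}";
}

// Placeholder account, key, and resource; a real key comes from the storage account.
string account = "myaccount";
string key = Convert.ToBase64String(Encoding.UTF8.GetBytes("not-a-real-key"));
string date = DateTime.UtcNow.ToString("R", CultureInfo.InvariantCulture); // RFC 1123 date
string resource = $"/{account}/status()";   // "/" + account name + request path

string authorization = SignSharedKeyLite(account, key, date, resource);
Console.WriteLine(authorization); // SharedKeyLite myaccount:<base64 signature>
```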

The Programming Model

The REST API point above mentions that access to Windows Azure Storage is via a RESTful API, and that’s indeed true, but the programmatic abstraction you’ll most likely incorporate is a combination of the Windows Azure Storage Client API and the WCF Data Services Client Library.

In the Storage Client API there are four primary classes you’ll use. Three of these have analogs or extend classes in the WCF Data Services Client Library, which means you’ll have access to most of the goodness of OData, entity tracking, and constructing LINQ queries in your cloud applications that access Windows Azure Table Storage.

| Class | Purpose |
|---|---|
| CloudTableClient | authenticate requests against Table Storage and perform DDL (table creation, enumeration, deletion, etc.) |
| CloudTableQuery | a query to be executed against Windows Azure Table Storage |
| TableServiceContext | a DataServiceContext specific to Windows Azure Table Storage |
| TableServiceEntity | a class representing an entity in Windows Azure Table Storage |

If you’ve built applications with WCF Data Services (over the Entity Framework targeting on-premises databases), you’re aware of the first-class development experience in Visual Studio: create a WCF Data Service, point it at your Entity Framework data model (EDM), and all your required classes are generated for you.

It doesn’t work quite that easily in Windows Azure Table Storage. There is no metadata document produced to facilitate tooling – and that actually makes sense. Since Azure tables have flexible schemas, how can you define what column 1 is versus column 2 when each row (entity) may differ?! To use WCF Data Services Client functionality, you have to programmatically enforce a schema to create a bit of order out of the chaos. You could still have differently shaped entities in a single table, but you’ll have to manage how you select data from that table and ensure that it’s selected into an entity definition that matches it.
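One way to impose that order over differently shaped entities is to keep a discriminator property on each entity and select only the rows that match the definition you’re projecting into. The sketch below models a table as a list of property bags in plain C#. The Kind property and the tuple projection are hypothetical conventions for illustration, not features of Table Storage or the Storage Client library.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One table, two entity shapes: both carry the key properties, but otherwise
// their properties differ. "Kind" is a hypothetical discriminator property.
var rows = new List<Dictionary<string, object>>
{
    new Dictionary<string, object> { ["PartitionKey"] = "job-1", ["RowKey"] = "01", ["Kind"] = "Status", ["Message"] = "tiles created" },
    new Dictionary<string, object> { ["PartitionKey"] = "job-1", ["RowKey"] = "02", ["Kind"] = "Metric", ["ElapsedMs"] = 1250L },
    new Dictionary<string, object> { ["PartitionKey"] = "job-1", ["RowKey"] = "03", ["Kind"] = "Status", ["Message"] = "mosaic complete" },
};

// Select only the entities shaped like a status entry and project each one into a
// strongly typed tuple; the metric row is skipped rather than mis-read.
var statusEntries = rows
    .Where(r => r.TryGetValue("Kind", out var kind) && (kind as string) == "Status")
    .Select(r => (RowKey: (string)r["RowKey"], Message: (string)r["Message"]))
    .ToList();

foreach (var (rowKey, message) in statusEntries)
    Console.WriteLine($"{rowKey}: {message}");
```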

TableServiceContext

As a developer you’ll define at least one TableServiceContext class per storage account within your application. TableServiceContext actually extends DataServiceContext, one of the primary classes in WCF Data Services, with which you may already be familiar. The TableServiceContext has two primary roles:

- authenticate access, via account name and key, and

- broker access between the data source (Windows Azure Table Storage) and the in-memory representation of the entities, tracking changes made so that the requisite commands can be formulated and dispatched to the data source when an update is requested
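The change-tracking half of that role can be illustrated with a toy unit of work in plain C#. Everything here is hypothetical and only loosely echoes DataServiceContext: the MarkAdded, MarkModified, MarkDeleted, and Save local functions, the string keys, and the state names. The sketch records state changes in memory and turns them into request descriptions on save. Inserts map to POST, updates to MERGE, and deletes to DELETE, which are the Table service REST verbs for insert, merge, and delete.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Pending state per entity key; nothing is "sent" until Save() is called.
var pending = new Dictionary<string, string>();

void MarkAdded(string key) => pending[key] = "Added";

void MarkModified(string key)
{
    // An entity inserted in this session is still just an insert.
    if (!pending.TryGetValue(key, out var state) || state != "Added")
        pending[key] = "Modified";
}

void MarkDeleted(string key)
{
    // Deleting an entity that was never sent simply forgets it.
    if (pending.TryGetValue(key, out var state) && state == "Added")
        pending.Remove(key);
    else
        pending[key] = "Deleted";
}

// Turn pending changes into request descriptions (REST verb + entity key).
List<string> Save()
{
    var requests = pending
        .OrderBy(p => p.Key, StringComparer.Ordinal)
        .Select(p => p.Value switch
        {
            "Added" => "POST " + p.Key,
            "Modified" => "MERGE " + p.Key,
            _ => "DELETE " + p.Key,
        })
        .ToList();
    pending.Clear(); // assume the requests succeeded; nothing is pending any more
    return requests;
}

MarkAdded("status/job-1/01");
MarkModified("status/job-1/01");   // still a single insert
MarkModified("jobs/client-7/42");
MarkAdded("status/job-1/02");
MarkDeleted("status/job-1/02");    // cancels the pending insert
MarkDeleted("jobs/client-7/99");

foreach (var request in Save())
    Console.WriteLine(request);
// MERGE jobs/client-7/42
// DELETE jobs/client-7/99
// POST status/job-1/01
```

With the real context, the corresponding calls are AddObject, UpdateObject, DeleteObject, and SaveChanges on the TableServiceContext, which it inherits from DataServiceContext.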

You will also typically use the TableServiceContext to help implement a Repository pattern to decouple your application code from the backend storage scheme, which is particularly helpful when testing.

In the Azure Image Processor, the TableAccessor class (through which the web and worker roles access storage) is essentially a Repository interface and encapsulates a reference to the TableServiceContext. Since this application has only two tables, the context class itself is quite simple:

```csharp
using System;
using System.Linq;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

public class TableContext : TableServiceContext
{
    public TableContext(String baseAddress, StorageCredentials credentials)
        : base(baseAddress, credentials)
    {
    }

    // all entities in the "status" table, as a composable query
    public IQueryable<StatusEntry> StatusEntries
    {
        get
        {
            return this.CreateQuery<StatusEntry>("status");
        }
    }

    // all entities in the "jobs" table, as a composable query
    public IQueryable<JobEntry> Jobs
    {
        get
        {
            return this.CreateQuery<JobEntry>("jobs");
        }
    }
}
```

The ‘magic’ here is that each of the properties returns an IQueryable, which means that you can further compose queries, as you can see in the method of TableAccessor below that retrieves the status entries for a job:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

public class TableAccessor
{
    private CloudTableClient _tableClient = null;

    private TableContext _context = null;
    public TableContext Context
    {
        get
        {
            // create the context lazily, reusing the client's endpoint and credentials
            if (_context == null)
                _context = new TableContext(_tableClient.BaseUri.ToString(), _tableClient.Credentials);
            return _context;
        }
    }

    public TableAccessor(String connectionString)
    {
        _tableClient = CloudStorageAccount.Parse(connectionString).CreateCloudTableClient();
    }

    // hypothetical method name; returns the status entries for a single job
    public IEnumerable<StatusEntry> GetStatusEntriesForJob(Guid jobId)
    {
        if (_tableClient.DoesTableExist("status"))
        {
            CloudTableQuery<StatusEntry> qry =
                (from s in Context.StatusEntries
                 where s.RequestId == jobId
                 select s).AsTableServiceQuery<StatusEntry>();
            return qry.Execute();
        }
        else
            return null;
    }

    // remainder elided for brevity
}
```

In the Context property, you can see the reference to the TableServiceContext. In the query that follows, the no-frills StatusEntries property from the context is further narrowed via LINQ to WCF Data Services to return only the status entries corresponding to the given job id. If you’re wondering what CloudTableQuery and AsTableServiceQuery are, we’ll get to those shortly.
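That composition can be tried without a storage account. In the sketch below, LINQ to Objects (via AsQueryable) stands in for WCF Data Services, and anonymous objects stand in for StatusEntry; both are assumptions for illustration. A base query plays the role of the StatusEntries property, the caller narrows it with a where clause, and nothing runs until the result is enumerated. Against the real context, that narrowing is what ends up as a filter on the REST request.

```csharp
using System;
using System.Linq;

var jobA = Guid.NewGuid();
var jobB = Guid.NewGuid();

// Stand-in for the status table; anonymous objects play the role of StatusEntry.
var store = new[]
{
    new { RequestId = jobA, RoleId = "WebRole_IN_0", Message = "job submitted" },
    new { RequestId = jobB, RoleId = "WebRole_IN_0", Message = "job submitted" },
    new { RequestId = jobA, RoleId = "WorkerRole_IN_0", Message = "slices processed" },
}.ToList();

// Plays the role of TableContext.StatusEntries: an unfiltered, composable query.
var statusEntries = store.AsQueryable();

// The caller narrows it further, much as the TableAccessor method does...
var messagesForJobA = from s in statusEntries
                      where s.RequestId == jobA
                      select s.Message;

// ...and because execution is deferred, an entry added before enumeration is still seen.
store.Add(new { RequestId = jobA, RoleId = "WorkerRole_IN_1", Message = "mosaic complete" });

Console.WriteLine(string.Join(", ", messagesForJobA)); // job submitted, slices processed, mosaic complete
```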

TableServiceEntity

What’s missing here? Well, in the code snippet above, it’s the definition of StatusEntry, and of course there’s the JobEntry class as well. Both of these extend the TableServiceEntity class, which predefines the three properties required of every Windows Azure table entity: PartitionKey, RowKey, and Timestamp. Below is the definition for StatusEntry; you can crack open the code to look at JobEntry.

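```csharp
using System;
using Microsoft.WindowsAzure.StorageClient;

public class StatusEntry : TableServiceEntity
{
    public Guid RequestId { get; set; }
    public String RoleId { get; set; }
    public String Message { get; set; }

    // parameterless constructor needed for deserialization
    public StatusEntry() { }

    public StatusEntry(Guid requestId, String roleId, String msg)
    {
        this.RequestId = requestId;
        this.RoleId = roleId;
        this.Message = msg;

        // keys as described below; the exact RowKey format is assumed to mirror JobEntry's
        this.PartitionKey = requestId.ToString();
        this.RowKey = String.Format("{0:D20}_{1}", DateTime.UtcNow.Ticks, Guid.NewGuid());
    }
}
```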

Note that I’ve set the PartitionKey to be the requestId; that means all of the status entries for a given image processing job are within the same partition. In the Windows Forms client application, this data is queried by requestId, so the choice is logical, and the query will return quickly since it’s being handled by a single processing node associated with the given partition.

Where this choice could be a poor one, though, is if there are rapid-fire inserts into the status table. Assume, for instance, that every line executed in the web and worker role code results in a status update. Since the table is partitioned by the job id, all of a job’s entries are serviced by a single processing node, so a bottleneck may occur and performance suffers. An alternative would be to partition based on a hash of, say, the tick count at which the status message was written, thus fanning out the handling of status messages to different processing nodes.
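Here is a minimal sketch of that fan-out in plain C#. It assumes eight partitions and uses MurmurHash3’s 64-bit finalizer as the hash; both are illustrative choices, not taken from the application. The simulated timestamps advance in fixed, coarse steps of 15.625 ms, which is also an assumption. That spacing shows why the raw tick count modulo the partition count is a poor "hash": the step shares a factor with 8, so only half the partitions would ever be used.

```csharp
using System;
using System.Linq;

const int BucketCount = 8;   // illustrative number of partitions

// MurmurHash3's 64-bit finalizer: scrambles the bits so nearby inputs spread out.
ulong Mix(ulong k)
{
    unchecked
    {
        k ^= k >> 33;
        k *= 0xff51afd7ed558ccdUL;
        k ^= k >> 33;
        k *= 0xc4ceb9fe1a85ec53UL;
        k ^= k >> 33;
        return k;
    }
}

// PartitionKey derived from the tick count at which a status message is written.
string PartitionKeyFor(long ticks) => ((int)(Mix((ulong)ticks) % BucketCount)).ToString("D2");

// 800 simulated writes on an assumed coarse clock advancing 156,250 ticks (15.625 ms) per step.
long start = new DateTime(2011, 8, 16, 0, 0, 0, DateTimeKind.Utc).Ticks;
long[] writeTicks = Enumerable.Range(0, 800).Select(i => start + i * 156_250L).ToArray();

// Raw ticks modulo 8 only ever hit 4 partitions here (the step is 2 mod 8);
// the mixed hash spreads the writes over all 8.
int naivePartitions = writeTicks.Select(t => t % BucketCount).Distinct().Count();
var hashedCounts = writeTicks.GroupBy(PartitionKeyFor).ToDictionary(g => g.Key, g => g.Count());

Console.WriteLine($"ticks % {BucketCount}: {naivePartitions} partitions used"); // ticks % 8: 4 partitions used
foreach (var pair in hashedCounts.OrderBy(p => p.Key))
    Console.WriteLine($"partition {pair.Key}: {pair.Value} writes");
```

The trade-off is on the read side. The Windows Forms client’s query by requestId would now have to visit every partition instead of just one.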

In this application, we don’t expect the status table to be a hot spot, so it’s not of primary concern, but I did want to underscore that how your data is used contextually may affect your choice of partitioning. In fact, it’s not unheard of to duplicate data in order to provide different indexing schemes for different uses of that data. Of course, in that scenario you bear the burden of keeping the data in sync to the degree necessary for the successful execution of the application.

For the RowKey, I’ve opted for a concatenation of the Ticks and a GUID. Why both? First of all, the combination of PartitionKey and RowKey must be unique, and there is a chance, albeit slim, that two different roles processing a given job will write a message at the exact same tick value. As a result, I brought in a GUID to differentiate the two. The use of ticks also enforces the default sort order, so that the status entries appear (more or less) in order. This will certainly be the case for entries written from a given role instance, but clock drift across instances could result in out-of-order events. For this application, exact order is not required, but if it is for you, you’ll need to consider an alternative synchronization mechanism, or better yet (in the world of the cloud) reconsider whether that requirement is really a ‘requirement’.

For JobEntry, by the way, the PartitionKey and RowKey are defined as follows:

```csharp
this.PartitionKey = clientId;
this.RowKey = String.Format("{0:D20}_{1}", this.StartTime.Ticks, requestId);
```

The clientId is currently a SID based on the execution of the Windows Forms client, but it could be extended to any token, such as an e-mail address that might be used in an OAuth-type scenario. The RowKey is a concatenation of a Ticks value and the requestId (a GUID). Strictly speaking, the GUID value is enough to guarantee uniqueness (each job has a single entity, or row, in the table), but I added the Ticks value to enforce a default sort order, so that when you select all the jobs of a given client they appear in chronological order rather than GUID order (which would be effectively random).
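Why does the D20 padding matter? The service sorts RowKey values as strings, not numbers. The sketch below is plain C#. The RowKey helper just reuses the String.Format pattern from JobEntry, and the tick values are arbitrary. It sorts padded and unpadded keys ordinally and shows the GUID suffix keeping keys unique when two entries share a tick value.

```csharp
using System;
using System.Linq;

// The JobEntry RowKey format: 20-digit zero-padded ticks, "_", then a GUID.
string RowKey(long tickCount, Guid id) => String.Format("{0:D20}_{1}", tickCount, id);

long[] ticks = { 10, 9, 100_000, 100_000 };   // arbitrary values, one repeated
var keys = ticks.Select(t => (Ticks: t, Key: RowKey(t, Guid.NewGuid()))).ToList();

// Ordinal string order of the padded keys matches numeric (chronological) order.
long[] byPaddedKey = keys.OrderBy(k => k.Key, StringComparer.Ordinal).Select(k => k.Ticks).ToArray();

// Without padding, "9" sorts after "10" and "100000".
string[] unpadded = ticks.Select(t => t.ToString()).OrderBy(s => s, StringComparer.Ordinal).ToArray();

// The GUID suffix keeps the two keys with identical ticks distinct.
bool allUnique = keys.Select(k => k.Key).Distinct().Count() == keys.Count;

Console.WriteLine(string.Join(", ", byPaddedKey)); // 9, 10, 100000, 100000
Console.WriteLine(string.Join(", ", unpadded));    // 10, 100000, 100000, 9
Console.WriteLine(allUnique);                      // True
```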

Be aware of the supported data types! Windows Azure Tables support eight data types (byte[], bool, DateTime, double, Guid, Int32, Int64, and String), so the properties defined for your TableServiceEntity class need to align with them, or you’ll get a rather generic DataServiceRequestException message:

```
An error occurred while processing this request.
```

with details in an InnerException indicating a 501 status code and the message:

```
The requested operation is not implemented on the specified resource.
RequestId:ddf8af86-521e-4c5e-b817-2b3a9c07007e
Time:2011-08-16T00:54:42.7881423Z
```

That’s why, in my JobEntry class for instance, the properties TileSize and Slices are typed as Int32, whereas throughout the rest of the application they are of type Byte.
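If you’d rather catch this before the service does, a reflection check along these lines can flag offending properties. It’s a sketch in plain C#. It assumes the eight supported types map to the .NET types in the set below, and the UnsupportedProperties helper is hypothetical. Anonymous objects stand in for entity classes so the example is self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// .NET counterparts of the eight Table Storage property types.
var supported = new HashSet<Type>
{
    typeof(byte[]), typeof(bool), typeof(DateTime), typeof(double),
    typeof(Guid), typeof(int), typeof(long), typeof(string),
};

// Names (and types) of the public properties that fall outside the supported set.
List<string> UnsupportedProperties(object entity) =>
    entity.GetType().GetProperties()
        .Where(p => !supported.Contains(p.PropertyType))
        .Select(p => $"{p.Name} ({p.PropertyType.Name})")
        .OrderBy(s => s, StringComparer.Ordinal)
        .ToList();

// Hypothetical entity shapes: Byte-typed properties as used elsewhere in the app,
// versus the same values widened to Int32 for storage.
var asUsedInApp = new { Name = "beach.jpg", TileSize = (byte)16, Slices = (byte)4 };
var asStored    = new { Name = "beach.jpg", TileSize = 16, Slices = 4 };

Console.WriteLine(string.Join(", ", UnsupportedProperties(asUsedInApp))); // Slices (Byte), TileSize (Byte)
Console.WriteLine(UnsupportedProperties(asStored).Count);                 // 0
```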

Curiously, I expected the same to be true of the Uri data, which I had explicitly redefined as String, but on revisiting this, Uri properties seem to work. I’m assuming there’s a ToString conversion going on under the covers to make it fly.

DataServiceQuery/CloudTableQuery

Now that we’ve got the structure of the data defined and the context to map our objects to the underlying storage, let’s take a look at query construction. In an excerpt above, you saw the following query:

```csharp
CloudTableQuery<StatusEntry> qry =
    (from s in Context.StatusEntries
     where s.RequestId == jobId
     select s).AsTableServiceQuery<StatusEntry>();

return qry.Execute();
```

That bit in the middle looks like a standard LINQ query to grab from the StatusEntries collection only those entities with a given RequestId, and that’s precisely what it is (more specifically, it’s a DataServiceQuery). In Windows Azure Table Storage, though, a DataServiceQuery isn’t always sufficient.

When issuing a request to Windows Azure Table Storage (it’s all REST under the covers, remember), you will get at most 1,000 entities returned in a single response. If more than 1,000 entities satisfy your query, you can get the next batch, but it requires an explicit call along with a continuation token. The service returns that token in the response headers, and you pass it back on the next request to tell the Azure Storage engine where to pick up returning results. Continuation tokens can also enter the picture with fewer than 1,000 entities, for instance when a query runs past the five-second execution limit or crosses a partition boundary, so it’s a best practice to always handle continuation tokens.
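The client-side pattern for honoring continuation tokens is a simple loop. Issue a request, keep what comes back, and repeat with the returned token until there isn’t one. The sketch below simulates the service in memory so the loop can run on its own. QuerySegment is a hypothetical stand-in for one REST round trip, not a Storage Client method. It stops each page either at 1,000 entities or at a partition boundary, mirroring two of the cases just described.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

const int MaxPerResponse = 1000;

// Simulated table: 2,500 entities, 1,200 per partition (p00, p01) plus 100 in p02,
// already in (PartitionKey, RowKey) order.
var entities = Enumerable.Range(0, 2500)
    .Select(i => (PartitionKey: $"p{i / 1200:D2}", RowKey: $"{i:D6}"))
    .ToList();

// One simulated round trip: start at the entity named by the token (or at the beginning),
// stop at MaxPerResponse entities or at a partition boundary, and return a token naming
// the next entity, or null when nothing is left.
(List<(string PartitionKey, string RowKey)> Page, (string PartitionKey, string RowKey)? Next)
    QuerySegment((string PartitionKey, string RowKey)? token)
{
    int start = token.HasValue ? entities.IndexOf(token.Value) : 0;
    var page = entities.Skip(start).Take(MaxPerResponse)
        .TakeWhile(e => e.PartitionKey == entities[start].PartitionKey)
        .ToList();
    int next = start + page.Count;
    if (next < entities.Count)
        return (page, entities[next]);
    return (page, null);
}

// Client loop: keep asking until the service stops handing back a token.
var results = new List<(string PartitionKey, string RowKey)>();
var pageSizes = new List<int>();
(string PartitionKey, string RowKey)? continuation = null;
do
{
    var (page, next) = QuerySegment(continuation);
    results.AddRange(page);
    pageSizes.Add(page.Count);
    continuation = next;
} while (continuation != null);

Console.WriteLine($"{results.Count} entities in {pageSizes.Count} round trips: {string.Join(", ", pageSizes)}");
// 2500 entities in 5 round trips: 1000, 200, 1000, 200, 100
```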

A DataServiceQuery does not handle continuation tokens, but you can use the extension method AsTableServiceQuery to convert the DataServiceQuery to a CloudTableQuery and get access to continuation token handling and some other Azure tables-specific functionality, such as:

- RetryPolicy enables you to specify how a request should be retried in cases where it times out or otherwise fails – remember, failure is a way of life in the cloud! There are a number of predefined retry policies (empirically, the undocumented default as of this writing is RetryExponential(3, 3, 90, 2)), and you can create your own by defining a delegate of type RetryPolicy.

- Execute runs the query and traverses all of the continuation tokens to return all of the results. This is a convenient method to use, since it handles the continuation tokens transparently, but it can be dangerous in that it will return all of the results requested, whether that’s 1 or 1 million (or more)!

- BeginExecuteSegmented and EndExecuteSegmented are also aware of continuation tokens, but they require you to loop over each ‘page’ of results. They’re a tad safer than the full-blown Execute and a good choice for a pagination scheme. In fact, I go through all the gory details in a blog post from my Azure@home series.
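The retry policies boil down to a simple loop: try, wait a growing amount of time, try again, and give up after N retries. The sketch below is a generic version of that idea in plain C#, not the Storage Client’s RetryExponential implementation. Several parts are assumptions. ExecuteWithRetry is hypothetical, and only TimeoutException is treated as transient. The delay formula (minimum plus delta times 2^attempt - 1, capped at the maximum) is also assumed. The random jitter a production policy would add is omitted so the example stays deterministic.

```csharp
using System;
using System.Collections.Generic;

// Retry an operation on TimeoutException, backing off exponentially between attempts.
// The sleep callback is injected so the demo runs instantly; real code could pass Thread.Sleep.
T ExecuteWithRetry<T>(Func<T> operation, int retryCount, TimeSpan minBackoff,
                      TimeSpan maxBackoff, TimeSpan deltaBackoff, Action<TimeSpan> sleep)
{
    for (int attempt = 0; ; attempt++)
    {
        try
        {
            return operation();
        }
        catch (TimeoutException) when (attempt < retryCount)
        {
            // min + delta * (2^attempt - 1), never more than max (illustrative formula)
            double ms = minBackoff.TotalMilliseconds
                      + deltaBackoff.TotalMilliseconds * (Math.Pow(2, attempt) - 1);
            sleep(TimeSpan.FromMilliseconds(Math.Min(ms, maxBackoff.TotalMilliseconds)));
        }
    }
}

// Simulated flaky call: the first two attempts time out, the third succeeds.
int calls = 0;
var waits = new List<TimeSpan>();
string outcome = ExecuteWithRetry(
    () => ++calls < 3 ? throw new TimeoutException("simulated") : "ok",
    retryCount: 3,
    minBackoff: TimeSpan.FromSeconds(3),
    maxBackoff: TimeSpan.FromSeconds(90),
    deltaBackoff: TimeSpan.FromSeconds(2),
    sleep: waits.Add);

Console.WriteLine($"{outcome} after {calls} calls; waited {string.Join(", ", waits)}");
// ok after 3 calls; waited 00:00:03, 00:00:05
```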

From the qry.Execute() line above, you can see I took the easy way out by letting CloudTableQuery grab everything in one fell swoop. In this context, that’s fine, because the number of status entries for a given job will be on the order of 10-50 versus thousands.

That’s pretty much it as far as the table access goes; next time we’ll cover the queues used by the Azure Photo Mosaic application.