Photo Mosaics Part 3: Blobs

Given that the Photo Mosaics application's domain is image processing, it shouldn't be a surprise that blobs (namely, images) are the most prevalent storage construct in the application. Blobs are used in two distinct ways:

- image libraries, collections of images used as tiles to generate a mosaic for the image submitted by the client. When requesting that a photo mosaic be created, the end-user picks a specific image library from which the tiles (or tesserae) should be selected.

- blob containers used in tandem with queues and roles to implement the workflow of the application.

Regardless of the blob's role in the application, access occurs almost exclusively via the storage architecture I described in my last post.

Image Libraries

In the Azure Photo Mosaics application, an image library is simply a blob container filled with JPEG, GIF, or PNG images. The Storage Manager utility that accompanies the source code has some functionality to populate two image libraries, one from Flickr and the other from the images in your My Pictures directory, but you can create your own libraries as well. What differentiates these containers as image libraries is simply a metadata element named ImageLibraryDescription.

Storage Manager creates the containers and sets the metadata using the Storage Client API that we covered in the last post; here's an excerpt from that code:

```csharp
// create a blob container
var container = new CloudBlobContainer(i.ContainerName.ToLower(), cbc);
try
{
    container.CreateIfNotExist();
    container.SetPermissions(
        new BlobContainerPermissions() { PublicAccess = BlobContainerPublicAccessType.Blob });
    container.Metadata["ImageLibraryDescription"] = "Image tile library for PhotoMosaics";
    container.SetMetadata();
    ...
```

In the application, the mere presence of the ImageLibraryDescription metadata element marks the container as an image library, so the actual value assigned really doesn't matter.

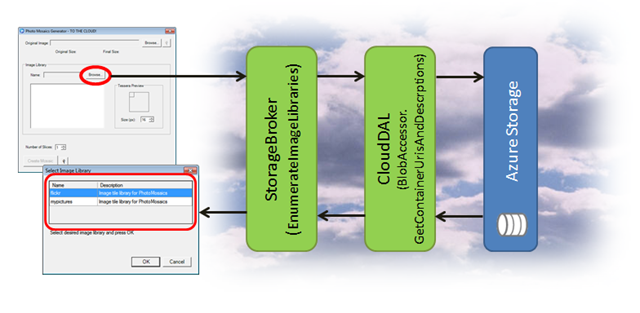

In the application workflow, it's the Windows Forms client application that initiates a request for all of the image libraries (so the user can pick which one he or she wants to use when generating the photo mosaic). As shown below, first the user selects the browse option in the client application. That invocation traverses the data access stack – asynchronously invoking EnumerateImageLibraries in the StorageBroker service, which in turn calls GetContainerUrisAndDescriptions on the BlobAccessor class in the CloudDAL, which accesses the blob storage service via the Storage Client API.

EnumerateImageLibraries (code shown below) ostensibly accepts a client ID to select which Azure Storage account to search for image libraries, but the current implementation ignores that parameter and defaults to the storage account directly associated with the application (via a configuration element in the ServiceConfiguration.cscfg). Swapping out GetStorageConnectionStringForApplication in line 5 for a method that searches for the storage account associated with a specific client would be an easy next step.

The CloudDAL enters the picture at line 6 via the invocation of GetContainerUrisAndDescriptions, passing in the specific metadata tag that differentiates image libraries from other blob containers in that same account.

```csharp
 1: public IEnumerable<KeyValuePair<Uri, String>> EnumerateImageLibraries(String clientRegistrationId)
 2: {
 3:     try
 4:     {
 5:         String connString = new StorageBrokerInternal().GetStorageConnectionStringForApplication();
 6:         return new BlobAccessor(connString).GetContainerUrisAndDescriptions("ImageLibraryDescription");
 7:     }
 8:     catch (Exception e)
 9:     {
10:         Trace.TraceError("Client: {0}{4}Exception: {1}{4}Message: {2}{4}Trace: {3}",
11:             clientRegistrationId,
12:             e.GetType(),
13:             e.Message,
14:             e.StackTrace,
15:             Environment.NewLine);
16:
17:         throw new SystemException("Unable to retrieve list of image libraries", e);
18:     }
19: }
```
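Swapping in a per-client lookup, the "easy next step" mentioned above, might look something like the sketch below. Everything in it is hypothetical: the `ClientStorageResolver` class, its `GetStorageConnectionStringForClient` method, and the in-memory dictionary are illustrations only (a real registry would presumably live in table storage or configuration). Unknown or missing client IDs fall back to the application's own account, which preserves the current behavior.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical resolver: maps a client registration ID to that client's
// storage connection string, falling back to the application's own account.
public class ClientStorageResolver
{
    private readonly IDictionary<String, String> _clientAccounts;
    private readonly String _applicationConnString;

    public ClientStorageResolver(IDictionary<String, String> clientAccounts,
                                 String applicationConnString)
    {
        _clientAccounts = clientAccounts ?? throw new ArgumentNullException(nameof(clientAccounts));
        _applicationConnString = applicationConnString;
    }

    // Client-specific connection string if one is registered,
    // otherwise the application-wide default.
    public String GetStorageConnectionStringForClient(String clientRegistrationId)
    {
        // Dictionary lookups throw on a null key, so guard before calling TryGetValue.
        if (clientRegistrationId != null &&
            _clientAccounts.TryGetValue(clientRegistrationId, out var connString))
        {
            return connString;
        }
        return _applicationConnString;
    }
}
```

Line 5 of EnumerateImageLibraries would then call this resolver with clientRegistrationId instead of ignoring the parameter.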

The implementation of GetContainerUrisAndDescriptions appears below. The Storage Client API provides the ListContainers method (line 4), which yields an IEnumerable that we can do some LINQ magic on in lines 7-10. The end result of this method is to return just the URIs and descriptions of the containers (as a collection of KeyValuePair) that have the metadata element specified in the optional parameter. If that parameter is null (or not passed), then all of the containers are returned, each with a null description.

```csharp
 1: // get list of containers and optional metadata key value
 2: public IEnumerable<KeyValuePair<Uri, String>> GetContainerUrisAndDescriptions(String metadataTag = null)
 3: {
 4:     var containers = _authBlobClient.ListContainers("", ContainerListingDetails.Metadata);
 5:
 6:     // get list of containers with metadata tag set (or all of them …
 7:     return from c in containers
 8:            let descTag = (metadataTag == null) ?
                   null : HttpUtility.UrlDecode(c.Metadata[metadataTag.ToLower()])
 9:            where (descTag != null) || (metadataTag == null)
10:            select new KeyValuePair<Uri, String>(c.Uri, descTag);
11: }
```
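To see what the query in lines 7-10 returns without a storage account, here is a small in-memory sketch. `ContainerInfo` and `ContainerFilter` are hypothetical stand-ins (a container is reduced to a URI plus a metadata collection), and `WebUtility.UrlDecode` from System.Net is swapped in for `HttpUtility.UrlDecode` so the example needs no System.Web reference.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.Specialized;
using System.Linq;
using System.Net;

// Hypothetical stand-in for a listed blob container: its URI plus metadata.
public class ContainerInfo
{
    public Uri Uri { get; set; }
    public NameValueCollection Metadata { get; set; } = new NameValueCollection();
}

public static class ContainerFilter
{
    // Same query shape as GetContainerUrisAndDescriptions, over an in-memory list.
    public static IEnumerable<KeyValuePair<Uri, String>> UrisAndDescriptions(
        IEnumerable<ContainerInfo> containers, String metadataTag = null)
    {
        return from c in containers
               // NameValueCollection's indexer yields null for a missing key,
               // and UrlDecode(null) returns null, so untagged containers drop out.
               let descTag = (metadataTag == null)
                   ? null
                   : WebUtility.UrlDecode(c.Metadata[metadataTag.ToLower()])
               where (descTag != null) || (metadataTag == null)
               select new KeyValuePair<Uri, String>(c.Uri, descTag);
    }
}
```

Passing a tag keeps only the tagged containers; passing nothing returns every container with a null description.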

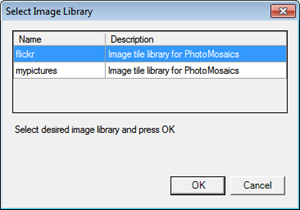

After the list of container names and descriptions has been retrieved, the Windows Forms client displays them in a simple dialog form, prompting the user to select one of them.

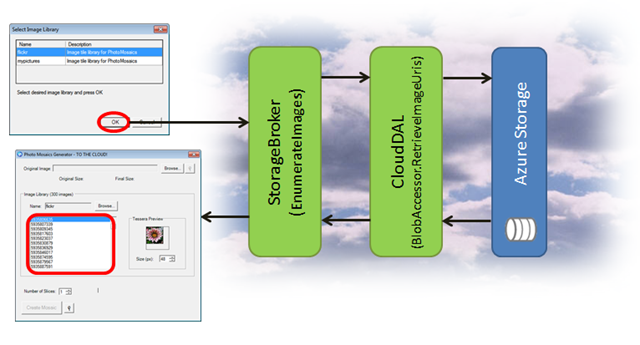

When the user makes a selection, another request flows through the same data access stack:

- The Windows Forms client invokes EnumerateImages on the StorageBroker service, passing in the URI of the image library (i.e., blob container) selected in the previous dialog

- EnumerateImages invokes RetrieveImageUris on the BlobAccessor class in the CloudDAL, also passing in the URI of the container

- RetrieveImageUris (below) builds a list of the blobs in that container that have a content type of image/jpeg, image/png, or image/gif

```csharp
 1: // get list of images given blob container name
 2: public IEnumerable<Uri> RetrieveImageUris(String containerName, String prefix = "")
 3: {
 4:     IEnumerable<Uri> blobNames = new List<Uri>();
 5:     try
 6:     {
 7:         // get unescaped names of blobs that have image content
 8:         var contentTypes = new String[] { "image/jpeg", "image/gif", "image/png" };
 9:         blobNames = (from CloudBlob b in
10:                          _authBlobClient.ListBlobsWithPrefix(String.Format("{0}/{1}", containerName, prefix),
11:                              new BlobRequestOptions() { UseFlatBlobListing = true })
12:                      where contentTypes.Contains(b.Properties.ContentType)
13:                      select b.Uri).ToList();  // ToList() runs the deferred query here, so failures reach the catch
14:     }
15:     catch (Exception e)
16:     {
17:         Trace.TraceError("BlobUri: {0}{4}Exception: {1}{4}Message: {2}{4}Trace: {3}",
18:             String.Format("{0}/{1}", containerName, prefix),
19:             e.GetType(),
20:             e.Message,
21:             e.StackTrace,
22:             Environment.NewLine);
23:         throw;
24:     }
25:
26:     return blobNames;
27: }
```

What is the prefix parameter in RetrieveImageUris for? In this specific context, the prefix parameter defaults to an empty string, but in general it allows us to query blobs as if they represented directory paths. When it comes to Windows Azure blob storage, you can have any number of containers, but containers are flat and cannot be nested. You can, however, create a faux hierarchy using a naming convention. For instance, https://azuremosaics.blob.core.windows.net/images/foods/dessert/icecream.jpg is a perfectly valid blob URI: the container is images, and the blob name is foods/dessert/icecream.jpg – yes, the whole thing as one opaque string. In order to provide some semantic meaning to that name, the Storage Client API method ListBlobsWithPrefix supports designating name prefixes, so you could indeed enumerate just the blobs in the pseudo-directory foods/dessert.
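Because the namespace inside a container is flat, a prefix query is really just string matching on blob names. The sketch below mimics that over an in-memory list, and also shows how a delimiter such as `/` can group names into pseudo-directories. `FlatBlobNames` and its methods are hypothetical helpers for illustration, not part of the Storage Client API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class FlatBlobNames
{
    // Flat listing: every blob whose full name starts with the prefix.
    public static IEnumerable<String> WithPrefix(IEnumerable<String> names, String prefix)
    {
        return names.Where(n => n.StartsWith(prefix, StringComparison.Ordinal));
    }

    // Hierarchical view: the distinct "subdirectories" directly under the prefix,
    // i.e., each name cut off just after the next delimiter past the prefix.
    public static IEnumerable<String> PseudoDirectories(
        IEnumerable<String> names, String prefix, Char delimiter = '/')
    {
        return WithPrefix(names, prefix)
            .Select(n => new { Name = n, Next = n.IndexOf(delimiter, prefix.Length) })
            .Where(x => x.Next >= 0)
            .Select(x => x.Name.Substring(0, x.Next + 1))
            .Distinct();
    }
}
```

With names such as foods/dessert/icecream.jpg and foods/entree/steak.jpg, the prefix foods/ yields the pseudo-directories foods/dessert/ and foods/entree/, while the prefix foods/dessert/ lists only the dessert blobs.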

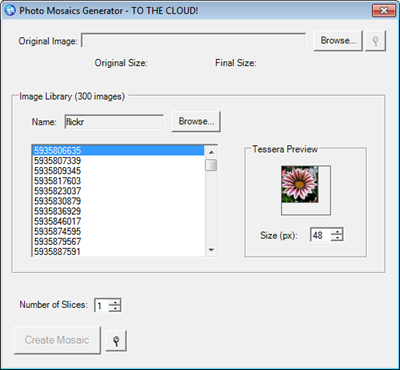

After the call to the service method EnumerateImages has completed, the Windows Forms client populates the list of images within the selected image library. When a user selects an image in that list, a preview of the tile, in the selected size, is shown.

You might be inclined to assume that another service call has been made to retrieve the individual image tile, but that's not the case! The retrieval occurs via a simple HTTP GET, by virtue of assigning the blob image URI (e.g., https://azuremosaics.blob.core.windows.net/flickr/5935820179) to the ImageLocation property of the Windows Forms PictureBox; there are no web service calls, no WCF.
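Since a blob in a publicly readable container is just an HTTP resource, any HTTP client can fetch it, not only PictureBox. This sketch composes a blob URI following the `https://{account}.blob.core.windows.net/{container}/{blob}` pattern of the URIs above and downloads it anonymously with `HttpClient`. The `PublicBlob` class and its method names are hypothetical, and the download only succeeds for containers that allow anonymous blob reads.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

public static class PublicBlob
{
    // The blob name may contain '/' (pseudo-directories), so each segment is
    // escaped separately and the slashes between segments are preserved.
    public static Uri BuildUri(String account, String container, String blobName)
    {
        var segments = blobName.Split('/');
        for (int i = 0; i < segments.Length; i++)
            segments[i] = Uri.EscapeDataString(segments[i]);
        return new Uri(String.Format("https://{0}.blob.core.windows.net/{1}/{2}",
            account, container, String.Join("/", segments)));
    }

    // Anonymous GET: no account key, no signature, no service call.
    public static async Task<Byte[]> DownloadAsync(HttpClient http, Uri blobUri)
    {
        using (var response = await http.GetAsync(blobUri))
        {
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsByteArrayAsync();
        }
    }
}
```

For example, `await PublicBlob.DownloadAsync(new HttpClient(), PublicBlob.BuildUri("azuremosaics", "flickr", "5935820179"))` would fetch the same bytes that PictureBox renders.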

We can do that here because of the access policy we've assigned to the blob containers designated as image libraries. If you revisit the Storage Manager code that I mentioned at the beginning of this post, you'll see that there is a SetPermissions call on the image library containers. SetPermissions is part of the Storage Client API and is used to set the public access policy for the blob container; there are three options:

- Blob – allow anonymous clients to retrieve blobs and metadata (but not enumerate blobs in containers),

- Container – allow anonymous clients to additionally view container metadata and enumerate blobs in a container (but not enumerate containers within an account),

- Off – do not allow any anonymous access; all requests must be signed with the Azure Storage Account key.
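The three options amount to a small decision table for anonymous (unsigned) requests. The sketch below encodes the rules exactly as listed above; `PublicAccessLevel` reuses the member names of `BlobContainerPublicAccessType`, but `AnonymousOperation` and `IsAllowed` are hypothetical, a model for reasoning about the policy rather than how the storage service implements the check.

```csharp
using System;

// Same member names as BlobContainerPublicAccessType.
public enum PublicAccessLevel { Off, Blob, Container }

// Hypothetical classification of anonymous requests.
public enum AnonymousOperation
{
    ReadBlob,              // retrieve a blob and its metadata
    ReadContainerMetadata, // view the container's metadata
    ListBlobs,             // enumerate blobs within one container
    ListContainers         // enumerate containers within the account
}

public static class AnonymousAccess
{
    public static bool IsAllowed(PublicAccessLevel level, AnonymousOperation op)
    {
        switch (op)
        {
            case AnonymousOperation.ReadBlob:
                return level == PublicAccessLevel.Blob || level == PublicAccessLevel.Container;
            case AnonymousOperation.ReadContainerMetadata:
            case AnonymousOperation.ListBlobs:
                return level == PublicAccessLevel.Container;
            case AnonymousOperation.ListContainers:
                return false; // never anonymous, whatever the container's level
            default:
                throw new ArgumentOutOfRangeException(nameof(op));
        }
    }
}
```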

With the public access level set to Blob, each blob in the container can be accessed directly, even in a browser, without requiring authentication (but you must know the blob's URI). By the way, blobs are the only storage construct in Windows Azure Storage that allow access without authenticating via the account key, and, in fact, there's another authentication mechanism for blobs that sits between public access and fully authenticated access: shared access signatures.

Shared access signatures allow you to more granularly define what functions can be performed on a blob or container and the duration those functions can be applied. You could, for instance, set up a shared access signature (which is a hash tacked on to the blob's URI) to allow access to, say, a document for just 24 hours and disseminate that link to the designated audience. While anyone with that shared access signature-enabled link could access the content, the link would expire after a day.
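To make the mechanism concrete, here is a deliberately simplified sketch of a signed, expiring link: the holder of the account key computes an HMAC-SHA256 over the resource path, permissions, and expiry, then appends those fields and the signature to the URI; the service, which also holds the key, recomputes the HMAC and rejects the request if the signature doesn't match or the expiry has passed. The query parameter names `sp`, `se`, and `sig` are borrowed from real shared access signatures, but the string being signed here is an assumption and does not match Azure's actual format, so this cannot produce a working Azure SAS. `SimpleSas` is a hypothetical name.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

// ASSUMPTION: the string-to-sign is invented for illustration; it is NOT
// the real Azure shared access signature format.
public static class SimpleSas
{
    private const String ExpiryFormat = "yyyy-MM-dd'T'HH:mm:ss'Z'";

    private static String StringToSign(String resourcePath, String permissions, DateTime expiryUtc)
    {
        return resourcePath + "\n" + permissions + "\n" +
               expiryUtc.ToString(ExpiryFormat, CultureInfo.InvariantCulture);
    }

    private static String Sign(Byte[] accountKey, String stringToSign)
    {
        using (var hmac = new HMACSHA256(accountKey))
        {
            return Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign)));
        }
    }

    // Issuer side: the query string to tack onto the blob URI.
    public static String CreateQuery(Byte[] accountKey, String resourcePath,
                                     String permissions, DateTime expiryUtc)
    {
        String sig = Sign(accountKey, StringToSign(resourcePath, permissions, expiryUtc));
        return "sp=" + Uri.EscapeDataString(permissions)
             + "&se=" + Uri.EscapeDataString(expiryUtc.ToString(ExpiryFormat, CultureInfo.InvariantCulture))
             + "&sig=" + Uri.EscapeDataString(sig);
    }

    // Service side: recompute the signature from the presented fields, then check expiry.
    public static bool Validate(Byte[] accountKey, String resourcePath, String permissions,
                                DateTime expiryUtc, String sig, DateTime nowUtc)
    {
        String expected = Sign(accountKey, StringToSign(resourcePath, permissions, expiryUtc));
        // Ordinal comparison for brevity; production code should compare in constant time.
        return String.Equals(expected, sig, StringComparison.Ordinal) && nowUtc <= expiryUtc;
    }
}
```

For the 24-hour link described above, the issuer would pass `DateTime.UtcNow.AddHours(24)` as the expiry; changing any signed field (say, `sp=r` to `sp=rw`) invalidates the signature.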

Blobs in the Workflow

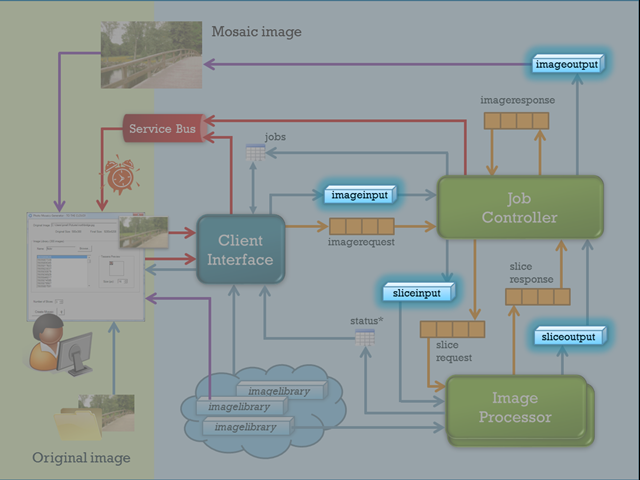

The second use of blobs within the Azure Photo Mosaics application is the transformation of the original image into the mosaic. Recall from the overall workflow diagram (below) that there are four containers used to move the image along as it's being 'mosaicized':

imageinput

This is the entry point of an image into the workflow. The image is transferred from the client via a WCF service call (SubmitImageForProcessing in the JobBroker service of the AzureClientInterface Web Role) and assigned a new name as it's copied to blob storage: a GUID corresponding to the unique job id for the given request.

As you might expect by now, BlobAccessor in the CloudDAL does the heavy lifting via the code below. The entry point is actually the second method (line 17), to which the image bytes, the imageinput container name, and the GUID are passed from the service call. The Storage Client API is then used to set the content type and upload the blob (lines 5 and 11, respectively).

```csharp
 1: // store image with given URI (with optional metadata)
 2: public Uri StoreImage(Byte[] bytes, Uri fullUri, NameValueCollection metadata = null)
 3: {
 4:     CloudBlockBlob blobRef = new CloudBlockBlob(fullUri.ToString(), _authBlobClient);
 5:     blobRef.Properties.ContentType = "image/jpeg";
 6:
 7:     // add the metadata
 8:     if (metadata != null) blobRef.Metadata.Add(metadata);
 9:
10:     // upload the image
11:     blobRef.UploadByteArray(bytes);
12:
13:     return blobRef.Uri;
14: }
15:
16: // store image with given container and image name (with optional metadata)
17: public Uri StoreImage(Byte[] bytes, String container, String imageName, NameValueCollection metadata = null)
18: {
19:     var containerRef = _authBlobClient.GetContainerReference(container);
20:     containerRef.CreateIfNotExist();
21:
22:     return StoreImage(bytes, new Uri(String.Format("{0}/{1}", containerRef.Uri, imageName)), metadata);
23: }
```

sliceinput

When an image is submitted by the end-user, the 'number of slices' option in the Windows Forms client user interface is passed along to specify how the original image should be divided in order to parallelize the task across the instances of ImageProcessor worker roles deployed in the cloud. For purposes of the sample application, the ideal number to select is the number of ImageProcessor instances currently running, but a more robust implementation would dynamically determine an optimal number of slices given the current system topology and workload. Regardless of how the number of image segments is determined, the original image is taken from the imageinput container and 'chopped up' into uniformly sized slices. Each slice is stored in the sliceinput container for subsequent processing.
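Dividing an image into a requested number of uniformly sized slices is mostly integer arithmetic. The sketch below assumes horizontal strips (the slicing direction isn't specified here) and spreads any leftover rows over the first slices, so no two slices differ in height by more than one pixel. `SliceBand` and `Slicer.ComputeBands` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// A horizontal band of the source image: starting row and height in pixels.
public struct SliceBand
{
    public int Top;
    public int Height;
    public SliceBand(int top, int height) { Top = top; Height = height; }
}

public static class Slicer
{
    public static List<SliceBand> ComputeBands(int imageHeight, int sliceCount)
    {
        if (imageHeight <= 0) throw new ArgumentOutOfRangeException(nameof(imageHeight));
        if (sliceCount <= 0 || sliceCount > imageHeight)
            throw new ArgumentOutOfRangeException(nameof(sliceCount));

        int baseHeight = imageHeight / sliceCount;
        int remainder = imageHeight % sliceCount;   // rows left over after even division
        var bands = new List<SliceBand>(sliceCount);
        int top = 0;
        for (int i = 0; i < sliceCount; i++)
        {
            // The first 'remainder' slices each take one extra row.
            int height = baseHeight + (i < remainder ? 1 : 0);
            bands.Add(new SliceBand(top, height));
            top += height;
        }
        return bands;
    }
}
```

For a 1000-pixel-tall image and three slices, this yields bands of 334, 333, and 333 rows starting at rows 0, 334, and 667.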

The real work is in carving the image into slices; once that's done, storing each slice is just a call to StoreImage in the BlobAccessor:

```csharp
Uri slicePath = blobAccessor.StoreImage(slices[sliceNum], BlobAccessor.SliceInputContainer,
    String.Format("{0}_{1:000}", requestMsg.BlobName, sliceNum));
```

In the above snippet, each element of the slices array contains an array of bytes representing one slice of the original image. As you can see by the String.Format call, each slice retains the original name of the image (a GUID) with a suffix indicating a slice number (e.g., 21EC2020-3AEA-1069-A2DD-08002B30309D_003).

sliceoutput

As each slice of the original image is processed, the 'mosaicized' output slice is stored in the sliceoutput container with the exact same name as it had in the sliceinput container. There's not much more going on here!

imageoutput

After all of the slices have been processed, the JobController Worker Role has a bit of work to do to piece together the individual processed slices into the final image, but once that's done it's just a simple call to StoreImage in the BlobAccessor to place the completed mosaic in the imageoutput container.
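Because every processed slice keeps the `{GUID}_{NNN}` name it was given in sliceinput, the JobController can recover the original order from the names alone, regardless of the order in which worker instances finish. A hypothetical helper for that step might look like this; `SliceNames` is an illustrative name, and the sketch only orders the slice names, leaving the actual image stitching out.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

public static class SliceNames
{
    // Same convention as the StoreImage call for sliceinput: name, underscore, 3-digit slice number.
    public static String Format(String jobBlobName, int sliceNum)
    {
        return String.Format(CultureInfo.InvariantCulture, "{0}_{1:000}", jobBlobName, sliceNum);
    }

    // Parses the slice number from the suffix after the last underscore.
    public static int ParseSliceNumber(String sliceBlobName)
    {
        int underscore = sliceBlobName.LastIndexOf('_');
        if (underscore < 0)
            throw new FormatException("Not a slice name: " + sliceBlobName);
        return int.Parse(sliceBlobName.Substring(underscore + 1),
                         NumberStyles.None, CultureInfo.InvariantCulture);
    }

    // Returns one job's slice names in reassembly (slice-number) order.
    public static List<String> InAssemblyOrder(IEnumerable<String> sliceBlobNames)
    {
        return sliceBlobNames.OrderBy(n => ParseSliceNumber(n)).ToList();
    }
}
```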

A Quandary

Since I've already broached the subject of access control with the image libraries, let's revisit that topic now in the context of the blob containers involved in the workflow. Of the four containers, two (sliceinput and sliceoutput) are used for internal processing and their contents never need to be viewed outside the confines of the cloud application. The original image (in imageinput) and the generated mosaic (in imageoutput) do, however, have relevance outside of the generation process.

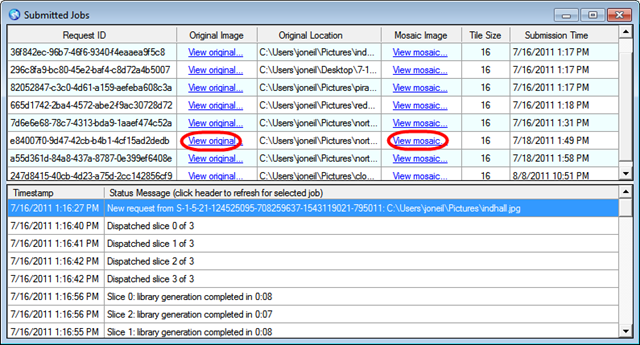

In the current client application, the links that appear in the Submitted Jobs window (above) directly reference images that are associated with the current user, and that user can access both the original and the generated image quite easily, via a browser even. That's possible since the imageinput and imageoutput containers have a public access policy just like the image libraries; that is, you can access a blob and its metadata if you know its name. Of course, anyone can access those images if they know the name. Granted, the name is somewhat obfuscated since it's a GUID, but it's far from private.

So how can we go about making the images associated with a given user accessible only to that user?

- You might consider a shared access signature on the blob. This allows you to create a decorated URI that allows access to the blob for a specified amount of time. The drawbacks here are that anyone with that URI can still access the blob, so it's only slightly more secure than what we have now. Additionally, a simple shared access signature has a maximum lifetime of one hour, so it wouldn't accommodate indefinite access to the blob.

- You can extend shared access signatures with a signed identifier, which associates that signature with a specific access policy on the container. The container access policy doesn't have the timeout constraints, so the client could have essentially unlimited access. The URI can still be compromised, although access can be revoked globally by modifying the access policy that the signed identifier references. Beyond that, though, there's a limit of five access policies per container, so if you wanted to somehow map a policy to an end user, you'd be limited to storing images for only five clients per container.

- You could set up customer-specific containers or even accounts, so each client has his own imageinput and imageoutput containers, and all access to the blobs is authenticated. That may result in an explosion of containers for you to manage though, and it would require providing a service interface since you don't want the clients to have the authentication keys for those containers. I'd argue that you could accomplish the same goal with a single pair of imageinput and imageoutput containers fronted with a service and an access policy implemented via custom code.

- Another option is to require that customers get their own Azure account to house the imageinput and imageoutput containers. That's a bit of an encumbrance in terms of on-boarding, but as an added benefit, it relieves you from having to amortize storage costs across all of the clients you are servicing. The clients own the containers so they'd have the storage keys in hand and bear the responsibility for securing them. You'd probably still want a service front-end to handle the access, but the authentication key (or a shared access signature) would have to be provided by the client to the Photo Mosaics application.
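One way to picture the "single pair of containers fronted with a service and custom access policy" option is a broker that records which client submitted each job and only honors image requests from that client. The sketch below is hypothetical throughout (`JobImageBroker`, its methods, and the in-memory ownership map are not part of the application); a real version would keep ownership records in durable storage, take the client identity from an authenticated call, and stream the blob back rather than hand out a public URI.

```csharp
using System;
using System.Collections.Concurrent;

public class JobImageBroker
{
    // jobId -> ID of the client that submitted the job
    private readonly ConcurrentDictionary<Guid, String> _owners =
        new ConcurrentDictionary<Guid, String>();

    // Would be called at submission time (e.g., alongside SubmitImageForProcessing).
    public void RecordSubmission(Guid jobId, String clientId)
    {
        if (!_owners.TryAdd(jobId, clientId))
            throw new InvalidOperationException("Job already registered: " + jobId);
    }

    // Only the submitting client may retrieve the original image or the mosaic.
    public bool CanAccess(Guid jobId, String requestingClientId)
    {
        return _owners.TryGetValue(jobId, out var owner)
            && String.Equals(owner, requestingClientId, StringComparison.Ordinal);
    }
}
```

With this check in front of the containers, their public access level could be set to Off, since only the service (holding the account key) would read the blobs.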

My current thinking is that a service interface to access the images in imageinput and imageoutput is the way to go. It's a bit more code to write, but it offers the most flexibility in terms of implementation. In fact, I could even bring in the AppFabric Access Control Service to broker access to the service. Thoughts?