Azure@home Part 5: The REST of the Story

This post is part of a series diving into the implementation of the @home With Windows Azure project, which formed the basis of a webcast series by Developer Evangelists Brian Hitney and Jim O’Neil. Be sure to read the introductory post for the context of this and subsequent articles in the series.

In the last post, I covered (in fairly excruciating detail) the implementation of the WebRole code and how it interfaces with the two Azure tables to start and report on the Folding@home work units. The code that was presented makes use of the StorageClient API, which is a LINQ-enabled abstraction of the underlying REST API used by the Azure Table service. Abstractions make our lives as developers much easier, but sometimes you need to dig a bit deeper to better understand behavior, diagnose a bug, or appropriately address performance issues. That’s my goal for this post, which, by the way, also goes into excruciating detail!

The MSDN documentation of course covers all of this, but in this particular post, I’d like to specifically dissect the WebRole StorageClient API calls down to the HTTP request and response level. This post won’t cover every possible contingency, but it should be enough to give you an idea of how the mechanism really works at the wire level and empower you to troubleshoot and analyze performance on your own applications.

Recommendation: Fiddler is a free Web debugging proxy that captures HTTP(S) traffic between your computer and the Internet, and as such is a sine qua non for web developers. It’s the tool I used for this post to capture the traffic between the Azure@home application, running in my local development fabric, and Azure table storage in the cloud. If you’re using Fiddler against local Azure storage, be sure to check out the Configuring Clients page for special instructions on capturing traffic on localhost (127.0.0.1). By the way, if you’re looking for even more extensive network traffic analysis, check out Wireshark.

REST API

As mentioned in earlier posts, all of the storage options (queues, tables, and blobs) are ultimately accessed via a REST API – primarily using the standard HTTP verbs of GET (retrieve), PUT (update), POST (insert), and DELETE (delete) to administer storage. In Azure@home, we’re using only table storage, but the concepts apply in large part to blobs and queues as well (blobs, in particular, have additional authentication schemes).

Background Reading: REST (Representational State Transfer) has become a popular topic in the last couple of years, but its genesis was in the nascent stages of the World Wide Web. The term, describing an architectural style, was coined by Dr. Roy Fielding in his 2000 Ph.D. dissertation. There are now numerous books on the topic, including two I highly recommend: RESTful Web Services and RESTful .NET. As part of our regional Northeast Roadshow, I also covered REST in a presentation and Channel 9 screencast in the Fall of 2008.

In conjunction with the standard HTTP verbs, the Azure storage services API leverages Atom, an XML syndication format (along with the related Atom Publishing Protocol), to provide the payload in the various HTTP messages, like the result set from a GET operation or the property values for a new entity to be added to storage via the POST verb. The Atom dialect used by the Azure table service is really that of WCF Data Services (formerly ADO.NET Data Services and, before that, Astoria) and includes a few extensions to support metadata and other capabilities. At PDC 2009, the protocol was given a name, the Open Data Protocol (OData), and a new, open specification under the Microsoft Open Specification Promise was born. OData includes a number of client bindings (for PHP, Ruby, etc.), and many Microsoft products (SharePoint, Azure, project “Dallas”) as well as other sources (Netflix, WebSphere, …) can expose data via the OData protocol.

REST by Example

Rather than tediously go through the components of the protocol, let’s take the various data access statements covered in the last post and break them down into their HTTP request/response pairs. This should provide a good overview of what's going on under the covers and enough insight so that you’re equipped to do a similar deep dive on your own code. From that last post, I’ve selected the five distinct StorageClient API method calls or property references that result in some type of communication between the Azure@home application and Azure table service:

- Testing for table existence

- Creating table if it doesn’t exist

- Testing for existence of entity in client table

- Saving new entity to client table

- Retrieving all entities from workunit table

1. Testing for table existence

In default.aspx and status.aspx, there are tests to determine if the required Azure tables (client and workunit, respectively) exist; the Page_Load method of status.aspx, for instance, includes the following if statement that executes the DoesTableExist method of the StorageClient API:

if (cloudClient.DoesTableExist("workunit"))

Request

Here’s the HTTP request that went out:

GET https://snowball.table.core.windows.net/Tables('workunit')
User-Agent: Microsoft ADO.NET Data Services
x-ms-version: 2009-09-19
x-ms-date: Sat, 14 Aug 2010 20:14:54 GMT
Authorization: SharedKeyLite snowball:SzZSsBp8Ui74Ro64rLrM/B05adLgo42cLWAxjJV4uZ0=
Accept: application/atom+xml,application/xml
Accept-Charset: UTF-8
DataServiceVersion: 1.0;NetFx
MaxDataServiceVersion: 1.0;NetFx
Host: snowball.table.core.windows.net

The first thing you’ll note is that this is a GET request, which makes sense, since we’re trying to determine if the table exists or not. workunit is a resource in REST parlance, and so this request will either return a representation of the resource or it will fail with a resource-not-found error. In the URL, Tables is a collection of entities, and the parenthetical “workunit” is a key predicate identifying a unique element in that collection (cf. Addressing Entities in the Open Data Protocol specification).

Following are the nine HTTP headers seen in the request above, many of which may look familiar, some of which are there to support OData:

- User-Agent: Microsoft ADO.NET Data Services – signifies that the API is using the ADO.NET Data Services format, which has since been formalized into the Open Data Protocol (OData).
- x-ms-version: 2009-09-19 – indicates which version of the storage service API is being requested.
- x-ms-date: Sat, 14 Aug 2010 20:14:54 GMT – the UTC timestamp for the request. Either this header or the standard HTTP Date header is required for authenticated requests; x-ms-date supersedes Date if both are specified, and the request will be denied if it arrives at the server more than 15 minutes after the request was made.
- Authorization: SharedKeyLite snowball:SzZS… – the authorization scheme and signature. Table storage provides two options, SharedKey and SharedKeyLite. The account name is obvious here, and what follows is a Hash-based Message Authentication Code (HMAC) computed with the storage account key over selected parts of the request (for SharedKeyLite, the date and the canonicalized resource path).
- Accept: application/atom+xml,application/xml – indicates that the response should be XML or, more specifically, Atom (an XML-based format).
- Accept-Charset: UTF-8 – UTF-8 is expected in the response.
- DataServiceVersion: 1.0;NetFx – defines the version of the OData protocol that the request is associated with (along with the type of agent making the request, here the .NET Framework, versus perhaps Ajax).
- MaxDataServiceVersion: 1.0;NetFx – defines the maximum version of the OData protocol that the requestor can handle. This header appears only in requests and essentially tells the server to include only Version 1.0 output in the response.
- Host: snowball.table.core.windows.net – the host is our Azure storage endpoint.

There are actually additional header fields that may or may not be added depending on the context of the request. For example, a POST request would include Content-Type and Content-Length, and it gets more complex when you start dealing with optimistic concurrency (ETag) and conditional requests (If-None-Match and If-Match).
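To make the Authorization header a little less magical, here is a minimal sketch, written as a small C# top-level program using only the BCL’s HMACSHA256, of how a SharedKeyLite signature for a Table service request could be computed. It assumes the Table-service form of the scheme as MSDN describes it: the string to sign is the x-ms-date value, a newline, and the canonicalized resource (/account/path, with query options such as $top left out). SharedKeyLiteHeader and the demo key are hypothetical, so the printed signature will not match the captured one (the real snowball key is, rightly, not published).

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: builds an "Authorization" value of the form "SharedKeyLite account:signature",
// assuming StringToSign = x-ms-date + "\n" + CanonicalizedResource (Table service, SharedKeyLite).
static string SharedKeyLiteHeader(string account, string base64Key, string msDate, string pathAndQuery)
{
    // CanonicalizedResource = "/" + account + URI path. Query options such as $top are not
    // signed (only a comp= parameter would be, and these requests don't use one).
    string path = pathAndQuery.Split('?')[0];
    string stringToSign = msDate + "\n" + "/" + account + path;

    // HMAC-SHA256 keyed with the Base64-decoded account key; the result is Base64-encoded.
    using var hmac = new HMACSHA256(Convert.FromBase64String(base64Key));
    byte[] signature = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
    return $"SharedKeyLite {account}:{Convert.ToBase64String(signature)}";
}

// Demo with a made-up key; a real key comes from your storage account settings.
string demoKey = Convert.ToBase64String(Encoding.UTF8.GetBytes("not-a-real-account-key"));
Console.WriteLine(SharedKeyLiteHeader("snowball", demoKey,
    "Sat, 14 Aug 2010 20:14:54 GMT", "/Tables('workunit')"));
```

Because the date is part of what gets signed, a captured header is only useful until it falls outside the 15-minute window, and producing a fresh signature requires the account key itself.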

Response (success)

HTTP response codes in the 200-299 range are considered successful codes, and in this case, the return code of 200 indicates the entity exists, and the payload provides an Atom document reflecting the requested entity:

HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Type: application/atom+xml;charset=utf-8
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: cacd4004-c4c1-4d02-97bb-9606550fc154
x-ms-version: 2009-09-19
Date: Sat, 14 Aug 2010 21:25:42 GMT
Content-Length: 788

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<entry xml:base="https://snowball.table.core.windows.net/" xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns="http://www.w3.org/2005/Atom">
  <id>https://snowball.table.core.windows.net/Tables('workunit')</id>
  <title type="text"></title>
  <updated>2010-08-14T21:25:43Z</updated>
  <author>
    <name />
  </author>
  <link rel="edit" title="Tables" href="Tables('workunit')" />
  <category term="snowball.Tables" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
  <content type="application/xml">
    <m:properties>
      <d:TableName>workunit</d:TableName>
    </m:properties>
  </content>
</entry>

Response (failure: table doesn’t exist)

What if the table didn't exist? You'd get an HTTP response like the following.

HTTP/1.1 404 Not Found
Cache-Control: no-cache
Content-Type: application/atom+xml
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: 629bd8c8-fb5e-4f8e-88cf-6b7c54758030
x-ms-version: 2009-09-19
Date: Sat, 14 Aug 2010 21:29:50 GMT
Content-Length: 337

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<error xmlns="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <code>ResourceNotFound</code>
  <message xml:lang="en-US">The specified resource does not exist.
RequestId:629bd8c8-fb5e-4f8e-88cf-6b7c54758030
Time:2010-08-14T21:29:51.3087221Z</message>
</error>

The underlying StorageClient API has the wiring needed to turn this 404 code into a false return value for the DoesTableExist API, just as it turns a 200 status code into a true return value.

Response (failure: invalid credentials)

What, though, if something more catastrophic happens: the request times out, there’s a server error, or perhaps (as below) the wrong credentials were supplied to the CloudTableClient constructor?

HTTP/1.1 403 Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.
Content-Length: 437
Content-Type: application/xml
Server: Microsoft-HTTPAPI/2.0
x-ms-request-id: 435d3d43-059e-4d97-a8da-8a4ec50b0683
Date: Sat, 14 Aug 2010 21:35:37 GMT

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<error xmlns="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <code>AuthenticationFailed</code>
  <message xml:lang="en-US">Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.
RequestId:435d3d43-059e-4d97-a8da-8a4ec50b0683
Time:2010-08-14T21:35:37.3050726Z</message>
</error>

The response to that last scenario is a failed authentication (status code 403), and that bubbles up to the StorageClient API as a StorageClientException, which includes elements of the HTTP response in the ExtendedErrorInformation property and the 403 Forbidden as the StatusCode property. Of course, in the current implementation of both default.aspx and status.aspx, this exception isn’t caught, so that pretty much brings down the application in flames!
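The three outcomes above (200, 404, and everything else) can be sketched as follows. This is not the StorageClient implementation: ParseError and InterpretTableLookup are hypothetical helpers, and InvalidOperationException stands in for StorageClientException. The point is simply that a 404 is an expected answer for an existence check, while other failures carry a machine-readable code in the error body that is worth surfacing rather than letting the page go down in flames.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper: extract <code> and <message> from a Table service error body.
static (string Code, string Message) ParseError(string body)
{
    // Namespace URI as sent on the wire (http://, not https://).
    XNamespace meta = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata";
    XElement error = XDocument.Parse(body).Root!;
    return ((string?)error.Element(meta + "code") ?? "",
            ((string?)error.Element(meta + "message") ?? "").Trim());
}

// Hypothetical helper mirroring DoesTableExist: 200 -> true, 404 -> false, anything else
// (e.g. 403 AuthenticationFailed) -> an exception carrying the service's error code.
static bool InterpretTableLookup(int status, string body)
{
    if (status == 200) return true;
    if (status == 404) return false;
    var (code, message) = ParseError(body);
    throw new InvalidOperationException($"HTTP {status} {code}: {message}");
}

string notFound = @"<?xml version=""1.0"" encoding=""utf-8"" standalone=""yes""?>
<error xmlns=""http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"">
  <code>ResourceNotFound</code>
  <message xml:lang=""en-US"">The specified resource does not exist.</message>
</error>";
Console.WriteLine(ParseError(notFound).Code);           // ResourceNotFound
Console.WriteLine(InterpretTableLookup(404, notFound)); // False
```

The same ParseError works for the other error bodies in this post, such as the 409 EntityAlreadyExists response discussed later.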

2. Creating table if it doesn’t exist

In both default.aspx and status.aspx, the method CreateTableIfNotExist actually precedes the call to DoesTableExist:

cloudClient.CreateTableIfNotExist("workunit");

if (cloudClient.DoesTableExist("workunit"))

As you might expect, CreateTableIfNotExist must first check whether the table is already there, and only if it is not will it create the new table in Azure storage. As a result, the method call may issue two HTTP requests. The first is identical to the one we saw above for DoesTableExist, and only if it results in a 404 (Not Found) status code is a second call made to create the workunit (or client) table. We’ll look at that second request below.
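The decision logic just described can be sketched with the two HTTP calls abstracted as delegates. The lookup and create delegates and the in-memory HashSet are hypothetical stand-ins for the GET on Tables('name') and the POST to Tables; treating a 409 on the create as “someone else just created it” is an assumption about how a race could be handled, not a description of the StorageClient code.

```csharp
using System;
using System.Collections.Generic;

// Sketch of the two-request flow. lookup(table) returns the status of GET Tables('table');
// create(table) returns the status of POST Tables.
static bool CreateTableIfNotExist(string table, Func<string, int> lookup, Func<string, int> create)
{
    int status = lookup(table);
    if (status == 200) return false;          // already exists: one request, nothing created
    if (status != 404) throw new InvalidOperationException($"Lookup failed: HTTP {status}");

    status = create(table);                   // second request only after a 404
    if (status == 201) return true;           // 201 Created
    if (status == 409) return false;          // assumption: another client won the race
    throw new InvalidOperationException($"Create failed: HTTP {status}");
}

// Usage against an in-memory stand-in for the Tables collection.
var tables = new HashSet<string>();
var requests = new List<string>();
Func<string, int> lookupTable = t =>
{
    requests.Add($"GET Tables('{t}')");
    return tables.Contains(t) ? 200 : 404;
};
Func<string, int> createTable = t =>
{
    requests.Add("POST Tables");
    return tables.Add(t) ? 201 : 409;
};

Console.WriteLine(CreateTableIfNotExist("workunit", lookupTable, createTable)); // True  (GET + POST)
Console.WriteLine(CreateTableIfNotExist("workunit", lookupTable, createTable)); // False (GET only)
Console.WriteLine(requests.Count);                                             // 3
```

Note that after the table exists, every page load still pays for one round trip, and in default.aspx and status.aspx the DoesTableExist call that follows adds another identical GET.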

Request

Since we’re requesting that something be created, the HTTP verb POST is used, and the payload of the request includes information on what entity is to be created. The URL specifies a container resource to which that new entity, here a table, will be added.

POST https://snowball.table.core.windows.net/Tables HTTP/1.1
User-Agent: Microsoft ADO.NET Data Services
x-ms-version: 2009-09-19
x-ms-date: Sat, 14 Aug 2010 23:27:21 GMT
Authorization: SharedKeyLite snowball:wpgl+xiOWBCZ0MwHiPBjFcv9G+OJydbDvMzRJhuLCJU=
Accept: application/atom+xml,application/xml
Accept-Charset: UTF-8
DataServiceVersion: 1.0;NetFx
MaxDataServiceVersion: 1.0;NetFx
Content-Type: application/atom+xml
Host: snowball.table.core.windows.net
Content-Length: 494

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<entry xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns="http://www.w3.org/2005/Atom">
  <title />
  <updated>2010-08-14T23:27:21.9446828Z</updated>
  <author>
    <name />
  </author>
  <id />
  <content type="application/xml">
    <m:properties>
      <d:TableName>workunit</d:TableName>
    </m:properties>
  </content>
</entry>

Response (success)

In response to successfully creating an object, the return code is not 200, but rather 201 (Created):

HTTP/1.1 201 Created
Cache-Control: no-cache
Content-Type: application/atom+xml;charset=utf-8
Location: https://snowball.table.core.windows.net/Tables('workunit')
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: fa6659c6-83f3-4fdc-84f5-99a73057586f
x-ms-version: 2009-09-19
Date: Sat, 14 Aug 2010 23:27:22 GMT
Content-Length: 788

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<entry xml:base="https://snowball.table.core.windows.net/" xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns="http://www.w3.org/2005/Atom">
  <id>https://snowball.table.core.windows.net/Tables('workunit')</id>
  <title type="text"></title>
  <updated>2010-08-14T23:27:22Z</updated>
  <author>
    <name />
  </author>
  <link rel="edit" title="Tables" href="Tables('workunit')" />
  <category term="snowball.Tables" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
  <content type="application/xml">
    <m:properties>
      <d:TableName>workunit</d:TableName>
    </m:properties>
  </content>
</entry>

In this response, note specifically the Location header. For a 201 response, the HTTP specification (RFC 2616) states:

The newly created resource can be referenced by the URI(s) returned in the entity of the response, with the most specific URI for the resource given by a Location header field.

The specification also notes that

The response SHOULD include an entity containing a list of resource characteristics and location(s) from which the user or user agent can choose the one most appropriate.

and indeed the body of the response includes a link element specifying the location of the resource as well as additional tags reflecting the properties or ‘resource characteristics’.

3. Testing for existence of entity in client table

This test is made in two places with practically identical code; it first appears in the default.aspx Page_Load to determine whether the application has already begun the folding client processes.

if (ctx.Clients.FirstOrDefault<ClientInformation>() != null)
    Response.Redirect("/Status.aspx");

The request here begins with the ctx.Clients property, which is actually implemented in AzureAtHomeEntities.cs as

public IQueryable<ClientInformation> Clients
{
    get
    {
        return this.CreateQuery<ClientInformation>("client");
    }
}

Because this is an IQueryable<T>, you can further compose the query on the client side before it’s actually executed. You can think of CreateQuery as setting up the URI for the resulting HTTP request (e.g., https://snowball.table.core.windows.net/client), and anything added later, say via LINQ extension methods, becomes a query option on that URL.

With the StorageClient API, you can use a few LINQ query operators to further qualify the query to the Azure table service, and here I’ve opted for FirstOrDefault to further refine the base URI.

Request

The HTTP request issued then looks like this:

GET https://snowball.table.core.windows.net/client()?$top=1 HTTP/1.1
User-Agent: Microsoft ADO.NET Data Services
x-ms-version: 2009-09-19
x-ms-date: Sun, 15 Aug 2010 00:10:04 GMT
Authorization: SharedKeyLite snowball:FRtfAyqfFb24EziANO8uz7Zd0x3vv+kWh7vPtGnBSEE=
Accept: application/atom+xml,application/xml
Accept-Charset: UTF-8
DataServiceVersion: 1.0;NetFx
MaxDataServiceVersion: 1.0;NetFx
Host: snowball.table.core.windows.net
Connection: Keep-Alive

Note the $top=1 query parameter, indicating that the server should return only the first element from the requested entity collection. $top is one of the system query options supported by the Open Data Protocol and is also used to implement the LINQ Take operator (note that First and FirstOrDefault can be thought of as special cases of Take).
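As a rough illustration of that composition, the hypothetical BuildQueryUri helper below assembles the same kinds of URIs by hand: Take(n), First, and FirstOrDefault map to $top, a Where clause would map to $filter, and with no options the bare collection is requested. The “()” after the table name when options are present simply mirrors the two GETs captured in this post; this is a sketch, not the code the Data Services client actually runs.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: compose a Table service query URI by hand.
// top corresponds to Take(n)/First()/FirstOrDefault(); filter to a Where clause.
static string BuildQueryUri(string account, string table, int? top = null, string? filter = null)
{
    var options = new List<string>();
    if (filter != null) options.Add("$filter=" + Uri.EscapeDataString(filter));
    if (top.HasValue) options.Add("$top=" + top.Value);

    string collection = $"https://{account}.table.core.windows.net/{table}";
    // With query options the captured request used "client()?..."; without, just "workunit".
    return options.Count == 0 ? collection : collection + "()?" + string.Join("&", options);
}

Console.WriteLine(BuildQueryUri("snowball", "client", top: 1));
// https://snowball.table.core.windows.net/client()?$top=1
Console.WriteLine(BuildQueryUri("snowball", "workunit"));
// https://snowball.table.core.windows.net/workunit
```

Seeing the URI this way makes it clear which parts of a LINQ expression run on the server: anything that cannot be expressed as a query option has to be evaluated on the client after the data comes back.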

Response (success: entity exists)

If there is an entity (row) in the table, the GET simply returns information about that particular entity, with a 200 status code.

HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Type: application/atom+xml;charset=utf-8
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: 35ddbd4c-ae27-4d5e-992f-ce7ef1771447
x-ms-version: 2009-09-19
Date: Sun, 15 Aug 2010 00:10:03 GMT
Content-Length: 1544

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<feed xml:base="https://snowball.table.core.windows.net/" xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns="http://www.w3.org/2005/Atom">
  <title type="text">client</title>
  <id>https://snowball.table.core.windows.net/client</id>
  <updated>2010-08-15T00:10:03Z</updated>
  <link rel="self" title="client" href="client" />
  <entry m:etag="W/&quot;datetime'2010-08-10T22%3A17%3A20.7946461Z'&quot;">
    <id>https://snowball.table.core.windows.net/client(PartitionKey='jimoneil',RowKey='')</id>
    <title type="text"></title>
    <updated>2010-08-15T00:10:03Z</updated>
    <author>
      <name />
    </author>
    <link rel="edit" title="client" href="client(PartitionKey='jimoneil',RowKey='')" />
    <category term="snowball.client" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
    <content type="application/xml">
      <m:properties>
        <d:PartitionKey>jimoneil</d:PartitionKey>
        <d:RowKey></d:RowKey>
        <d:Timestamp m:type="Edm.DateTime">2010-08-10T22:17:20.7946461Z</d:Timestamp>
        <d:Latitude m:type="Edm.Double">42.033</d:Latitude>
        <d:Longitude m:type="Edm.Double">-90.0172</d:Longitude>
        <d:PassKey></d:PassKey>
        <d:ServerName>127.0.0.1</d:ServerName>
        <d:Team>184157</d:Team>
        <d:UserName>jimoneil</d:UserName>
      </m:properties>
    </content>
  </entry>
</feed>

Response (failure: entity does not exist)

If there’s no entity in the table, you might expect the request to result in a 404 (Not Found) status code, since indeed there is no data in the table. Instead though, you get a success return code and a payload that has a feed element, but no entry elements.

HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Type: application/atom+xml;charset=utf-8
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: c6c6612a-249a-4c00-a4ad-cf373bad606a
x-ms-version: 2009-09-19
Date: Sun, 15 Aug 2010 00:26:34 GMT
Content-Length: 486

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<feed xml:base="https://snowball.table.core.windows.net/" xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns="http://www.w3.org/2005/Atom">
  <title type="text">client</title>
  <id>https://snowball.table.core.windows.net/client</id>
  <updated>2010-08-15T00:26:34Z</updated>
  <link rel="self" title="client" href="client" />
</feed>

If that strikes you as counterintuitive, just think back to what happens in your favorite RDBMS when a SELECT statement issued against a table returns no rows. Do you get an error? No, you simply get an empty result set, and that’s precisely what’s happening here.
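Seen from the client, an empty result is just a feed with zero entry elements. The hypothetical ReadEntities helper below sketches how such a feed could be turned into one property dictionary per entry with LINQ to XML; an empty list is exactly the case in which FirstOrDefault returns null (and default.aspx skips the redirect). The sketch ignores the m:type attributes and returns raw text values, which a real materializer would convert to CLR types.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper: one dictionary (property name -> raw text) per <entry> in an Atom feed.
static List<Dictionary<string, string>> ReadEntities(string atomFeed)
{
    // Namespace URIs as sent on the wire (http://, not https://).
    XNamespace atom = "http://www.w3.org/2005/Atom";
    XNamespace m = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata";

    return XDocument.Parse(atomFeed).Root!
        .Elements(atom + "entry")
        .Select(entry => entry.Element(atom + "content")!
                              .Element(m + "properties")!
                              .Elements()
                              .ToDictionary(p => p.Name.LocalName, p => p.Value))
        .ToList();
}

// The "no rows" response: a feed with no <entry> elements.
string emptyFeed = @"<feed xmlns=""http://www.w3.org/2005/Atom"">
  <title type=""text"">client</title>
  <id>https://snowball.table.core.windows.net/client</id>
</feed>";
var clients = ReadEntities(emptyFeed);
Console.WriteLine(clients.Count);                    // 0
Console.WriteLine(clients.FirstOrDefault() == null); // True
```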

4. Saving new entity to client table

In default.aspx, a new entity is added to the client table via the TableServiceContext when the page is submitted. The key method here is SaveChanges, but that method can emit multiple HTTP requests depending on the nature of the changes to the entities in the underlying data context. In this case, the only object being tracked by the context is a new one, added via the AddObject call.

var ctx = new ClientDataContext(
    cloudStorageAccount.TableEndpoint.ToString(),
    cloudStorageAccount.Credentials);

ctx.AddObject("client",
    new ClientInformation(
        txtName.Text,
        PASSKEY,
        litTeam.Text,
        Double.Parse(txtLatitudeValue.Value),
        Double.Parse(txtLongitudeValue.Value),
        Request.ServerVariables["SERVER_NAME"]));

ctx.SaveChanges();

Request

Since a new object is being added to an existing collection, it should be no surprise that the resulting HTTP request is a POST, with the information for the new entity provided in the HTTP body in Atom format.

POST https://snowball.table.core.windows.net/client HTTP/1.1
User-Agent: Microsoft ADO.NET Data Services
x-ms-version: 2009-09-19
x-ms-date: Sun, 15 Aug 2010 00:52:48 GMT
Authorization: SharedKeyLite snowball:AN1LU50G3pww6KqMeAudMx7vmNKYWo/c5FrwO/eAJIY=
Accept: application/atom+xml,application/xml
Accept-Charset: UTF-8
DataServiceVersion: 1.0;NetFx
MaxDataServiceVersion: 1.0;NetFx
Content-Type: application/atom+xml
Host: snowball.table.core.windows.net
Content-Length: 887
Connection: Keep-Alive

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<entry xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns="http://www.w3.org/2005/Atom">
  <title />
  <updated>2010-08-15T00:52:48.3338955Z</updated>
  <author>
    <name />
  </author>
  <id />
  <content type="application/xml">
    <m:properties>
      <d:Latitude m:type="Edm.Double">40.246</d:Latitude>
      <d:Longitude m:type="Edm.Double">-88.0836</d:Longitude>
      <d:PartitionKey>jimoneil</d:PartitionKey>
      <d:PassKey m:null="false" />
      <d:RowKey m:null="false" />
      <d:ServerName>127.0.0.1</d:ServerName>
      <d:Team>184157</d:Team>
      <d:Timestamp m:type="Edm.DateTime">0001-01-01T00:00:00</d:Timestamp>
      <d:UserName>jimoneil</d:UserName>
    </m:properties>
  </content>
</entry>
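The body of that POST is mechanical enough to generate yourself. Here’s a sketch (BuildInsertEntry is a hypothetical name, and it uses LINQ to XML rather than the Data Services client) that emits an Atom entry in which strings go out untyped while doubles, integers, and DateTimes carry the m:type annotations seen above. It does not reproduce the m:null attributes or the exact property ordering of the captured request.

```csharp
using System;
using System.Globalization;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper: build the Atom <entry> body for an insert POST.
// Strings are sent untyped; double, int and DateTime values get an m:type annotation.
static string BuildInsertEntry(params (string Name, object Value)[] properties)
{
    // Namespace URIs as sent on the wire (http://, not https://).
    XNamespace atom = "http://www.w3.org/2005/Atom";
    XNamespace d = "http://schemas.microsoft.com/ado/2007/08/dataservices";
    XNamespace m = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata";

    var props = new XElement(m + "properties");
    foreach (var (name, value) in properties)
    {
        var element = new XElement(d + name);
        switch (value)
        {
            case double x:
                element.Add(new XAttribute(m + "type", "Edm.Double"), x.ToString("R", CultureInfo.InvariantCulture));
                break;
            case int i:
                element.Add(new XAttribute(m + "type", "Edm.Int32"), i.ToString(CultureInfo.InvariantCulture));
                break;
            case DateTime t:
                element.Add(new XAttribute(m + "type", "Edm.DateTime"), t.ToString("o", CultureInfo.InvariantCulture));
                break;
            default:
                element.Add(value?.ToString() ?? "");
                break;
        }
        props.Add(element);
    }

    // Declaring the d: and m: prefixes on the root keeps the serialized output readable.
    var entry = new XElement(atom + "entry",
        new XAttribute(XNamespace.Xmlns + "d", d.NamespaceName),
        new XAttribute(XNamespace.Xmlns + "m", m.NamespaceName),
        new XElement(atom + "title"),
        new XElement(atom + "updated", DateTime.UtcNow.ToString("o", CultureInfo.InvariantCulture)),
        new XElement(atom + "author", new XElement(atom + "name")),
        new XElement(atom + "id"),
        new XElement(atom + "content", new XAttribute("type", "application/xml"), props));
    return entry.ToString();
}

Console.WriteLine(BuildInsertEntry(
    ("PartitionKey", "jimoneil"), ("RowKey", ""),
    ("Latitude", 40.246), ("Longitude", -88.0836), ("Team", "184157")));
```

A table-creation POST, like the one shown in the previous section, has the same shape with a single TableName property, e.g. BuildInsertEntry(("TableName", "workunit")).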

Response (success: entity added to table)

This scenario is analogous to the creation of the client and workunit tables discussed above. There, a new resource (a table) was being added to the Tables container resource; here, a new resource (an entity with the properties above) is being added to the client container resource. In both cases the result is a 201 status code.

Recall too, how the Location header reflects the URI by which the newly created resource is accessible. Here note that the entity is identified by the combination of the PartitionKey (with value “jimoneil”) and the RowKey (left blank); those, of course, are the two properties that make up the unique index for a given entity in an Azure table.

HTTP/1.1 201 Created
Cache-Control: no-cache
Content-Type: application/atom+xml;charset=utf-8
ETag: W/"datetime'2010-08-15T00%3A52%3A48.7553289Z'"
Location: https://snowball.table.core.windows.net/client(PartitionKey='jimoneil',RowKey='')
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: 5686c1a4-e7cd-40c9-8726-9311726bdabc
x-ms-version: 2009-09-19
Date: Sun, 15 Aug 2010 00:52:48 GMT
Content-Length: 1291

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<entry xml:base="https://snowball.table.core.windows.net/" xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" m:etag="W/&quot;datetime'2010-08-15T00%3A52%3A48.7553289Z'&quot;" xmlns="http://www.w3.org/2005/Atom">
  <id>https://snowball.table.core.windows.net/client(PartitionKey='jimoneil',RowKey='')</id>
  <title type="text"></title>
  <updated>2010-08-15T00:52:48Z</updated>
  <author>
    <name />
  </author>
  <link rel="edit" title="client" href="client(PartitionKey='jimoneil',RowKey='')" />
  <category term="snowball.client" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
  <content type="application/xml">
    <m:properties>
      <d:PartitionKey>jimoneil</d:PartitionKey>
      <d:RowKey></d:RowKey>
      <d:Timestamp m:type="Edm.DateTime">2010-08-15T00:52:48.7553289Z</d:Timestamp>
      <d:Latitude m:type="Edm.Double">40.246</d:Latitude>
      <d:Longitude m:type="Edm.Double">-88.0836</d:Longitude>
      <d:PassKey></d:PassKey>
      <d:ServerName>127.0.0.1</d:ServerName>
      <d:Team>184157</d:Team>
      <d:UserName>jimoneil</d:UserName>
    </m:properties>
  </content>
</entry>
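Because the key predicate is right there in the Location header (and in each entry’s id), a client can recover the entity’s keys without parsing the body. The sketch below is a hypothetical helper, not part of the StorageClient API; it assumes the PartitionKey='x',RowKey='y' form shown above, percent-decodes the values, and un-doubles embedded quotes per the OData string-literal rules.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper: recover PartitionKey and RowKey from an entity URI such as
// https://snowball.table.core.windows.net/client(PartitionKey='jimoneil',RowKey='')
static (string PartitionKey, string RowKey) ParseEntityKey(string entityUri)
{
    // Each value is a quoted OData string literal: '' inside the quotes is an escaped quote.
    Match match = Regex.Match(entityUri, @"\(PartitionKey='((?:[^']|'')*)',RowKey='((?:[^']|'')*)'\)$");
    if (!match.Success) throw new FormatException("Not an entity URI: " + entityUri);

    // Values are percent-encoded in the URI (e.g. %7C for '|').
    static string Decode(string raw) => Uri.UnescapeDataString(raw).Replace("''", "'");
    return (Decode(match.Groups[1].Value), Decode(match.Groups[2].Value));
}

var key = ParseEntityKey("https://snowball.table.core.windows.net/client(PartitionKey='jimoneil',RowKey='')");
Console.WriteLine($"PartitionKey={key.PartitionKey}, RowKey='{key.RowKey}'"); // PartitionKey=jimoneil, RowKey=''
```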

The eagle-eyed among you may have spotted a new header, ETag, in the response above; its value is derived from the Timestamp property present on every entity in an Azure table. An ETag identifies a specific version of a resource and is used to implement optimistic concurrency in conjunction with another HTTP request header, If-Match. The code in Azure@home doesn’t really lend itself to a discussion of concurrency without introducing some contrivances, so I’ll defer to Mike Taulty’s clear and succinct blog post on the ETag/If-Match ‘dance’ in the context of ADO.NET Data Services; the concept applies to Azure storage as well.
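Here’s a small sketch of both halves of that story. TimestampFromETag decodes the weak ETag shown above back into the entity’s Timestamp, and Update is a hypothetical in-memory stand-in for the server side of the If-Match check: it applies a change only when the caller’s ETag still matches (or is “*”), answering 204 No Content on success and 412 Precondition Failed otherwise, which are the status codes the Table service uses for updates. The dictionary store and the made-up later ETags are illustrations only.

```csharp
using System;
using System.Collections.Generic;

// Decode a Table service weak ETag such as W/"datetime'2010-08-15T00%3A52%3A48.7553289Z'"
// back into the Timestamp value it was derived from.
static string TimestampFromETag(string etag)
{
    const string prefix = "W/\"datetime'";
    const string suffix = "'\"";
    if (!etag.StartsWith(prefix, StringComparison.Ordinal) || !etag.EndsWith(suffix, StringComparison.Ordinal))
        throw new FormatException("Unexpected ETag format: " + etag);
    string encoded = etag.Substring(prefix.Length, etag.Length - prefix.Length - suffix.Length);
    return Uri.UnescapeDataString(encoded);
}

// Hypothetical in-memory stand-in for the server side of an If-Match check.
var store = new Dictionary<string, string>
{
    ["client(jimoneil)"] = "W/\"datetime'2010-08-15T00%3A52%3A48.7553289Z'\""
};

int Update(string key, string ifMatch, string newETag)
{
    if (ifMatch != "*" && store[key] != ifMatch)
        return 412;          // Precondition Failed: the entity changed since the caller read it
    store[key] = newETag;    // apply the change and hand out a new version
    return 204;              // No Content: update succeeded
}

// Two clients read the same version, then both try to update it.
string readByBoth = store["client(jimoneil)"];
Console.WriteLine(TimestampFromETag(readByBoth)); // 2010-08-15T00:52:48.7553289Z

string v2 = "W/\"datetime'2010-08-15T01%3A00%3A00Z'\"";   // made-up next version
string v3 = "W/\"datetime'2010-08-15T01%3A00%3A05Z'\"";   // made-up
Console.WriteLine(Update("client(jimoneil)", readByBoth, v2)); // 204
Console.WriteLine(Update("client(jimoneil)", readByBoth, v3)); // 412
```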

Response (failure: entity already exists)

In the code for default.aspx, there is a very remote chance that two separate clients could submit the page at nearly the same time (and with the same value in the name field), with the result that an attempt would be made to insert two entities into the client table with the same unique key. The first request would follow the success path shown directly above, and the second request would attempt the same POST operation shortly thereafter.

The result would be a DataServiceRequestException occurring on the call to SaveChanges. The exception wraps an InnerException of type DataServiceClientException that indicates the entity already exists (StatusCode 409). Here’s the HTTP response that leads to that exception:

HTTP/1.1 409 Conflict
Cache-Control: no-cache
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: 87218aa6-2cf6-47e0-a462-cac29176ddb1
x-ms-version: 2009-09-19
Date: Sun, 15 Aug 2010 02:26:27 GMT
Content-Length: 338

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<error xmlns="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <code>EntityAlreadyExists</code>
  <message xml:lang="en-US">The specified entity already exists.
RequestId:87218aa6-2cf6-47e0-a462-cac29176ddb1
Time:2010-08-15T02:26:28.7783982Z</message>
</error>

5. Retrieving all entities from workunit table

status.aspx displays progress for ongoing work units as well as the history of completed work units; the data for both are contained in the workunit table, with the value of a property, CompleteTime, used to differentiate in-process from completed units. The implementation selects all of the data (as shown in the line below), materializes it via ToList, and then uses LINQ to Objects to apply the CompleteTime filter when assigning the DataSource property for the ASP.NET display elements.

var workUnitList = ctx.WorkUnits.ToList<WorkUnit>();

ctx.WorkUnits is defined as an IQueryable<WorkUnit> property in AzureAtHomeEntities.cs (similar to ctx.Clients, discussed above).

public IQueryable<WorkUnit> WorkUnits
{
    get
    {
        return this.CreateQuery<WorkUnit>("workunit");
    }
}

Request

The request here is pretty similar to getting the first record from the client table above, with the exception that here there is no qualifying query parameter, like $top.

GET https://snowball.table.core.windows.net/workunit HTTP/1.1
User-Agent: Microsoft ADO.NET Data Services
x-ms-version: 2009-09-19
x-ms-date: Sun, 15 Aug 2010 20:29:57 GMT
Authorization: SharedKeyLite snowball:MfJOuaJssUJAFRCTr53nGAaupXo9hS/ti5/PZy/OT8Q=
Accept: application/atom+xml,application/xml
Accept-Charset: UTF-8
DataServiceVersion: 1.0;NetFx
MaxDataServiceVersion: 1.0;NetFx
Host: snowball.table.core.windows.net

Response

The (truncated) response here looks fairly similar to the one for the client record above, except that there’s a LOT more data: 1.4 MB of it, in fact… and that’s not even all of the data! This response includes 1000 entities, the maximum that a single query request against the Azure table service can return.

HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Type: application/atom+xml;charset=utf-8
Server: Windows-Azure-Table/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: 6296fdbb-9a06-45dc-8105-8cc9a6dc0cf4
x-ms-version: 2009-09-19
x-ms-continuation-NextPartitionKey: 1!56!ZGVwbG95bWVudCgxOTIpLkF6dXJlQXRIb21lLldvcmtlclJvbGUuMA--
x-ms-continuation-NextRowKey: 1!64!TW9jay02MzQxNzQ4ODE3OTYwNzE5NTZ8MDk3NXxBdWd1c3QgMTUgMDQ6NTY6MTk-
Date: Tue, 17 Aug 2010 02:18:04 GMT
Content-Length: 1406564

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<feed xml:base="https://snowball.table.core.windows.net/" xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns="http://www.w3.org/2005/Atom">
  <title type="text">workunit</title>
  <id>https://snowball.table.core.windows.net/workunit</id>
  <updated>2010-08-17T02:18:05Z</updated>
  <link rel="self" title="workunit" href="workunit" />
  <entry m:etag="W/&quot;datetime'2010-08-15T20%3A24%3A45.237658Z'&quot;">
    <id>https://snowball.table.core.windows.net/workunit(PartitionKey='deployment(191).AzureAtHome.WorkerRole.0',RowKey='Mock-634174861222394794%7CTag%7CAugust%2015%2004%3A22%3A02')</id>
    <title type="text"></title>
    <updated>2010-08-17T02:18:05Z</updated>
    <author>
      <name />
    </author>
    <link rel="edit" title="workunit" href="workunit(PartitionKey='deployment(191).AzureAtHome.WorkerRole.0',RowKey='Mock-634174861222394794%7CTag%7CAugust%2015%2004%3A22%3A02')" />
    <category term="snowball.workunit" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
    <content type="application/xml">
      <m:properties>
        <d:PartitionKey>deployment(191).AzureAtHome.WorkerRole.0</d:PartitionKey>
        <d:RowKey>Mock-634174861222394794|Tag|August 15 04:22:02</d:RowKey>
        <d:Timestamp m:type="Edm.DateTime">2010-08-15T20:24:45.237658Z</d:Timestamp>
        <d:CompleteTime m:type="Edm.DateTime">2010-08-15T20:24:45.0257564Z</d:CompleteTime>
        <d:DownloadTime>August 15 04:22:02</d:DownloadTime>
        <d:InstanceId>deployment(191).AzureAtHome.WorkerRole.0</d:InstanceId>
        <d:Name>Mock-634174861222394794</d:Name>
        <d:Progress m:type="Edm.Int32">100</d:Progress>
        <d:StartTime m:type="Edm.DateTime">2010-08-15T20:22:04.1736728Z</d:StartTime>
        <d:Tag>Tag</d:Tag>
      </m:properties>
    </content>
  </entry>
  <entry m:etag="W/&quot;datetime'2010-08-15T20%3A27%3A27.7234078Z'&quot;">
    <id>https://snowball.table.core.windows.net/workunit(PartitionKey='deployment(191).AzureAtHome.WorkerRole.0',RowKey='Mock-634174862856538192%7CTag%7CAugust%2015%2004%3A24%3A45')</id>
    <title type="text"></title>
    <updated>2010-08-17T02:18:05Z</updated>
    <author>
      <name />
...

So what if you truly need to bring down more data than that in one fell swoop, or what if you want to page through all available data? That’s where continuation tokens come in. You’ll notice the response above includes two new headers:

x-ms-continuation-NextPartitionKey: 1!56!ZGVwbG95bWVudCgxOTIpLkF6dXJlQXRIb21lLldvcmtlclJvbGUuMA--
x-ms-continuation-NextRowKey: 1!64!TW9jay02MzQxNzQ4ODE3OTYwNzE5NTZ8MDk3NXxBdWd1c3QgMTUgMDQ6NTY6MTk-

These indicate, in an opaque encoded form, where this segment of 1000 entities left off. The current implementation in Azure@home does not inspect these headers to determine whether more results are available, but that could be implemented in a couple of different ways:

- By checking for the presence of these headers in a response and including the same header values in subsequent requests. See the Query Timeout and Pagination topic on MSDN for one example of how to accomplish this. Steve Marx also covered this in a (dated, but still valid) blog post.

- Utilizing the new CloudTableQuery class in the StorageClient API.
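The first option can be sketched as a loop that keeps issuing GETs, echoing the two header values back as NextPartitionKey and NextRowKey query parameters, until a response arrives without them. The fetch delegate is a hypothetical transport (returning the rows plus the two header values, or null when a header is absent), and the fake server’s “pk/rk” tokens are made up; real tokens are opaque and should be passed back verbatim, URL-encoded.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Keep requesting until a response comes back without continuation headers.
// fetch(uri) returns (rows, NextPartitionKey header, NextRowKey header); null means "absent".
static List<T> QueryAll<T>(string baseUri, Func<string, (List<T> Rows, string? NextPk, string? NextRk)> fetch)
{
    var all = new List<T>();
    string uri = baseUri;
    string separator = baseUri.Contains("?") ? "&" : "?";
    while (true)
    {
        var (rows, nextPk, nextRk) = fetch(uri);
        all.AddRange(rows);
        if (nextPk == null) return all;   // no continuation: the result set is complete

        // Echo the tokens back as query parameters on the next GET.
        uri = baseUri + separator + "NextPartitionKey=" + Uri.EscapeDataString(nextPk);
        if (nextRk != null) uri += "&NextRowKey=" + Uri.EscapeDataString(nextRk);
    }
}

// Fake server: 6336 rows, at most 1000 per response, with made-up tokens.
const int total = 6336, pageSize = 1000;
var requested = new List<string>();
(List<int> Rows, string? NextPk, string? NextRk) FakeFetch(string uri)
{
    requested.Add(uri);
    const string marker = "NextRowKey=rk";
    int at = uri.IndexOf(marker);
    int start = at < 0 ? 0 : int.Parse(uri.Substring(at + marker.Length));
    var rows = Enumerable.Range(start, Math.Min(pageSize, total - start)).ToList();
    int next = start + rows.Count;
    if (next < total) return (rows, "pk" + next, "rk" + next);
    return (rows, null, null);
}

var allRows = QueryAll<int>("https://snowball.table.core.windows.net/workunit", FakeFetch);
Console.WriteLine($"{allRows.Count} rows in {requested.Count} requests"); // 6336 rows in 7 requests
```

With the same row count as the workunit table in this post, the loop makes seven requests: six full segments of 1000 plus a final segment of 336 that carries no continuation headers.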

As a quick, ‘don’t do this at home’ example, I updated the current implementation by converting the existing query to a CloudTableQuery via the AsTableServiceQuery extension method:

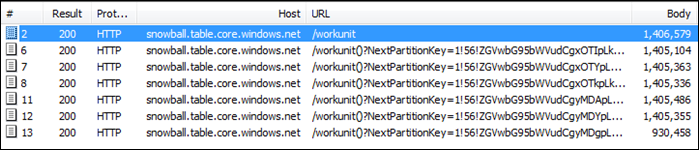

var workUnitList = ctx.WorkUnits.AsTableServiceQuery().ToList();

Materializing this query via ToList forces all of the table contents to be retrieved. From the Fiddler session list below, you can see that seven individual HTTP requests were issued (resulting in 6336 rows). The responses to the first six requests include the two continuation token headers so that each subsequent request can, in turn, include those values as part of the URL query string. The final response doesn’t include the headers, signifying that the query results are complete.

That’s, of course, a brute-force mechanism, and it somewhat defeats the purpose of capping an individual response at 1000 entities. There is an overload of CloudTableQuery’s Execute method that accepts a ContinuationToken parameter, but that just enables you to change the starting point of the retrieval; it still continues to issue HTTP GETs until the query results are completely retrieved.

The right way to do this is to employ the asynchronous method pair, BeginExecuteSegmented and EndExecuteSegmented, as part of an asynchronous Web request. For some insight into this pattern, check out Scott Densmore’s blog post and example. In my next post, I’ll walk through this important pattern by modifying the existing status.aspx page to support pagination of completed work unit results.
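Stripped of the asynchronous plumbing, the segmented idea looks roughly like the sketch below: each call issues exactly one GET and hands the continuation back to the caller (for a Web page, something to keep in view state or the query string between requests) instead of following it. FetchSegment, its delegate-based transport, and the fake 2,500-row server are hypothetical; this is not the BeginExecuteSegmented/EndExecuteSegmented API itself.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One GET per call: return at most one segment plus the continuation (null when done).
static (List<T> Rows, (string Pk, string? Rk)? Next) FetchSegment<T>(
    string baseUri, (string Pk, string? Rk)? continuation,
    Func<string, (List<T> Rows, string? NextPk, string? NextRk)> fetch)
{
    string uri = baseUri;
    if (continuation.HasValue)
    {
        uri += "?NextPartitionKey=" + Uri.EscapeDataString(continuation.Value.Pk);
        if (continuation.Value.Rk != null)
            uri += "&NextRowKey=" + Uri.EscapeDataString(continuation.Value.Rk);
    }

    var (rows, nextPk, nextRk) = fetch(uri);
    (string Pk, string? Rk)? next = null;
    if (nextPk != null) next = (nextPk, nextRk);
    return (rows, next);
}

// Fake server: 2500 rows, 1000 per response, made-up "rk<n>" tokens.
static (List<int> Rows, string? NextPk, string? NextRk) FakeFetch(string uri)
{
    const string marker = "NextRowKey=rk";
    int at = uri.IndexOf(marker);
    int start = at < 0 ? 0 : int.Parse(uri.Substring(at + marker.Length));
    var rows = Enumerable.Range(start, Math.Min(1000, 2500 - start)).ToList();
    int next = start + rows.Count;
    if (next < 2500) return (rows, "pk" + next, "rk" + next);
    return (rows, null, null);
}

// Simulate three page views; only the continuation survives between them.
(string Pk, string? Rk)? saved = null;
var pageSizes = new List<int>();
do
{
    var segment = FetchSegment<int>("https://snowball.table.core.windows.net/workunit", saved, FakeFetch);
    pageSizes.Add(segment.Rows.Count);
    saved = segment.Next;
} while (saved != null);
Console.WriteLine(string.Join(", ", pageSizes)); // 1000, 1000, 500
```

The design point is where the loop lives: here the caller decides whether to ask for the next segment, so a page can render 1000 work units and stop, rather than pulling the whole table on every request.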