S is for… sqlmetal

sqlmetal is one of the rare cases where a Microsoft utility or application ends up seeing the light of day with a cool name! If you’re working with LINQ to SQL you may know what sqlmetal is, but since much of what it does is part of the Visual Studio designer experience there’s a fair chance you’ve been doing LINQ to SQL work and had no idea it existed.

sqlmetal is a command-line utility that you can use in lieu of the built-in Visual Studio designer to create a dbml (database markup language) file that defines the entities and DataContext for LINQ to SQL models. The utility is installed with the SDK that accompanies Visual Studio; on my machine I found it in

C:\Program Files\Microsoft SDKs\Windows\v6.0A\Bin

For sake of examples in the post, I’ll use the timeless Northwind database as the targeted database.

LINQ To SQL within Visual Studio

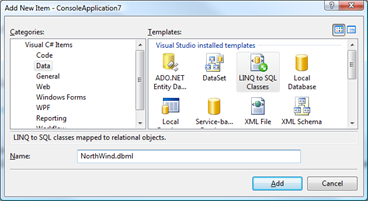

Within the Visual Studio environment, creating a LINQ to SQL model is pretty straightforward:

- You add a new item in the Data category

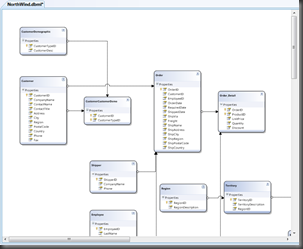

- and up comes the visual designer where you can drag and drop tables and procedures to the surface

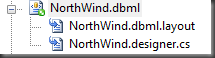

- save, and you get a couple of files created for you

- the

dbmlfile, a file that captures the schema from the database in XML format,- the

layoutfile, an XML file used by the visual designer, and- the

designer.csfile, which contains generated classes for the tables (entities) in the database.

LINQ to SQL with sqlmetal

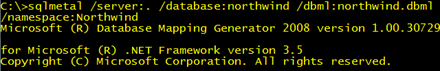

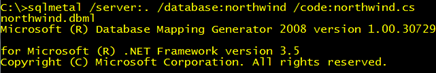

With sqlmetal you can accomplish essentially the same task in two steps

1. Generate the dbml file, and

2. use the dbml file to generate the classes

There isn’t a layout file in this case, of course, since we’re doing everything outside of the Visual Studio IDE.

Output Comparison

I thought I might try to compare the files generated from within Visual Studio to those output by sqlmetal, expecting them to be nearly identical, but I stumbled upon some differences:

- The designer default is to use smart pluralization, so from the

Categoriestable, for instance, I’d get a class namedCategory. In sqlmetal, the default is to not do this, but you can use the/pluralizeswitch to engage that feature. - Associations between tables generated by sqlmetal have a naming convention of

FK_foreignkeytable_primarykeytable, whereas the designer produces the nameprimarykeyentity_foreignkeyentity - sqlmetal adds a

DeleteRule="NO ACTION"to primary key associations, but since that’s the default, the lack of the attribute in the designer-generated version is inconsequential. - Similarly, the sqlmetal generated code file explicitly includes

CanBeNull="true"for some columns (I saw it specifically on the Image type), but true is the default for that attribute as well, so leaving it off is not an issue.

Which Method to Choose?

While I was a little surprised by the discrepancies in the files, none of them really affect the functionality. So, given that, why bother with the effort of bringing up the command-line, executing two commands, and importing files into your project, when you can get the same result right within the Visual Studio IDE? Here’s a couple of reasons to consider.

1. Performance

With large databases, the Visual Studio designer can be a bit slow. After all, it’s handling not only the dbml and code file generation, but also the visual layout of the classes and relationships. The point at which this becomes noticeable, as many other such variables, depends.

2. Scriptability

If you’re using msbuild scripts, for instance, then command line operations are right up your alley. If your schema is in a state of flux, using sqlmetal within a batch script is a reasonable method to refresh the dbml and code files. Within the Visual Studio IDE there isn’t a 'synchronize to database’ option, so if your schema changes, you have no recourse but to recreate the model from scratch in Visual Studio (or hack into the IDE-generated files).

Of course, if your schema is too volatile you’ll have issues in that developers may have written code addressing various entities and properties (tables and columns) that no longer compiles if you’ve renamed them, changed types, etc.

3. Flexibility

One of the criticisms of the default LINQ to SQL generation is the heavy use of attributes on the generated classes. Each entity has an attribute relating it to the table schema, and each property has an attribute relating it to the associated column’s properties. For instance, here’s a snippet of code for the

TerritoryIDproperty.[Column(Storage="_TerritoryID", DbType="NVarChar(20) NOT NULL", CanBeNull=false, IsPrimaryKey=true)] public string TerritoryID { get { return this._TerritoryID; } set { if ((this._TerritoryID != value)) { this.OnTerritoryIDChanging(value); this.SendPropertyChanging(); this._TerritoryID = value; this.SendPropertyChanged("TerritoryID"); this.OnTerritoryIDChanged(); } } }

Many developers are strong proponents of POCO (Plain-old CLR classes) and would prefer to use classes that are not marked by the various attributes tying the object to the underlying data store.

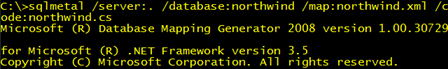

With sqlmetal, you have the option to generate an external XML mapping and nearly-POCO classes for your entities (below). By the way, I say nearly- POCO based on the fact that the classes generated still incorporate

EntityRefandEntityStateclasses, thus pulling in the LINQ infrastructure. Classes and properties are, however, unadorned by attributes.

The resulting map XML file looks quite a bit like the dbml file, however, it includes a

memberandstorageattribute to tie the abstracted model back to specific properties and storage fields in the POCO class, a class you might even have coded from scratch yourself.In fact, this mapping file can help insulate you from backend changes in the database as well. For example, if the name of the field in the database changes, you need only update the mapping file. No modification of class files is needed – something impossible to do with the default attribute-based approach.

Instantiating your LINQ to SQL

DataContextto incorporate a mapping file merely involves using an overloaded constructor that accepts aMappingSource, like this:

String connString = ConfigurationSettings. AppSettings["NorthwindConnectionString"]; XmlMappingSource map = XmlMappingSource.FromStream( File.Open("northwind.xml", FileMode.Open)); Northwind ctx = new Northwind(connString, map);

Additional References

If you want to look a bit more under the covers of sqlmetal, here’s a couple of reference links on MSDN:

Code Generation Tool (SqlMetal.exe)

There is no shortage of posts on techniques for using POCO with LINQ To SQL; a Live Search will bring you to plenty of reading on the subject! One of the hits even led me to an open source project on CodePlex called Close2Poco. I haven’t had a chance to check it out, but it takes the trivial setup I proposed here much further toward reality.