Azure Virtual Machines: four design proposals for switching Azure IaaS VMs to achieve higher availability

After migrating an entire on-premises solution to Azure, or integrating a cloud solution with on-premises modules, customers may be affected by Azure's monthly backend updates or by unexpected physical failures in the backend. Either can reduce the availability of their services. To improve availability and avoid problems caused by unexpected VM reboots, whether from Azure platform upgrades or other exceptional causes, I have summarized four design proposals below.

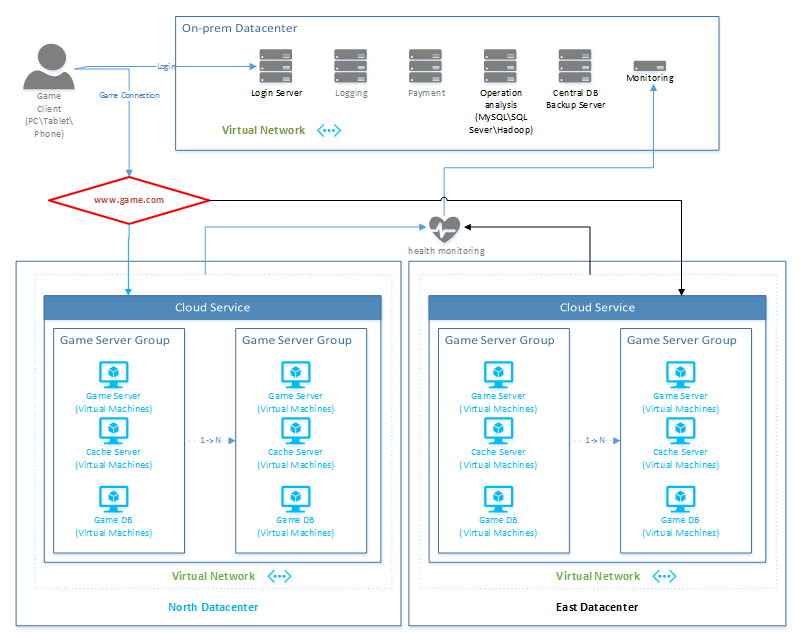

Scenario 1: Solution migrated from on-premises

Before Switch:

1. Set up VM solutions in multiple datacenters.

2. The solutions in different datacenters are connected to the on-premises datacenter (DC) via virtual networks.

3. Each solution has its own cloud domain, such as test.chinacloudapp.cn.

4. Only the primary solution is active most of the time; the VMs of the other solutions are shut down during that period.

5. The game is served at a custom domain name such as www.game.com, which points to the cloud domain of the solution that is currently active.

During Switch:

1. The gaming company receives advance notice of when the primary solution's VMs will be rebooted or updated.

2. Players are notified of the time of the upcoming switch.

3. The gaming company starts the secondary solution's VMs with a script or through the management portal.

4. Once the secondary solution is up, the gaming company points its custom domain to the domain of the secondary solution.

5. After the backend reboot or update is finished, the gaming company can switch back to the primary solution in the same way.
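The switch steps above lend themselves to scripting. The following Python sketch orders them so that the custom domain is only repointed after every secondary VM reports healthy. The callbacks `start_vm`, `is_healthy` and `point_custom_domain` are hypothetical placeholders for whatever script, portal action or DNS provider API you actually use; they are not Azure SDK calls.

```python
import time
from typing import Callable, Iterable


def switch_to_secondary(
    secondary_vms: Iterable[str],
    secondary_domain: str,
    start_vm: Callable[[str], None],          # hypothetical: start one VM
    is_healthy: Callable[[str], bool],        # hypothetical: health probe
    point_custom_domain: Callable[[str], None],  # hypothetical: update DNS
    timeout_s: float = 600.0,
    poll_s: float = 10.0,
) -> bool:
    """Start the secondary VMs, wait until all are healthy, then repoint DNS.

    Returns True if the custom domain was switched, or False if the secondary
    never became healthy in time (the domain is then left on the primary).
    """
    vms = list(secondary_vms)
    for vm in vms:
        start_vm(vm)

    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if all(is_healthy(vm) for vm in vms):
            # Move player traffic only once every secondary VM is ready.
            point_custom_domain(secondary_domain)
            return True
        time.sleep(poll_s)
    return False
```

Switching back after the update is the same call with the primary VM names and domain. Leaving the custom domain on the primary when the secondary never becomes healthy is a deliberate choice: a failed switch should not send players to a half-started site.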

Risk:

1. During the switch, all active data held in the independent cache servers will be lost.

2. If the data in the different database sets is not synchronized, the switch may also cause data discrepancies.
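One way to reduce the second risk is to compare the two database sets before switching. The sketch below assumes that each site can report, per table, the highest change or version number it has applied (for example, the maximum value of a version column). How you obtain that watermark depends on your database engine and replication setup, so the input dictionaries here are hypothetical.

```python
from typing import Dict, List


def unsynced_tables(primary: Dict[str, int], secondary: Dict[str, int]) -> List[str]:
    """Return the tables whose watermark differs between the two sites.

    Each dict maps a table name to a hypothetical per-table watermark: the
    highest change/version number that site has applied. A table missing on
    one side counts as unsynced.
    """
    tables = sorted(set(primary) | set(secondary))
    return [t for t in tables if primary.get(t) != secondary.get(t)]
```

A non-empty result means the switch should wait until replication catches up, or the listed tables should be reconciled first.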

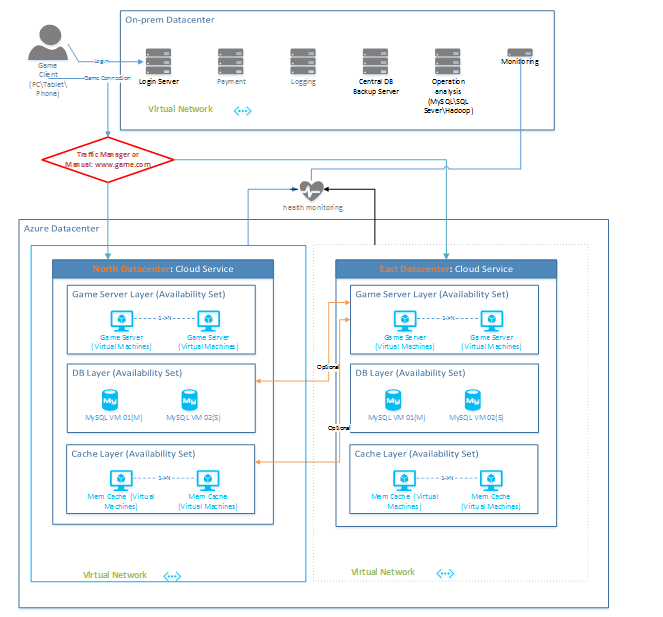

Scenario 2: Solution deployed with Azure features

Before Switch:

1. Set up VM solutions in multiple datacenters.

2. The solutions in different datacenters are connected to the on-premises datacenter (DC) via virtual networks.

3. Each solution has its own cloud domain, such as test.chinacloudapp.cn.

4. Only the primary solution is active most of the time; the VMs of the other solutions are shut down during that period.

5. The game is served at a custom domain name such as www.game.com, which points to a Traffic Manager domain that balances traffic across multiple cloud services using the failover method. (Traffic Manager is reportedly coming to Azure China soon; until it is released, the custom domain can be mapped directly to a cloud domain.)

6. Each solution is decoupled into multiple layers, such as a cache layer and a database layer, and high availability is implemented within each layer using Azure IaaS features such as availability sets.

7. Multiple cloud services can share the same database layer or cache layer via virtual networks; the shared layers can be hosted either in Azure or in the on-premises datacenter.

8. To set up the database and cache layers, customers can rely on conventional expertise in building service clusters.
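The failover behavior that item 5 relies on can be modeled in a few lines. This is a simplified sketch, not Traffic Manager's implementation: endpoints are tried in priority order and the first one whose latest health probe passed is returned. Traffic Manager's documentation describes treating all endpoints as healthy when every endpoint is degraded, so the sketch falls back to the top-priority endpoint in that case. The `Endpoint` type and the domain names are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Endpoint:
    name: str      # e.g. the cloud domain of one solution
    priority: int  # lower number = preferred (1 = primary)
    healthy: bool  # result of the latest health probe


def resolve_failover(endpoints: List[Endpoint]) -> Optional[str]:
    """Return the endpoint a failover (priority) policy would hand out."""
    if not endpoints:
        return None
    ordered = sorted(endpoints, key=lambda e: e.priority)
    for ep in ordered:
        if ep.healthy:
            return ep.name
    # Every endpoint is degraded: fall back to the top-priority one.
    return ordered[0].name
```

When the primary's VMs go down for the backend update, its probe fails and players are sent to the secondary without any DNS change by the gaming company; once the primary is healthy again, traffic returns to it.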

During Switch:

1. The gaming company receives advance notice of when the primary solution's VMs will be rebooted or updated.

2. Players are notified of the time of the upcoming switch.

3. The gaming company starts the secondary solution's VMs with a script or through the management portal.

4. If Traffic Manager is not used, then once the secondary solution is up, the gaming company points its custom domain to the domain of the secondary solution.

5. After the backend reboot or update is finished, the gaming company can switch back to the primary solution in the same way.

Risk:

1. If the database layer or cache layer is not shared, the active data held in those independent servers will not be available to players after the switch.

2. Once the application layer is switched to a secondary datacenter, communication between the application layer and the shared layers may run into network issues, such as higher cross-datacenter latency.
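The difference between a shared and an independent cache layer can be illustrated with a small simulation. Here a plain Python dict stands in for a cache cluster, and `AppTier` is a hypothetical application tier that stores player sessions in it. The point is only that a session written by the primary tier is visible to the secondary tier if, and only if, both use the same cache.

```python
from typing import Dict, Optional


class AppTier:
    """Hypothetical application tier that stores player sessions in a cache."""

    def __init__(self, cache: Dict[str, str]) -> None:
        self.cache = cache  # a dict standing in for a cache cluster

    def login(self, player: str, session: str) -> None:
        self.cache[player] = session

    def session_of(self, player: str) -> Optional[str]:
        return self.cache.get(player)


def session_survives_switch(shared_cache: bool) -> bool:
    """Simulate a primary-to-secondary switch; report whether a session survives."""
    primary_cache: Dict[str, str] = {}
    # Shared layer: both tiers use the same cache.
    # Independent layer: the secondary starts with an empty cache.
    secondary_cache = primary_cache if shared_cache else {}

    primary = AppTier(primary_cache)
    primary.login("player1", "session-abc")

    # The switch: the secondary tier now serves the player.
    secondary = AppTier(secondary_cache)
    return secondary.session_of("player1") is not None
```

In practice the shared cache buys session continuity at the cost of the second risk: every cache call from the secondary datacenter now crosses the network.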

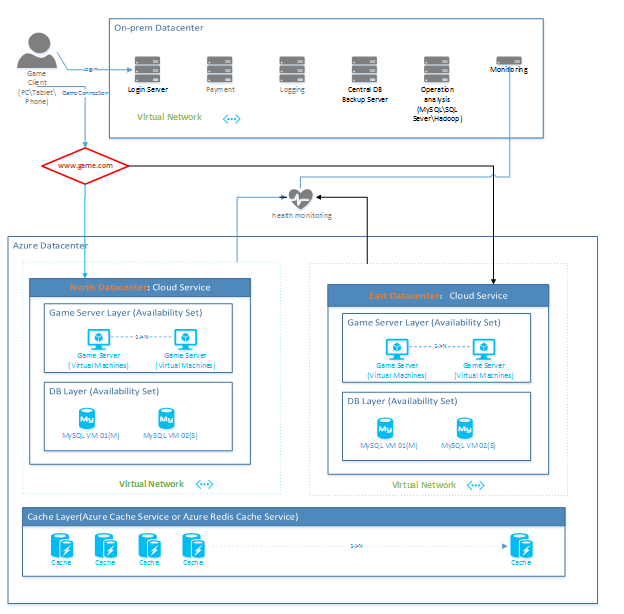

Scenario 3: Solution integrated with Azure services

Before Switch:

1. Set up VM solutions in multiple datacenters.

2. The solutions in different datacenters are connected to the on-premises datacenter (DC) via virtual networks.

3. Each solution has its own cloud domain, such as test.chinacloudapp.cn.

4. Only the primary solution is active most of the time; the VMs of the other solutions are shut down during that period.

5. The game is served at a custom domain name such as www.game.com, which points to a Traffic Manager domain that balances traffic across multiple cloud services using the failover method. (Traffic Manager is reportedly coming to Azure China soon; until it is released, the custom domain can be mapped directly to a cloud domain.)

6. Each solution is decoupled into multiple layers, such as a cache layer and a database layer, and high availability is implemented in certain layers using Azure IaaS features.

7. Multiple cloud services can share the same database layer via virtual networks; the shared layer can be hosted either in Azure or in the on-premises datacenter.

8. To set up the database layer, customers can rely on conventional expertise in building service clusters.

9. The cache layer uses an Azure caching service instead of the customer's own caching solution. (Managed Cache and Redis Cache have not yet been released in Azure China, but they are widely used in global Azure and are expected to become available to customers in China soon.)

During Switch:

1. The gaming company receives advance notice of when the primary solution's VMs will be rebooted or updated.

2. Players are notified of the time of the upcoming switch.

3. The gaming company starts the secondary solution's VMs with a script or through the management portal.

4. If Traffic Manager is not used, then once the secondary solution is up, the gaming company points its custom domain to the domain of the secondary solution.

5. After the backend reboot or update is finished, the gaming company can switch back to the primary solution in the same way.

Risk:

1. During the switch, the active data held in the old primary database servers will not be available to players.

2. Network performance between the managed caching service and the application layer may not be good enough for games that generate heavy traffic.

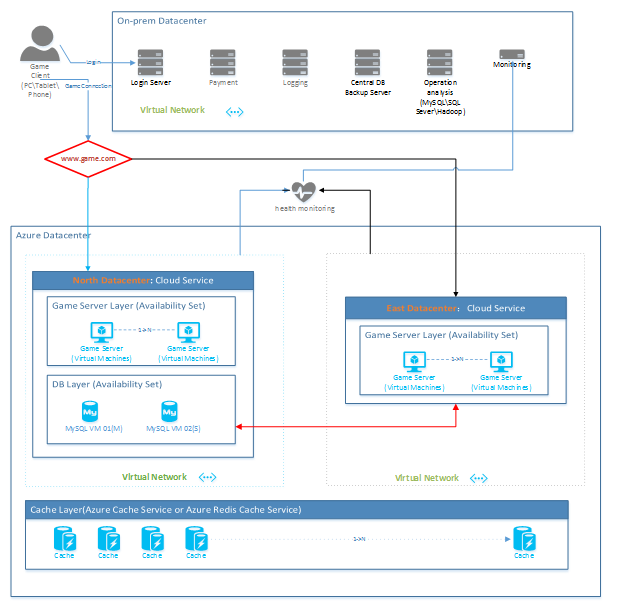

Scenario 4: Solution integrated with Azure services (variant 2)

This solution works similarly to Scenario 3. Its main highlight is that a single database layer is shared among multiple application layers, which minimizes the impact of backend updates and manual switches; the database layer can be built as a SQL cluster. Because the database layer consists of multiple servers distributed across several update and fault domains in Azure, it should keep working even if one or two machines are restarted or recycled, provided the cluster has enough nodes to retain its quorum.
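Whether the shared database layer survives one or two restarts depends on the cluster size. The sketch below assumes a simple node-majority quorum (no witness) and five update domains, the classic default for an availability set. The round-robin placement is an illustrative assumption, since Azure itself assigns VMs to update domains.

```python
from collections import Counter
from typing import List


def place_round_robin(n_nodes: int, n_update_domains: int = 5) -> List[int]:
    """Hypothetical placement: node i goes to update domain i % n_update_domains."""
    return [i % n_update_domains for i in range(n_nodes)]


def majority(n_nodes: int) -> int:
    """Votes needed for a simple node-majority quorum (no witness)."""
    return n_nodes // 2 + 1


def tolerated_node_failures(n_nodes: int) -> int:
    """How many nodes can be down at once while a majority remains."""
    return n_nodes - majority(n_nodes)


def survives_any_update_domain(n_nodes: int, n_update_domains: int = 5) -> bool:
    """True if rebooting any single update domain still leaves a quorum."""
    nodes_per_domain = Counter(place_round_robin(n_nodes, n_update_domains))
    return all(
        n_nodes - lost >= majority(n_nodes) for lost in nodes_per_domain.values()
    )
```

With three nodes, any single update domain can reboot safely, but only one node may be down at a time; with five nodes, two simultaneous restarts are tolerated. Packing six nodes into two update domains, by contrast, loses quorum whenever one domain reboots.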

Updated and archived at: https://www.doingcloud.com/index.php/2015/10/16/building-high-availability-of-online-gaming-in-azure-iaas/