Kinect-Emotiv mashup prototype

Longtime readers of the Learning Curve know about my fascination with brainwaves, a.k.a. neurodata (Again with the brainwaves). Then along came Kinect, which provided a pleasant distraction. But how could I get the best of both worlds?

With the release of the Kinect for Windows SDK, I wanted to find out whether the real-time data streams from both devices could coexist on one machine.

As it turns out, they can.

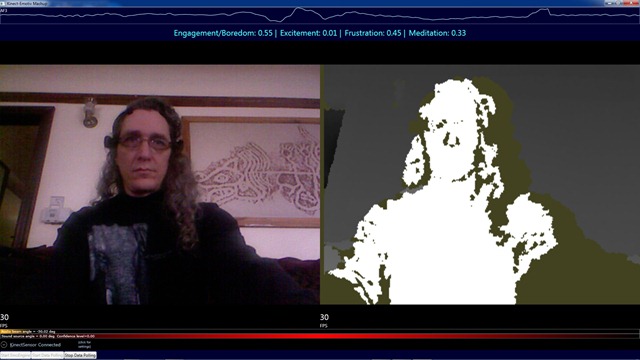

Screenshot of the Kinect-Emotiv mashup running on my laptop.

At the top of the screen is the real-time neurodata control that I wrote a couple of years ago (Fun with brainwaves, part 3: Here’s some code). In the middle of the screen are the video stream and the depth (z) stream from the Kinect device. Emotiv’s sophisticated signal processing of the neurodata yields handy signals for affective states such as “Excitement” and “Frustration”.

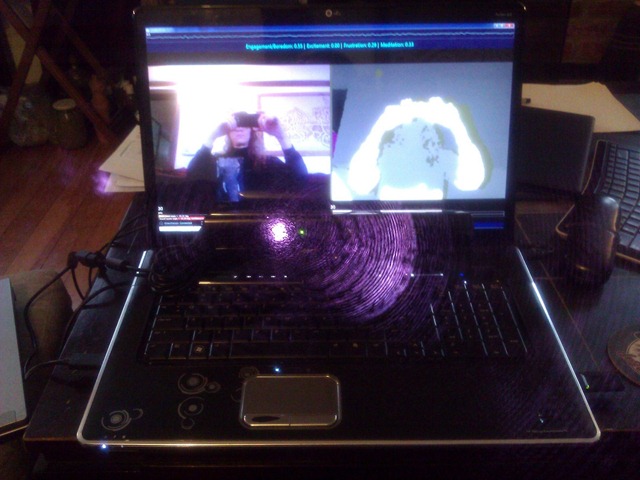

Here’s the rig:

The Kinect-Emotiv mashup running on my laptop. Note the lens flare from the infrared sensor on the Kinect device. The bluetooth dongle for the neuroheadset is on the right.

I was surprised to see how sensitive the camera on my HTC Trophy is to infrared light: the Kinect’s infrared emitter looks much brighter on the camera’s display than it does to the naked eye.

One can imagine numerous scenarios in which the Kinect and Emotiv data streams can be joined. If there’s interest, I can post the code on CodePlex.