My Pocket Solitaire, MapReduce and Azure HDInsight

This post continues My Pocket Solitaire series.

In my previous post I explained how you can create solver which uses bottom up approach to solve this interesting problem. Now we're going to continue from that using MapReduce as our solving algorithm. If MapReduce is new concept to you then I would definitely recommend that you check out the Wikipedia article about it before reading this post. I'm going to take use of the concepts and terms mentioned in there so it might be a bit challenging without that base information.

Now we know (based on the previous posts) that we can solve My Pocket Solitaire nicely using bottom up approach. This gives us chance to identify steps we used during that process and now we can come up with new ways of running the same algorithm in different environment. If you recall my pseudo algorithm in previous post then we identified these following steps:

- We have list of unique positions in level one

- We execute moves for the each of the positions in the list

- We come up with new list of positions (includes possible duplicates)

- Remove duplicates away

- We have now list of unique positions in level two

And if you then slightly modify that above list of steps into this format:

- List of items

- Map each item with function

- We have new list of items based on output of function

- Reduce items from list based on function

- We have final output of items

This list awfully starts to look like Map and Reduce –like algorithm. So what if we built new version of our solving routine using MapReduce then? What we have to do in order to achieve that? First we will have to understand a bit more how you can put this kind of task into Apache Hadoop. To get easiest start on that journey you're quite easily routed into solution called Hadoop Streaming. Especially if you don’t have Java knowledge and you don't want to learn a lot of new stuff in order to get this implemented. I especially like the Practical Help section of Hadoop Streaming Wiki Page which gives you simple and direct way to get started with development locally and then learn more after that. Their script sample actually directly translates in my head into this:

type SolverInit.txt | SolverMapper.exe | SolverReducer.exe

This means that we have some kind of "SolverInit.txt" providing input into our process which is then executed through our "SolverMapper.exe" and that output is then put through "SolverReducer.exe". Output of reducer would be then again something that our solver would be able to use again when executed through MapReduce.

In order to implement this we have to now understand more about the input format. So what is this "SolverInit.txt" file then? Again we take a look at Hadoop Streaming documentation which clearly says that input is coming in standard input stream (stdin) as lines into MapReduce processes and each line contains key-value pair. Key is until first tab on that line and rest of the line is considered to be value (this is the default setup but it can be changed).

So now we need to come up with format that has each game position in one line and key would position of the board. And since we want to now calculate again the magic number (which we already managed to solve in previous post) then we need to have count of solutions behind that key as counter. We also put there moves so that we can later validate that is this correct solution path. This leads us into this format for the "SolverInit.txt":

65536 1

Key for that is coming from board position (final board position in string format is 000000000000000010000000000000000) which is then converted using Bin->Dec conversion (from bits to number). You might be wondering that why I chose this format and not directly the string format… well reason is that since I was doing this in my local laptop and was forced to squeeze the data elements in order to be able to execute my algorithms ![]() . And even if you start to utilize distributed processing model like this you still have the responsibility to keep in mind that how do you balance with the CPU, network data transfer need and storage use. But I might do another post with even simpler set up later.

. And even if you start to utilize distributed processing model like this you still have the responsibility to keep in mind that how do you balance with the CPU, network data transfer need and storage use. But I might do another post with even simpler set up later.

"SolverMapper.exe" and "SolverReducer.exe" implementations have actually quite small responsibility in this entire solution. Mapper takes board positions as input from single line and performs all the possible available backward moves and outputs these new board positions as new lines to the output stream. Reducer in turn reads incoming lines and if there are multiple lines with same key (note: Hadoop hands these lines in sorted order of keys) then it will output just single line with value which is sum of all those duplicate lines.

So if we now think conceptually this MapReduce in My Pocket Solitaire context we can maybe best describe the process using this kind of batch file (lines omitted for simplicity):

type SolverInit.txt |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe |^

SolverMapper.exe | sort | SolverReducer.exe

That pretty much demonstrates that what kind of process this MapReduce streaming actually is!

How to run this in HDInsight then?

Okay now we have some understanding that how could we run this streaming job but what's the best way to do it then? And here comes the nice part of Azure HDInsight. You can actually built nice PowerShell script that runs this entire solving process as automated process. You just need to have Azure subscription and you're good to go! You might want to read this excellent article before you proceed because I won’t go necessarily through all the details to set you up.

Of course I can login to the management portal and create HDInsight clusters etc from there but since I'm now going to demo how you can do this using just script then we need to do something extra to set it up. In order to script to work without you providing credentials to it, it needs use certificate in order to access the service. When access is solved in script then script would have to create needed storage account, create HDInsight cluster, run the entire MapReduce algorithm loop for 32 levels and then get the final output file out from storage. And of course when this process is completed it will release all the resources created during the script execution. And while script is doing the heavy work (or more precisely Azure is doing the heavy work ![]() ) then you can be somewhere else doing something completely different.

) then you can be somewhere else doing something completely different.

So in order to get the automatic login to work you have to download so called publish settings. You can get it using Azure PowerShell and executing following command there:

# Get Azure publish settings file using this command:

Get-AzurePublishSettingsFile

This will open up browser and it will spit out you file from Azure which will contain management certificate in there. This file allows you to run these scripts as unattended. But this of course means that you have make sure that proper security is applied when handling this file. Read more information from here. In my solver script I have the call to import this publish settings file in order to demonstrate that how this full end to end process can be implemented. My script expects that to be saved into same folder as the script itself using name "Subscription.publishsettings".

What to expect when you then execute "Solve.bat" (which is wrapper to start the real PowerShell script to make sure that it actually doesn't just open that script file but rather executes it):

@powershell.exe -NoProfile -InputFormat none -ExecutionPolicy unrestricted -File %~dp0Solve.ps1

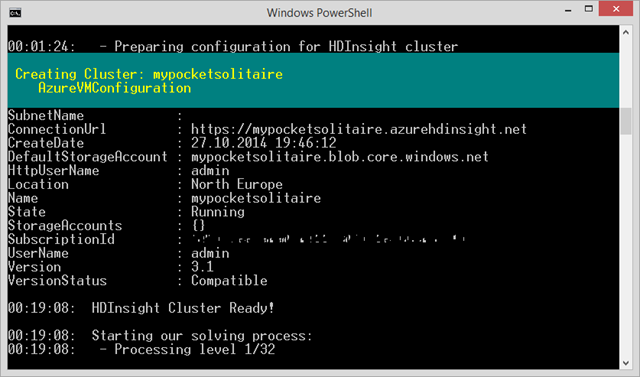

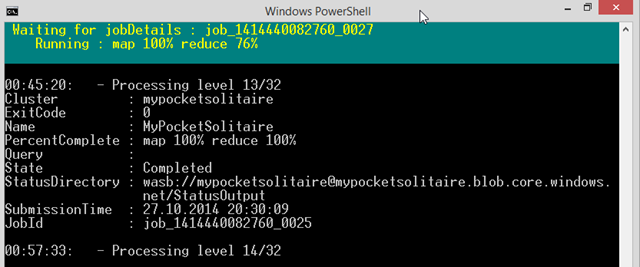

Well you should see PowerShell script starting the execution and providing updates about the progress:

If you want to run this script by yourself then you can just get all the resources from here.

Here’s the full "Solve.ps1" script that makes all these tricks:

# Full Solve.ps1 will be here if you view this blog post through web browser ![]()

NOTE: If you want to use this script then you have to modify top part of in order to use your correct Azure Subscription name and correct management certificate. And you have to also change the Azure resource names (storage, HDInsight) so that you have unique names for those resources.

After the process you end up with "Input32/part-00000" file in your local folder:

6442450943 10215411760019992 OQDPKIPD2PUWGUXVZXUWMKXJJLP2AIHJ1M5W7Y6XR4WYMK4RJLCKSQBJQELJJB

8589410303 10215411760019992 EQHJAIPDNPQOSQ4R1YR4CA7YXZ2PAIJHM1LJ1YQEFD6XYWGIP25WDPP2UW2PPN

8589869055 40861647040079968 SQ4RWYR47YZXUWFRMKXV5WJL1M6XMKP2HJGUAIUW2PPDRFCKKIQ3DPOQBJJX3Q

8589926399 10215411760019992 OQDPGIUGWU5WXVZX7YXZ1YR4JHGIFRMKRFTRCKRFPDACCKDF6XUWFRQSWY4RRT

8589934589 10215411760019992 SQ4RWYR47YZXUWFRMKXV5WJL1M6XMKP2HJGUAIUW2PPDRFCKKIQ3DPOQBJJXX6

And that of course contains that magical number in the third row.

Okay we have now demonstrated that we can use HDInsight to run our Hadoop Streaming tasks. Of course this kind of solving task is far from optimal (in performance wise and just by pure solution wise) for our problem but it’s still quite cool way to run the actual solving process ![]() . Now go and implement your own algorithms and logics using Azure PowerShell!

. Now go and implement your own algorithms and logics using Azure PowerShell!

Anyways... Happy hacking!

J