Basic Blocks Aren't So Basic

In the book How We Test Software at Microsoft I discuss structural testing techniques. Structural testing techniques are systematic procedures designed to analyze and evaluate control flow through a program. These are classic white box test design techniques, although my friend and respected colleague Alan Richardson states in his review of the book that he also employs similar techniques on models, and I have to agree with him on that point.

Also, Peter M. sent me mail pointing out a reasonably obvious bug in the code chunks on pages 118 and 119. Both functions are declared as static void, but each has a return statement that returns a value. Somehow this oversight made it through the review process, but of course returning a value from a function declared as static void causes a compiler error. (Thanks for discovering that bug, Peter, and letting us know so we can fix it for the 2nd edition!)

Peter also asked for further clarification of how blocks are counted, and why a test that evaluates both conditional clauses in the compound expression as true in the example below (and on page 119) results in 85.71% coverage. Unfortunately, the answer is not simple.

Some surprising details…

    public static int BlockExample1(bool cond_1, bool cond_2)
    {
        int x = 0, y = 0, z = 0;
        if (cond_1 && cond_2)
        {
            x = 1;
            y = 2;
            z = 3;
        }
        return x + y + z;
    }

The above code can be re-written as:

     1: public static int BlockExample2(bool cond_1, bool cond_2)
     2: {
     3:     int x = 0, y = 0, z = 0;
     4:     if (cond_1)
     5:     {
     6:         if (cond_2)
     7:         {
     8:             x = 1;
     9:             y = 2;
    10:             z = 3;
    11:         }
    12:     }
    13:     return x + y + z;
    14: }

First, a 'basic block' is defined as a set of contiguous executable statements with no logical branches, which seems pretty straightforward. So, based on our definition of basic blocks, it appears there are 4 blocks of contiguous statements. However, the conditional clauses on line 4 and line 6 in the BlockExample2 method introduce logical branches, which theoretically introduce 2 implicit blocks (i.e. one block when control flow follows the true path, and another block when control flow follows the false path). So, that is essentially how the 6 blocks are determined. But that's not the end of the story.

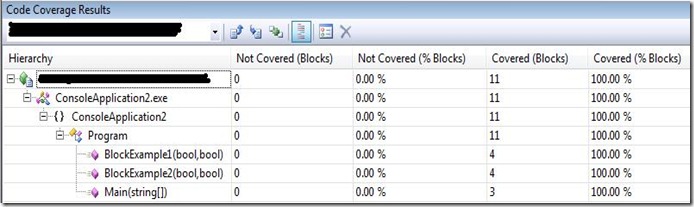

If we pass true for both the cond_1 and cond_2 parameters, the block coverage measure for BlockExample1 is 85.71%; however, the block coverage measure for BlockExample2 is actually 100%, as illustrated below.
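For readers who want to see where a number like 85.71% comes from, here is a minimal sketch of the arithmetic. The helper name `BlockCoverage` is made up for illustration. The 6-of-7 count is an assumption inferred from the percentage itself (6 / 7 ≈ 0.8571), not a figure read from the coverage tool.

```csharp
using System;

public static class CoverageMath
{
    // Block coverage as a percentage, rounded to two decimal places.
    public static double BlockCoverage(int coveredBlocks, int totalBlocks)
    {
        if (totalBlocks <= 0)
            throw new ArgumentOutOfRangeException(nameof(totalBlocks));
        return Math.Round(100.0 * coveredBlocks / totalBlocks, 2);
    }

    public static void Main()
    {
        // Assumption: 6 of 7 blocks covered, inferred from the 85.71% figure.
        Console.WriteLine(BlockCoverage(6, 7));
        // Every counted block covered gives 100, whatever the block count is.
        Console.WriteLine(BlockCoverage(6, 6));
    }
}
```

If that inference is right, a single uncovered block is enough to account for the whole difference between the two methods.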

What? How can this be? BlockExample1 and BlockExample2 are written differently, but they are functionally equivalent. Well, to understand this we would really need to dig deeper into compilers and coverage tools. That is well beyond the scope of this blog, but the IL does provide some insight.
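Before looking at the IL, it is easy to confirm that the two methods really do behave the same way. The sketch below repeats both methods and compares their results for all four input combinations. The `AreEquivalent` helper and the `BlockExamples` class name are illustrative additions, not part of the book's code.

```csharp
using System;

public static class BlockExamples
{
    public static int BlockExample1(bool cond_1, bool cond_2)
    {
        int x = 0, y = 0, z = 0;
        if (cond_1 && cond_2) { x = 1; y = 2; z = 3; }
        return x + y + z;
    }

    public static int BlockExample2(bool cond_1, bool cond_2)
    {
        int x = 0, y = 0, z = 0;
        if (cond_1)
        {
            if (cond_2) { x = 1; y = 2; z = 3; }
        }
        return x + y + z;
    }

    // True when both methods return the same value for every input combination.
    public static bool AreEquivalent()
    {
        bool[] values = { false, true };
        foreach (bool c1 in values)
            foreach (bool c2 in values)
                if (BlockExample1(c1, c2) != BlockExample2(c1, c2))
                    return false;
        return true;
    }

    public static void Main()
    {
        Console.WriteLine(AreEquivalent()); // prints "True"
    }
}
```

So any difference in the coverage numbers comes from how the compiler and the coverage tool see the code, not from what the code does.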


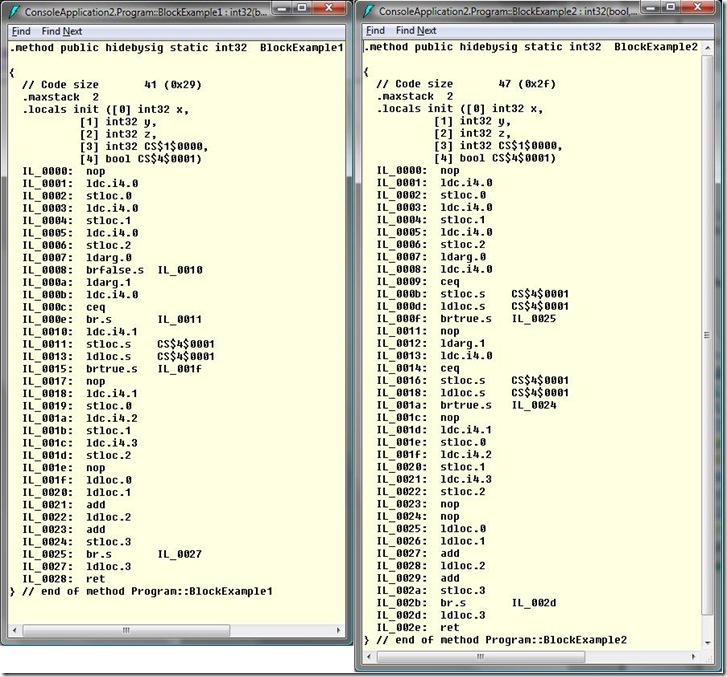

The MSIL for BlockExample1 is on the left and BlockExample2 is on the right. Now, I don't want to do a deep dive into MSIL, but those who are really observant can see that, for some reason, the Visual Studio compiler emitted a branch-on-false in BlockExample1 (instruction IL_0008). Instruction IL_000c then compares 2 values for equality, and instruction IL_0015 appears to branch on the result of the combined compound conditional expression. Compare that to the BlockExample2 MSIL, where the first comparison of 2 values occurs at IL_0009 followed by a branch-on-true (IL_000f), and the second comparison of 2 values occurs at IL_0014 followed by another branch-on-true at instruction IL_001a.
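One plausible source-level reading of the BlockExample1 instruction sequence described above is sketched here. This is an assumption about how a debug build might lower the compound condition into a temporary flag. It is not a decompilation of the screenshot, and the names `BlockExample1Lowered` and `skipBody` are made up.

```csharp
using System;

public static class LoweringSketch
{
    // Hypothetical lowering of "if (cond_1 && cond_2)" into a temporary flag.
    public static int BlockExample1Lowered(bool cond_1, bool cond_2)
    {
        int x = 0, y = 0, z = 0;

        bool skipBody;                     // stand-in for a compiler temporary
        if (cond_1)
            skipBody = (cond_2 == false);  // the equality comparison
        else
            skipBody = true;               // only reached when cond_1 is false

        if (!skipBody)                     // the final branch on the flag
        {
            x = 1;
            y = 2;
            z = 3;
        }
        return x + y + z;
    }

    public static void Main()
    {
        Console.WriteLine(BlockExample1Lowered(true, true));   // prints 6
        Console.WriteLine(BlockExample1Lowered(false, true));  // prints 0
    }
}
```

Under this reading, a test that passes true for both parameters never executes the `else` arm. That would be consistent with one block being reported as uncovered, though the coverage tool's actual block boundaries may differ.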

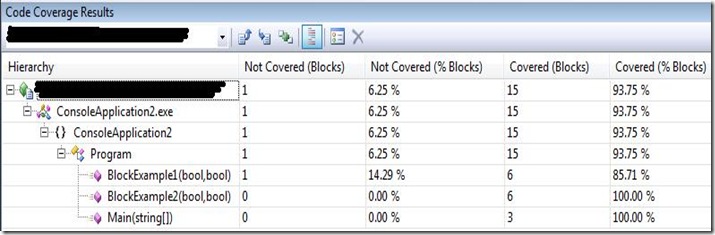

But wait…it gets even more confusing. We typically measure structural coverage using the debug build. So, imagine my surprise when I recompiled the code using the retail build settings and again passed true for the cond_1 and cond_2 parameters of BlockExample1 and BlockExample2. The coverage tool in Visual Studio indicated these methods now had only 4 blocks each, and the block coverage measure for both methods was 100%, as illustrated below.

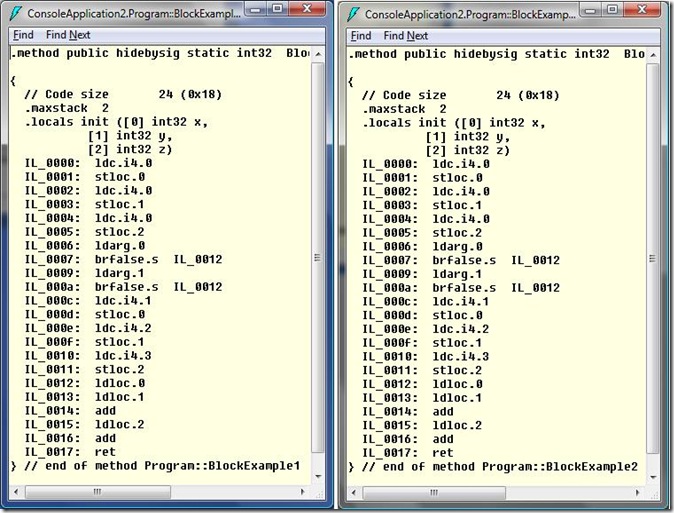

Also, interestingly enough, the compiler optimized the code so that both methods had identical MSIL opcode instructions, as illustrated below. Steve Carroll (a senior developer in Visual Studio) wrote that we "shouldn't be too concerned if you can't exactly identify where all the blocks are. When you turn the optimizer on your binary, block counts are fairly unpredictable. Don't worry though, the source line coloring will almost always lead you to the parts of the code that you need to worry about targeting to get your coverage stats up."


I agree with Steve when he states that block counts are unpredictable when the code is optimized (and different tools that measure block coverage may provide different results). However, I only partially agree with his statement that source line coloring will lead us to the parts of the code we need to test. Maybe it will, maybe it won't. But an in-depth analysis of code coverage results by professional testers will help us identify important parts of the code that require further investigation and testing.

So, what does it all mean?

Block testing is useful for unit testing and for designing white box tests for switch statements and exception handlers (based on how we can track control flow through source code using a debugger, as opposed to through the IL Disassembler). As I stated in How We Test Software at Microsoft, block testing is the weakest form of structural testing. Still, it provides a different perspective compared to other structural approaches or techniques, and it is useful when used by a professional tester in the right context.

But the important point here is that, just as we wouldn't rely on only one tool to tune the carburetor on an automobile, we certainly wouldn't rely on only one technique or approach for designing structural tests, and we certainly wouldn't rely on structural testing as a single approach to testing. This example further reinforces another important point that I make in the book: code coverage is not directly related to quality. Any professional tester can clearly see that although we are able to achieve high levels of coverage with one test, these methods are not at all well tested.
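To make that point concrete, here is a small sketch using a hypothetical faulty variant of the method, in which `||` was typed where `&&` was intended. `BuggyBlockExample`, `Expected`, and `CountFailures` are illustrative names, not code from the book. The single test that passes true for both parameters executes every statement and still passes.

```csharp
using System;

public static class CoverageIsNotQuality
{
    // Hypothetical faulty variant: '||' was typed where '&&' was intended.
    public static int BuggyBlockExample(bool cond_1, bool cond_2)
    {
        int x = 0, y = 0, z = 0;
        if (cond_1 || cond_2) { x = 1; y = 2; z = 3; }
        return x + y + z;
    }

    // Expected result according to the intended '&&' behavior.
    public static int Expected(bool cond_1, bool cond_2)
    {
        return (cond_1 && cond_2) ? 6 : 0;
    }

    // Number of input combinations for which the faulty method is wrong.
    public static int CountFailures()
    {
        bool[] values = { false, true };
        int failures = 0;
        foreach (bool c1 in values)
            foreach (bool c2 in values)
                if (BuggyBlockExample(c1, c2) != Expected(c1, c2))
                    failures++;
        return failures;
    }

    public static void Main()
    {
        // The single (true, true) test passes even though the method is wrong.
        Console.WriteLine(BuggyBlockExample(true, true) == Expected(true, true)); // prints "True"
        // Exercising all four combinations exposes the defect.
        Console.WriteLine(CountFailures()); // prints 2
    }
}
```

The one high-coverage test says nothing about the two input combinations where this variant misbehaves. Only tests designed around the conditions themselves reveal them.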

Only a fool would use code coverage metrics to derive some measure of quality, or suggest that high coverage measures equal greater quality. In truth, the value of code coverage lies in its ability to help professional testers identify areas of the code that have not been previously exercised, and to design tests that evaluate those areas more effectively to help reduce overall risk.

If we don't execute an area of code then we have zero probability of exposing errors in that code if they exist. However, just because we do execute a code statement doesn't mean we expose all potential errors. But, it at least increases the probability from 0% and helps reduce risk.